You might like

For early adopters: Horace and his PyTorch team released some early benchmarks in 🤗Transformers using TorchDynamo + AOTAutograd. There is a usage example in the benchmark code at github.com/huggingface/tr… e.g. a 35% speedup and a 45% memory improvement on ALBERT.

I really enjoyed attending and speaking at @MLPrague, kudos to the team for organising such a great event. Looking forward to next year :)

B1T is fantastic. Just needs to work on game sense, communication, aim, map awareness ...

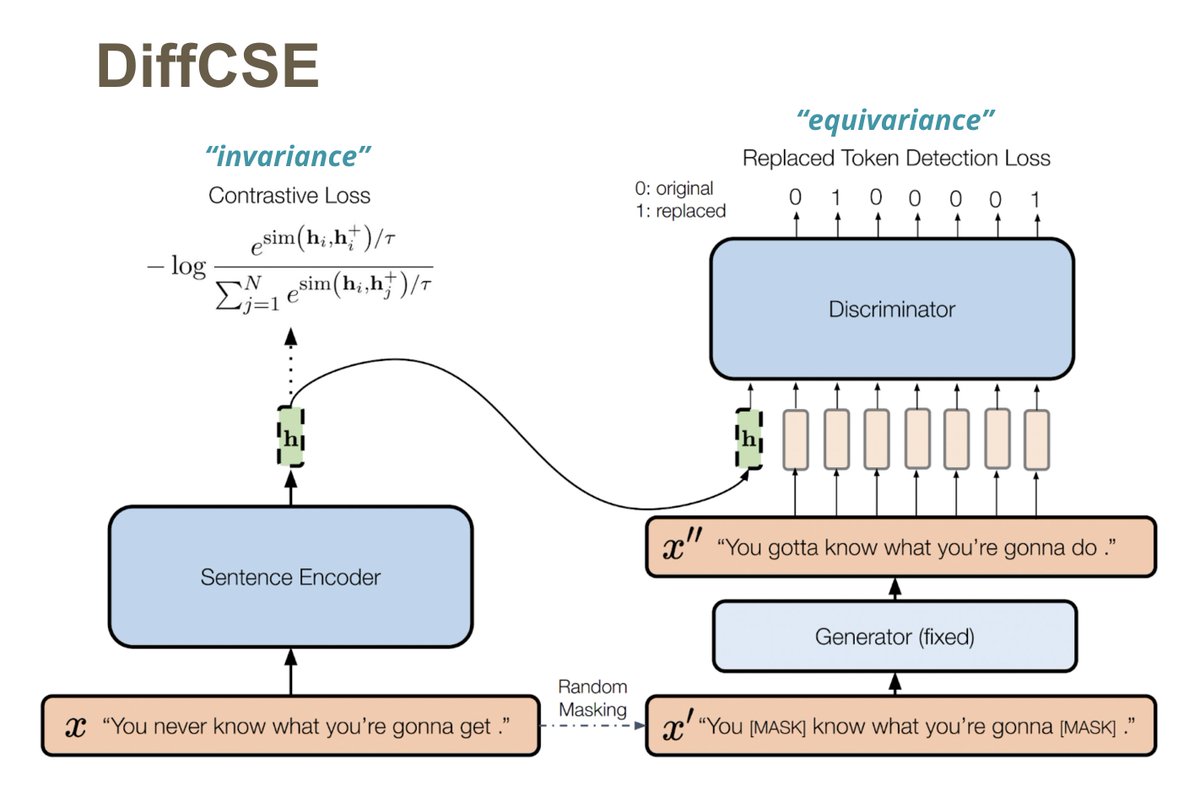

🎉 Our paper on SoTA unsupervised contrastive sentence embedding was accepted as a long paper by NAACL 2022! Thanks to my co-authors from @MIT_CSAIL @MetaAI @MITIBMLab! arXiv: arxiv.org/abs/2204.10298 code: github.com/voidism/DiffCSE Pretrained models are available on @huggingface

In this #TMLS2021 talk, @Piet_El CTO @_PrivateAI will go over the challenges they faced and how they managed to improve the latency and throughput of their Transformer networks, allowing their system to process Terabytes of data easily and cost-effectively bit.ly/TMLS2021

