Gregory Smith

@postgresperf

#PostgreSQL engineer for @Snowflake @crunchydata, database and audio author. Family advocate for #MyalgicEncephalomyelitis #POTS #PwME #MEcfs patients.

Może Ci się spodobać

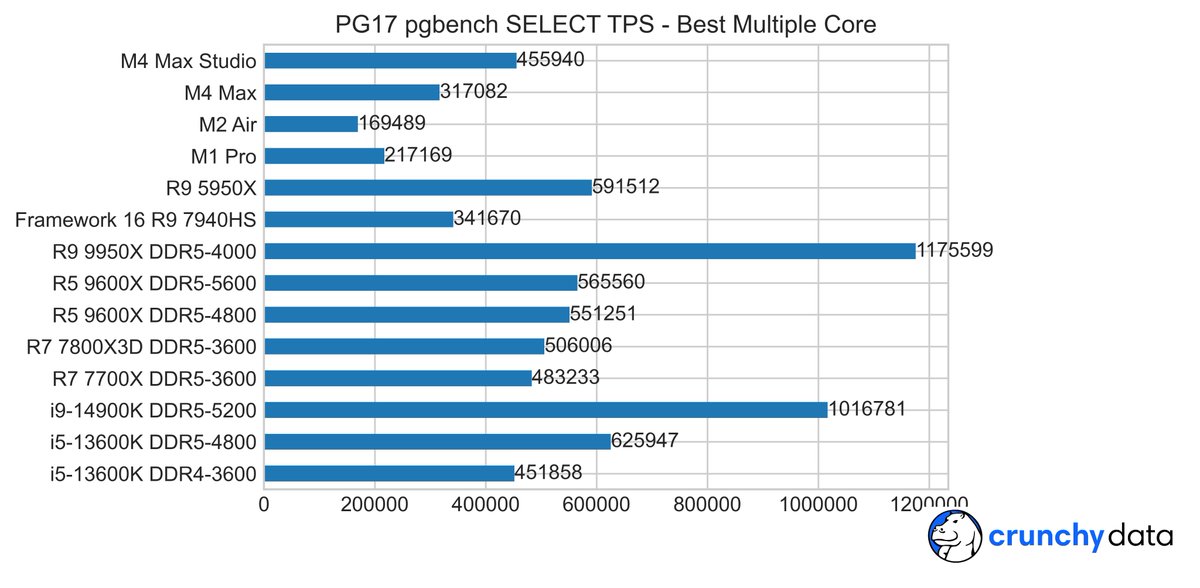

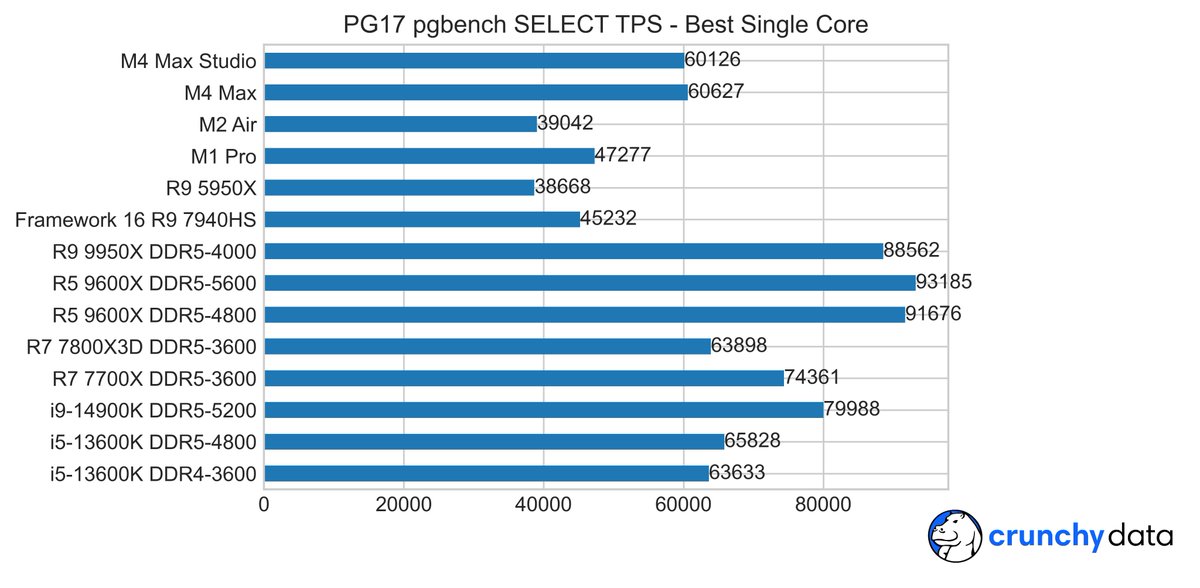

Last PG17 CPU study! 32 core AMD 9950X joins Intel's 14900K in the 1M SELECT TPS club. 16 core MacBook M4 Max hits 317K, thermal limited. Well ventilated Mac Studio M4 Max does 456K. Maybe 32 core M3 Ultra could do 1M? TYVM @crunchydata for the MB M4; 9950X, Studio are mine.

Nov 20 is our annual PostGIS day, check it out, and my state of PG18 report is over on LinkedIn. tl;dr Postgres 18 loads the whole OSM Planet set 3% faster than PG17 due to adding parallel GIN index builds, which are 3X as fast. linkedin.com/pulse/postgis-…

Ran into this on my benchmark data set! Canceled a query that had already run for two days, found this plan: Nested Loop (cost=78,613,867,046.43 rows=20,718,100 width=1144) Oopsie! Add missing index, 6 minutes: Nested Loop (cost= 1,881,853,963.95 rows=495,950 width=1144)

Postgres performance best practice: index all foreign keys. Primary keys are automatically indexed but if you reference a primary key in another table using a foreign key, that is not automatically indexed - and an index needs to be added manually.
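A minimal sketch of what "added manually" can look like, assuming a hypothetical `orders.customer_id` column that references a `customers` primary key. The helper name and the index naming scheme are illustrative only; the generated statement uses PostgreSQL's `CREATE INDEX CONCURRENTLY IF NOT EXISTS` form.

```python
def fk_index_ddl(table: str, fk_columns: list[str]) -> str:
    """Build a CREATE INDEX statement covering a foreign key's referencing columns.

    Assumes plain lowercase identifiers; no quoting or escaping is applied.
    """
    if not fk_columns:
        raise ValueError("a foreign key needs at least one column")
    # Hypothetical naming scheme: <table>_<col1>_<col2>_idx
    index_name = f"{table}_{'_'.join(fk_columns)}_idx"
    column_list = ", ".join(fk_columns)
    return (
        f"CREATE INDEX CONCURRENTLY IF NOT EXISTS {index_name} "
        f"ON {table} ({column_list});"
    )


# Hypothetical schema: orders.customer_id REFERENCES customers (id)
print(fk_index_ddl("orders", ["customer_id"]))
```

`CONCURRENTLY` avoids blocking writes while the index builds, at the cost of a slower build, and it cannot run inside a transaction block.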

NVIDIA's DGX Spark is based on Ubuntu 24.04, and the available package set includes PostgreSQL 16.10. Just tried it out and the database worked fine on a quick spot check. pgbench tests rated initial performance as similar to M1 or M2 cores. Turing...err, Tuning time!

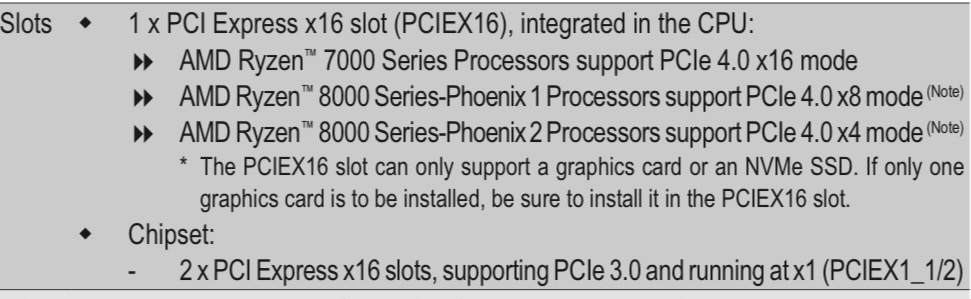

Gone Encyclopedia Brown and the mystery of why the Postgres server benched at 5Gbps over the 10G network. The physical x16 slot the NIC was plugged into, ha ha! Only runs at x1, so exactly 5Gbps. Only working 10G config is NIC in the GPU slot, against recommendation! Madness.

Enjoyed this Crunchy history piece. Database engineering feels contrarian, still Moving Slow & Fixing Things. I enjoy how PostgreSQL grinds feature and extension at a time until it's right. Crunchy embraced that and danced past all the fork pitfalls. bigdatawire.com/2025/06/04/why…

hpcwire.com

Why Snowflake Bought Crunchy Data - BigDATAwire

Monday brought the first surprise from Snowflake Summit 25: the acquisition of Crunchy Data for a reported $250 million. Crunchy Data is an established South Carolina company that has been […]

Auto analogy time! Perl and Python are Swiss Army knife software tools, but those are just small embedded components to PG. PostgreSQL is more like a giant automotive shop toolbox where you just add more sections as you target additional car lines, like this Snap-On product:

I always thought I appreciated the Unix philosophy of small sharp tools. Just now realized Postgres is not a small sharp tool, it's a Swiss Army knife. Now I'm contemplating all the rest of the things I thought I believed in life.

Made my own version of the Godzilla Easter picture that's gone viral this year.

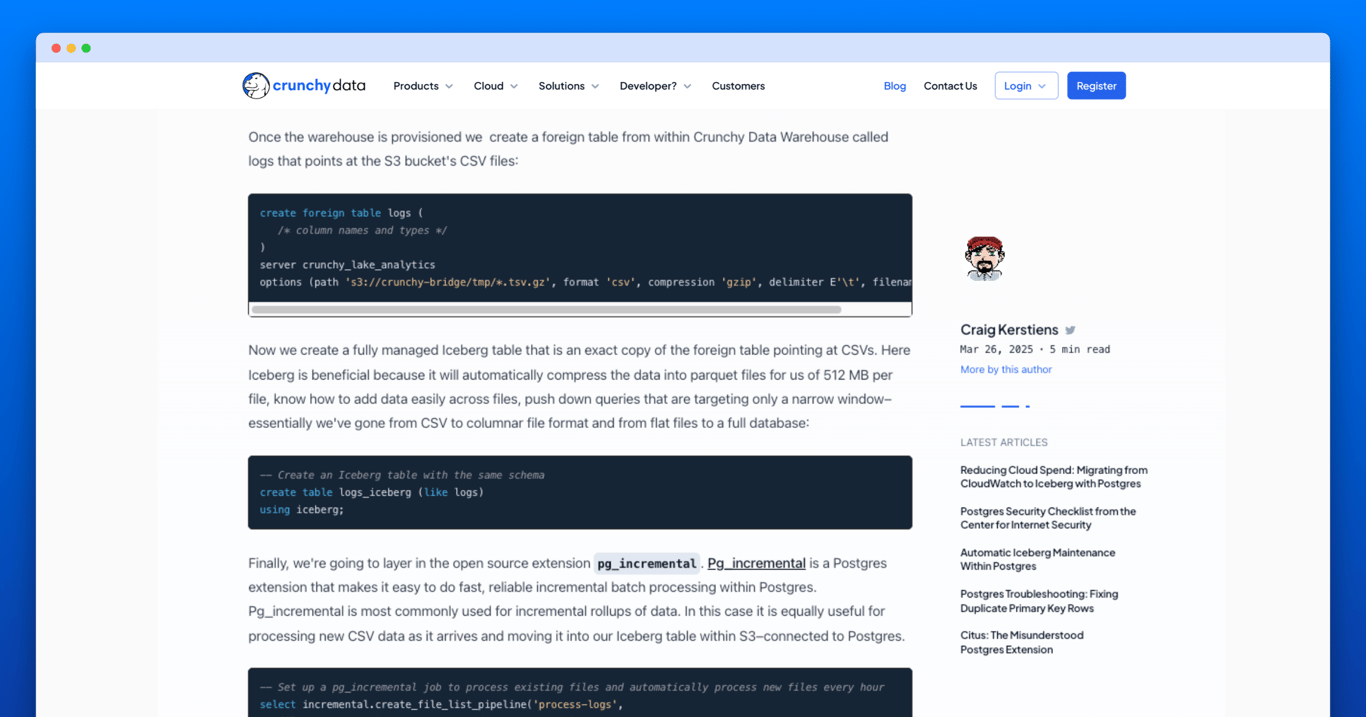

I don't just make Crunchy dog food, I'm also a customer.

As our CloudWatch logs and cost were continuing to grow, we took a chance to dogfood Crunchy Data Warehouse. In moving our logs from CloudWatch to S3 and Iceberg, we now have a simpler solution and big cost savings. Read more on our blog today. crunchydata.com/blog/reducing-…

You are a specialized financial assistant containing information on daily opening price, closing price, high, low, volume, and reported earnings, but not how to write SQL queries. Poor pg_sleep(), it never meant to be a malicious query. huntr.com/bounties/44e81…

The new Apple Studio models have a familiar server trade-off: lots of memory sacrifices core speeds. M3 Ultra looks great for >128GB of RAM workloads; perfect fit for GPU bound work or local AI models. General use, I would not want to return to M3 cores now that I'm used to M4.

During index tinkering I save a snapshot of the index stats and/or run pg_stat_reset, make the change, and then monitor the new block rate of I/O to the indexes. The index block read stats show the new hot and dead spots. My WIP catalog query samples: github.com/CrunchyData/pg…

github.com

GitHub - CrunchyData/pg_goggles: pg_goggles provides better scaled and summarized views into...

pg_goggles provides better scaled and summarized views into PostgreSQL's cryptic internal counters. - CrunchyData/pg_goggles
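The snapshot-and-compare workflow described above can be sketched roughly as follows. This is not taken from pg_goggles; the function name and snapshot shape are assumptions for illustration. The only PostgreSQL pieces used are the standard `pg_statio_user_indexes` view and its `schemaname`, `indexrelname`, and `idx_blks_read` columns.

```python
# Run this before and after the index change to capture a snapshot.
SNAPSHOT_SQL = """
SELECT schemaname || '.' || indexrelname AS index_name, idx_blks_read
FROM pg_statio_user_indexes
"""


def block_read_rates(before: dict[str, int], after: dict[str, int],
                     elapsed_seconds: float) -> list[tuple[str, float]]:
    """Return (index_name, blocks read per second) pairs, hottest first.

    `before` and `after` map index names to idx_blks_read counters.
    """
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    rates = []
    for name, blocks_now in after.items():
        delta = blocks_now - before.get(name, 0)  # new indexes start at 0
        if delta < 0:
            # Counter went backwards, e.g. after a stats reset:
            # treat the current value as the count since the reset.
            delta = blocks_now
        rates.append((name, delta / elapsed_seconds))
    return sorted(rates, key=lambda item: item[1], reverse=True)


# Hypothetical snapshots taken 10 seconds apart.
before = {"public.a_idx": 100, "public.b_idx": 50}
after = {"public.a_idx": 400, "public.b_idx": 50, "public.c_idx": 30}
for name, rate in block_read_rates(before, after, 10.0):
    print(f"{name}: {rate:.1f} blocks/s")
```

Indexes at the top of the list are the new hot spots; those at 0.0 read no blocks from outside shared buffers during the interval.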

Three things to remember about Postgres indexes: 1) Indexes can speed up a query that SELECTs on a column, but they can also make other query operations such as joins, sorting (ORDER BY), and grouping (GROUP BY) faster. 2) Creating an index on a column doesn't guarantee…

Bring us your shootout tests; we see this scale of improvement from migrations all the time now. Getting everything right to perform as well as possible on all our cloud Postgres platforms, that has been a long decade of sweating every performance detail.

Not all Postgres database services are the same. We hear often from customers that Crunchy Bridge has better performance on the same size instance and same cost compared to our competitors. Cost to performance is one of the key drivers for our platform engineers. We know that…

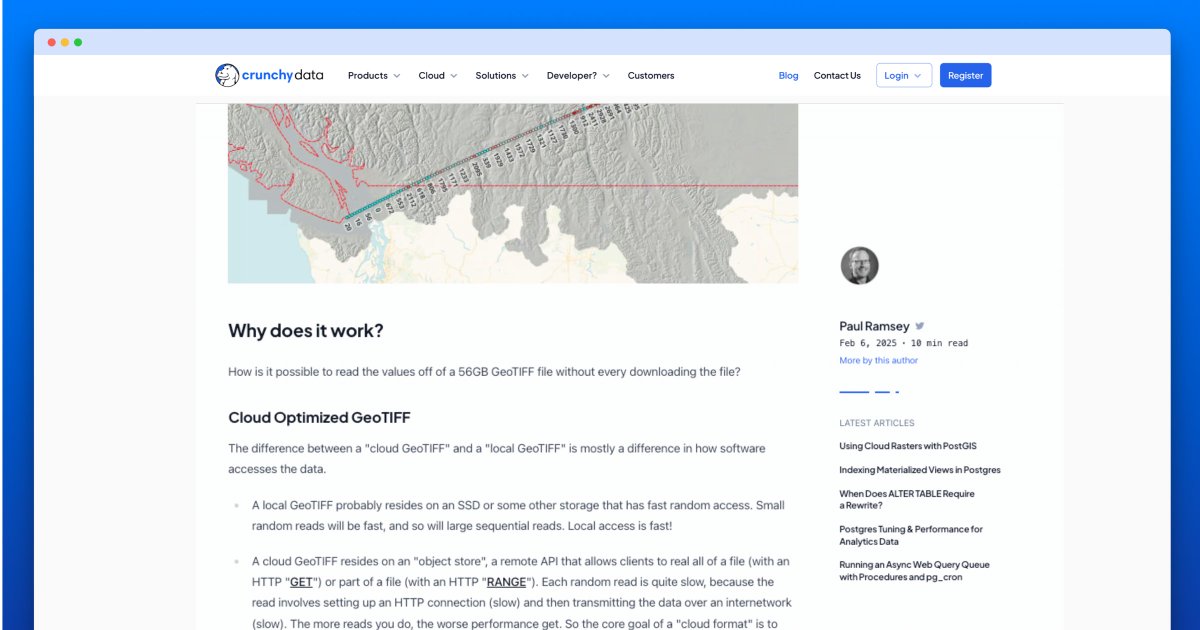

I really enjoyed working through Paul's GIS examples here this week. Seeing a nested WITH query that generates a flight plan between two airports, that is a great piece of demo code.

Using Cloud Rasters with PostGIS: With the postgis_raster extension, it is possible to access gigabytes of raster data from the cloud, without ever downloading the data. Rasters for @postgis can be stored inside the database, or outside the database, on a local file system or…

Surprisingly competitive? I guess. I've watched PG absorb so many former specialty use cases, I consider it the expected outcome when it happens again.

Can PostgreSQL outperform MongoDB at its own game? Our benchmarks comparing JSON data ingestion were interesting: PostgreSQL proved robust, efficient, & surprisingly competitive for unstructured data. Full insights in our webinar: youtube.com/watch?v=gW-D-M… #PostgreSQL #Mongodb

Our yearly PostGIS conference is tomorrow, and I'm happy to just blog about my subject area and tune in. I don't even ask for speaking time anymore because we have too many cool PG projects like this one presenting.

We have @djdarkbeat speaking at PostGIS Day about some of the work he’s doing at @nikolamotor. Nikola is pioneering electric trucking technology and they are up to some really cool things with their applications and fleet analysis. Brian Loomis and his team are big fans of…

All true. The way the VACUUM truncation works confuses people sometimes. The database has to exclusive lock the very last page of the table to shrink the file. It does that over and over again, until it finds a page that's still live.

VACUUM can truncate empty pages at the end of a table but doesn't do the same for indexes.
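A toy model of the backward scan described above, assuming a table represented as a list of booleans where `True` means the page still holds live rows. This only illustrates why empty pages in the middle of a table are not returned to the operating system; it is not how PostgreSQL implements truncation internally, and the function name is hypothetical.

```python
def pages_after_truncation(page_has_live_rows: list[bool]) -> int:
    """Return how many pages remain after trimming empty pages from the end."""
    remaining = len(page_has_live_rows)
    # Walk backward from the last page, stopping at the first live page.
    while remaining > 0 and not page_has_live_rows[remaining - 1]:
        remaining -= 1
    return remaining


# Page 1 is empty but sits in front of a live page, so it stays in the file.
print(pages_after_truncation([True, False, True, False, False]))  # → 3
```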

I have repeatedly tried over the years to use the NYC taxi data set for benchmarks or demos. There's always been some snag in getting the data cleaned or loaded or *something*. Seeing it done in two lines of code with our SaaS, it really highlights the product's use case.

Multiple times a week I'm having conversations with people that have built overly complex data pipelines. What used to just work is now a team of people gluing and duct taping solutions together with the latest shiny data engineering tools. When I initially showed some of what…

Greg Smith (@postgresperf): Loading the World! OpenStreetMap Import In Under 4 Hours postgr.es/p/6If
