You might like

Very cool blog by @character_ai diving into how they trained their proprietary model Kaiju (13B, 34B, 110B), before switching to OSS model, and spoiler: it has Noam Shazeer written all over it. Most of the choices for model design (MQA, SWA, KV Cache, Quantization) are not to…

Multi-vector embeddings (e.g., ColBERT) are powerful but expensive to scale. MUVERA cuts their memory costs by 70%. MUVERA (Multi-Vector Retrieval via Fixed Dimensional Encodings) is an interesting approach by Google Research. It transforms multi-vector representations into…

wtf, a 80-layers 321M model???

Synthetic playgrounds enabled a series of controlled experiments that brought us to favor extreme depth design. We selected a 80-layers architecture for Baguettotron, with improvements across the board on memorization of logical reasoning: huggingface.co/PleIAs/Baguett…

卡内基梅隆大学机器学习系主任 Zico Kolter 要开一门新课《Intro to Modern AI》,课程内容是从零构建一个 PyTorch 聊天机器人。 讲的就是我们每天接触的 AI 是怎么跑起来的。 我国内就读大学时的整套课程体系就是采购的 CMU 的内容,亲身体验过,CMU 在教学体系这块是真的强。…

I'm teaching a new "Intro to Modern AI" course at CMU this Spring: modernaicourse.org. It's an early-undergrad course on how to build a chatbot from scratch (well, from PyTorch). The course name has bothered some people – "AI" usually means something much broader in academic…

MiniMind:3块钱、2小时训练一个小GPT 从头开始训练一个小型LLM,仅0.02B参数,没有使用第三方封装好的库,全部使用PyTorch重构实现。是LLM的开源复现,也是一个LLM入门实操教程。 Github:github.com/jingyaogong/mi…

7+ main precision formats used in AI ▪️ FP32 ▪️ FP16 ▪️ BF16 ▪️ FP8 (E4M3 / E5M2) ▪️ FP4 ▪️ INT8/INT4 ▪️ 2-bit (ternary/binary quantization) General trend: higher precision for training, lower precision for inference. Save the list and learn more about these formats here:…

My new field guide to alternatives to standard LLMs: Gated DeltaNet hybrids (Qwen3-Next, Kimi Linear), text diffusion, code world models, and small reasoning transformers. magazine.sebastianraschka.com/p/beyond-stand…

With the release of the Kimi Linear LLM last week, we can definitely see that efficient, linear attention variants have seen a resurgence in recent months. Here's a brief summary of what happened. First, linear attention variants have been around for a long time, and I remember…

想深入了解 ChatGPT、Claude 这些 AI 背后的训练机制,尤其是它们背后那套如何通过人类反馈变得越来越智能的原理。 可以看下,来自加州大学数学系教授 Ernest K. Ryu 开设的《大语言模型的强化学习》课程,配套 PPT 和视频可以免费学习。 课程从深度强化学习基础讲起,逐步深入到 Transformer…

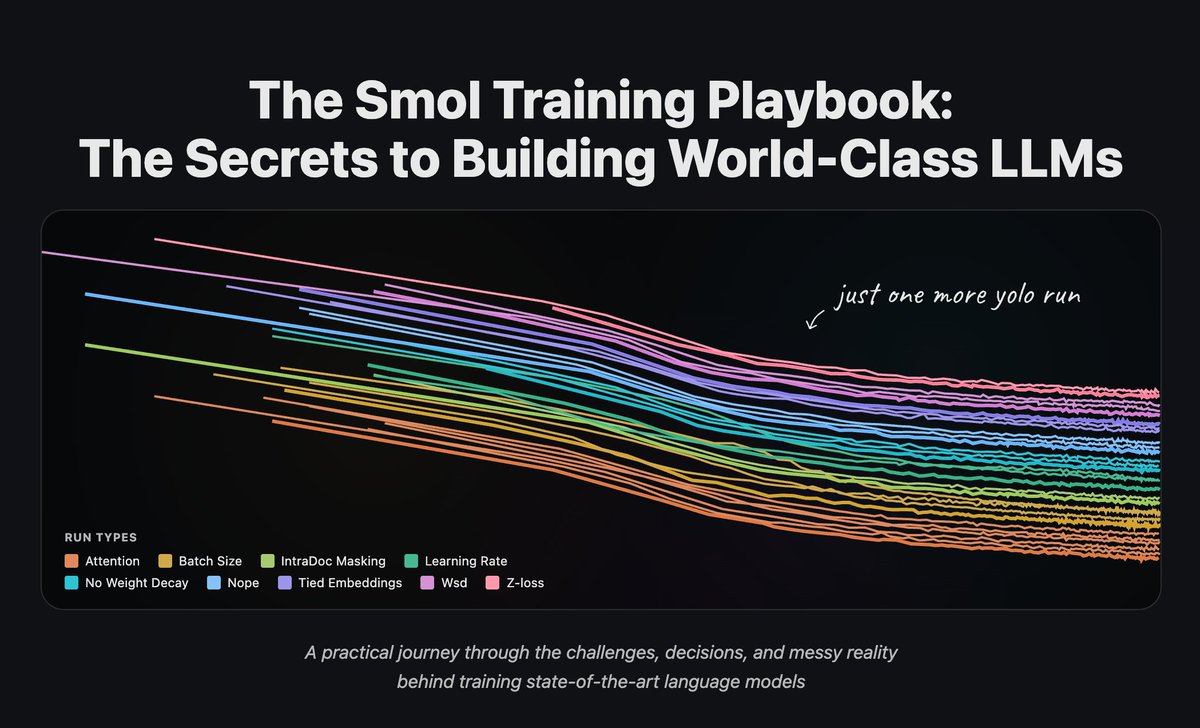

HuggingFace 发布的超长技术博客(200页,2-4天才能读完),完整记录了团队训练 SmolLM3 的全过程,对于想训练小模型的团队,必看!…

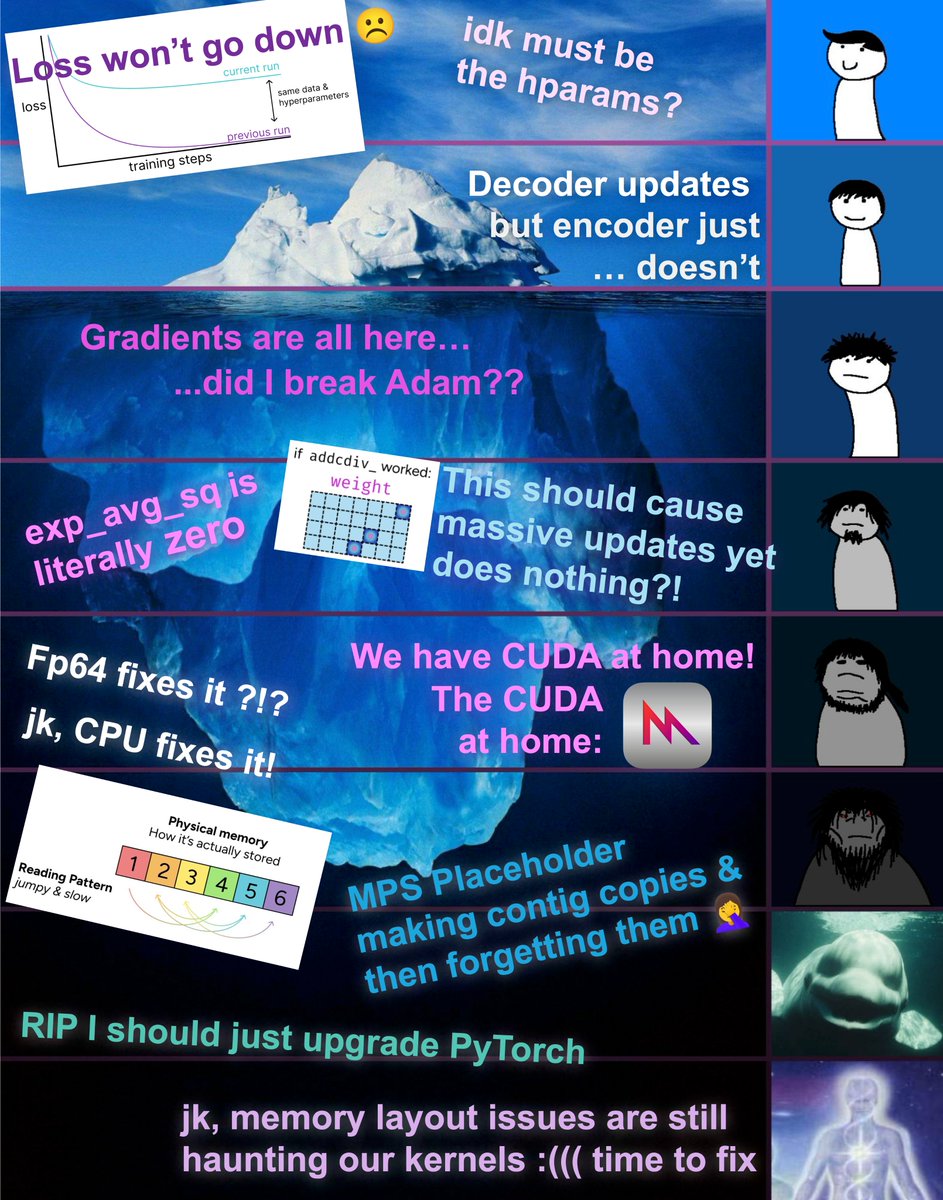

Beautiful technical debugging detective longread that starts with a suspicious loss curve and ends all the way in the Objective-C++ depths of PyTorch MPS backend of addcmul_ that silently fails on non-contiguous output tensors. I wonder how long before an LLM can do all of this.

New blog post: The bug that taught me more about PyTorch than years of using it started with a simple training loss plateau... ended up digging through optimizer states, memory layouts, kernel dispatch, and finally understanding how PyTorch works!

如何给 llama.cpp 推理引擎增加新模型架构的教程! 来自 pwilkin,没错,就是前几天给 llama.cpp 增加 Qwen3-Next 架构的大佬。 教程很不错,我觉得甚至能当 prompt 用,把新架构和这篇教程塞给大模型,直接让大模型开始实现你需要的大模型架构。 地址:github.com/ggml-org/llama…

在花费 60 个小时解构 Elon Musk 的传记后,Founders Podcast 主持人 David Senra 提炼了 100 条 Elon 三十年来的职业生涯法则,强烈推荐本期播客!这里列出了 31 条极其宝贵的公司运作原则: 1. 使命第一; 2. 绝不退缩; 3. 疯狂的紧迫感是我们的行动准则; 4. 产品设计应由工程师驱动; 5.…

New episode: "How Elon Works" This episode covers the insanely valuable company-building principles of Elon Musk A few notes from the episode: 1. The mission comes first. 2. Retreat is not an option. 3. A maniacal sense of urgency is our operating principle. 4. Product…

Tiny Reasoning Language Model (trlm-135) - Technical Blogpost⚡ Three weeks ago, I shared a weekend experiment: trlm-135, a tiny language model taught to think step-by-step. The response was incredible and now, the full technical report is live: shekswess.github.io/tiny-reasoning…

想学习高级逆向工程技术,特别恶意软件分析这块,网上资料要么过于零散,要么太理论化缺少实操,不知道该从何学起。 恰巧,在 GitHub 上发现了 CS7038-Malware-Analysis 这门完整的大学课程,为我们提供了一条从零到精通的系统学习路径。…

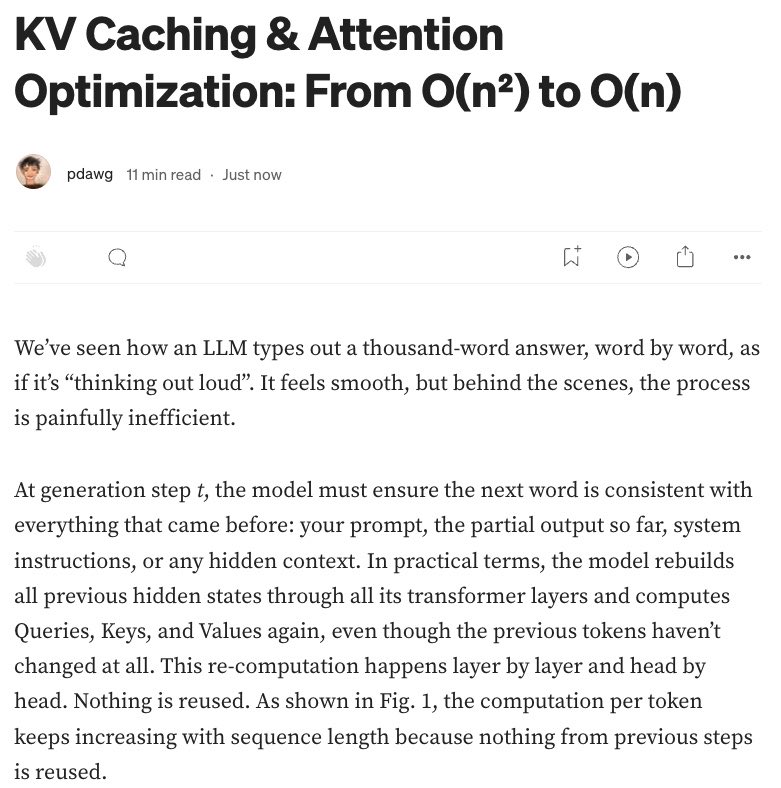

wrote a blog on KV caching. link in comments.

How to become expert at thing: 1 iteratively take on concrete projects and accomplish them depth wise, learning “on demand” (ie don’t learn bottom up breadth wise) 2 teach/summarize everything you learn in your own words 3 only compare yourself to younger you, never to others

United States Trends

- 1. Steph 61.8K posts

- 2. Wemby 28.8K posts

- 3. Spurs 29.3K posts

- 4. Draymond 11.4K posts

- 5. Clemson 11K posts

- 6. Louisville 10.8K posts

- 7. #SmackDown 49.5K posts

- 8. Zack Ryder 15.2K posts

- 9. Aaron Fox 2,024 posts

- 10. #DubNation 1,943 posts

- 11. Harden 13K posts

- 12. Dabo 1,960 posts

- 13. Brohm 1,604 posts

- 14. Marjorie Taylor Greene 42.4K posts

- 15. Landry Shamet 5,709 posts

- 16. Massie 51.8K posts

- 17. Matt Cardona 2,780 posts

- 18. Mitch Johnson N/A

- 19. #OPLive 2,444 posts

- 20. Miller Moss N/A

You might like

-

Ning Ding

Ning Ding

@stingning -

Jie Huang

Jie Huang

@jefffhj -

Zhuosheng Zhang

Zhuosheng Zhang

@zhangzhuosheng -

Shizhe Diao

Shizhe Diao

@shizhediao -

Zengzhi Wang

Zengzhi Wang

@SinclairWang1 -

WestlakeNLP

WestlakeNLP

@NlpWestlake -

Yu Zhang

Yu Zhang

@yuz9yuz -

Chenghao Yang

Chenghao Yang

@chrome1996 -

jinyang (patrick) li

jinyang (patrick) li

@jinyang34647007 -

Qingxiu Dong

Qingxiu Dong

@qx_dong -

Shuyan Zhou

Shuyan Zhou

@syz0x1 -

Tianbao Xie

Tianbao Xie

@TianbaoX -

Leo Liu

Leo Liu

@ZEYULIU10 -

Yujia Qin

Yujia Qin

@TsingYoga -

Ming Zhong

Ming Zhong

@MingZhong_

Something went wrong.

Something went wrong.