Satyabrat Singh

@satyabratsingh

Interest in Software, ML, Quant Research, MSc in ML from UCL, MSc Maths from IIT

You might like

Some learnings while training large #NeuralNets for Quantitative dataset - Data, very important - ensure that data is correctly distributed and has variety of signals - Normalise before feeding to NN - Condense features before feeding - Attention is difficult - more to come :)…

So, some insights for me from Ilya's podcast - Continual learning would be more effective as it's same as evolution for humans - Some value functions might be ingrained in human genes. - Might have hit limit of scaling, so need new ways of pre-training

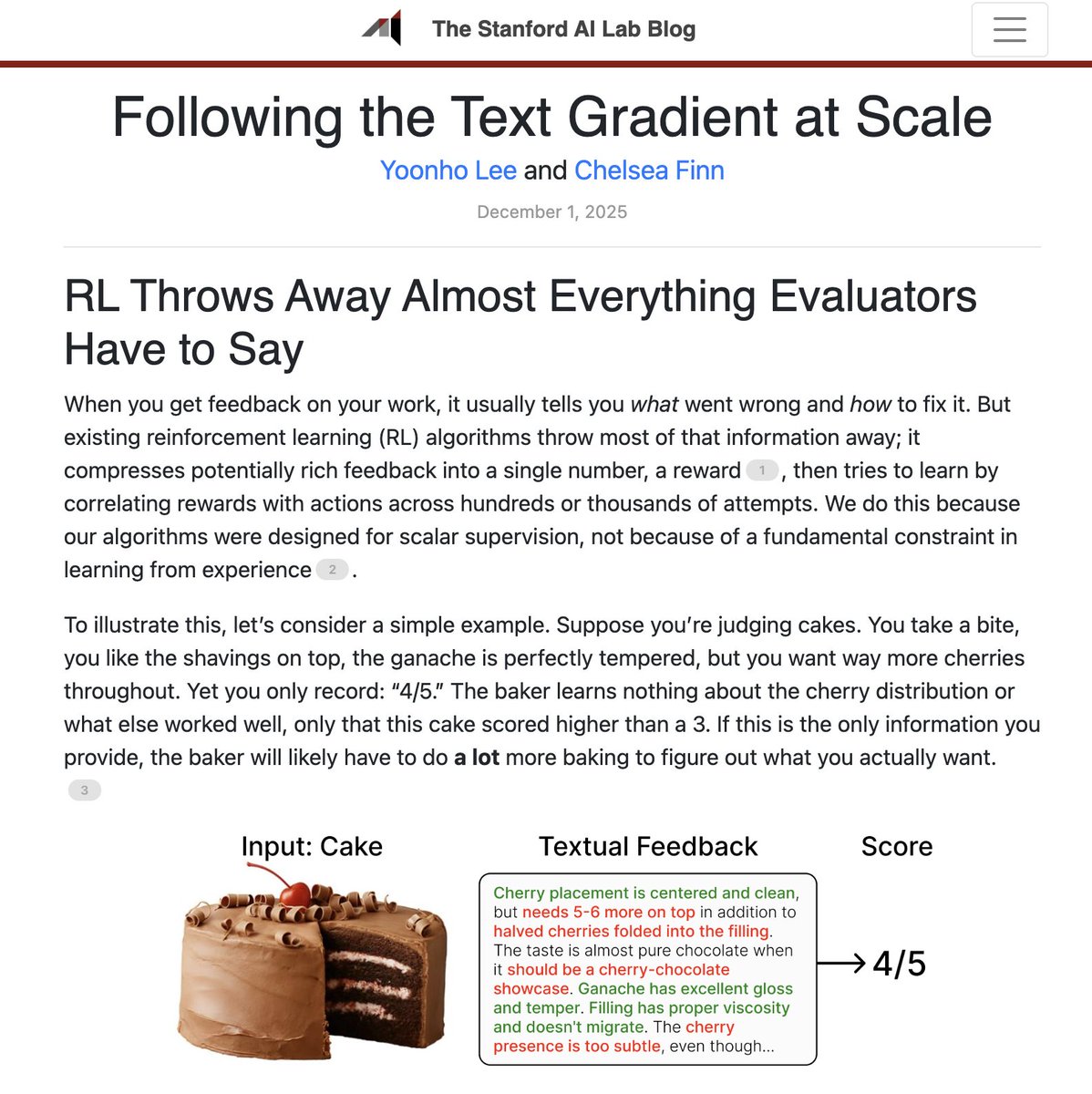

Following the Text Gradient at Scale We wrote a @StanfordAILab blog post about the limitations of RL methods that learn solely from scalar rewards + a new method that addresses this Blog: ai.stanford.edu/blog/feedback-… Paper: arxiv.org/abs/2511.07919

So contextual-based retrieval turns out to be effective. Even with very granular chunking, search performance improved, LLM judge gave higher scores.. More details on anthropic.com/engineering/co…

#Gemini3 is indeed good in reasoning tasks, helped to optimize a neural network, where it suggested, rather than optimizing the network, we could tweak the loss function, pretty smart !!!

If you want to learn AI from the experts, keep reading. 💡 Together with @UCL, we made a free AI Research Foundations curriculum – available now on Google Skills. With lessons from a Gemini Lead like @OriolVinyalsML, you'll explore how to code better, fine-tune an AI model and…

Glad to introduce our new work "Game-Theoretic Regularized Self-Play Alignment of Large Language Models". arxiv.org/abs/2503.00030 🎉 We introduce RSPO, a general, provably convergent framework to bring different regularization strategies into self-play alignment. 🧵👇

Thrilled to introduce our test-time algorithm for robust multi-objective alignment! Huge kudos to my incredible collaborators for making this happen!

❓No clue about the priorities of the objectives? ❗️ Focus on robustness at test-time! 🚀Robust Multi-Objective Decoding (RMOD) is a novel inference-time alignment algorithm that produces robust responses under multiple objectives to consider.

❓No clue about the priorities of the objectives? ❗️ Focus on robustness at test-time! 🚀Robust Multi-Objective Decoding (RMOD) is a novel inference-time alignment algorithm that produces robust responses under multiple objectives to consider.

🚀Sampling = Reinforcement Learning🤖 This means you can train a neural sampler using RL! We introduce the Value Gradient Sampler (VGS)—a novel diffusion sampler that leverages value functions to generate samples from an unnormalized density. 📄 Paper: arxiv.org/abs/2502.13280

(1/9) Flying to #NeurIPS2024 ? Our paper arxiv.org/abs/2405.20304 and blog shorturl.at/aIShm might be an interesting read on ur long flight to Vancouver! Accepted at #NeurIPS2024 and excited to present it as a poster on 13th December (1-4pm)!

On my way to #NeurIPS2024 ✈️ We are presenting several papers this year, including REDUCER, ARDT, GR-DPO/IPO, invariant BO. I’d love to connect and chat about topics like Alignment, RL/RLHF, LLM deception, robustness, and reasoning!

🚀🚀🚀 Introducing Adversarially Robust Decision Transformer (ARDT) 🚀🚀🚀 The first Decision Transformer for adversarial game-solving and robust decision-making, accepted to #NeurIPS #NeurIPS2024 🚨Change slightly : Replacing returns-to-go with minimax return. 🚨 Improve…

15 years ago today, I got a second chance at life… never realized how close death could be #MumbaiTerrorAttack #GratefulForLife

📣 If you've got an objective that exhibits symmetries, you should be using invariant kernel BO 📣 🚀 More sample efficient than constrained/naive BO! 🚀 More compute efficient than data augmentation! 🧵 1/4 #NeurIPS2024 #BayesianOptimisation #ai

This book is an absolute gem for understanding the intricacies of neural nets. Huge thanks to @SimonScardapane #MachineLearning #DeepLearning #AI

DeepSets are useful where we need permutation invariance. Imagine a batch of data with shape (n,m) —we split this batch into k sets, each of size (k,m) feed them through a neural network, and aggregate the outputs as: f(X) = ∑(i=1 to k) g(x_i). This method captures the essence…

The 2nd edition of my #ReinforcementLearning 477-page textbook for my course at ASU has just been published and is freely available at the book's website web.mit.edu/dimitrib/www/R… which also contains slides, videolectures, and supporting material

Competition Launch Alert! Realtime Marketdata hosted by @JaneStreetGroup 🎯 Challenge: Develop an ML forecasting model using real-world data derived from production systems 💰 Prize Pool: $120,000 ⏰ Entry Deadline: 12/30/2024 Explore the difficult dynamics that shape financial…

United States Trends

- 1. Lobo 44.4K posts

- 2. Indiana Senate 30.9K posts

- 3. Indiana Republicans 20.6K posts

- 4. Supergirl 228K posts

- 5. Unknown 44.1K posts

- 6. Obamacare 116K posts

- 7. Tyler Robinson 36.7K posts

- 8. Letitia James 13.1K posts

- 9. Rivian 5,549 posts

- 10. Bennie 43.1K posts

- 11. Do Kwon 4,456 posts

- 12. #TheGameAwards 44.8K posts

- 13. Kristi Noem 84.3K posts

- 14. Chess 46.1K posts

- 15. Superman 61.5K posts

- 16. Aquaman 2,339 posts

- 17. $AVGO 14.8K posts

- 18. Mike Pence 3,230 posts

- 19. GPT-5.2 15.4K posts

- 20. #SleighYourHolidayGiveaway N/A

Something went wrong.

Something went wrong.