Selena Ling 凌子涵

@seleniumlzh

U of Toronto CS PhD at DGP | Prev. @AdobeResearch @NVIDIA : )

You might like

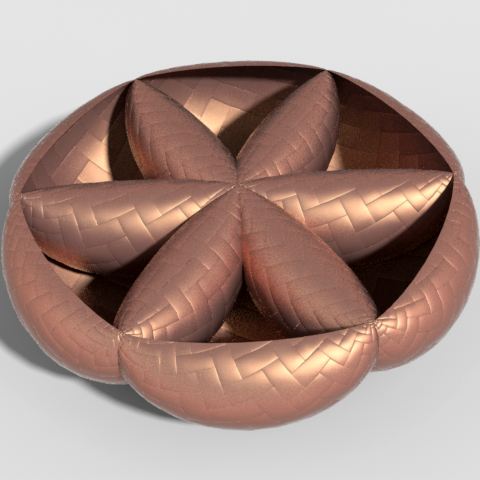

Our #Siggraph25 work found a simple, nearly one-line change that greatly eases neural field optimization for a wide variety of existing representations. “Stochastic Preconditioning for Neural Field Optimization” w/ @merlin_ND @_AlecJacobson @nmwsharp

📢 Lyra: Generative 3D Scene Reconstruction via Video Diffusion Model Self-Distillation Got only one or a few images and wondering if recovering the 3D environment is a reconstruction or generation problem? Why not do it with a generative reconstruction model! We show that a…

📢📢📢 3D Gaussian Flats @ #NeurIPS2025 A hybrid 2D/3D representation that reconstructs photorealistic scenes. 🔗 Project: theialab.github.io/3dgs-flats 📄 ArXiv: arxiv.org/abs/2509.16423 💻 Code: github.com/theialab/3dgs-…

Season 1 of Toronto School of Foundation Modelling kicks off this Thursday at New Stadium!!! 60 people will be attending weekly sessions for 3 months, learning to build Foundation Models from scratch. Around 10 guest speakers (more to come) will be flying to Toronto to talk…

Continuing to press forward with the range and depth of learning opportunities. This week we have several workshops, meet-ups, a deep-dive seminar, the beginning of a new lecture series, as well as an exhibit happening at New Stadium. Links below.

I’m excited to announce that our paper, “Learning Riemannian Metrics for Interpolating Animations,” has been accepted to GSI 2025! 🧵 co-authored with @vm2358 at @UofT and @ninamiolane at the @UCSB @geometric_intel lab! gi.ece.ucsb.edu/node/217

😍

Every lens leaves a blur signature—a hidden fingerprint in every photo. In our new #TPAMI paper, we show how to learn it fast (5 mins of capture!) with Lens Blur Fields ✨ With it, we can tell apart ‘identical’ phones by their optics, deblur images, and render realistic blurs.

“Everyone knows” what an autoencoder is… but there's an important complementary picture missing from most introductory material. In short: we emphasize how autoencoders are implemented—but not always what they represent (and some of the implications of that representation).🧵

Check out our new paper on robust motion segmentation! Wanna run your SfM pipeline on dynamic scenes? Consider using our RoMo masks to get improvements!! 🚀

📢📢📢 RoMo: Robust Motion Segmentation Improves Structure from Motion romosfm.github.io arxiv.org/pdf/2411.18650 TL;DR: boost your SfM pipeline on dynamic scenes. We use epipolar cues + SAMv2 features to find robust masks for moving objects in a zero-shot manner. 🧵👇

For folks in the @siggraph community: You may or may not be aware of the controversy around the next #SIGGRAPHAsia location, summarized here: cs.toronto.edu/~jacobson/webl… If you're concerned, consider signing this letter: docs.google.com/document/d/1ZS… via this form docs.google.com/forms/d/e/1FAI…

Total Pixel Space, which won the Grand Prix at this year's AIFF, is a wonderful video essay and, by the way, one of the clearest descriptions of universal simulation (as search in the space of all possible universes) youtube.com/watch?v=zpAeyg…

Our work was featured by MIT News today! Had so much fun working on this project with Silvia Sellán, Natalia Pacheco-Tallaj and @JustinMSolomon. Can't wait to present it at SIGGRAPH this summer! news.mit.edu/2025/animation…

📢📢📢 Neural Inverse Rendering from Propagating Light 💡 Our CVPR Oral introduces the first method for multiview neural inverse rendering from videos of propagating light, unlocking applications such as relighting light propagation videos, geometry estimation, or light…

You might like

- Srinath Sridhar @drsrinathsridha
- Andrea Tagliasacchi 🇨🇦 @taiyasaki
- Qianqian Wang @QianqianWang5
- Justin Solomon @JustinMSolomon
- Qianli Ma @qianli_m
- Jun Gao @JunGao33210520
- Nicole Feng @nicolefeng_
- Peter Hedman @PeterHedman3
- Esther Lin @estheroate
- Ana Dodik @ana_dodik
- Sarah Kushner @helloyesimsarah
- Zhecheng Wang @exfilmstudent