Content you might like

Insightful! I have been thinking about "thinking in video" too

Some further thoughts on the idea of "thinking with images": 1) zero-shot tool use is limited -- you can’t just call an object detector to do visual search. That’s why approaches like VisProg/ViperGPT/Visual-sketchpad will not generalize or scale well. 2) visual search needs to…

I am on the job market! Contact me if you are interested in building multi-modal agentic systems that understand behaviors!

✨ Introducing a new #SOTA action recognition large multimodal language model: #LLaVAction! Understanding human behavior requires recognizing actions—a challenging task given the complexity of behavior. Large multimodal language models (#MLLMs) offer a promising path forward,…

So sweet to see them standing together

Automating the Search for Artificial Life with Foundation Models

Presents ASAL, the first use of vision-language models in Artificial Life, discovering diverse, novel simulations across substrates.

proj: pub.sakana.ai/asal/
abs: arxiv.org/abs/2412.17799

🚀 DLC AI Residents call for 2025! This year, we are doing something a little different to support even more underrepresented individuals 🥰 We are having in-person workshops again 🙌, & we are taking the 2025 AI Residency on the Road! 🛤️ Applications open now! deeplabcutairesidency.org

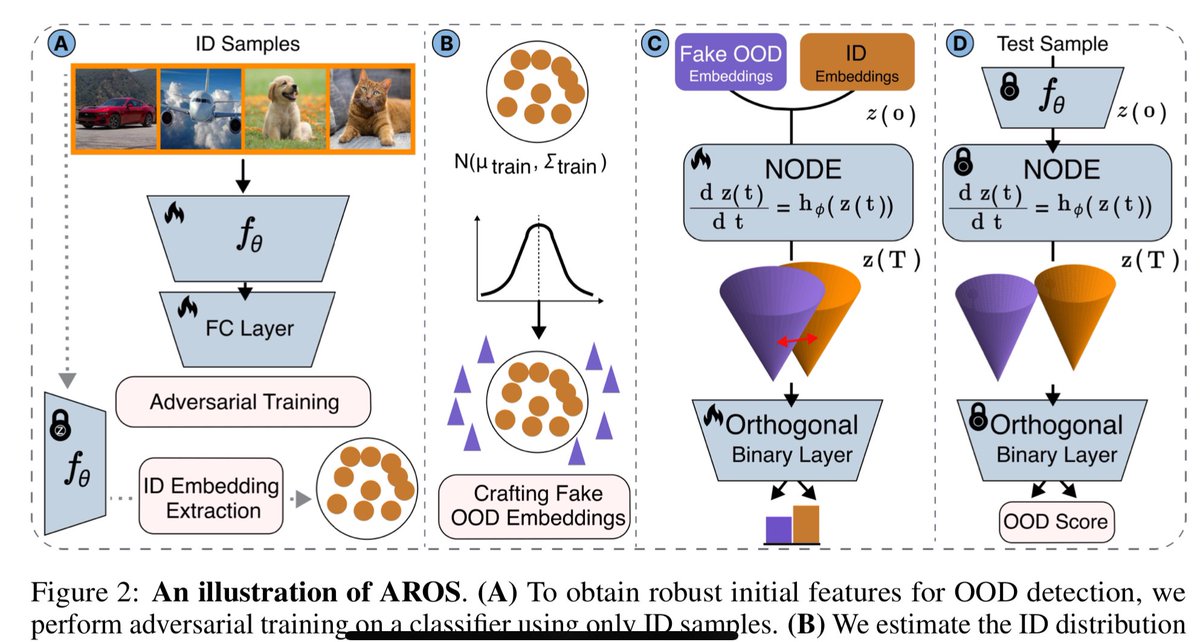

🚨adversarial robustness is becoming even more critical as AI systems are deployed in the real-world, but how can we detect outliers (adversarials) without having trained on them 👀? In our new preprint, we introduce AROS💍: It leverages neural ODEs and Lyapunov stability…

Really cool!

✨🥰 check out our article (and cover 🤩) about Decoding the Brain in @CellCellPress cell.com/cell/fulltext/… We review the mathematics, current approaches, and muse about the future… #BCI #neuraldecoding #neuroAI Thanks to my awesome co-authors Adriana Perez Rotondo,…

Come check out this really cool paper if you are at ECCV!

I'm thrilled to present our paper "Elucidating the Hierarchical Nature of Behavior with Masked Autoencoders" at #ECCV24! 🎉 We focus on self-supervised learning for action segmentation to uncover the hierarchical structure of behavior. Paper: ecva.net/papers/eccv_20… 👇🧵

How does sensorimotor (S1/M1) cortex support adaptive motor control? Come find out in our latest preprint, which spans the development of a full adult forelimb model + physics simulations, neural-modeling for control, complex 🐭behavior 🕹️, large-scale imaging, and of course…

DLC Residency 2024 Recap! 🎓💜 🎉Check out the awesome video from DLC Community Manager, @TheVetFuturist, and the 2024 Residents! youtu.be/lyJ2NDKng3g?si…

DeepLabCut AI Residency 2024! 🎓💜

🎉 introducing the 2024 AI Residents!!! We welcome @StuckertAnna @mrc_canela and @dikra_masrour 👏 Read about them on the blog: deeplabcut.medium.com/exploring-the-…

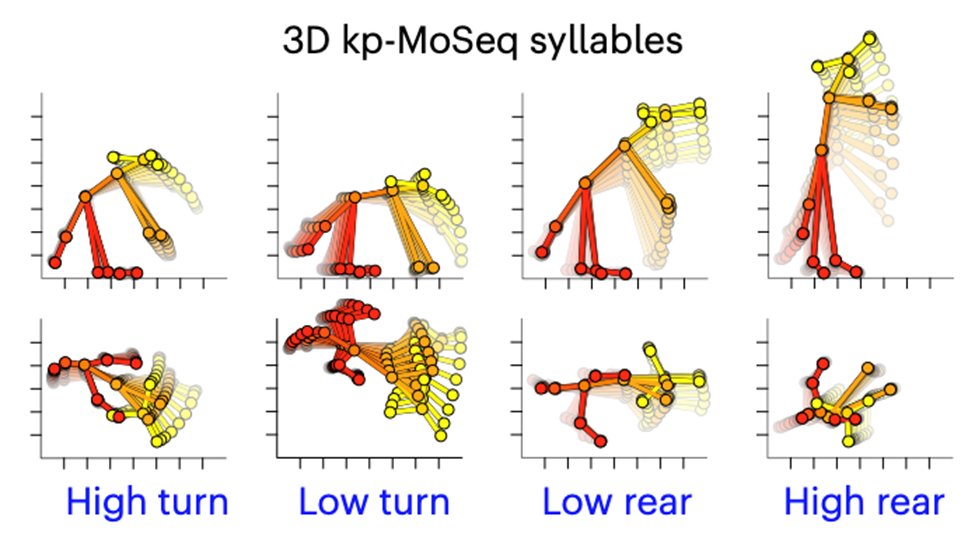

🎉 Happy to have contributed a bit to this with my PhD student @shaokaiyeah, where we use @DeepLabCut #SuperAnimal foundation model for pose to do fully end-to-end unsupervised behavioral analysis with #keypointMoSeq. Huge congrats to first author Caleb Weinreb, and Scott…

Keypoint-MoSeq from the @Datta_lab is an unsupervised behavior segmentation algorithm that extracts behavioral modules from keypoint tracking data acquired with diverse algorithms, as demonstrated on data from mice, rats and fruit flies. (1/2)

the pace of progress blows my mind

AI music videos are so hot right now 🔥

