Giorgio Robino

@solyarisoftware

Conversational LLM-based Applications Specialist @almawave | Former ITD-CNR Researcher | Soundscapes (Orchestral) Composer.

My preprint "Conversation Routines: A Prompt Engineering Framework for Task-Oriented Dialog Systems" now has a revised version on @arXiv with updated experimental results. Here’s a thread with the changes! 🧵 ➡️ Paper: arxiv.org/abs/2501.11613 1/ What’s CR?

The 12 Architectural Innovations That Prompt-Oriented Programming Adds to Context Engineering Prompt-Oriented Programming goes beyond good context engineering. After mapping every architectural decision in Claude's skills system, I found 12 patterns that don't exist in…

We are hosting a series of events for the community of Python builders in AI: meet the #PyAI event series. The next events will be hosted in SF, NY, and London, followed by a conference in early 2026. Register and join us! pydantic.dev/articles/pydan…

Ollama v0.12.8 is here! This release improves Qwen 3 VL performance and includes improvements and bug fixes in Ollama's engine. ❤️ Happy Halloween! 👻👻👻

yesterday, Hugging Face dropped a 214-page MASTERCLASS on how to train LLMs > it’s called The Smol Training Playbook > and if you want to learn how to train LLMs, > this GIFT is for you > this training bible walks you through the ENTIRE pipeline > covers every concept that matters…

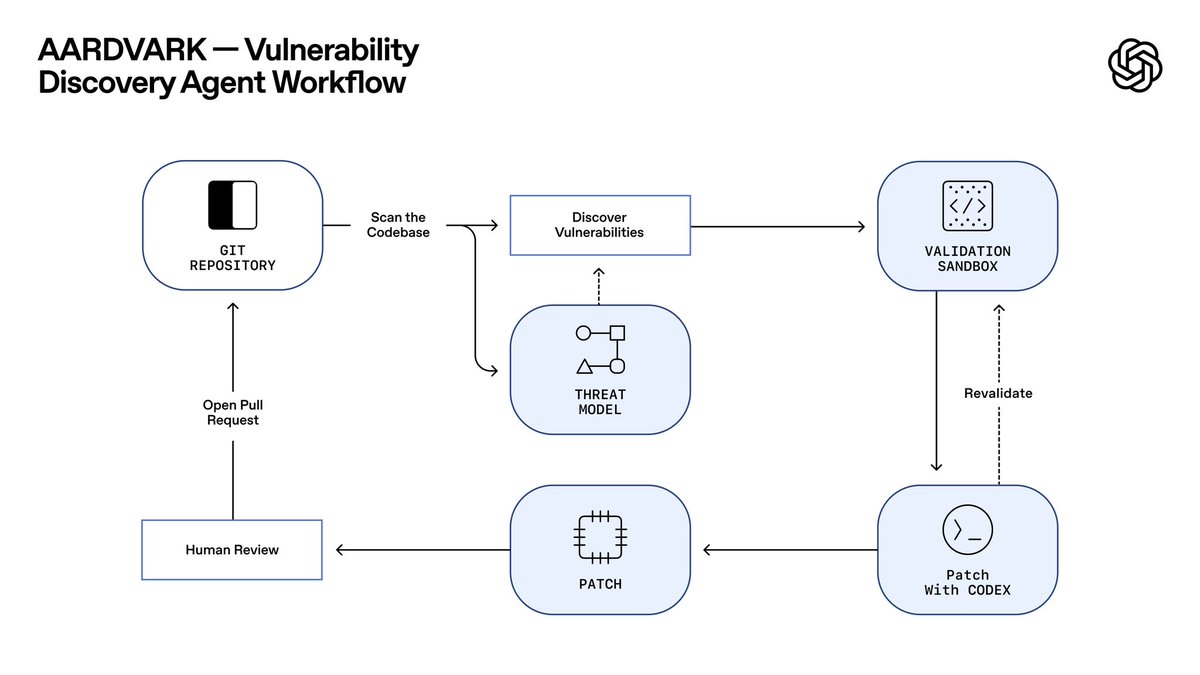

🚨 OpenAI introduces Aardvark, an agentic security researcher powered by GPT 5. Aardvark acts like an autonomous AI security expert that scans codebases, finds vulnerabilities, validates them in a sandbox, and suggests fixes using Codex. Key features - Continuously…

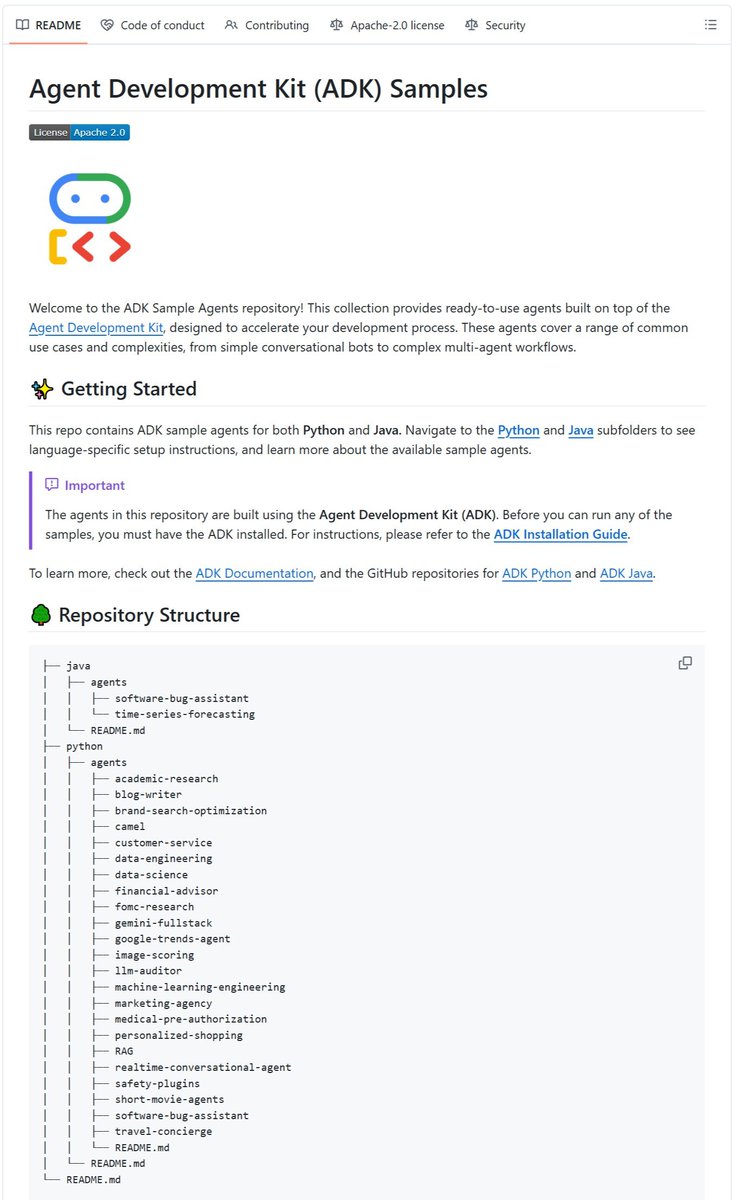

Google literally just made the best way to create AI Agents

🚨 BREAKING: Anthropic has developed a method to test whether AI models can understand their own thoughts. Using a new technique called concept injection, researchers inserted specific neural patterns into Claude models, and asked if they could detect them. Claude Opus 4.1 was…

New Anthropic research: Signs of introspection in LLMs. Can language models recognize their own internal thoughts? Or do they just make up plausible answers when asked about them? We found evidence for genuine—though limited—introspective capabilities in Claude.

CLI tool for drawing AWS infrastructure diagrams from YAML

Introducing Prompt-Oriented Programming 🤯

your AI agent only forgets because you let it. there is a simple technique that everybody needs, but few actually use, and it can improve the agent by 51.1%. here's how you can use workflow memory: you ask your agent to train a simple ML model on your custom CSV data. — it…

Kimi Linear Tech Report is dropped! 🚀 huggingface.co/moonshotai/Kim… Kimi Linear: A novel architecture that outperforms full attention with faster speeds and better performance—ready to serve as a drop-in replacement for full attention, featuring our open-sourced KDA kernels! Kimi…

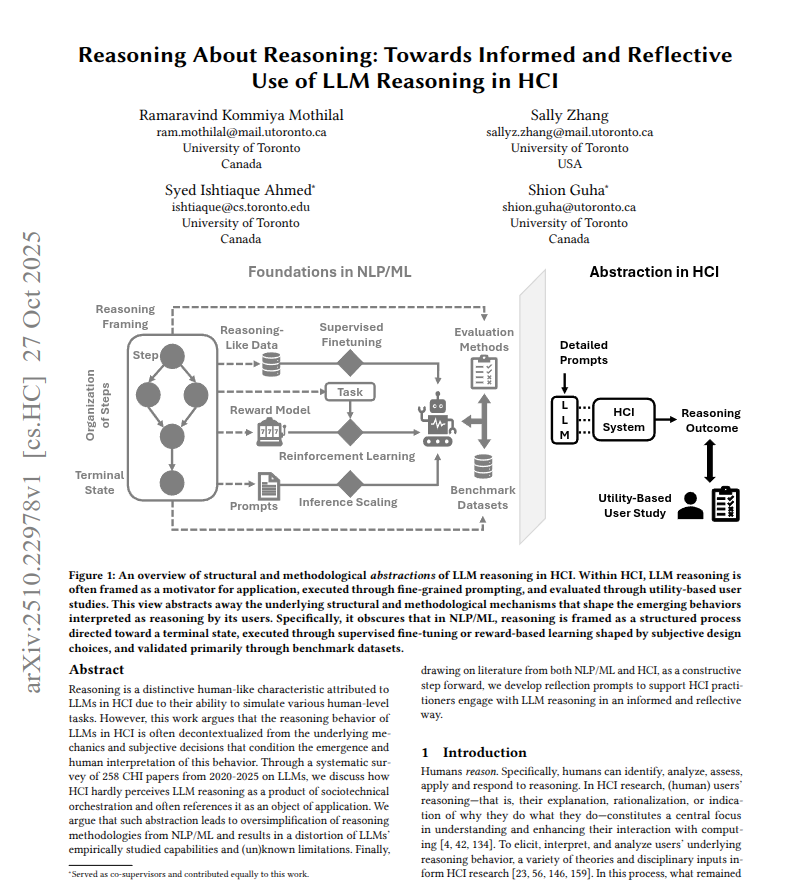

The paper shows how Human-Computer Interaction (HCI) talks about LLM reasoning without looking at what builds it. The authors read 258 CHI papers from 2020 to 2025 to see how reasoning is used. They find many papers use reasoning as a selling point to try an idea with LLMs.…

Not only have I built hundreds of AI agents myself, I've seen other people build thousands of AI agents for every use case under the sun. The people who are the most successful are the ones who don't overcomplicate it - and I want that to be you too. It's easy to think building…

What is "good" reasoning and how to evaluate it? 🚀 We explore a new pipeline to model step-level reasoning, a “Goldilocks principle” that balances free-form CoT and LEAN! Led by my student @yuanhezhang6, in collaboration with Ilja from DeepMind, @jasondeanlee, @CL_Theory

Full prompt in text format /////////////////////////////////////////////////////// You are an expert game theorist who uses first principles thinking. I want to learn game theory from the ground up. Teaching approach: 1. Start with fundamental questions and build concepts from…

Our first official vLLM Meetup is coming to Europe on Nov 6! 🇨🇭 Meet vLLM committers @mgoin_, @tms_jr, Thomas Parnell, + speakers from @RedHat_AI, @IBM, @MistralAI. Topics: vLLM updates, quantization, Mistral+vLLM, hybrid models, distributed inference luma.com/0gls27kb

Open-source Agentic browser; privacy-first alternative to ChatGPT Atlas, Perplexity Comet, Dia.

Now you can fine-tune your local LLMs on your iPhone. Apple presents MeBP, Memory-efficient BackPropagation, a new method that makes on-device fine-tuning practical. Unlike zeroth-order optimization (ZO), which needs 10–100× more steps, MeBP achieves faster convergence and…

Glyph: Scaling Context Windows via Visual-Text Compression Paper: arxiv.org/abs/2510.17800 Weights: huggingface.co/zai-org/Glyph Repo: github.com/thu-coai/Glyph Glyph is a framework for scaling the context length through visual-text compression. It renders long textual sequences into…

Can AI teach itself to reason better and without any human labels? Enter Socratic-Zero, a fully autonomous framework where three agents—Teacher, Solver, and Generator—co-evolve to create high-quality reasoning data from just 100 seed questions. - Teacher crafts harder problems…
