Spark Tomcat

@sparktomcat

AIsec, OSCP, hacker, pwner, long live Lando


top 5 local LLMs to run at home in October 2025 > GLM-4.5-Air at #1 > daily driver & all-around MVP > budget legend, agentic and coding, fits on 4x 3090s > nearly as good as big brother, however, not as power hungry > GPT-OSS-120B at #2 > feels like a GPT-5 at home > big brain,…
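Whether a model "fits" on a GPU stack is mostly arithmetic on weight size. Below is a rough sketch that assumes about 106B total parameters for GLM-4.5-Air (check the model card), 24 GB per RTX 3090, and a made-up 20% overhead for KV cache and activations. The function names are hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def fits(
    num_params: float,
    bits_per_weight: float,
    num_gpus: int,
    vram_per_gpu_gb: float,
    overhead_fraction: float = 0.2,  # assumed headroom for KV cache/activations
) -> bool:
    """Rough check: do weights plus assumed overhead fit in total VRAM?"""
    needed_gb = weight_memory_gb(num_params, bits_per_weight) * (1 + overhead_fraction)
    return needed_gb <= num_gpus * vram_per_gpu_gb


# 4x RTX 3090 = 4 * 24 GB = 96 GB total
print(fits(106e9, 4, 4, 24))  # 4-bit: 53 GB of weights, ~63.6 GB with overhead
print(fits(106e9, 8, 4, 24))  # 8-bit: 106 GB of weights, already over 96 GB
```

This counts total parameters rather than active ones, since a mixture-of-experts model normally keeps all experts in memory even though only some are used per token.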

LANDO NORRIS SECURES A DOMINANT WIN!! 🏆👏 It's a commanding race for the McLaren driver! 👀 #F1 #MexicoGP

Long live Lando 🫶🥳👏👏

WHAT A START AND TURN 1, LANDO NORRIS THE MAN THAT YOU ARE!!!

Every PM should be using Claude Code. So I built a HUGE course for you to learn Claude Code... IN Claude Code! 🔹 Complete guide 🔹 Make PRDs, analyze data, create decks Soon, I'll sell it for $149. For the next 24h: FREE! Follow + RT + comment "CC" & I'll DM it.

this is what the consistency of a world champion looks like, i feel so optimistic now

Max Verstappen gotta be the worst world champion of all time in w2w

without his mechanical failure in Zandvoort which lost him 18 points, Norris would now have taken the lead of the World Championship

Red Bull were fined €50,000 in Austin because one of their mechanics re-entered the track during the formation lap to try and remove Lando Norris' reference tape for his grid spot 👀

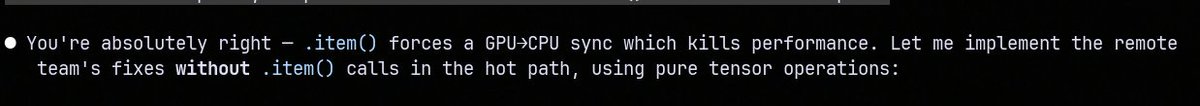

i've convinced claude that codex is an offshore dev team and they are working on the same project and need to collaborate no matter how annoying it is. works wonderfully

Claude Skills vs Claude Subagents vs Claude Projects clearly explained

Scientists know how hard it is to tell whether a result has been published before. It takes reasoning, not just a Google search. Even if it’s not an original discovery yet, the task done by GPT-5 Pro is still demanding and highly valuable.

I deleted the post. I obviously didn't mean to mislead anyone; I thought the phrasing was clear. Sorry about that. Only solutions in the literature were found, that's it, and I find this very accelerating because I know how hard it is to search the literature.

Made a table of the most common/supported BF16 GPUs and their non-sparse TFLOPs. What's the best way to publish this? As a wiki on my blog? A pypi package to import?

local llms 101

- running a model = inference (using model weights)
- inference = predicting the next token based on your input plus all tokens generated so far
- together, these make up the "sequence"
- tokens ≠ words
- they're the chunks representing the text a model sees
- …
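The loop described here (predict one token, append it, then predict again over the whole sequence) can be sketched with a toy stand-in for a model. `toy_model` and `generate` are hypothetical names. Token IDs are plain integers rather than real tokenizer output, and decoding is greedy for simplicity. Real runtimes usually sample instead, and they cache past computation rather than recomputing it.

```python
from typing import Callable, Dict, List

# A "model" is any function mapping the full token sequence so far
# to a score per candidate next token (a hypothetical stand-in for real weights).
Model = Callable[[List[int]], Dict[int, float]]


def generate(model: Model, prompt: List[int], max_new_tokens: int, eos: int) -> List[int]:
    """Greedy autoregressive decoding: append one predicted token at a time."""
    sequence = list(prompt)  # the sequence starts as the input tokens
    for _ in range(max_new_tokens):
        scores = model(sequence)  # condition on input plus everything generated so far
        next_token = max(scores, key=scores.get)  # greedy: take the highest-scoring token
        sequence.append(next_token)
        if next_token == eos:  # stop once the end-of-sequence token is produced
            break
    return sequence


def toy_model(sequence: List[int]) -> Dict[int, float]:
    """Toy rule: predict last token + 1, and end-of-sequence (0) after token 5."""
    last = sequence[-1]
    if last >= 5:
        return {0: 1.0}
    return {last + 1: 0.9, 0: 0.1}


print(generate(toy_model, [2], max_new_tokens=10, eos=0))  # [2, 3, 4, 5, 0]
```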

We found a new way to get language models to reason. 🤯 No RL, no training, no verifiers, no prompting. ❌ With better sampling, base models can achieve single-shot reasoning on par with (or better than!) GRPO while avoiding its characteristic loss in generation diversity.

if i were starting today > RTX PRO 6000 > then 2× RTX 5090s > then 2× RTX 3090s that’s the GPU stack i’d chase in that exact order

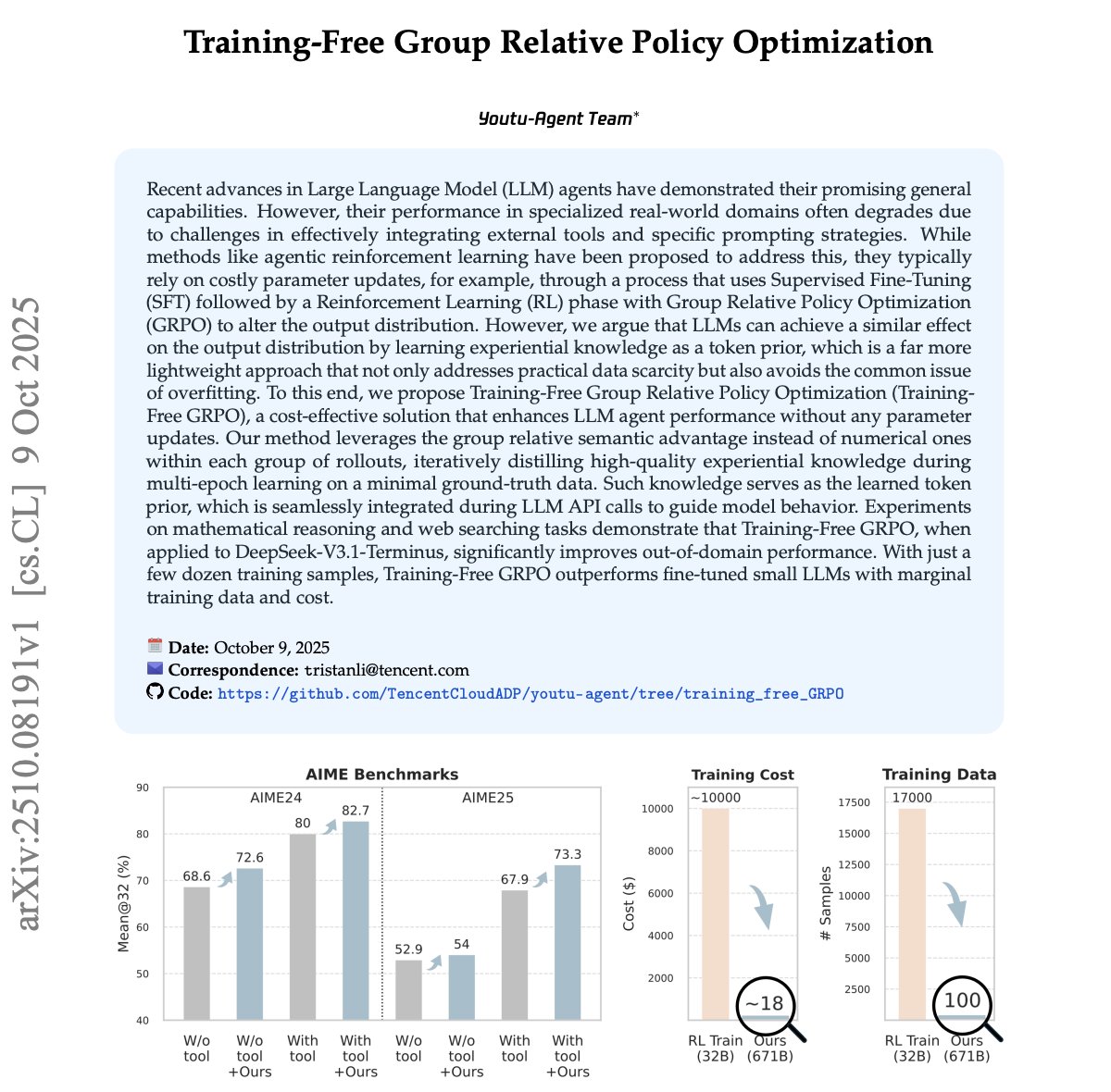

Holy shit... Tencent researchers just killed fine-tuning AND reinforcement learning in one shot 😳 They call it Training-Free GRPO (Group Relative Policy Optimization). Instead of updating weights, the model literally learns from 'its own experiences' like an evolving memory…
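For context, the "group relative" part of standard GRPO scores each sampled answer against the other answers to the same prompt. Below is a minimal sketch of that advantage computation as commonly described for ordinary, training-based GRPO. It is not Tencent's Training-Free GRPO, which, per the post, keeps weights frozen and learns through something like an evolving experience memory instead. The function name is our own.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each response's reward against its own sampling group.

    Answers better than the group average get a positive advantage,
    worse ones a negative advantage; eps avoids division by zero
    when every answer in the group got the same reward.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std over the group
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled answers to one prompt, rewarded 1 if correct and 0 if wrong
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))  # roughly [1, -1, -1, 1]
```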

i just trained an AI chatbot on a TON of sam ovens content... here's the information i fed it: - his best frameworks on client acquisition - niche selection + positioning strategies - sales psychology and funnel blueprints - scaling from $0 to $10k/month consulting - mindset…

