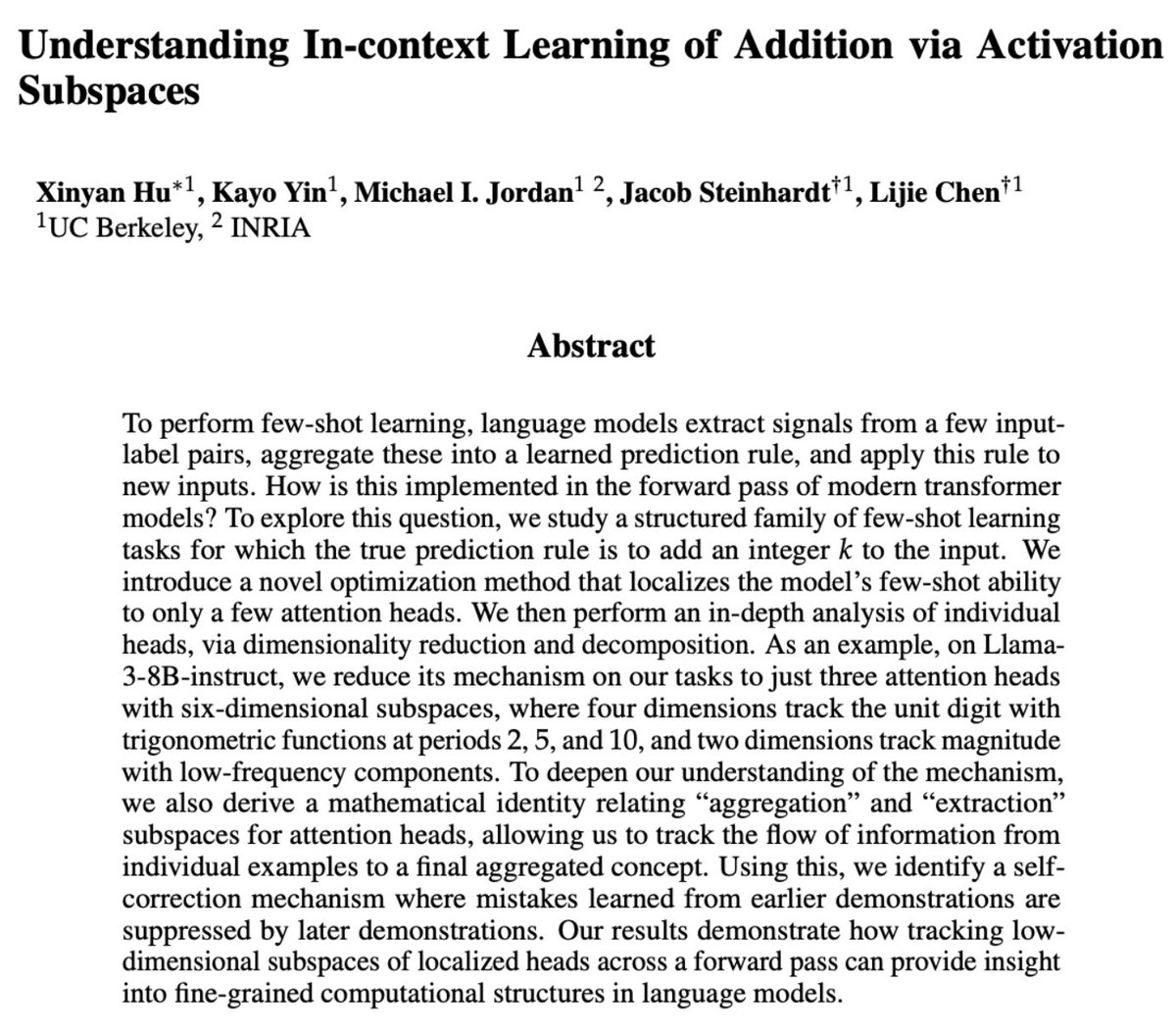

3→5, 4→6, 9→11, 7→? LLMs solve this via In-Context Learning (ICL), but how is ICL represented and transmitted in LLMs? We build new tools identifying “extractor” and “aggregator” subspaces for ICL, and use them to understand ICL addition tasks like the one above. Come to…

This was so cool to be a part of. Jack led an incredible effort to quickly analyze the internals of a new model, as versions were coming in, to assess alignment. Research at the speed of model development.

Prior to the release of Claude Sonnet 4.5, we conducted a white-box audit of the model, applying interpretability techniques to “read the model’s mind” in order to validate its reliability and alignment. This was the first such audit on a frontier LLM, to our knowledge. (1/15)

We asked every version of Claude to make a clone of Claude(dot)ai, including today’s Sonnet 4.5… see what happened in the video

We’re hiring someone to run the Anthropic Fellows Program! Our research collaborations have led to some of our best safety research and hires. We’re looking for an exceptional ops generalist, TPM, or research/eng manager to help us significantly scale and improve our collabs 🧵

Arc Institute trained their foundation model Evo 2 on DNA from all domains of life. What has it learned about the natural world? Our new research finds that it represents the tree of life, spanning thousands of species, as a curved manifold in its neuronal activations. (1/8)

Join Anthropic interpretability researchers @thebasepoint, @mlpowered, and @Jack_W_Lindsey as they discuss looking into the mind of an AI model - and why it matters:

We’re running another round of the Anthropic Fellows program. If you're an engineer or researcher with a strong coding or technical background, you can apply to receive funding, compute, and mentorship from Anthropic, beginning this October. There'll be around 32 places.

New research with coauthors at @Anthropic, @GoogleDeepMind, @AiEleuther, and @decode_research! We expand on and open-source Anthropic’s foundational circuit-tracing work. Brief highlights in thread: (1/7)
