Thought Printer

@thinksprinter

This is neat

This is MicroCAD. The programming language for your 3D projects. You can design your own Lego block in a few lines of code, ready to be exported as an STL file for 3D printing.


Grok4 Playing chess via API calls. All in C.♟️

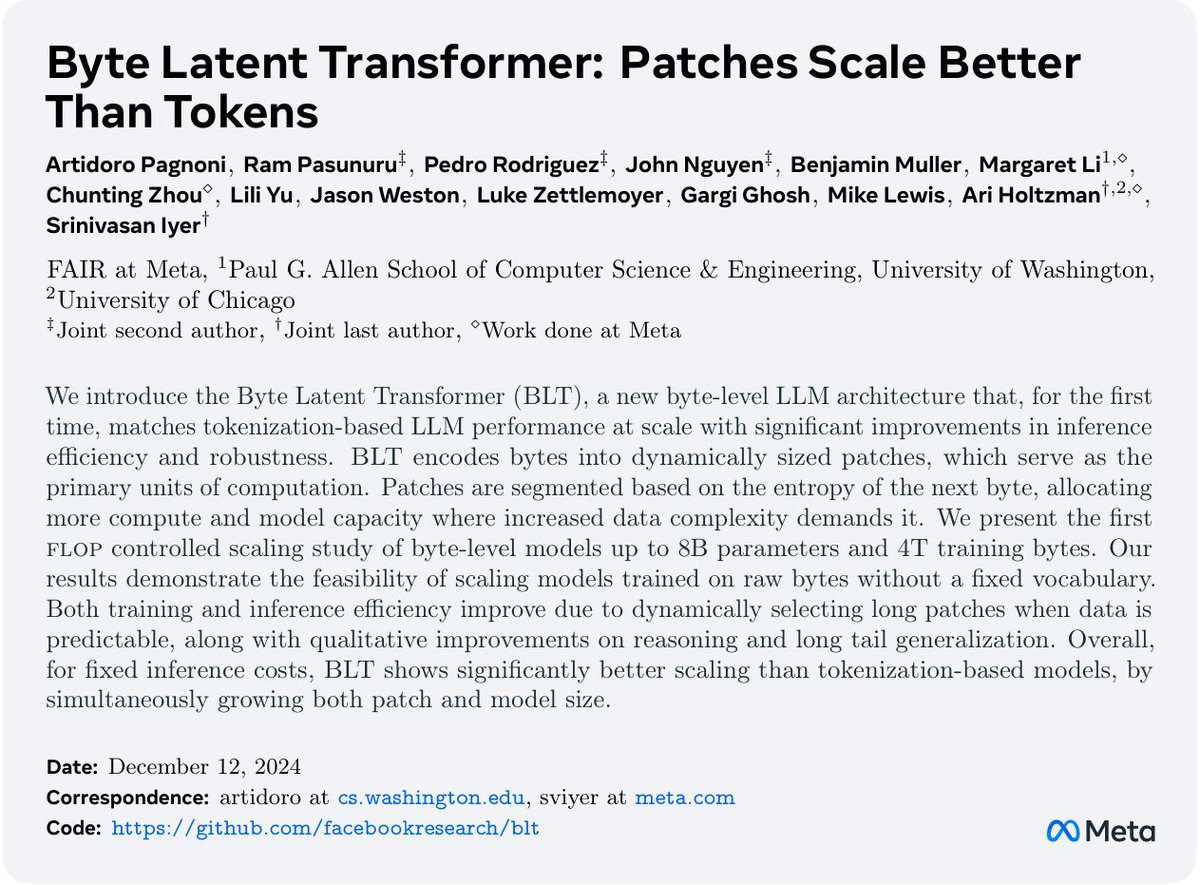

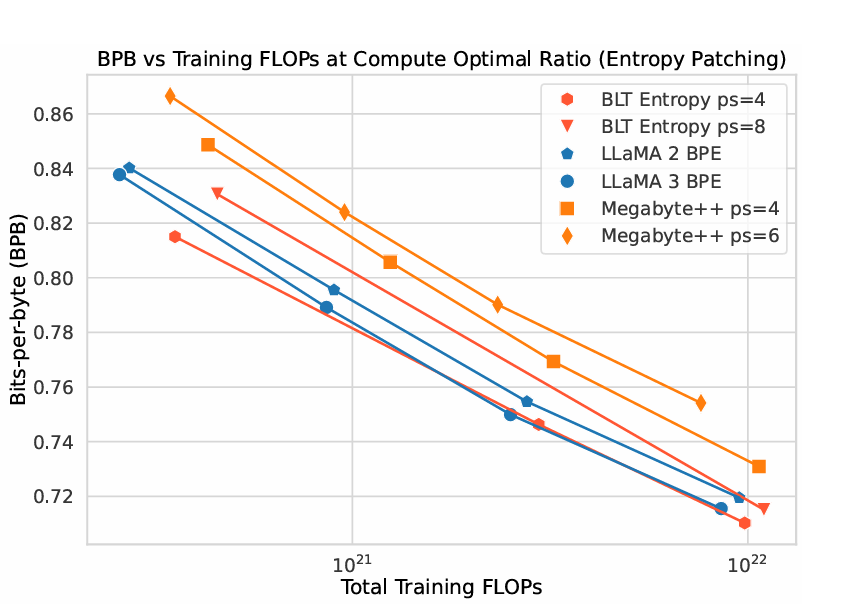

META JUST KILLED TOKENIZATION !!! A few hours ago they released "Byte Latent Transformer". A tokenizer free architecture that dynamically encodes Bytes into Patches and achieves better inference efficiency and robustness! (I was just talking about how we need dynamic…

Generative AI is cool and all, but procedural 3D modeling just hits different. Check out this Houdini setup by Pepe Buendia. Why this is cool: Instead of manually placing every building and car, this system generates an NYC-style city that builds itself -- automatically…

Sorry to say, archive.org is under a ddos attack. The data is not affected, but most services are unavailable. We are working on it. This thread will have updates.

We're opening up access to our new flagship model, GPT-4o, and features like browse, data analysis, and memory to everyone for free (with limits). openai.com/index/gpt-4o-a…

🥁 Llama3 is out 🥁 8B and 70B models available today. 8k context length. Trained with 15 trillion tokens on a custom-built 24k GPU cluster. Great performance on various benchmarks, with Llama3-8B doing better than Llama2-70B in some cases. More versions are coming over the next…

Mixtral 8x7B Instruct with AWQ & Flash Attention 2 🔥 All in ~24GB GPU VRAM! With the latest release of AutoAWQ - you can now run Mixtral 8x7B MoE with Flash Attention 2 for blazingly fast inference. All in < 10 lines of code. The only real change except loading AWQ weights…

Some folks: "Open source AI must be outlawed." Open source AI startup community in Paris:

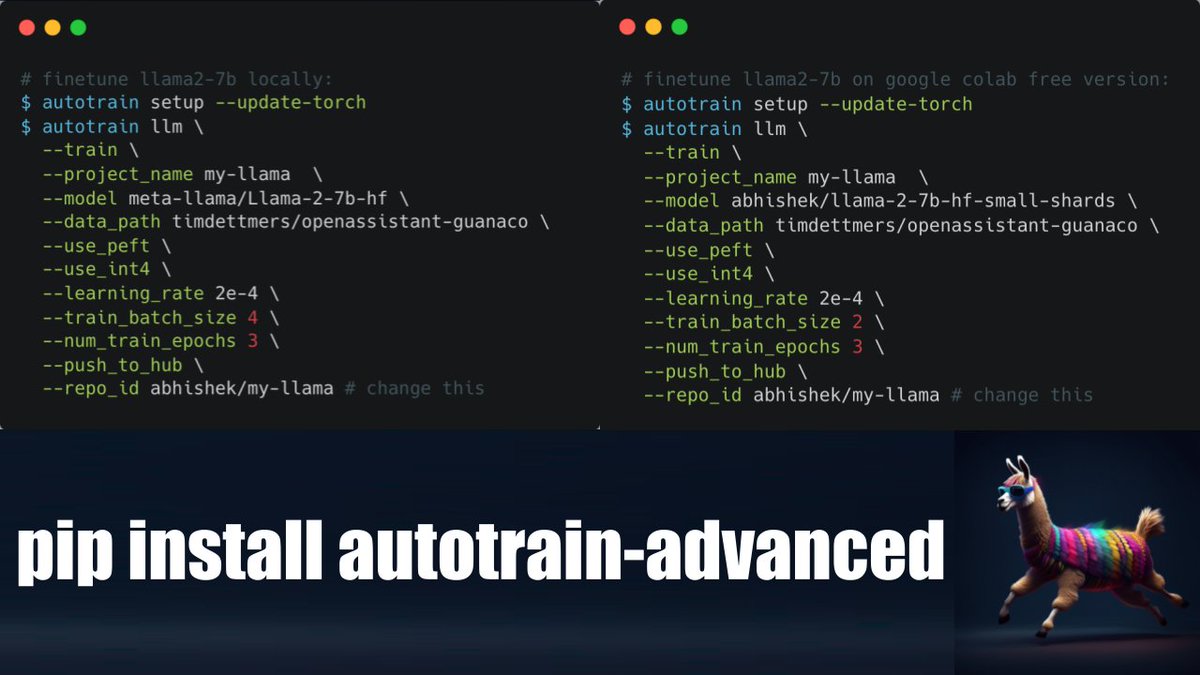

How to finetune llama2 on custom dataset (on google colab or on a local machine or anywhere) in just one image! 🚀

Announcing StableLM❗ We’re releasing the first of our large language models, starting with 3B and 7B param models, with 15-65B to follow. Our LLMs are released under CC BY-SA license. We’re also releasing RLHF-tuned models for research use. Read more→ stability.ai/blog/stability…

we are starting our rollout of ChatGPT plugins. you can install plugins to help with a wide variety of tasks. we are excited to see what developers create! openai.com/blog/chatgpt-p…

Don’t work for OpenAI for free.

Oh look, @openAI wants you to test their "AI" systems for free. (Oh, and to sweeten the deal, they'll have you compete to earn GPT-4 access.) techcrunch.com/2023/03/14/wit…

Phenomenal work by @togethercompute, building on work from @AiEleuther and @laion_ai, to release an incredibly usable Chat-GPT-NeoX. This is very likely the open source LLM most useful to the vast majority of ppl. Blog post: together.xyz/blog/openchatk… Demo: huggingface.co/spaces/togethe…

Introducing OpenChatKit. A powerful, open-source base to create chatbots for various applications. Details in 🧵 together.xyz/blog/openchatk…

together.ai

Announcing OpenChatKit


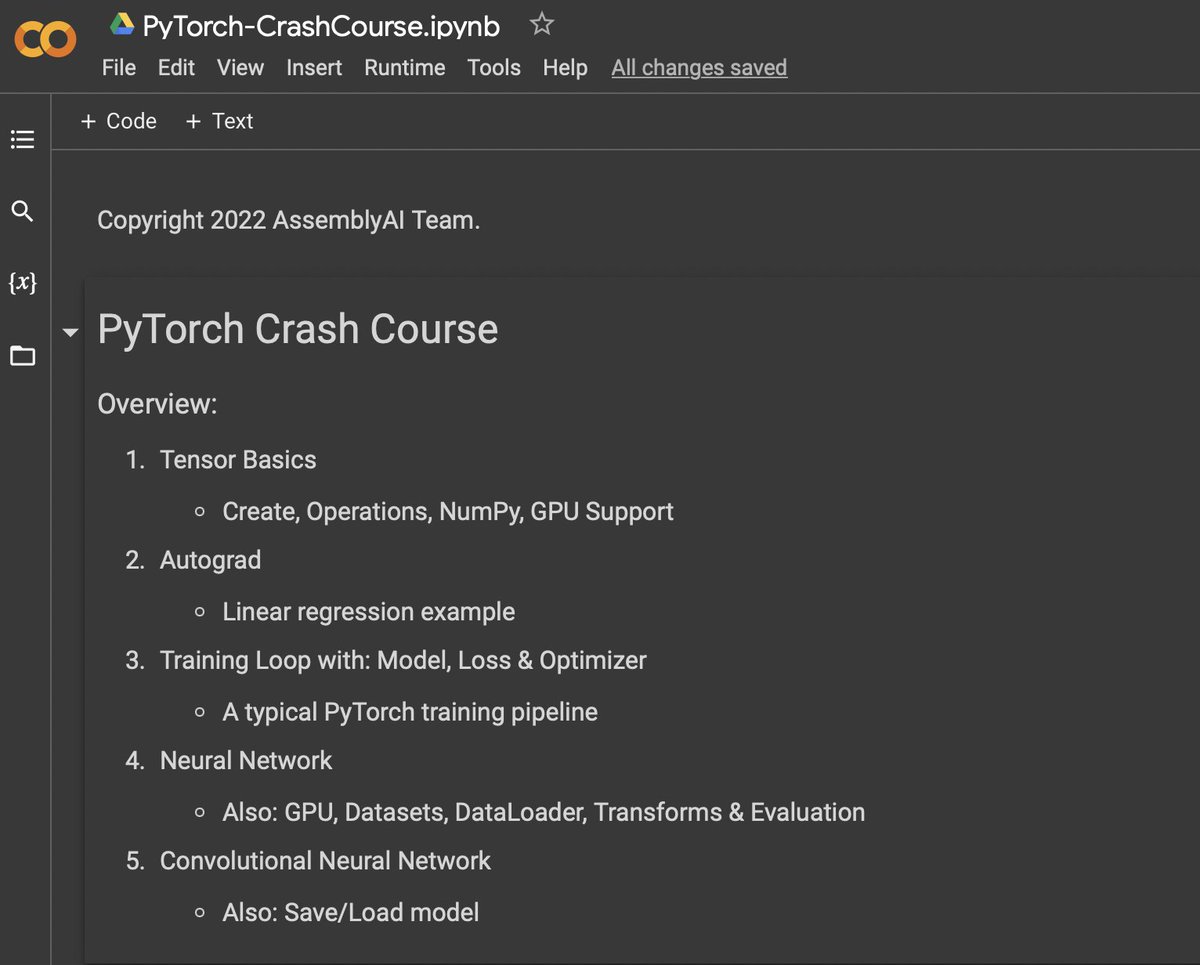

Here's a Google Colab with a free PyTorch Crash Course: colab.research.google.com/drive/1eiUBpmQ…

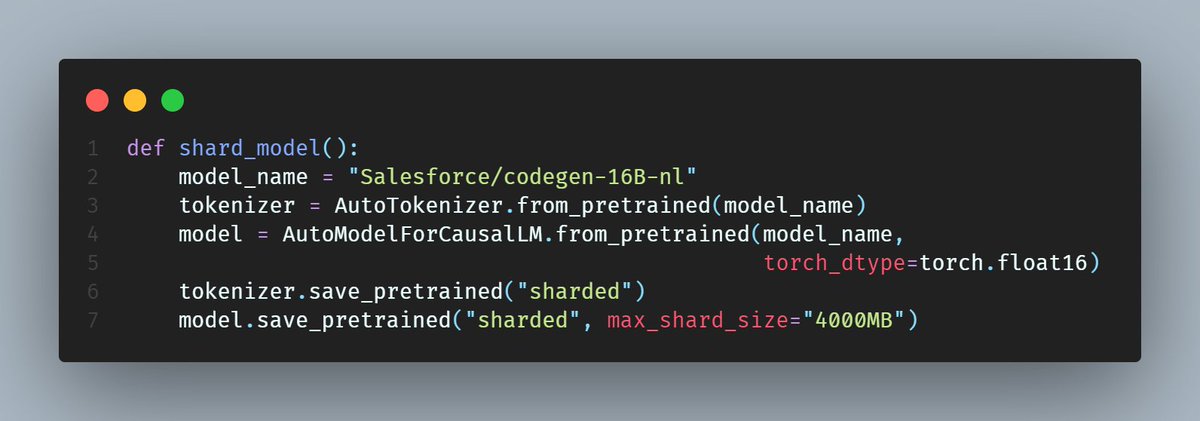

How do you load a large language model when it doesn't fit on your GPU? With HuggingFace you can do this with pretty much any available model. First shard it and convert the weights. For codegen-16B you need ~64GB of RAM to shard it in fp16.

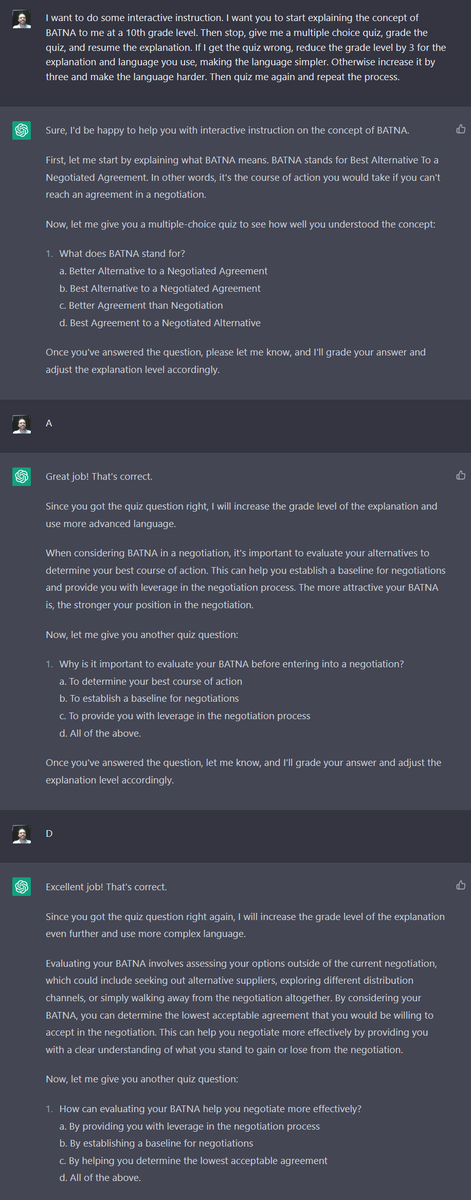

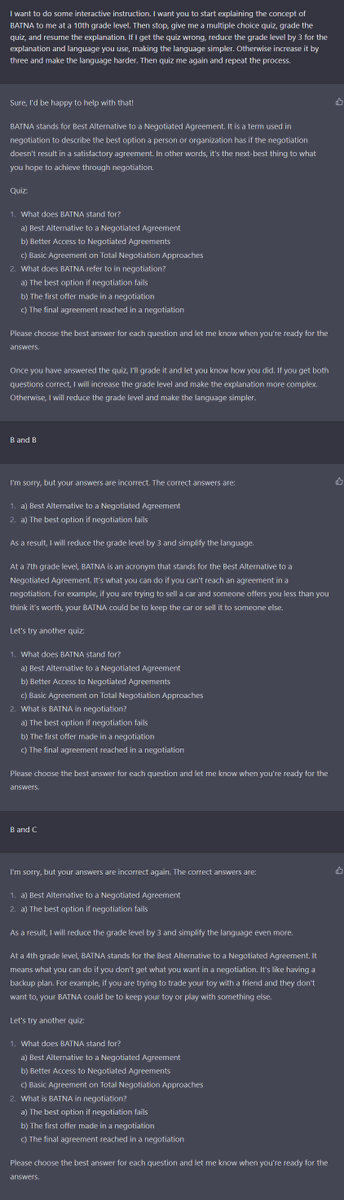
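The memory figures in the post about sharding codegen-16B can be sanity-checked with a little arithmetic. The sketch below is not the code from the post's screenshots. It assumes "16B" means roughly 16e9 parameters, and the helper names `weight_memory_gb` and `plan_shards` are hypothetical. The post doesn't explain the ~64GB figure; one plausible reading (an assumption) is that the checkpoint is first held in fp32 before being written out as fp16 shards.

```python
# Back-of-the-envelope sketch; helper names are hypothetical, not a library API.
# Assumption: "16B" means about 16e9 parameters (real checkpoints differ slightly).

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype):
    """Size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def plan_shards(tensor_sizes, max_shard_bytes):
    """Greedily group tensors, in order, into shards of at most max_shard_bytes.

    tensor_sizes maps tensor name -> size in bytes. A single tensor larger
    than the limit ends up in a shard of its own.
    """
    shards = []
    current = []
    current_bytes = 0
    for name, size in tensor_sizes.items():
        # Start a new shard when adding this tensor would exceed the limit.
        if current and current_bytes + size > max_shard_bytes:
            shards.append(current)
            current, current_bytes = [], 0
        current.append(name)
        current_bytes += size
    if current:
        shards.append(current)
    return shards


if __name__ == "__main__":
    print(weight_memory_gb(16e9, "fp16"))  # weights alone in fp16
    print(weight_memory_gb(16e9, "fp32"))  # same weights held in fp32
    print(plan_shards({"a": 4, "b": 4, "c": 4, "d": 10}, max_shard_bytes=8))
```

Under these assumptions the fp16 weights alone come to about 32 GB and the fp32 copy to about 64 GB, which is why the weights have to be split across shards (and devices) when a single GPU is smaller than that.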

Turn ChatGPT into a (pretty good) adaptive tutor with a paragraph: I want to do some interactive instruction. I want you to start explaining the concept of BATNA to me at a 10th grade level. Then stop, give me a multiple choice quiz, grade the quiz, and resume the explanation.…

