Akash Srivastava

@variational_i

Director, Core AI, IBM. Chief Architect http://instructLAB.ai . Founder, Red Hat AI Innovation Team. PI @MITIBMLab. ❤️ Density Ratios.

What does it take to scale AI beyond the lab? At #RedHatSummit, @ishapuri101 and I spoke with Red Hat CEO Matt Hicks & CTO Chris Wright on inference-time scaling, open infra (LLMD), and making AI affordable for enterprise. 🎧 youtu.be/mj1dwrPfvb4 #NoMathAI @RedHat_AI

Inference Time Scaling for Enterprises | No Math AI

🚀 How is generative AI transforming the way we design cars, planes, and entire systems? In Ep 2 of No Math AI, @ishapuri101 and I chat with Dr. @_faezahmed (@MIT DeCoDE Lab) about how AI boosts creativity, cuts design time, and works with engineers—not against them.

How is generative AI reshaping engineering design? In Episode 2 of No Math AI, hosts Dr. Akash Srivastava (@variational_i) and MIT PhD student Isha Puri (@ishapuri101) sit down with Dr. Faez Ahmed (@_faezahmed) from MIT DeCoDE Lab to explore just that. 👇

SQuat: KV-Cache for making reasoning models go 🚀 📄paper: lnkd.in/emKhAVZu 💻 code: lnkd.in/e8TJ7N3R From my awesome collaborators @RedHat_AI

[1/x] 🚀 We're excited to share our latest work on improving inference-time efficiency for LLMs through KV cache quantization---a key step toward making long-context reasoning more scalable and memory-efficient.

![HW_HaoWang's tweet image](https://pbs.twimg.com/media/Gnx9W5FWIAA4vbt.jpg)

Excited to share our preliminary work on customizing reasoning models using Red Hat AI Innovation’s Synthetic Data Generation (SDG) package! 📄 Turn your documents into training data for LLMs. 🧵👇

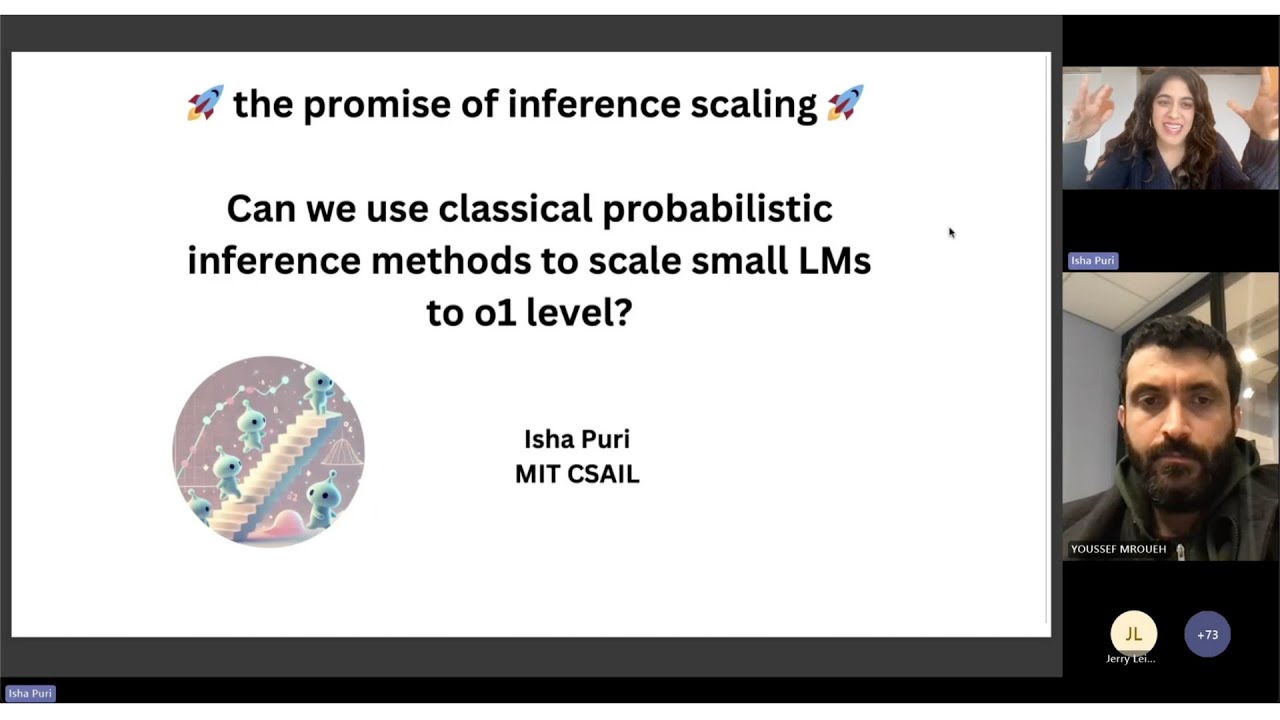

had a great time giving a talk about probabilistic inference scaling and the power of small models at the IBM Research ML Seminar Series - the best talks end with tons of questions, and it was great to see everyone so engaged : ) youtube.com/watch?v=--3rsQ…

Scaling Small LLMs to o1 level! Probabilistic Methods for Inference...

Come along and help us build reasoning in small LLMs

🚀 Exploring LLM reasoning—live! We, the @RedHat AI Innovation Team, are working on reproducing R1-like reasoning in small LLMs without distilling R1 or its derivatives. We’re documenting our journey in real-time: 🔗 Follow along: red-hat-ai-innovation-team.github.io/posts/r1-like-…

Excited to share our latest work with @ishapuri101 et al.! 🚀 We introduce a probabilistic inference approach for inference-time scaling of LLMs using particle-based Monte Carlo methods—achieving 4–16x better scaling on math reasoning tasks and O1-level performance on MATH500.

[1/x] can we scale small, open LMs to o1 level? Using classical probabilistic inference methods, YES! Joint @MIT_CSAIL / @RedHat AI Innovation Team work introduces a particle filtering approach to scaling inference w/o any training! check out …abilistic-inference-scaling.github.io

![ishapuri101's tweet image](https://pbs.twimg.com/media/GjERBNebIAE6IaO.jpg)

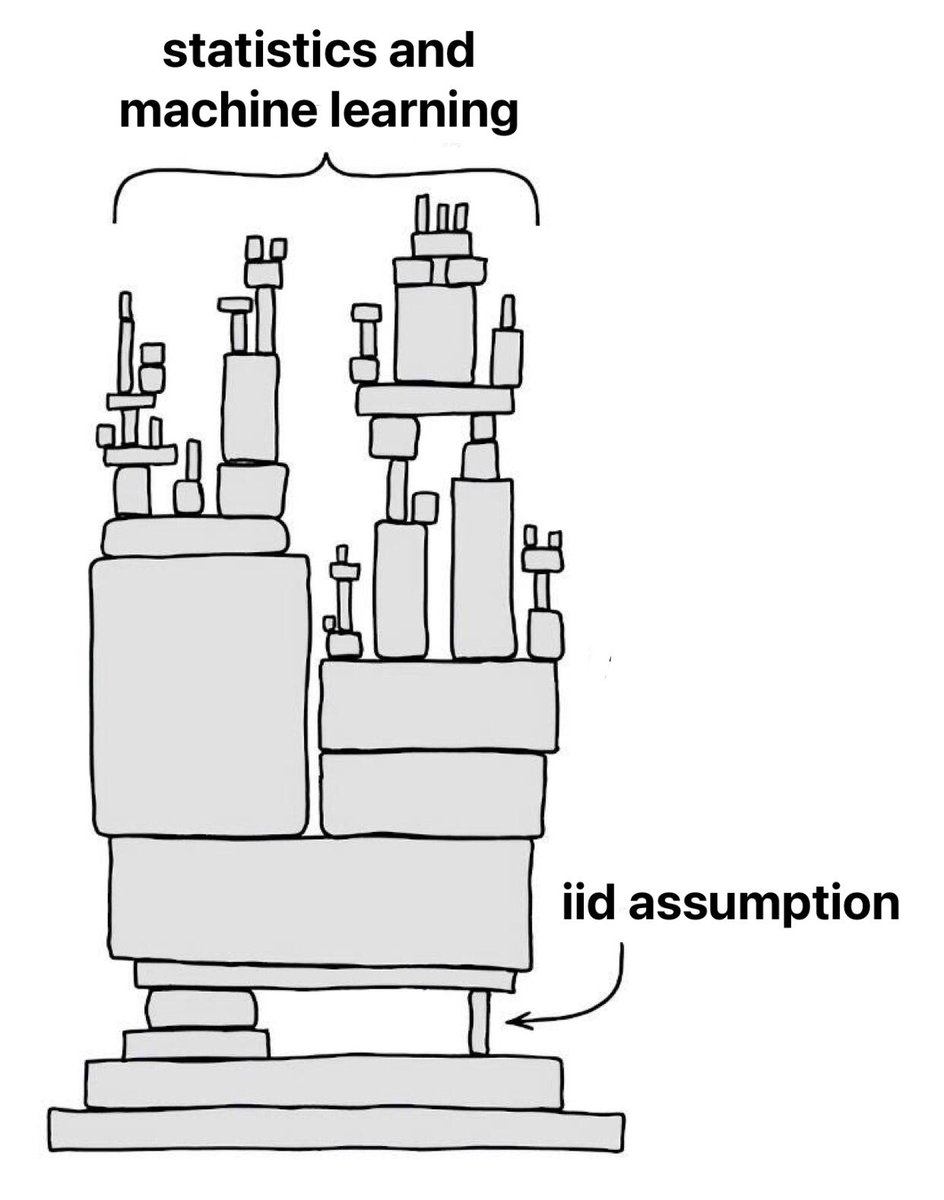

🧩 Why do task vectors exist in pretrained LLMs? Our new research uncovers how transformers form internal abstractions and the mechanisms behind in-context learning(ICL).

Neural activity is correlated among animals performing the same task and across sequential trials. Led by @zhang_yizi and @hl3616, we develop a reduced-rank model that exploits shared structure across animals to improve neural decoding. biorxiv.org/content/10.110…

What will a foundation model for the brain look like? We argue that it must be able to solve a diverse set of tasks across multiple brain regions and animals. Check out our preprint where we introduce a multi-region, multi-animal, multi-task model (MtM): arxiv.org/abs/2407.14668

🚀 Stronger, simpler, and better! 🚀 Introducing Value Augmented Sampling (VAS) - our new algorithm for LLM alignment and personalization that outperforms existing methods!

Excited to give a talk on our hottest, newest work “Value Augmented Sampling for Language Model Alignment and Personalization” at 2:30 pm in Halle A3 at the #ICLR2024 Reliable and Responsible Foundation Models Workshop 🥳🥳

📢Workshop on Reliable and Responsible Foundation Models will happen today (8:50am - 5:00pm). Join us at #ICLR2024 room Halle A 3 for a wonderful lineup of speakers, along with 63 amazing posters and 4 contributed talks! Schedule: iclr-r2fm.github.io/#program.

Attending #ICLR2024, interested in continual learning, and like probabilistic modeling? Lazar from the @MITIBMLab will be presenting our latest work, which takes a probabilistic approach to modular continual learning, on Tuesday, 7 May, Halle B #222 (iclr.cc/virtual/2024/p…).

I’ll be presenting our #ICLR2024 paper on a probabilistic approach to scaling modular continual learning algorithms while achieving different types of knowledge transfer. (arxiv.org/abs/2306.06545, in collaboration with @variational_i @swarat @RandomlyWalking ). A tldr (1/8):

Check out our work titled "From Automation to Augmentation: Redefining Engineering Design and Manufacturing in the Age of NextGen-AI", where we highlight the requirements for NextGenAI suitable for design, engineering, and manufacturing. mit-genai.pubpub.org/pub/9s6690gd/r…

Instead of continuing to emphasize automation, a human-centric approach to the next generation of #AI technologies in #manufacturing could enhance workers' skills and boost productivity. mit-genai.pubpub.org/pub/9s6690gd/r… @AustinLentsch @DAcemogluMIT @baselinescene @_faezahmed @MITMechE

New work from @MITIBMLab researchers on large scale alignment of LLMs. Check out the models at HF huggingface.co/ibm/merlinite-…

Hey, we did a thing: "LAB: Large-scale Alignment for chatBots"—a new synthetic data-driven LLM alignment method that yields great results without using large-scale human or proprietary model data. arxiv.org/abs/2403.01081 models: huggingface.co/ibm/labradorit…, huggingface.co/ibm/merlinite-…

New work on automated red-teaming in LLMs using curiosity-driven exploration! #iclr24

(1/4) 🎉 Excited to share our ICLR'24 paper on "Curiosity-driven Red-teaming for Large Language Models"! We bridge curiosity-driven exploration in reinforcement learning (RL) with red-teaming, introducing the Curiosity-driven Red-teaming (CRT) method. #ICLR24 #AI #LLMSecurity

❤️ #NeurIPS2023. After 4 years, met my adviser and my adviser's adviser at the same time.

Uniform sampling hampers offline RL. How to fix it? Check our paper at #NeurIPS2023. Time: Wed 13 Dec 5 p.m. CST — 7 p.m. CST Location: Great Hall & Hall B1+B2 (level 1) #1908 Paper: openreview.net/forum?id=TW99H… Code: github.com/Improbable-AI/…

Interested in learning how generative models can help with constrained design generation and topology optimization? Come to poster 540, 10:45am session today neurips.cc/virtual/2023/p… where @georgosgeorgos will be presenting our work on aligning TO with diffusion models #NeurIPS23
