#aibenchmarks risultati di ricerca

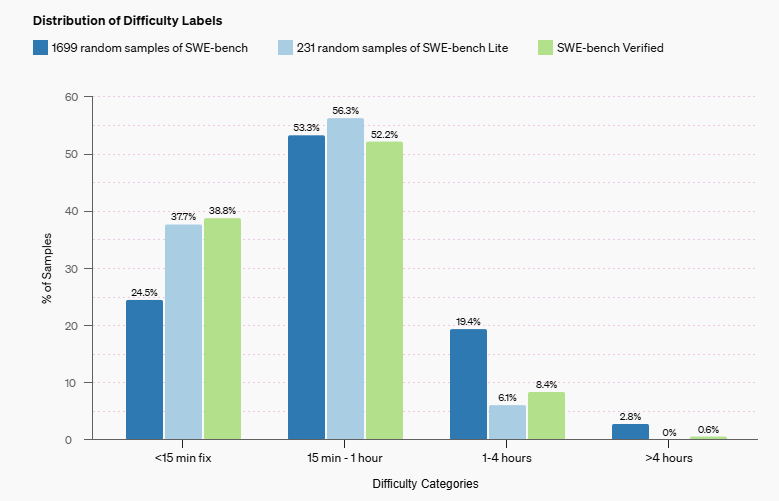

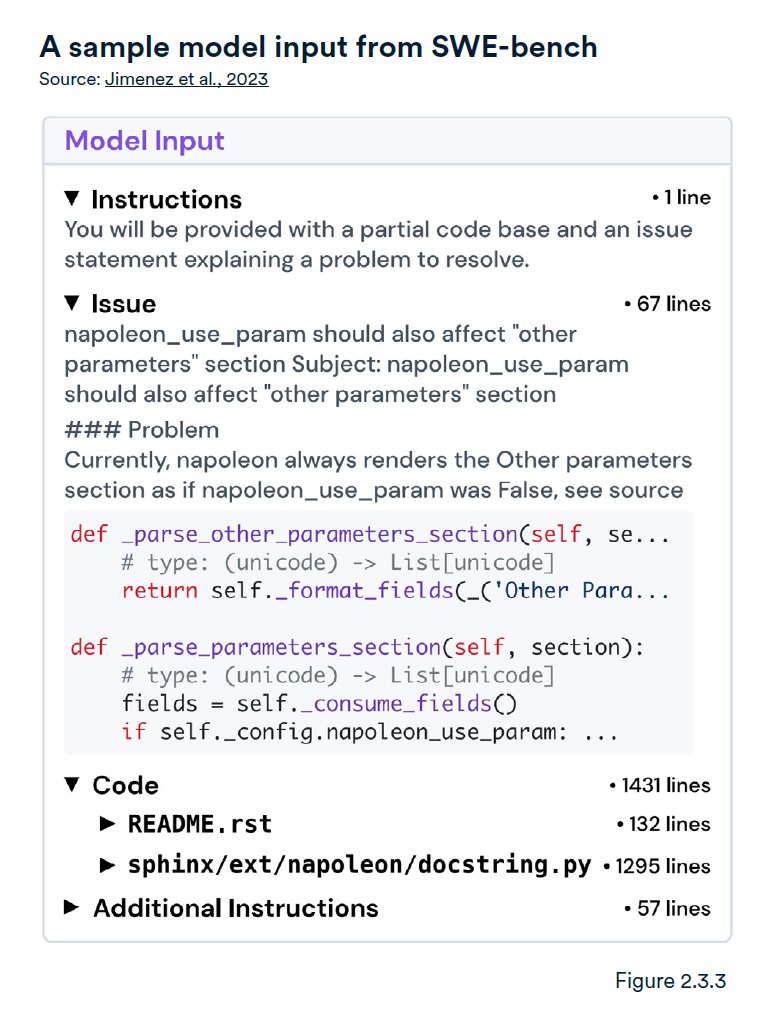

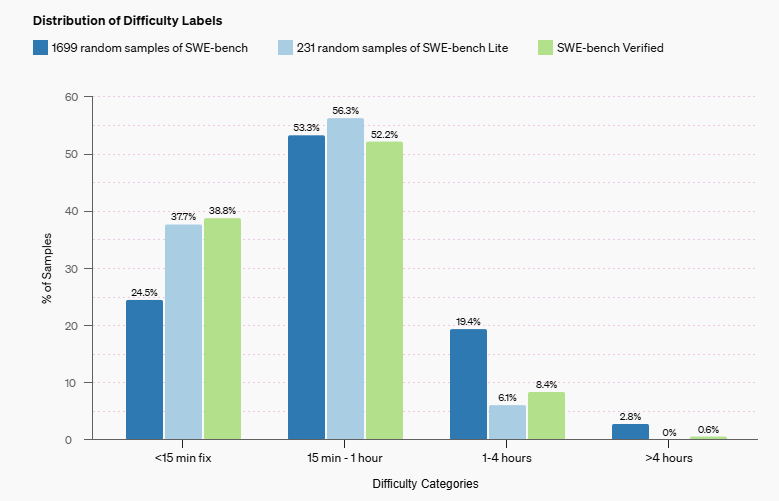

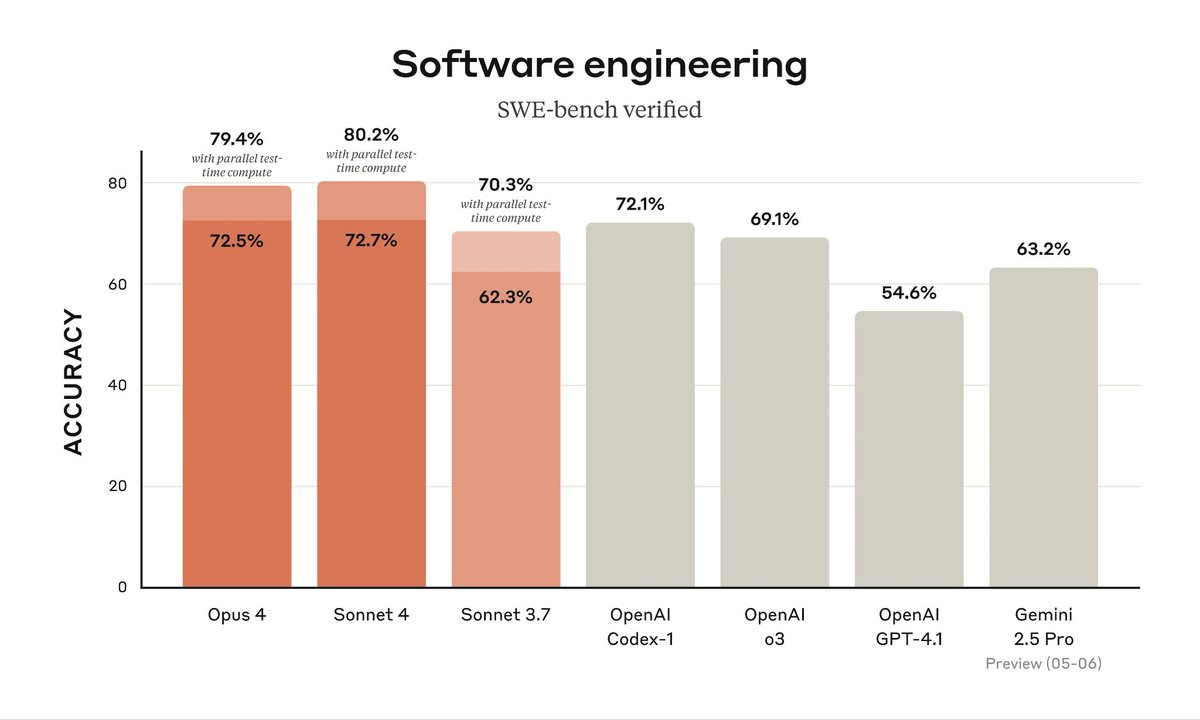

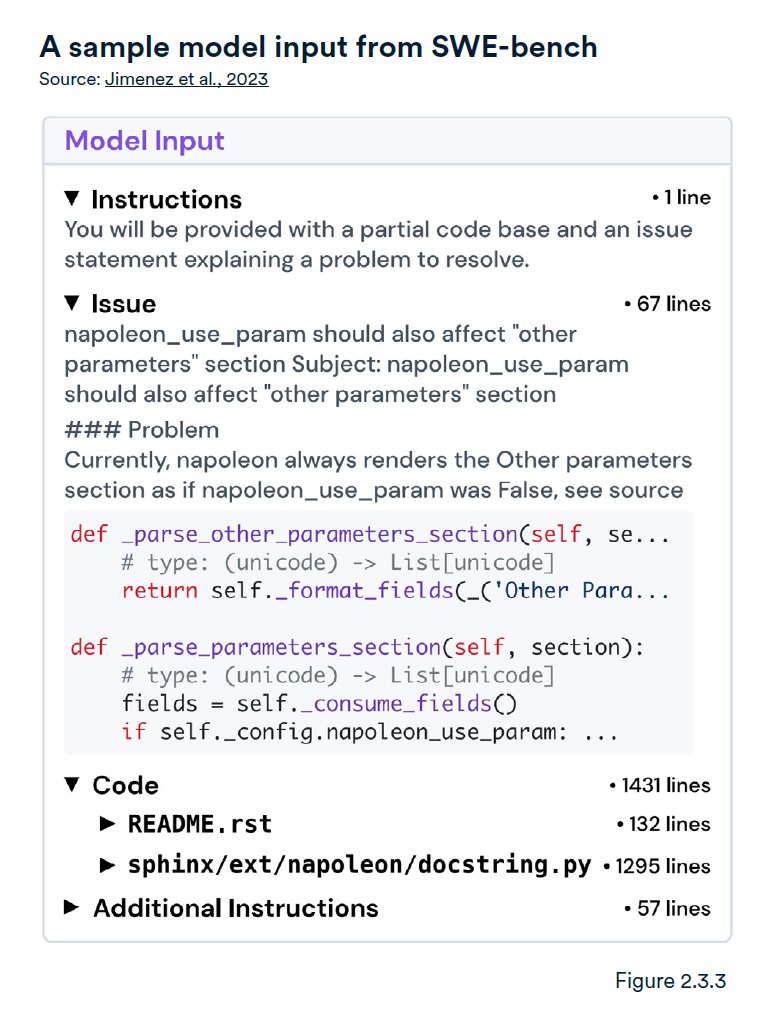

OpenAI launches SWE-bench Verified, a human-validated subset of the popular SWE-bench AI benchmark for evaluating software engineering abilities. GPT-4's score more than doubles! 📈How will this impact AI development in software engineering? #AIBenchmarks #SoftwareEngineering

"You need to have these very hard tasks which produce undeniable evidence. And that's how the field is making progress today, because we have these hard benchmarks, which represent true progress. And this is why we're able to avoid endless debate." #AIbenchmarks -Ilya Sutskever

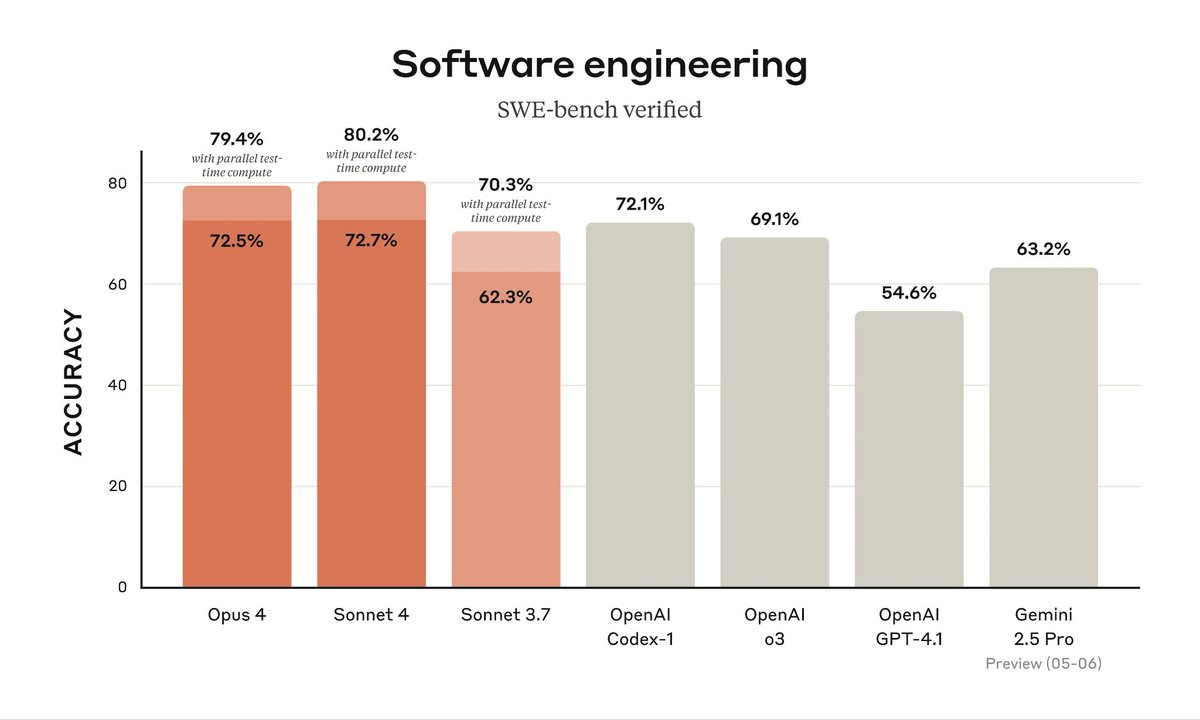

Claude 4 is here—and it's a powerhouse. Outperforms GPT-4 and Gemini 2.5 in reasoning, coding, and long-context tasks. Fast, smart, and ready. #Claude4 #AIbenchmarks

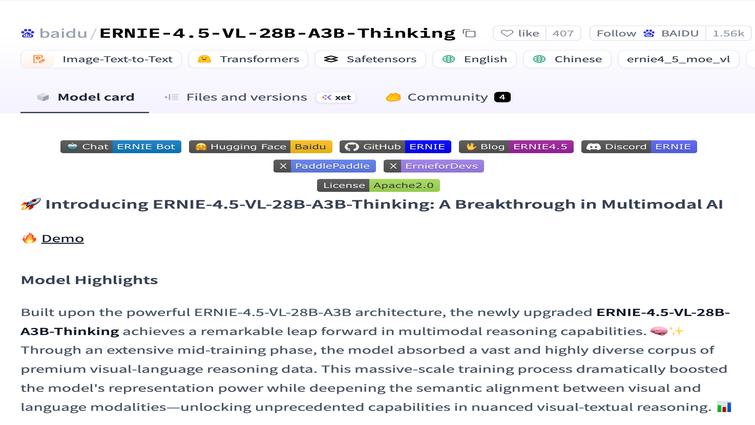

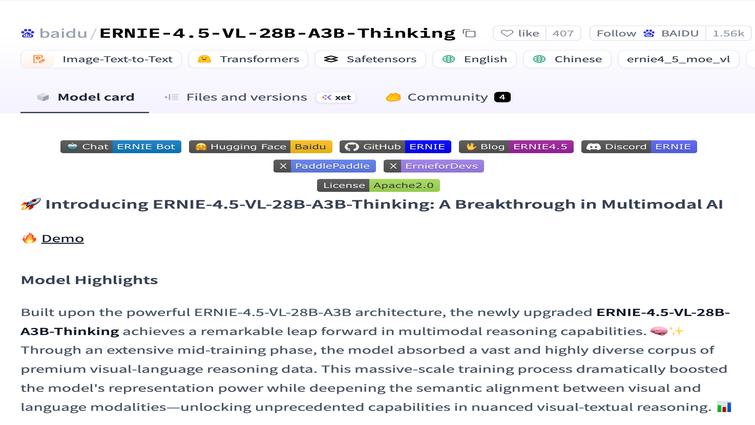

ERNIE is beating GPT and Gemini on key benchmarks While everyone obsesses over OpenAI and Google, Baidu quietly built something better. And most people have no idea. #ERNIE #BaiduAI #AIBenchmarks #GPTvsERNIE #GeminiComparison

AI Benchmarks RIGGED? Shocking Truth Exposed! (ChatGPT, Claude) #AIBenchmarks #ChatGPT #ClaudeAI #OpenAI #GoogleAI #AIModelEvaluation #ArtificialIntelligence #DataScience #MachineLearning #AIFraud

SWE-bench, launched in Oct 2023, challenges AI coding with 2,294 real-world software engineering problems, raising the bar for AI proficiency. #AIBenchmarks #CodingAI @Stanford

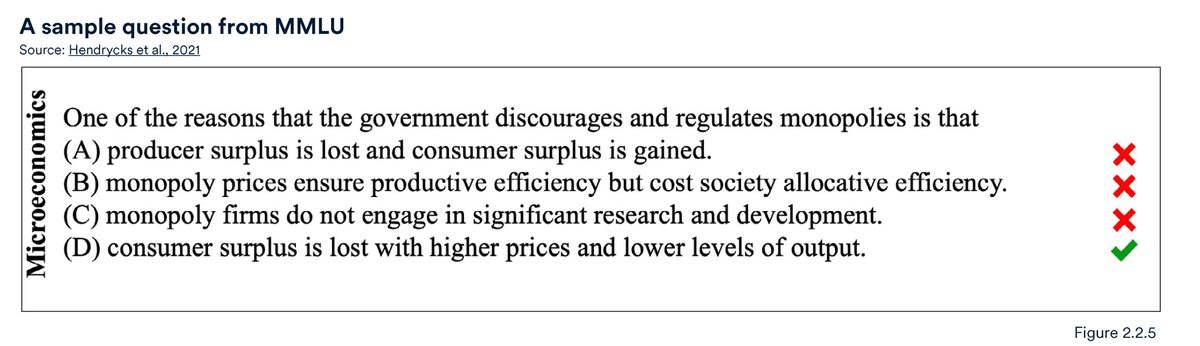

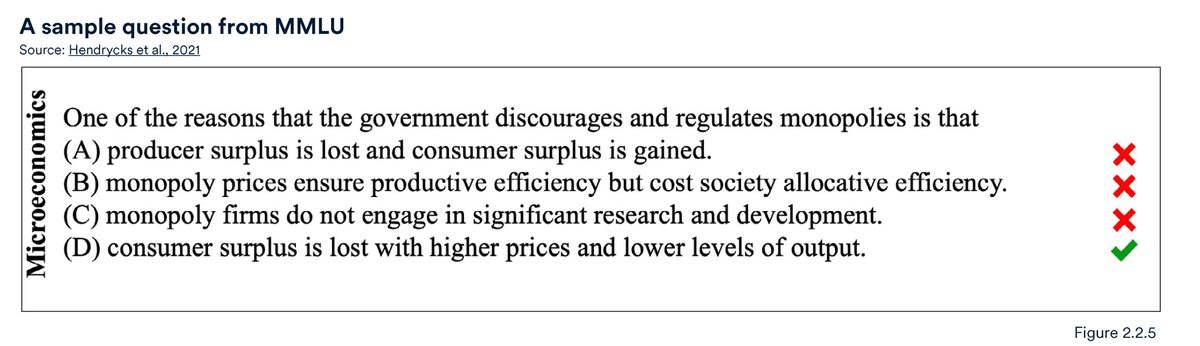

MMLU evaluates LLM performance across 57 subjects in zero-shot or few-shot scenarios, with GPT-4 and Gemini Ultra achieving top scores. #AIbenchmarks #MMLU @Stanford

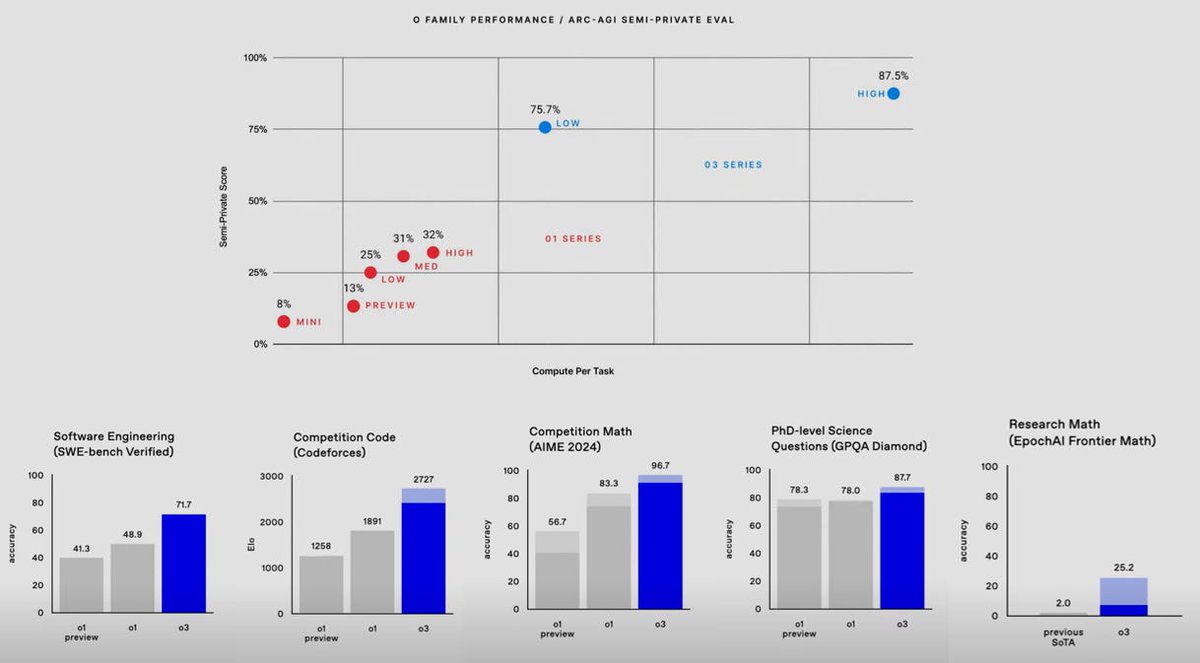

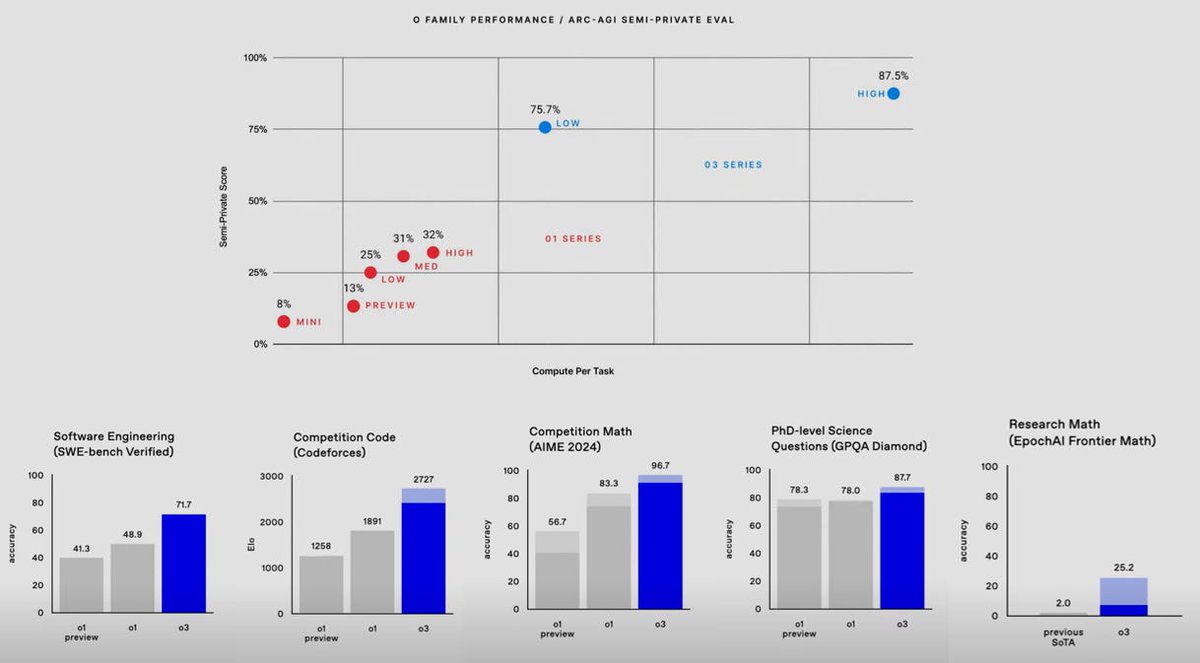

Epic AI's Frontier Math: The Toughest Benchmark Yet! #EpicAI #FrontierMath #AIBenchmarks #Mathematics #MachineLearning #DataScience #AIChallenges #CriticalThinking #ProblemSolving #Innovation

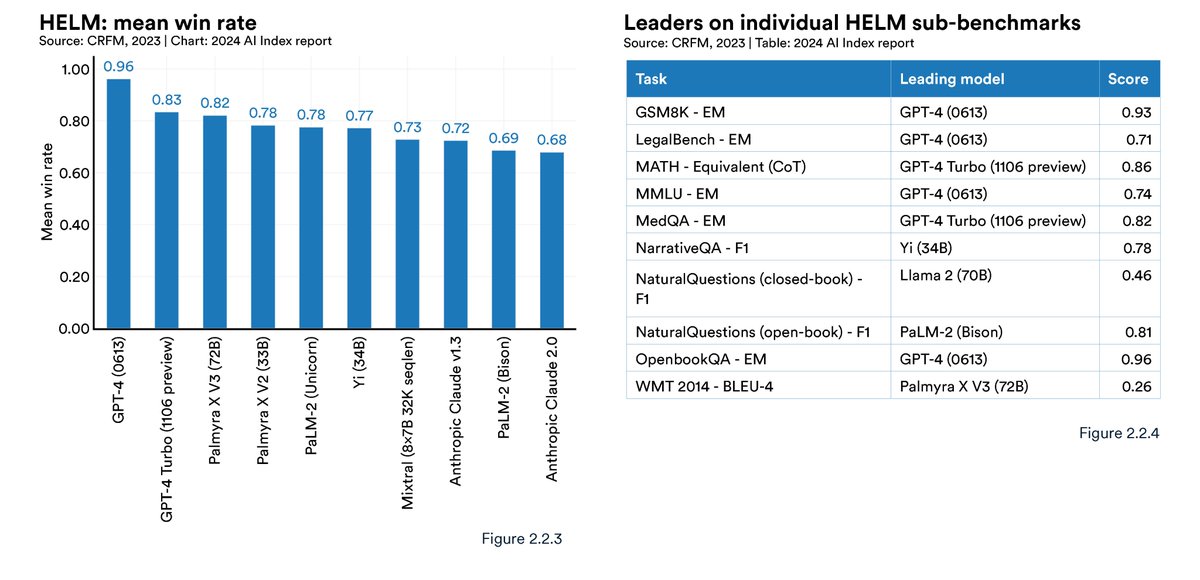

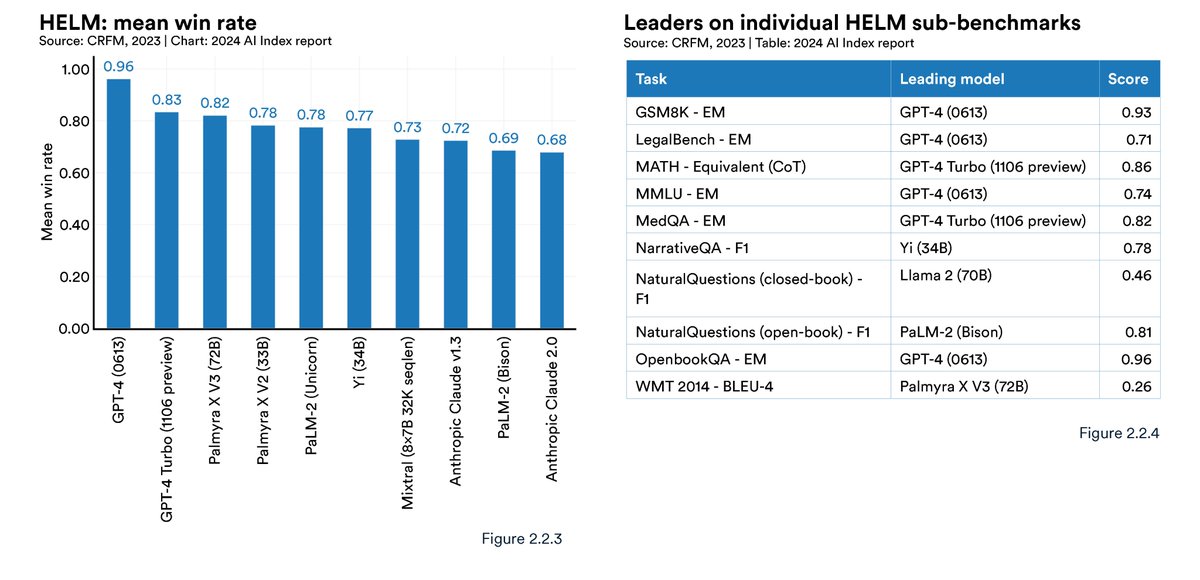

HELM (Holistic Evaluation of Language Models) benchmarks LLMs across diverse tasks like reading comprehension, language understanding, and math, with GPT-4 currently leading the leaderboard. #AIbenchmarks @Stanford

It's possible for AI tools to advance the UN's #SDGs, but industry needs to align developers' goals with communities' priorities. This requires better #AIBenchmarks. What are benchmarks, and how can they help? B Cavello explains in this video. Learn more: ow.ly/3VJ650WZ3gV

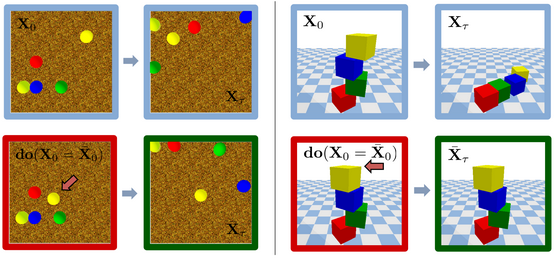

A comprehensive view of existing benchmarks for evaluating AI systems' physical reasoning capabilities. #PhysicalReasoning #AIBenchmarks #GeneralistAgents

If you are about to buy an AI product or service, wait. Listen to this before going ahead. aiforreal.substack.com/p/five-key-thi… #aiproducts #aimetrics #aibenchmarks

New benchmark launched that tests AI Models' advanced mathematical reasoning. tinyurl.com/2zy2kkm6 #aimodels #LLMs #aibenchmarks #aiforreal @EpochAIResearch

MLCommons introduces MLPerf Client group to create AI benchmarks for PCs, providing clarity on device performance for AI tasks. #TechNews #AIBenchmarks Link: techcrunch.com/2024/01/24/mlc…

How Accurate Is #AI at Fixing IaC Security Flaws? 🤔 Eye-opening results: many AI models miss the mark—not from lack of power, but focus. Read the article from our friends at @SymbioticSecAI → symbioticsec.ai/blog/cracking-… #AIBenchmarks #CodeSecurity #DevSecOps #IaC #AppSec

Scale AI Launches ‘SEAL Showdown’ LLM Leaderboard - Can it Dethrone LMArena? #AI #ScaleAI #AIBenchmarks #LMArena #SEALShowdown #Tech winbuzzer.com/2025/09/22/sca…

🧠 Reasoning results shocked people. Claude Opus 4.5 led in logic, math and multi-step planning, but GPT-5.1 & Gemini 3 Pro were right behind it. The gap is razor-thin and shrinking daily. 🤏🔥 #AIbenchmarks

1M tokens. Full codebase understanding. PhD-level reasoning. Gemini 3 is built for real work, not demo videos. #Gemini3 #GoogleCloud #AIbenchmarks

Grok’s dominance across diverse leaderboards is impressive, especially #1 in Token Usage and Programming Usecase – that indicates not just popularity but real developer trust. Curious how Grok’s agentic telecom edge will push real-world AI automation next? #AIbenchmarks…

🤯 Crushing Benchmarks! Gemini 3.0 Pro significantly outperforms 2.5 Pro on *every* major AI benchmark. It even tops the LMArena Leaderboard with an incredible 1501 Elo score! #AIBenchmarks #GeminiPro

ERNIE is beating GPT and Gemini on key benchmarks While everyone obsesses over OpenAI and Google, Baidu quietly built something better. And most people have no idea. #ERNIE #BaiduAI #AIBenchmarks #GPTvsERNIE #GeminiComparison

China’s Open-Source Triumph in AI: Kimi K2 Thinking Rewrites the Rules digitrendz.blog/?p=81781 #AiBenchmarks #GenerativeAI #KimiK2Thinking #MoonshotAi

Reproducibility validated standard Android A* pathfinding stack; uniform heuristics, 8-directional grid, weighted cost 1.0–1.4. SBOL layer calibrated bias 0.97 ± 0.02 across 1 000 runs, zero-drift at six months. Code held pending IP finalization #SBOL #Grokpedia #AIbenchmarks

Anthropic's Claude AI outperformed GPT-4 by 15% on reasoning tests, showcasing advanced multi-agent AI workflows. Hive Forge’s Swarms align perfectly to boost such complex automation across teams. Could this shift how enterprises adopt AI orchestration? #AIbenchmarks #ClaudeAI

Gemini 3.0 is a surprise leader in coding/visual benchmarks, beating Sonnet 4.5. Vision models can now reliably tell time... a huge step for multimodal AI. #GoogleGemini #AIBenchmarks

📊 Results: 1. ImageNet (256×256) FID: 3.43 using just 1 step (1-NFE) 2. 50–70% better than past best models 3. Matches big multi-step models at just 2 steps! 🤯 #SOTA #AIbenchmarks

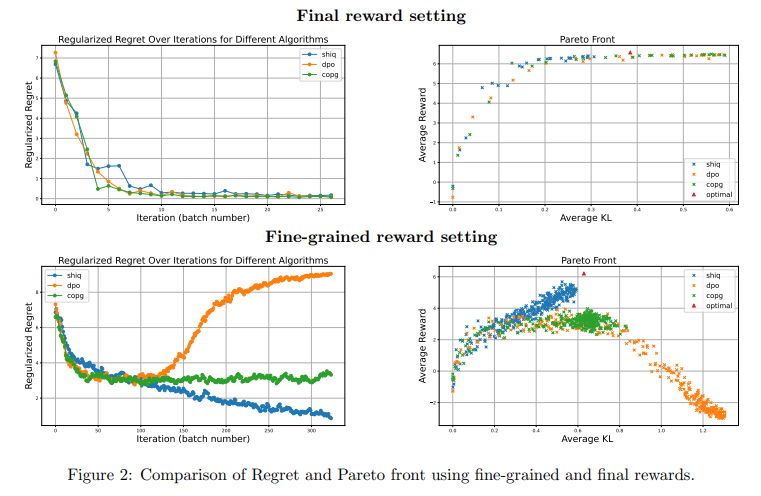

Real results 📊 ShiQ did great in tests: ✔️ It learned faster ✔️ Needed less data ✔️ Worked better for multi-turn conversations #AIbenchmarks #AIperformance

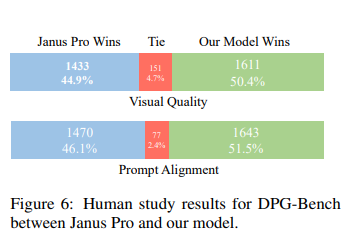

Did it work? YES. 💥 It beat big models in tests. 🧠 Understands images better 🎨 Generates nicer pictures ✅ Even humans liked its results more than others #AIbenchmarks

"You need to have these very hard tasks which produce undeniable evidence. And that's how the field is making progress today, because we have these hard benchmarks, which represent true progress. And this is why we're able to avoid endless debate." #AIbenchmarks -Ilya Sutskever

Performance of LLMs: GPT-4 from @OpenAI is still leading the open-sourced ones. #GenerativeAI #AIBenchmarks

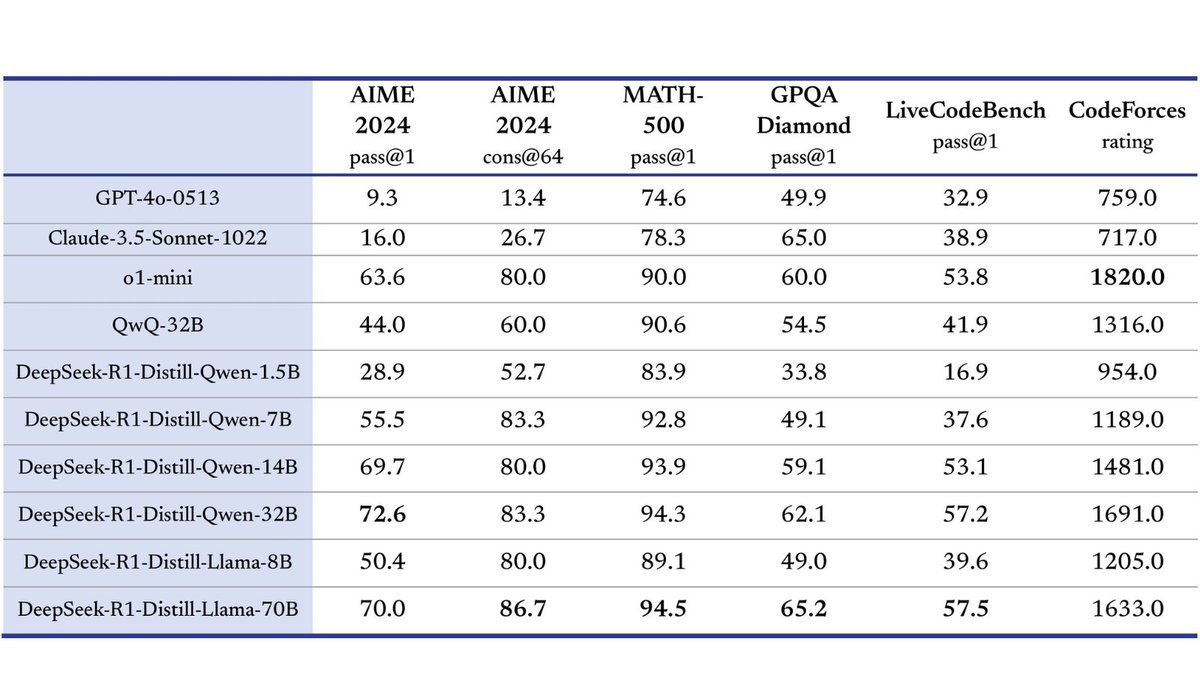

Performance speaks volumes! 📊 DeepSeek-R1 outperforms competitors across benchmarks like AIME 2024 and MATH, showcasing groundbreaking accuracy. #DeepSeekR1 #AIBenchmarks

OpenAI launches SWE-bench Verified, a human-validated subset of the popular SWE-bench AI benchmark for evaluating software engineering abilities. GPT-4's score more than doubles! 📈How will this impact AI development in software engineering? #AIBenchmarks #SoftwareEngineering

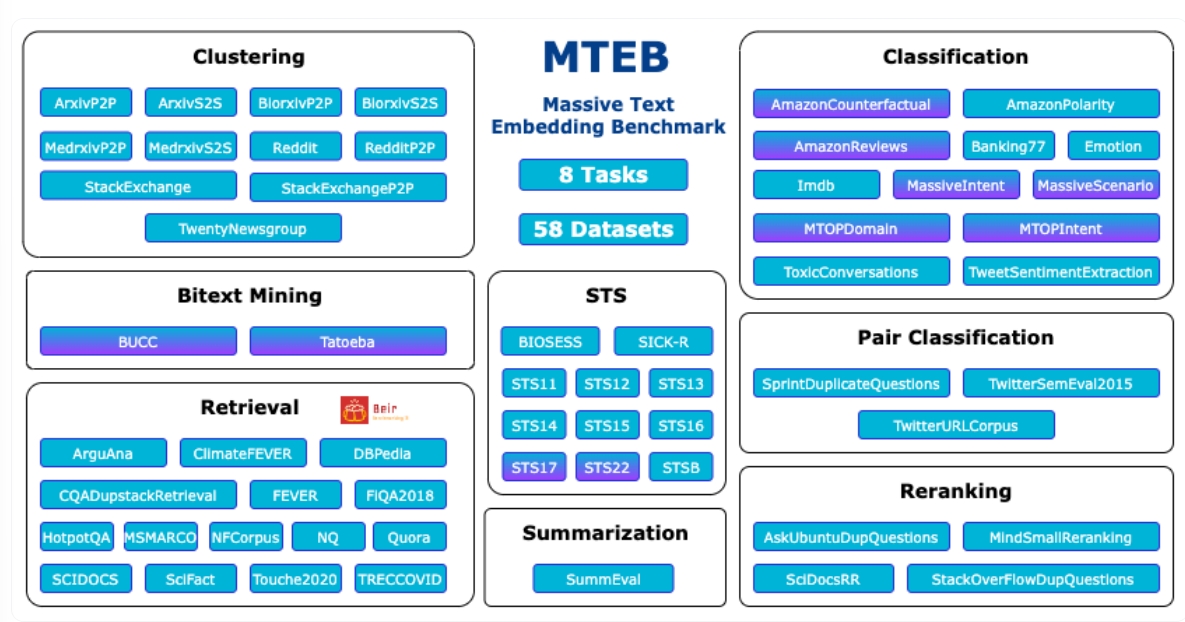

#WinBuzzerNews #AIBenchmarks #AIDevelopment #AIModels NV-Embed: NVIDIA’s Latest NLP Model Excels in Multiple Benchmarks winbuzzer.com/2024/05/28/nvi…

Claude 4 is here—and it's a powerhouse. Outperforms GPT-4 and Gemini 2.5 in reasoning, coding, and long-context tasks. Fast, smart, and ready. #Claude4 #AIbenchmarks

ERNIE is beating GPT and Gemini on key benchmarks While everyone obsesses over OpenAI and Google, Baidu quietly built something better. And most people have no idea. #ERNIE #BaiduAI #AIBenchmarks #GPTvsERNIE #GeminiComparison

The numbers are in! GPT-4O takes the lead across the board, but Qwen2.5-72B holds its ground. 93.7 vs 86.1 on MMLU, 97.8 vs 91.5 on GSM8K. The AI race is heating up! #GPT4O #Qwen25 #AIBenchmarks

MMLU evaluates LLM performance across 57 subjects in zero-shot or few-shot scenarios, with GPT-4 and Gemini Ultra achieving top scores. #AIbenchmarks #MMLU @Stanford

SWE-bench, launched in Oct 2023, challenges AI coding with 2,294 real-world software engineering problems, raising the bar for AI proficiency. #AIBenchmarks #CodingAI @Stanford

🧵 [5/n] The results are quite promising. After three iterations, the enhanced Llama 2 70B model outperformed others models such as Claude 2 and GPT-4 0613 on the AlpacaEval 2.0 leaderboard. #AIBenchmarks #Performance

![vladbogo's tweet image. 🧵 [5/n] The results are quite promising. After three iterations, the enhanced Llama 2 70B model outperformed others models such as Claude 2 and GPT-4 0613 on the AlpacaEval 2.0 leaderboard. #AIBenchmarks #Performance](https://pbs.twimg.com/media/GEj0XBpXwAAgjxX.png)

HELM (Holistic Evaluation of Language Models) benchmarks LLMs across diverse tasks like reading comprehension, language understanding, and math, with GPT-4 currently leading the leaderboard. #AIbenchmarks @Stanford

3/7:Mistral's latest model, Mixtral 8x22B, is said to outperform Meta's Llama 2 70B in math and coding tests. Mixture-of-experts architecture FTW! #MixtralLLM #AIBenchmarks #TechCompetition

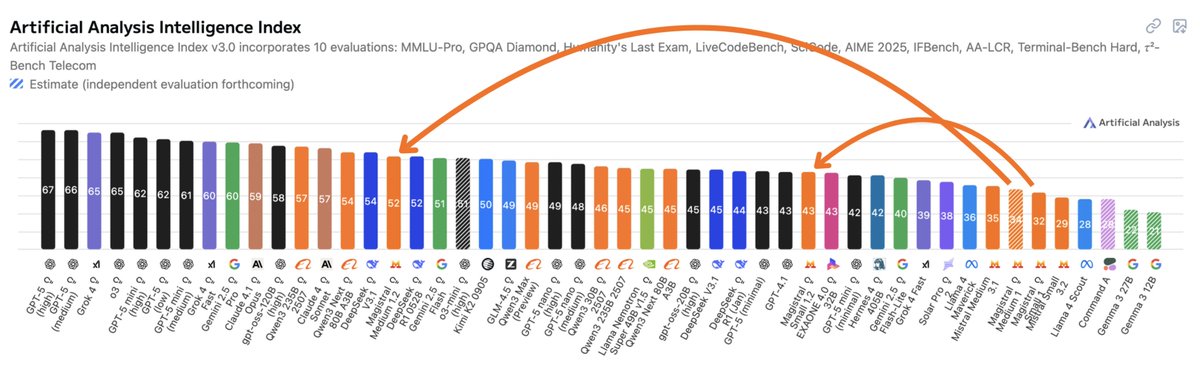

🚀 Impressive leap on the #AI leaderboard: Mistral AI's new Magistral models just jumped up the Artificial Analysis Intelligence Index—punching far above their weight and rivaling models many times larger. Size isn’t everything anymore! #AIbenchmarks #LLMs #MistralAI

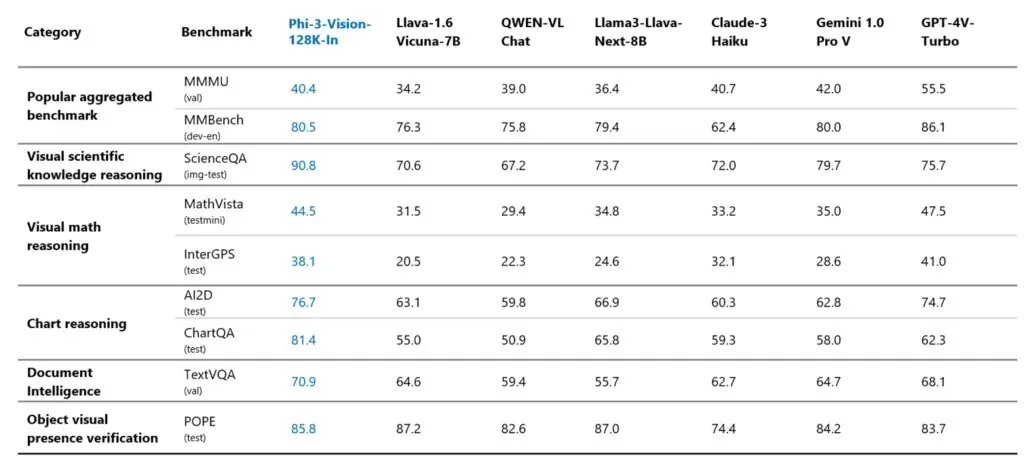

3/9 What's impressive is that despite its smaller size, Ph-3 Vision exceeds in benchmarks, scoring highly in MMU, MM Bench, Science QA, and more. 🏅📊 Smaller but mighty! #AIBenchmarks

Something went wrong.

Something went wrong.

United States Trends

- 1. Good Thursday 27K posts

- 2. Merry Christmas 69.1K posts

- 3. #thursdayvibes 1,648 posts

- 4. Happy Friday Eve N/A

- 5. #thursdaymotivation 2,163 posts

- 6. #DMDCHARITY2025 1.75M posts

- 7. Hilux 7,857 posts

- 8. Toyota 27.2K posts

- 9. Halle Berry 3,862 posts

- 10. #PutThatInYourPipe N/A

- 11. Earl Campbell 2,306 posts

- 12. Omar 185K posts

- 13. Steve Cropper 8,219 posts

- 14. Metroid Prime 4 16.6K posts

- 15. #JUPITER 258K posts

- 16. The BIGGЕST 1.03M posts

- 17. Milo 13.3K posts

- 18. Cafe 175K posts

- 19. Rogan 32.6K posts

- 20. Mike Lindell 24.8K posts