#activationfunction search results

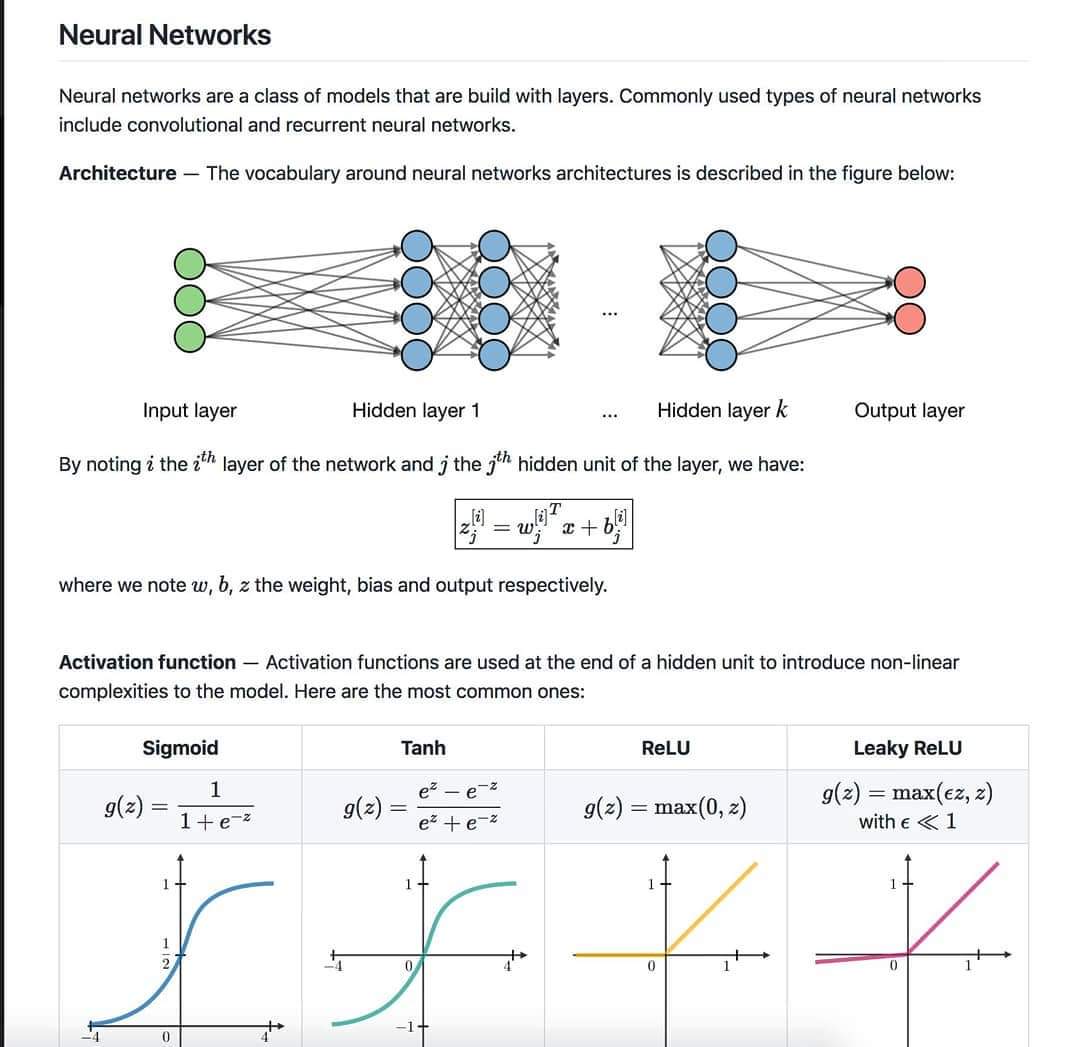

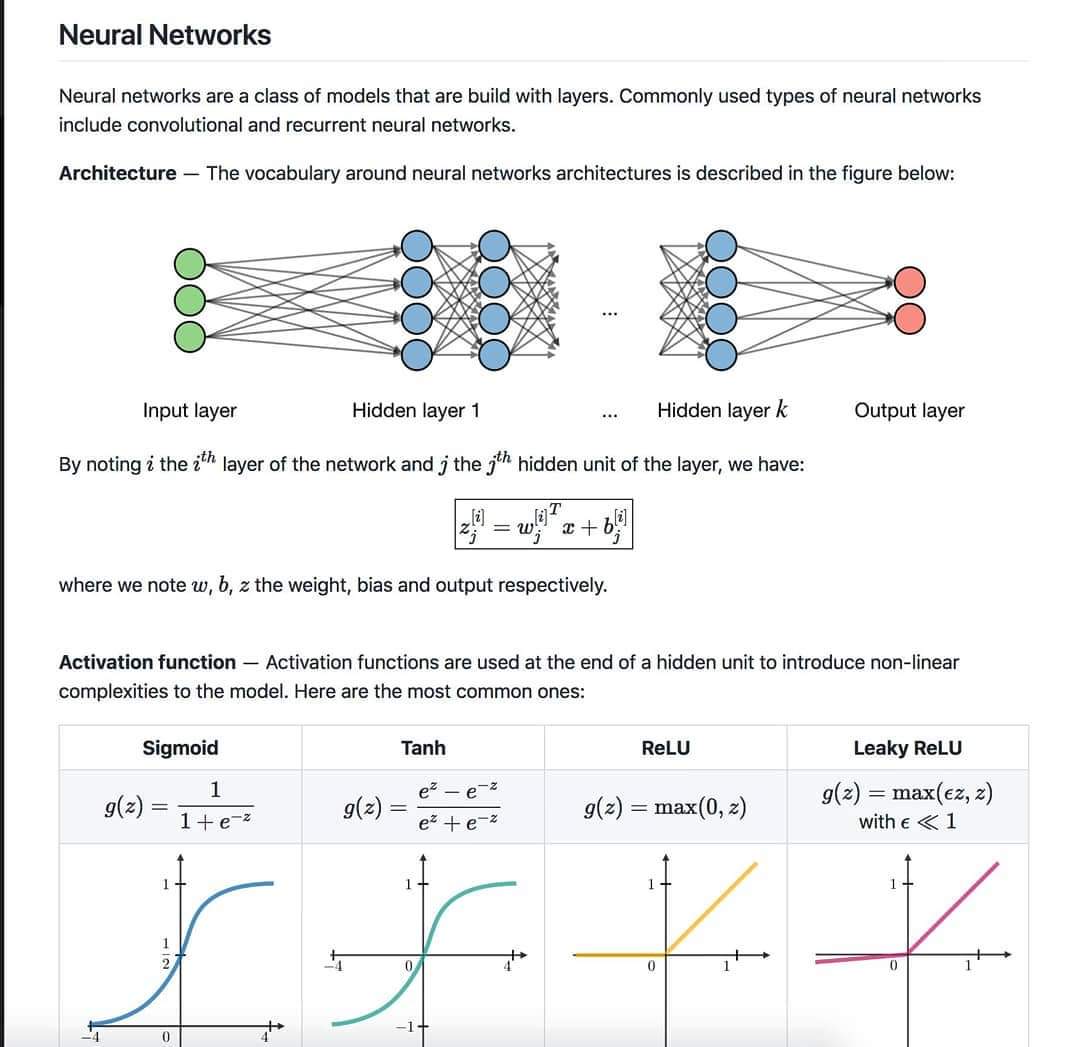

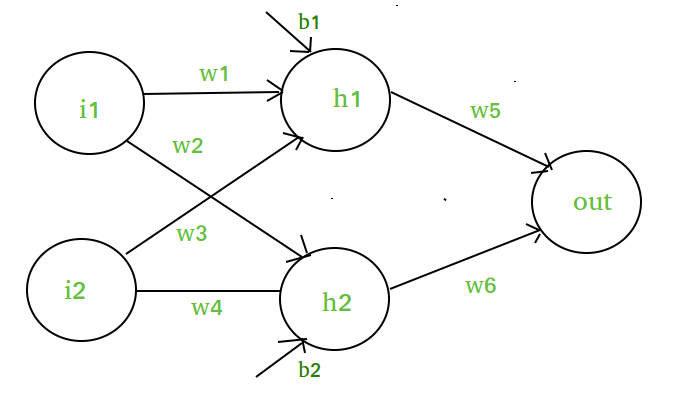

Introduction to #NeuralNetworks & #ActivationFunction #AI #MachineLearning #ANN #CNN #ArtificialIntelligence #ML #DataScience #Data #Database #Python #programming #DataAnalytics #DataScientist #DATA #coding #newbies #100daysofcoding

#Activationfunction #neuralnetworks #trainingdataset #ridgefunctions #radialfunctions #foldfunctions

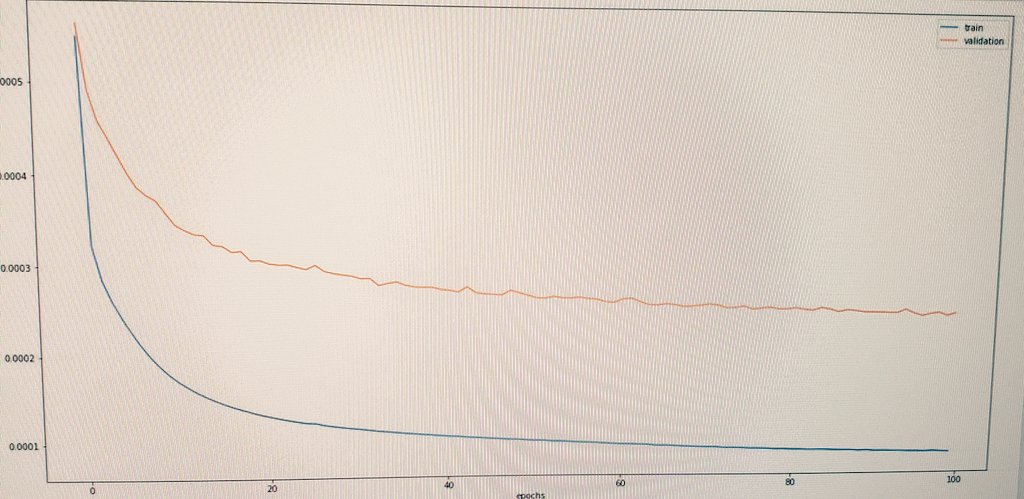

The difference between hell (photo 1) and heaven (photo 2), caused by simply changing the activation function in the output layer: sigmoid vs. tanh in an autoencoder #deeplearning #activationfunction
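The post does not say how the autoencoder's data was scaled, so the following is only a sketch of one plausible reason the output activation matters so much: sigmoid outputs lie in (0, 1) while tanh outputs lie in (-1, 1), so reconstructions can only match targets scaled to the same range. The `pre_activations` values are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid; outputs lie strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical pre-activation values at an autoencoder's output layer.
pre_activations = [-3.0, -1.0, 0.0, 1.0, 3.0]

sigmoid_out = [sigmoid(z) for z in pre_activations]
tanh_out = [math.tanh(z) for z in pre_activations]

for z, s, t in zip(pre_activations, sigmoid_out, tanh_out):
    # sigmoid can never produce a negative value; tanh can.
    print(f"z={z:+.1f}  sigmoid={s:.4f}  tanh={t:+.4f}")
```

If the targets were normalized to [-1, 1], a sigmoid output layer could never reproduce the negative half of that range, and vice versa for [0, 1] targets with tanh.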

What are Activation functions in DL? saifytech.com/blog_detail/wh… #activationfunction #DLsite #saifytech #blog #article #MachineLearning #pythonlearning #programming #Android18 #iOS16 #robotics #robot #Machine #software #DeveloperJobs #HTML名刺

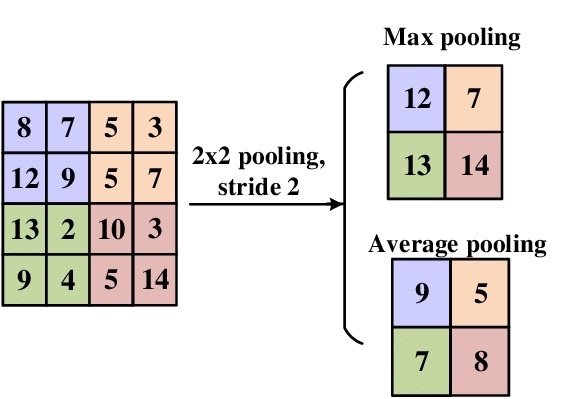

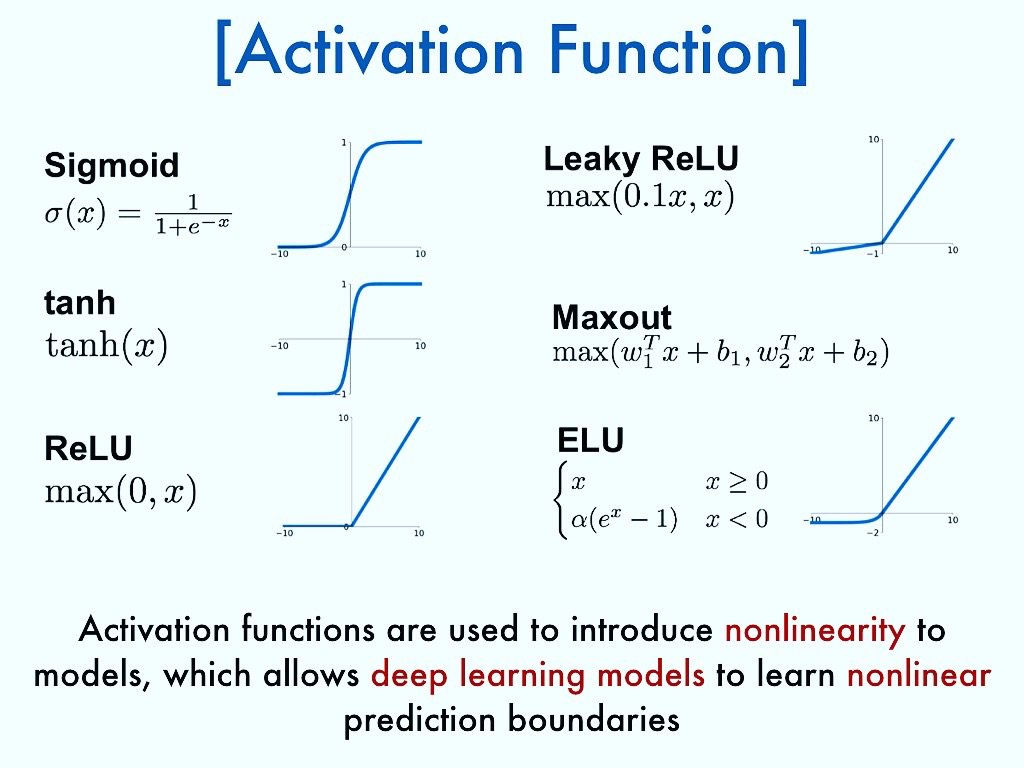

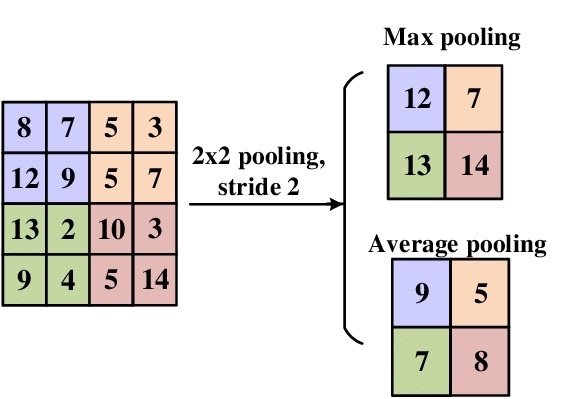

In deep learning #DeepLearning #CNN, the roles of #ActivationFunction and #Pooling are often misunderstood: the AF adds non-linearity to the model so it can represent complex functions, while P reduces the dimensions, the features, and the amount of data to learn from, also in a non-linear way, and should be applied after the AF in the model #Python #AI

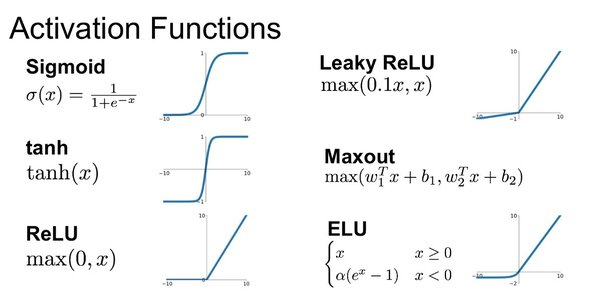

Tanh as an activation function in machine learning gives zero-centered outputs in (-1, 1), which can help training, but it is more expensive to compute than ReLU because it evaluates exponentials. #tanh #activationfunction #machinelearning

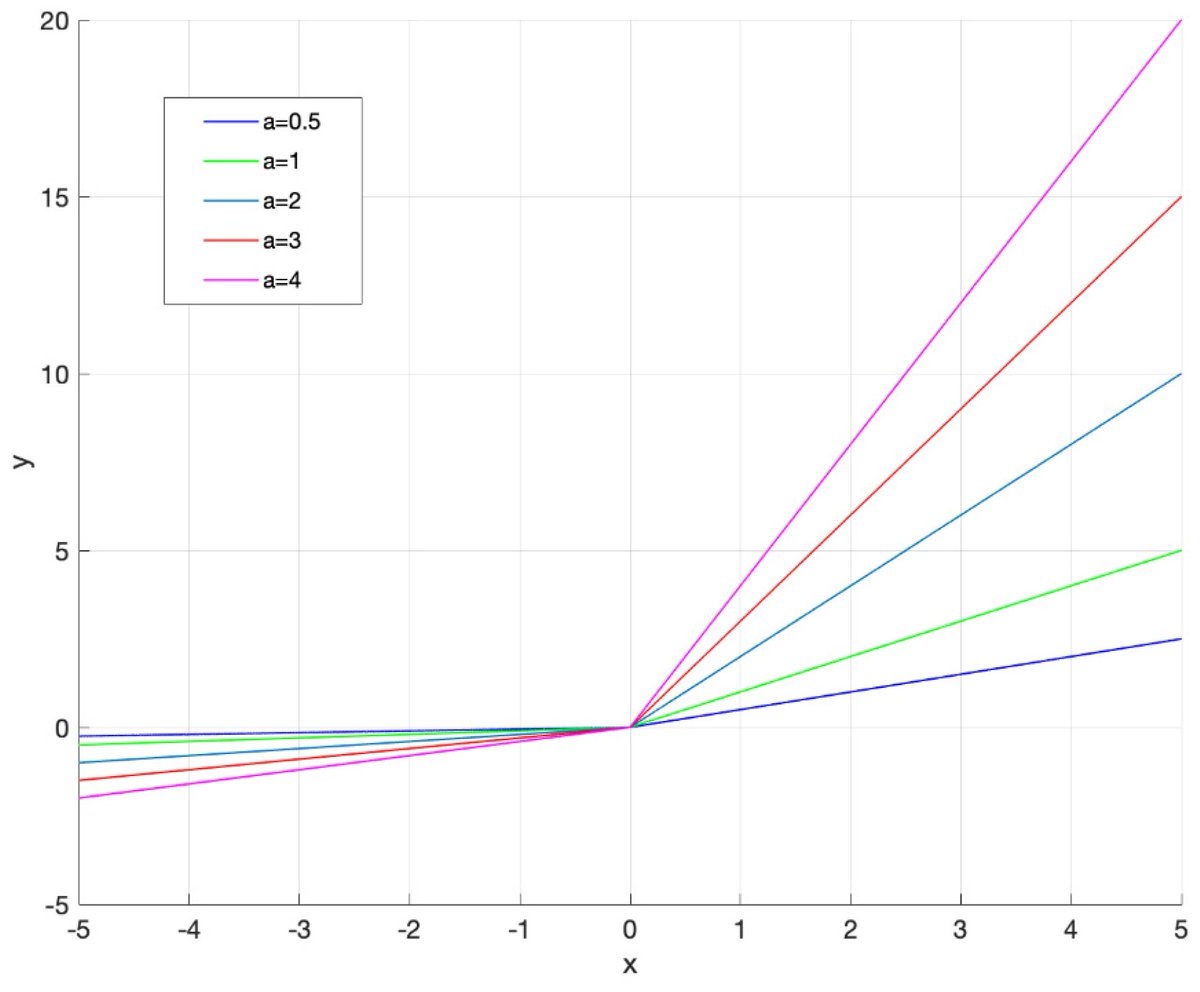

Where is the "negative" slope in a LeakyReLU? The slopes for both positive and negative inputs are defined to be positive in the documentation stackoverflow.com/questions/6886… #activationfunction #pytorch #tensorflow #python
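A minimal sketch of the usual reading of that name: "negative slope" refers to the slope applied on the negative half of the input axis, not to a slope with a negative sign. The parameter itself is a small positive number (PyTorch's `torch.nn.LeakyReLU` defaults `negative_slope` to 0.01). The function below is a plain-Python illustration, not either library's implementation.

```python
def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """Return x for x >= 0, otherwise negative_slope * x."""
    return x if x >= 0 else negative_slope * x


for x in (-2.0, -0.5, 0.0, 1.5):
    # Negative inputs stay negative but are scaled down by the positive slope.
    print(f"leaky_relu({x:+.1f}) = {leaky_relu(x):+.4f}")
```

Because the slope is positive on both sides, the function is monotonically increasing; the output for a negative input is negative only because the input is.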

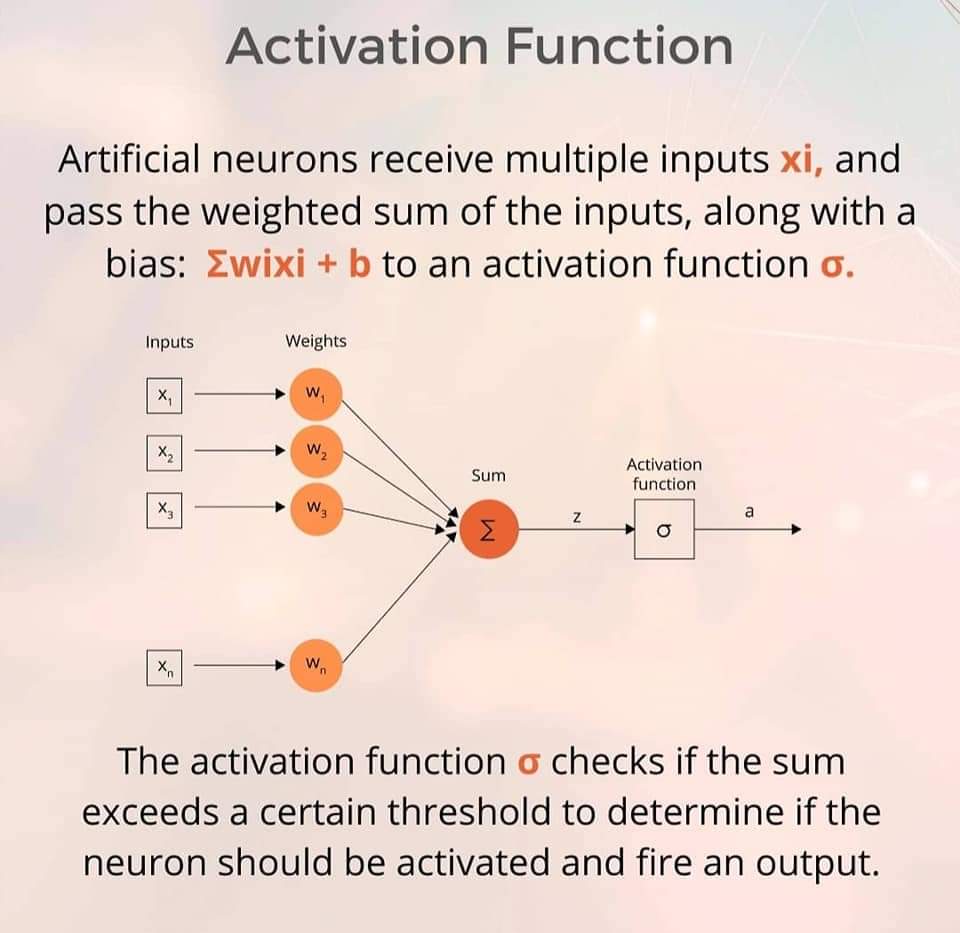

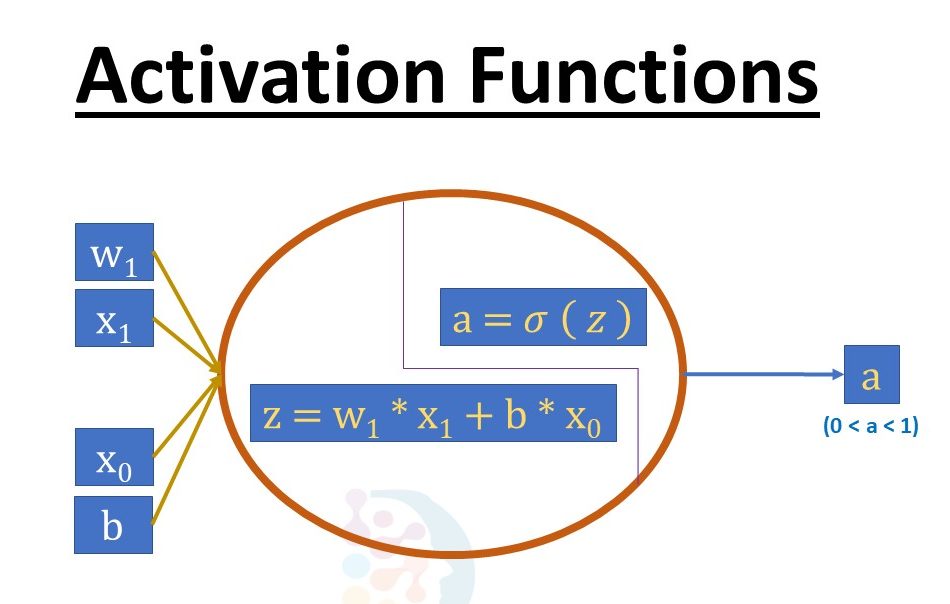

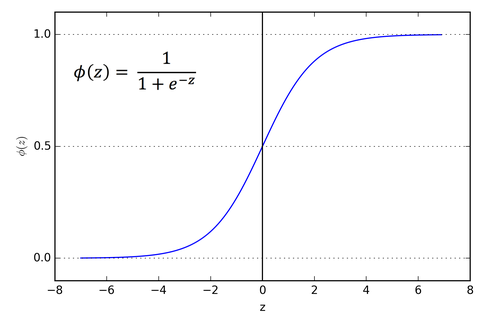

📝Day 20 of #Deeplearning ▫️ Topic - Activation Function 🔰In Artificial Neural Networks or ANN, each neuron forms a weighted sum of its inputs & passes the resulting scalar value through a function referred to as an #Activationfunction (a step function is one example) A Complete 🧵

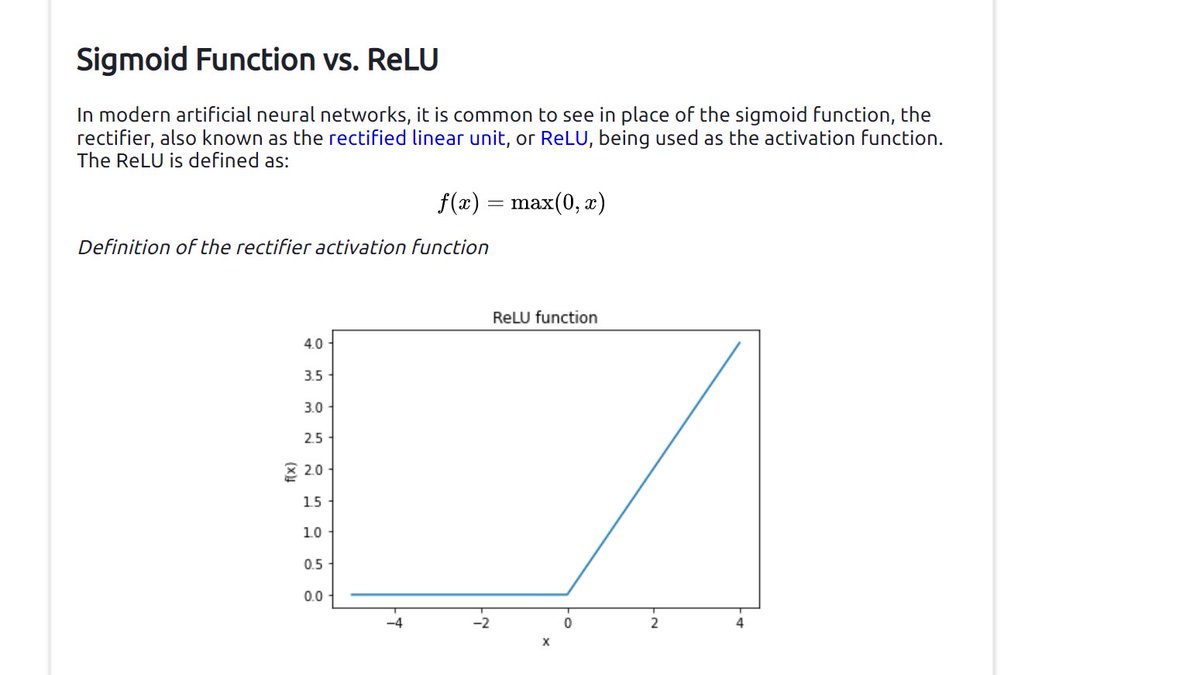

ReLU is a cheap-to-compute activation function in machine learning: it maps negative inputs to zero and passes positive inputs through unchanged. #relu #activationfunction #machinelearning

Don't all neurons in a neural network always fire/activate? stackoverflow.com/questions/6094… #convneuralnetwork #sigmoid #activationfunction #neuralnetwork #artificialintelligence

Softmax function returning nan or all zeros in a neural network stackoverflow.com/questions/6647… #numpy #python #activationfunction #neuralnetwork
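The linked question is truncated here, so its actual cause is unknown. One common source of this symptom is overflow in the exponential for large logits, and a widely used remedy is to subtract the maximum logit before exponentiating. The sketch below uses only the standard library rather than NumPy; the logit values are hypothetical.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: shift by the max before exponentiating."""
    shift = max(logits)
    # After the shift every exponent is <= 0, so exp() cannot overflow.
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# math.exp(1000.0) would raise OverflowError here; NumPy's exp returns inf
# instead, and inf / inf then evaluates to nan.
large_logits = [1000.0, 1001.0, 1002.0]
probs = softmax(large_logits)
print(probs)
```

Subtracting a constant from every logit leaves the softmax result unchanged, which is why the shift is safe.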

Meet Adam from Koshur AI, breaking down activation functions in a way your brain actually enjoys! #KoshurAI #AIExplained #ActivationFunction #NeuralNetworks #MachineLearningSimplified #TechTok #WomenInAI #DataScienceDaily #ReLU #Sigmoid #Tanh #LearnWithAlice

#1 #activationfunction #dailycue Full Article: ow.ly/CswJ50AshO1 #ai #ml #datascience #basics_of_ai #dailypost #learning #bysri

Generative Adversarial Networks by Mark Mburu, SE @Andela 🥳 #PyConKE2022 "Discriminator Vs Generator" "Gradient Descent" "Generator Loss" "Sigmoid Function" "Linear Rectifier - ReLU" "Learning Rate" #epochs #ActivationFunction @pythonairobi Follow up: twitch.tv/pyconkeroom2?s…

Although it's the weekend, we've got some really important stuff, and here's today's #keyword 😎 #ActivationFunction is very important for deep #NeuralNetworks - it's like a trigger for a gun 😃 . . @sky_workflows #VitaOptimum #DeepLearning #Algorithm

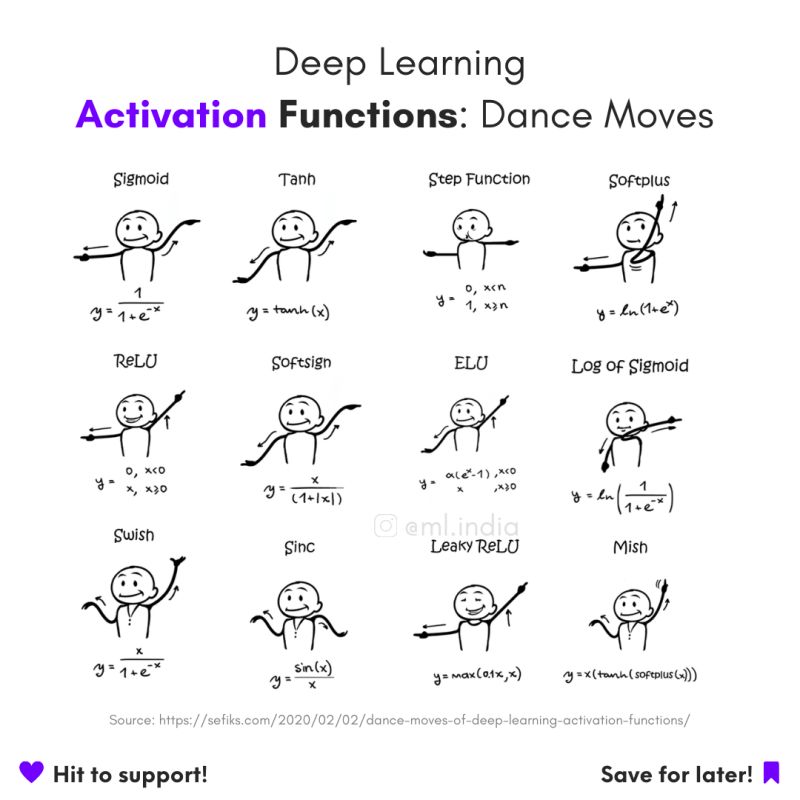

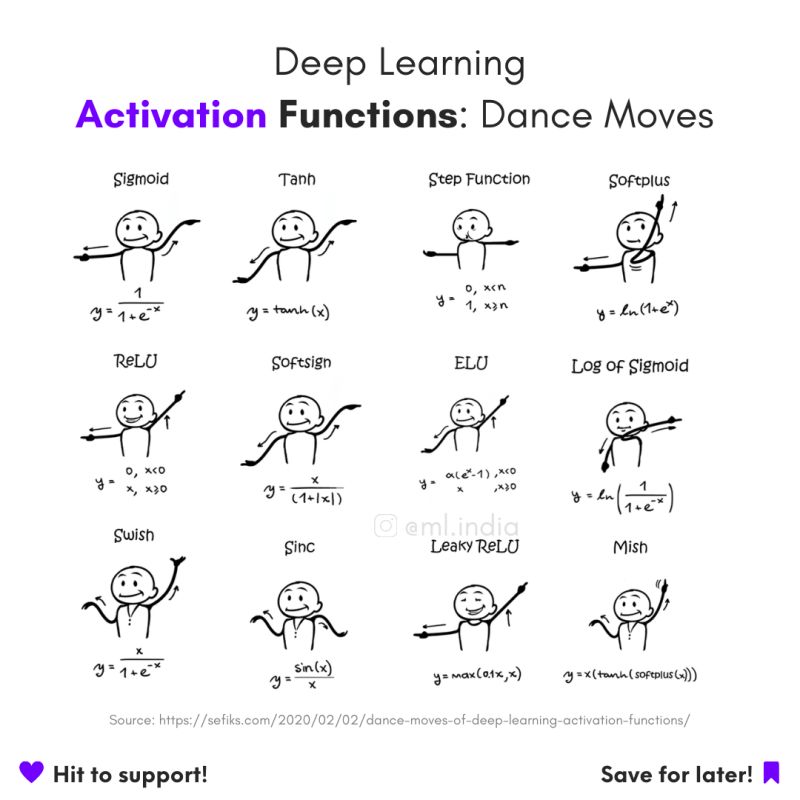

💡 Dance moves for deep learning activation functions! Source: Sefiks #DeepLearning #ActivationFunction #ML

A really cool overview of activation functions by DeepLearning.AI coursera.org/lecture/neural… #Coursera #Activationfunction #Deeplearning

Deep, À La Carte: We combine a slew of known activation functions into successful architectures, obtaining significantly better results compared with standard networks #NeuralNetworks #ActivationFunction #DeepLearning #DeepNetwork #Hyperparameter drive.google.com/file/d/1Eip2OV…

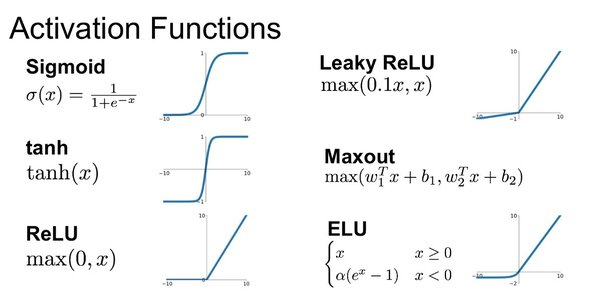

The core functionality of an ANN lies in activation functions. These determine whether a neuron should fire, allowing the network to introduce non-linearity and handle complex problems. Common activation functions: Sigmoid, ReLU, and Tanh. ⚡ #AI #ActivationFunction

Learning #deeplearning from scratch. ACTIVATION FUNCTION: An #activationfunction in #NeuralNetworks applies a non-linear transformation to a neuron's linear output (like y = 0.042 * x1 + 0.008 * x2 - 1.53) before sending it to the next layer.
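As a rough illustration, the linear expression quoted in the post can be passed through ReLU, one common activation. The weights and bias come from the post; the input values and the choice of ReLU are assumptions made for this sketch.

```python
def relu(z: float) -> float:
    """ReLU: zero for negative inputs, identity for positive inputs."""
    return max(0.0, z)


def pre_activation(x1: float, x2: float) -> float:
    # Weights and bias taken from the post's example equation.
    return 0.042 * x1 + 0.008 * x2 - 1.53


# Hypothetical inputs: one pair gives a positive sum, the other a negative one.
for x1, x2 in [(50.0, 10.0), (10.0, 10.0)]:
    y = pre_activation(x1, x2)
    print(f"x1={x1}, x2={x2}: linear output={y:.2f}, after ReLU={relu(y):.2f}")
```

The second input pair yields a negative linear output, which ReLU replaces with zero before the value reaches the next layer.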

4/ Activation Functions The activation function in a perceptron is typically a step function, which outputs a binary result (0 or 1). This function decides whether a neuron should be activated based on the weighted sum of its inputs. 🚦🔢 #ActivationFunction #AI #Coding
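A small sketch of that idea: a perceptron computes a weighted sum plus a bias and thresholds it with a step function. The weights and bias below are hypothetical values chosen so the unit behaves like a logical AND gate.

```python
def step(z: float, threshold: float = 0.0) -> int:
    """Binary step: 1 if the weighted sum reaches the threshold, else 0."""
    return 1 if z >= threshold else 0


def perceptron(inputs: list[float], weights: list[float], bias: float) -> int:
    # Weighted sum of the inputs plus bias, then the step activation.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


# Hypothetical parameters that make the perceptron act as an AND gate.
and_weights = [1.0, 1.0]
and_bias = -1.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", perceptron([a, b], and_weights, and_bias))
```

Only the input (1, 1) pushes the weighted sum above the threshold, so only that case activates the neuron.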

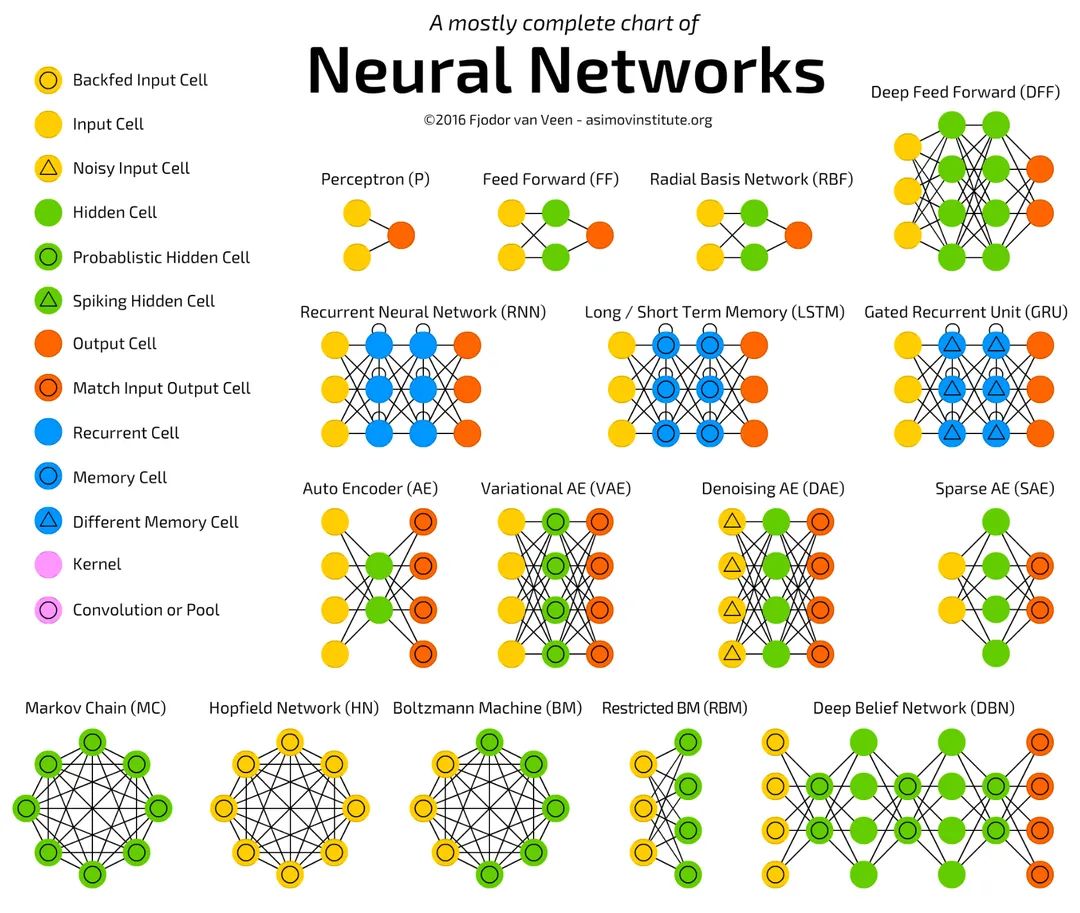

and the #activationfunction (#ReLU, #Softmax, etc.) is the traffic warden allowing/restricting/converting the flow direction. Now let's make it complex! Deep learning architectures come in various flavors, so:

Read #HighlyAccessedArticle "Learnable Leaky ReLU (LeLeLU): An Alternative Accuracy-Optimized Activation Function" by Andreas Maniatopoulos and Nikolaos Mitianoudis. See more details at: mdpi.com/2078-2489/12/1… #activationfunction #ReLUfamily

I just published Most Used Activation Functions In Deep Learning #DeepLearning #activationfunction #100DaysOfCode link.medium.com/cSSemCf9zHb

The neuron processes inputs by summing them up, each adjusted by its weight. This sum is then transformed using an activation function. #NeuralComputation #ActivationFunction

"The 'Activation Function' is like the powerhouse of neural networks! 🧠💥 It adds non-linearity, enabling complex patterns to be recognized and making deep learning possible. #ActivationFunction #DeepLearning #NeuralNetworks"

Diving deeper into #ActivationFunction in #NeuralNetwork #MachineLearning #FajriKoto

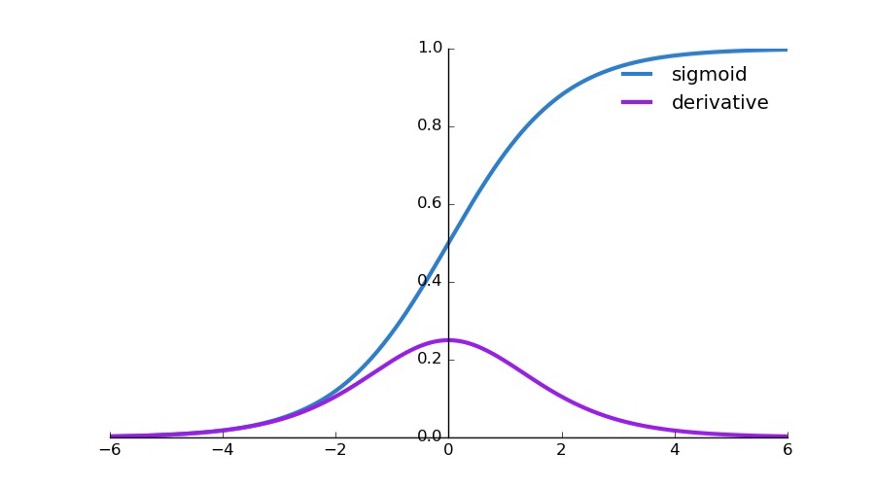

#Sigmoid vs #ReLU #ActivationFunction #AI #MachineLearning #ANN #CNN #ArtificialIntelligence #ML #DataScience #Data #Database #Python #programming #DeepLearning #DataAnalytics #DataScientist #DATA #coding #newbies #100daysofcoding

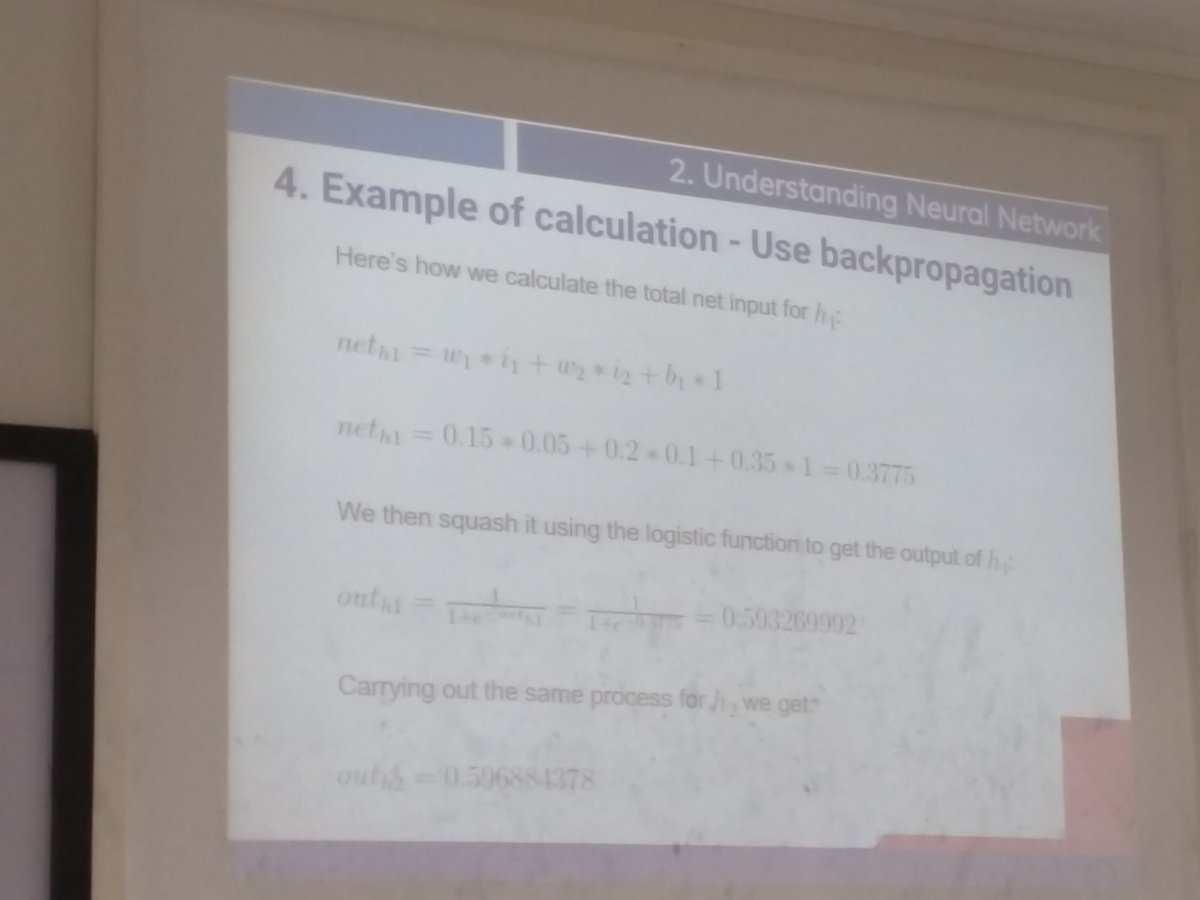

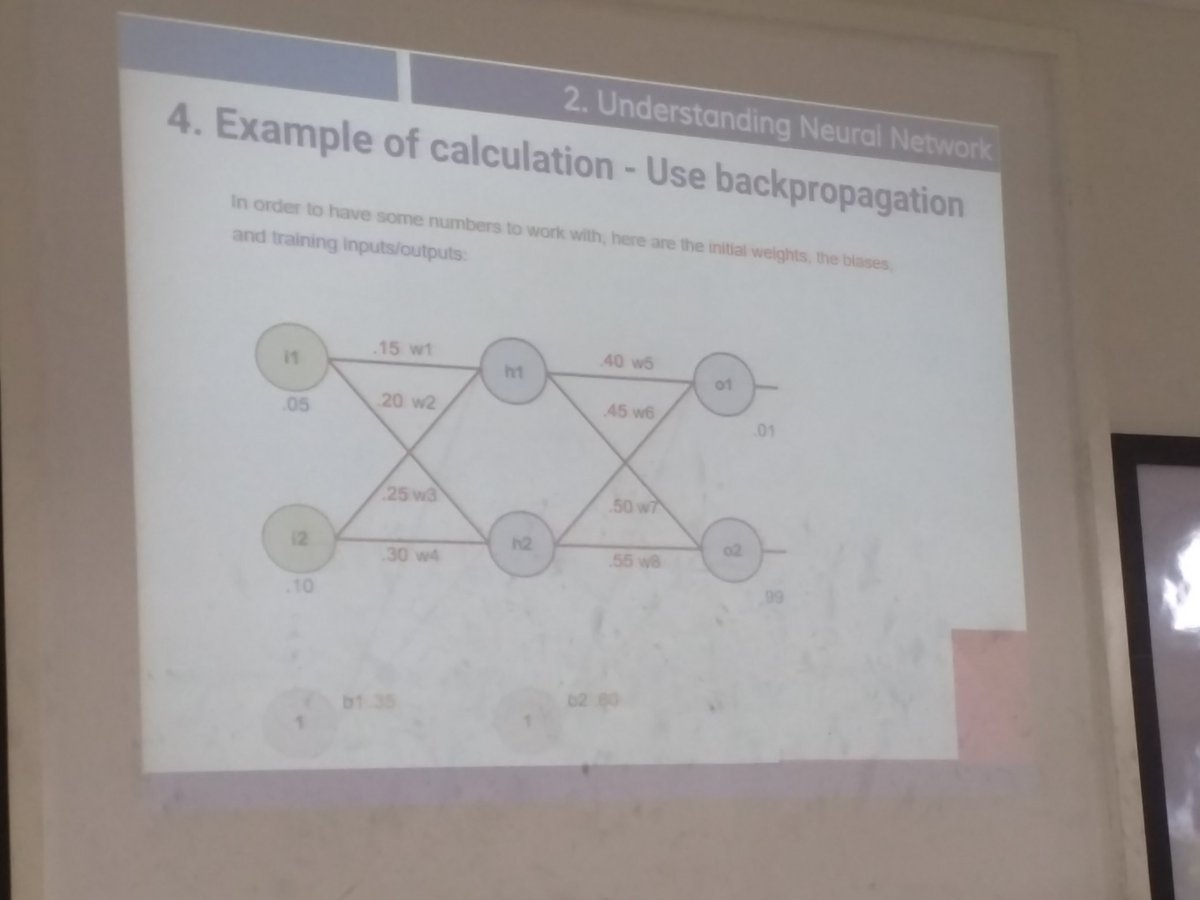

Using #BackPropagation in #ActivationFunction #NeuralNetwork #MachineLearning with #FajriKoto @DSWeekend @DataScienceIndo

Introduction to #ActivationFunction #AI #MachineLearning #ANN #CNN #ArtificialIntelligence #ML #DataScience #Data #Database #Python #programming #DeepLearning #DataAnalytics #DataScientist #DATA #coding #newbies #100daysofcoding

Neural Network Chart #ActivationFunction #AI #MachineLearning #ANN #CNN #ArtificialIntelligence #ML #DataScience #Data #Database #Python #programming #DeepLearning #DataAnalytics #DataScientist #DATA #coding #newbies #100daysofcoding

What is an Activation Function? #ActivationFunction #AI #MachineLearning #ANN #CNN #ArtificialIntelligence #ML #DataScience #Data #Database #Python #programming #DeepLearning #DataAnalytics #DataScientist #DATA #coding #newbies #100daysofcoding

revising some old stuff, thought of sharing this ! #activationfunction #relu #sigmoid #NeuralNetworks

🔦 A Basic Introduction to Activation Function in Deep Learning: hubs.la/Q015h3D10 #DeepLearning #ActivationFunction #Overview

📌 In this tutorial, you will discover how to choose activation functions for neural network models: hubs.la/Q016sTKv0 #DeepLearning #NeuralNetwork #ActivationFunction

Which #ActivationFunction Should You Use? by @sirajraval – Frank's World buff.ly/2rs05CK #AI #NeuralNetworks
