#algorithmicfairness search results

🤖 Automating decisions with AI can hide biases, making fairness & accountability tough. New research 🚀 categorizes biases, reviews fairness metrics ✅, and proposes ways to detect & mitigate bias across AI development phases. ⚖️ #AI #AlgorithmicFairness doi.org/10.9781/ijimai…

🌐 Call for Contributions: Algorithmic Fairness in Network Science (FairNetSci) Session @ NetSciX 2026 🌐 🔹 More details: fairnetsci.github.io 🔹 Submission deadlines: 15 Nov & 25 Nov 2025 #NetSciX2026 #FairNetSci #AlgorithmicFairness #NetworkScience

Kenya’s National AI Strategy 2025–2030 sets a bold tech-driven vision, but with no standalone AI law yet, gaps persist. We rely on the Data Protection, Computer Misuse & Cybercrime, and Consumer Protection Acts, which are inadequate in terms of #AlgorithmicFairness & #Surveillance

X’s algorithm is now open-source for transparency. #OpenSource #AlgorithmicFairness

AI bias isn't a technical problem—it's a social responsibility. When algorithms influence hiring, lending, and judicial decisions, ensuring fairness becomes a moral imperative that requires constant vigilance and systematic correction. #AIBias #AlgorithmicFairness #EthicalAI…

How can we ensure ongoing monitoring and evaluation of AI systems to detect and address unintended biases? #AI #BiasDetection #AlgorithmicFairness

AI bias isn't a technical glitch—it's a social bug. Algorithms trained on biased data learn to perpetuate unfairness at scale and speed. Fixing AI bias requires fixing human bias first, then encoding fairness into algorithms. #AIBias #AlgorithmicFairness #SocialJusticeAI…

The challenge in image recognition isn't just accuracy: it's fairness. AI systems trained on biased datasets perpetuate those biases at scale. Diverse training data isn't just good practice; it's a moral imperative for equitable AI systems. #AIImageRecognition #AlgorithmicFairness…

Very happy to announce the first Philosophy & Computer Science Summer School, at @unibt this July. The event is aimed at both undergrads and grad students, and covers topics such as #AIEthics, #algorithmicfairness, and #explainability. Spread the word! explainable-intelligent.systems/summer-

The accuracy gap in facial recognition across different demographics highlights the importance of diverse training data and bias testing. Ethical AI requires representative datasets that ensure fair performance for all users. #AIFacialRecognition #AlgorithmicFairness #InclusiveAI…
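
The accuracy gap mentioned in the post above can be measured with a simple per-group audit. The following is a minimal sketch, not taken from any of the posts: the function names `accuracy_by_group` and `accuracy_gap`, the group tags, and the toy data are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for parallel sequences of labels,
    predictions, and demographic group tags (hypothetical helper)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical toy data: four examples each from groups "a" and "b".
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

A large gap does not by itself explain the cause, but it flags where representative data or further bias testing may be needed.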

Bias in AI doesn't happen by accident—it's inherited from biased training data. Creating diverse, representative datasets and regularly auditing AI systems are essential steps toward fair and equitable artificial intelligence. #AIEthics #AlgorithmicFairness #InclusiveAI…

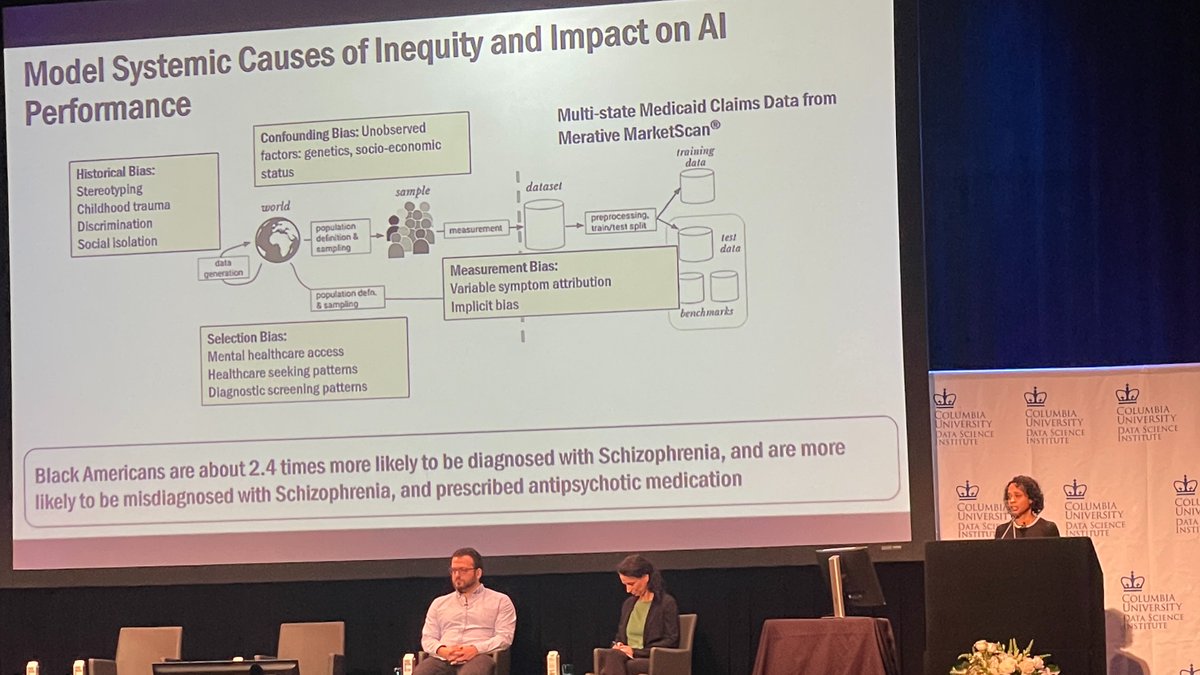

Shalmali Joshi, Assistant Professor @ColumbiaPS, is currently discussing diagnostic disparities in psychiatry and proposing novel statistical tools for fairer outcomes. #AlgorithmicFairness #Psychiatry #DSD2024 @columbiascience @columbiapsychiatry

🚀 Delighted to join and support @EWAFWorkshop in fostering dialogue on #AlgorithmicFairness within Europe's unique legal and societal framework. 📅 Submission deadline: March 15, 2024 🎓 Acceptance notifications: April 26, 2024 📍 EWAF: July 1-3, 2024 2024.ewaf.org/call-for-papers

Policy Outlook | In light of the documented lack of #InternetAccess for Palestinians in Gaza, regulatory AI sandboxes to test various nuanced approaches to boost #AlgorithmicFairness on social media are the need of the hour. Ravale Mohydin wrote: bit.ly/483Ns1z

🎉Our "Improving Bias Metrics in Vision-Language Models by Addressing Inherent Model Disabilities"📜 accepted @afciworkshop at #NeurIPS2024 W/ @BalajiDarur @GssKeerthireddy @ShashwatGoel7 🎉 #AlgorithmicFairness #Metrics #Evaluation #ProfGiri 📜Paper: precog.iiit.ac.in/pubs/NeurIPS_A… 👇🧵

The 3rd European Workshop on #AlgorithmicFairness #EWAF24 is taking place between 1 & 3 July. Keynotes from @vdignum, @sethlazar, Bettina Berendt, and @IValeraM are announced. Learn more at the link below 👇👇 2024.ewaf.org/home

When algorithms make decisions that shape lives, fairness must be designed — not assumed. Bias unchecked is justice denied. #UberDefenderX #JusticeInBalance #AlgorithmicFairness #EthicalAI #BiasMitigation #Transparency #RuleOfLaw #RightsForAll

Thank you @watha for keeping tonight's panel lively, accessible and informative. #AlgorithmicFairness #MakeItHappen @BostonGlobe

@hendersonwe to big tech companies: Expand the market by being more human! #AlgorithmicFairness. Social Impact Series. #MakeItHappen

Expert moderation by @watha: Justice cannot be algorithmic, can it? Panel is up for the challenge! #AlgorithmicFairness #MakeItHappen

Jamie Williamson, Chairwoman of MCAD, wonders how algorithms might lead to opportunities #AlgorithmicFairness. Canopy Social Impact Series.

@onecade: We are not the worst thing we have done. #AlgorithmicFairness would let us escape the worst thing we've done. Social Impact Series

Stavros Tsalides shares techniques for handling algorithmic biases. 1. Incompatibility of metrics 2. Pre-processing techniques 3. In-processing techniques 4. Post-processing techniques @NipsConference #NeurIPS2018 #algorithmicfairness

“Instead of constraining the system we train the system naturally but just modify by correcting all the variables descended from the sensitive attribute (eg race) along unfair pathways” - @csilviavr on @DeepMindAI’s approach to #algorithmicfairness in #machinelearning #reworkAI

Great to be in @ELLISAlicante to speak at @ELLISforEurope doctoral symposium about #algorithmicfairness and the work of #humaint and manage to have this view @EU_ScienceHub @EU_Commission

#algorithmicfairness #childwelfare @RVaithianathan , A Chouldechova @HeinzCollege Erin Dalton @ACDHS Emily Putnam-Hornstein @CDN_USC #d4gx

"#Fairness is an ethical question. What is fair to me, might not be fair to someone else," @drturnerlee #TuringLecture @adrian_weller #AlgorithmicFairness

Fooling algorithms... in the end, you might be the one who ends up paying for it. #algorithmicfairness #algoritme #ai #accountability #aiethics #dataethics #responsibletech #dataethiek

🧐Model compression may not be the go-to method for obtaining good long-tail performance from compact models. By Nils Rethmeier et al. @Nils_Rethmeier @IAugenstein 🖥️mdpi.com/2813-0324/3/1/… #contrastivelanguagemodels #algorithmicfairness

A talk on #AlgorithmicFairness by @alipasha at @seibertmedia; it promises to be exciting.

Good discussion of #NLP #AlgorithmicFairness in #EdTech @aloukina Madnani & Zechner @ETSInsights #Textmining #DataScience #LearningAnalytics #Algorithms #Bias #Fairness #DataAnalytics #AI aclweb.org/anthology/W19-…

There is a lot of discussion about bias in AI. In my recent pub, I explore how people w/ different political views react to one AI tech #FacialRecognition and its algorithmic bias. I've got both good and bad news. #AIbias #AlgorithmicFairness journals.sagepub.com/doi/abs/10.117…
