#logisticregression search results

Logistic regression uses a binary outcome (like diabetes) & multiple predictors. Which combo best predicts the outcome? This video breaks down building the model in R, step-by-step. #LogisticRegression #RProgramming

Effect modifiers in logistic regression: Does age change how glucose affects diabetes risk? Older folks may have different physiology, impacting how their bodies use glucose. #RStats #LogisticRegression

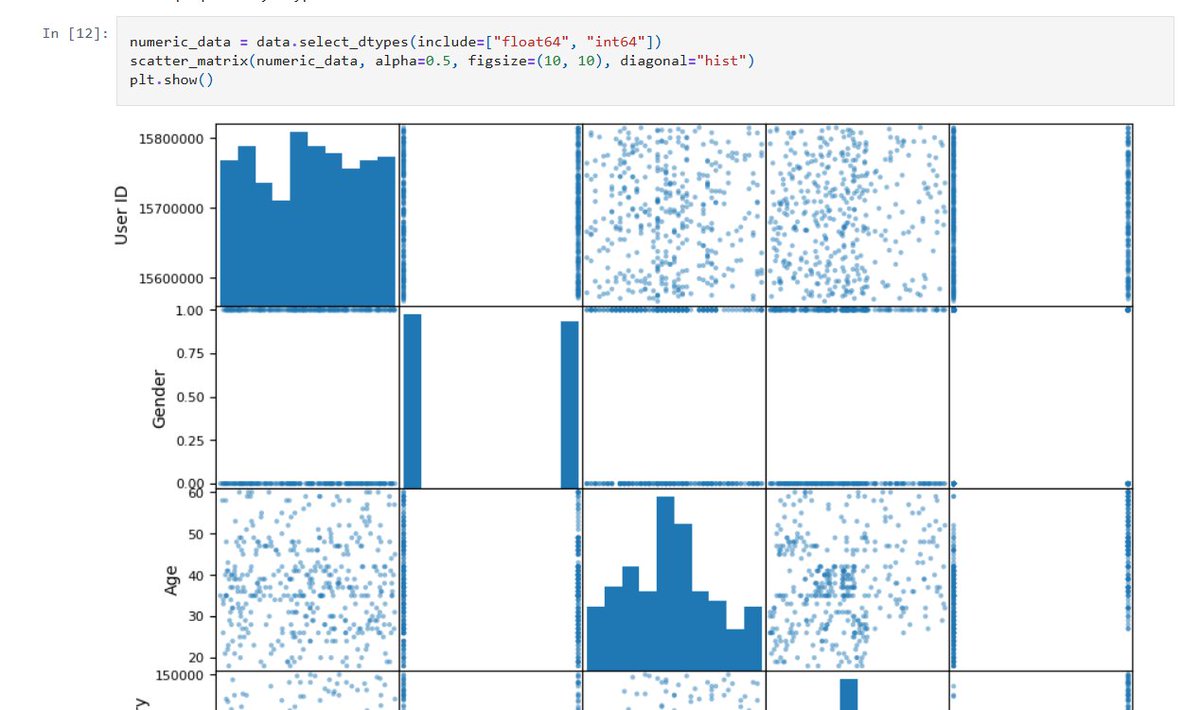

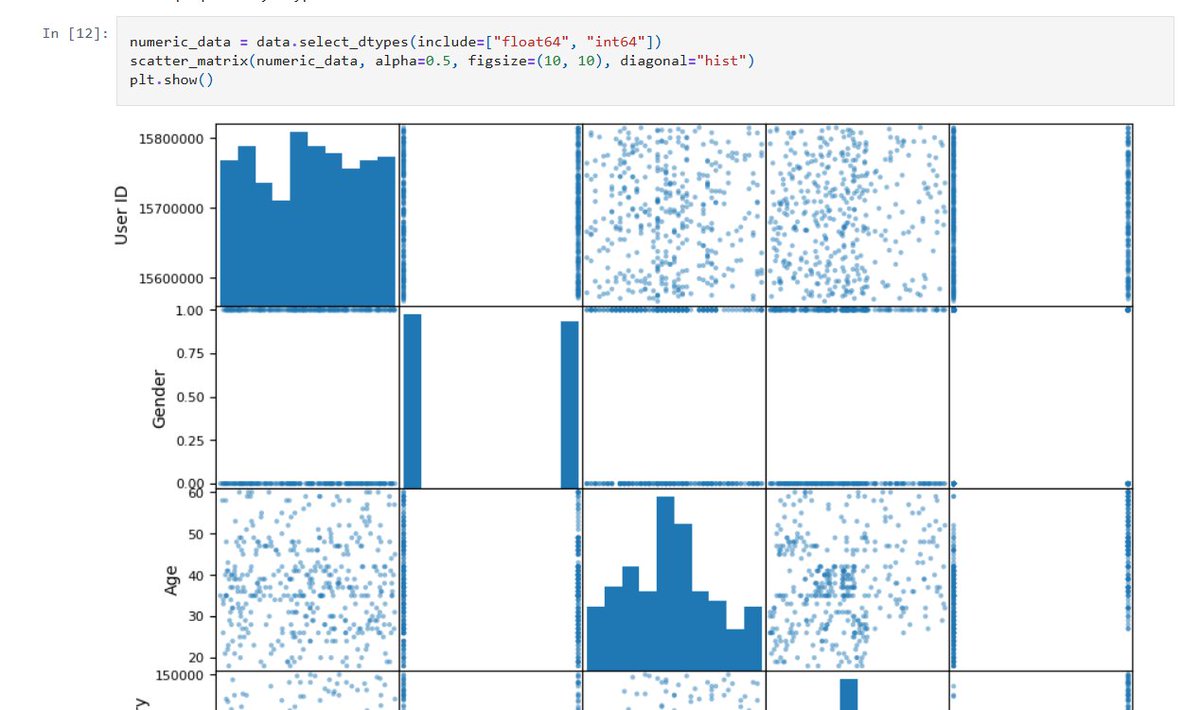
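For the effect-modifier question above (does age change how glucose affects diabetes risk?), one common way to express it is an interaction term. This is a sketch under that assumption, not necessarily the model used in the video; the coefficient names are illustrative.

```latex
\operatorname{logit} P(\text{diabetes} = 1)
  = \beta_0 + \beta_1\,\text{glucose} + \beta_2\,\text{age}
  + \beta_3\,(\text{glucose} \times \text{age})
```

With this form, the change in log-odds per unit of glucose is $\beta_1 + \beta_3\,\text{age}$, so a nonzero $\beta_3$ means the glucose effect depends on age.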

Data exploration = the foundation of every ML model!🛠️ #MachineLearning #DataScience #LogisticRegression #Python

Collinearity can befuddle a model's ability to figure out the impact of each variable on the outcome. High correlation between predictor variables means overlapping info. Aim to remove one predictor from any pair correlated above 0.8. #LogisticRegression #RStats
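The 0.8 rule of thumb above could be applied roughly like this. It is a minimal sketch in plain Python: the toy columns and the helper names `pearson` and `drop_collinear` are made up for illustration, and the greedy "keep the first, drop the later one" policy is an assumption rather than the post's method.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def drop_collinear(columns, threshold=0.8):
    """Keep predictors in order, skipping any whose |r| with an
    already-kept predictor exceeds the threshold."""
    kept = []
    for name in columns:
        if all(abs(pearson(columns[name], columns[k])) <= threshold for k in kept):
            kept.append(name)
    return kept

# Hypothetical toy data: weight_kg is close to a rescaling of bmi.
data = {
    "glucose": [85, 140, 99, 170, 120, 105],
    "bmi": [30.0, 24.0, 27.0, 29.0, 22.0, 33.0],
    "weight_kg": [87.0, 70.0, 78.5, 84.0, 64.0, 95.5],
}
kept = drop_collinear(data)
print(kept)
```

Which member of a correlated pair to drop is a judgment call; here the later column loses simply because of dictionary order.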

An Asymmetric Ensemble Method for Determining the Importance of Individual Factors of a Univariate Problem ✏️ Jelena Mišić et al. 🔗 brnw.ch/21wVY65 Viewed: 1451; Cited: 4 #mdpisymmetry #binaryclassification #logisticregression #featureselection

Binary Logistic Regression in SPSS: The Complete Point-and-Click Guide theacademicpapers.co.uk/blog/2025/09/2… #BinaryLogisticRegression #BinaryRegression #LogisticRegression #SPSS

Become a Logistic Regression Superhero! #LogisticRegression #DataScience #MachineLearning #Statistics #Programming #Tutorial #DataAnalysis #RProgramming #DataVisualization #LearnDataScience

Day 2 of my summer fundamentals series: Built Logistic Regression from scratch using just NumPy. No scikit-learn, no TensorFlow — just math, sigmoid functions, and gradient descent. Turning probabilities into predictions, one step at a time. #MLfromScratch #LogisticRegression
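A from-scratch version along the lines described above (sigmoid plus gradient descent, NumPy only) might look like the following. It is a sketch, not the author's code: the function names, learning rate, iteration count, and the tiny centered dataset are all assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    """Map real-valued scores to probabilities in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iters=2000):
    """Batch gradient descent on the mean log loss; returns (weights, bias)."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(n_iters):
        p = sigmoid(X @ w + b)           # predicted probabilities
        error = p - y                    # gradient of log loss w.r.t. the score
        w -= lr * (X.T @ error) / n_samples
        b -= lr * error.mean()
    return w, b

def predict(X, w, b, threshold=0.5):
    """Turn probabilities into 0/1 labels."""
    return (sigmoid(X @ w + b) >= threshold).astype(int)

# Hypothetical toy data: one already-centered feature, separable at 0.
X = np.array([[-1.75], [-1.25], [-0.75], [0.75], [1.25], [1.75]])
y = np.array([0, 0, 0, 1, 1, 1])

w, b = fit_logistic(X, y)
preds = predict(X, w, b)
print(preds)
```

On real data it usually helps to standardize features first, since plain gradient descent converges slowly when features are on very different scales.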

Preprocessing data = turning chaos into clarity!🚀Here is pt 3 of the LR with #Python Cheat Sheet. #MachineLearning #LogisticRegression #DataScience

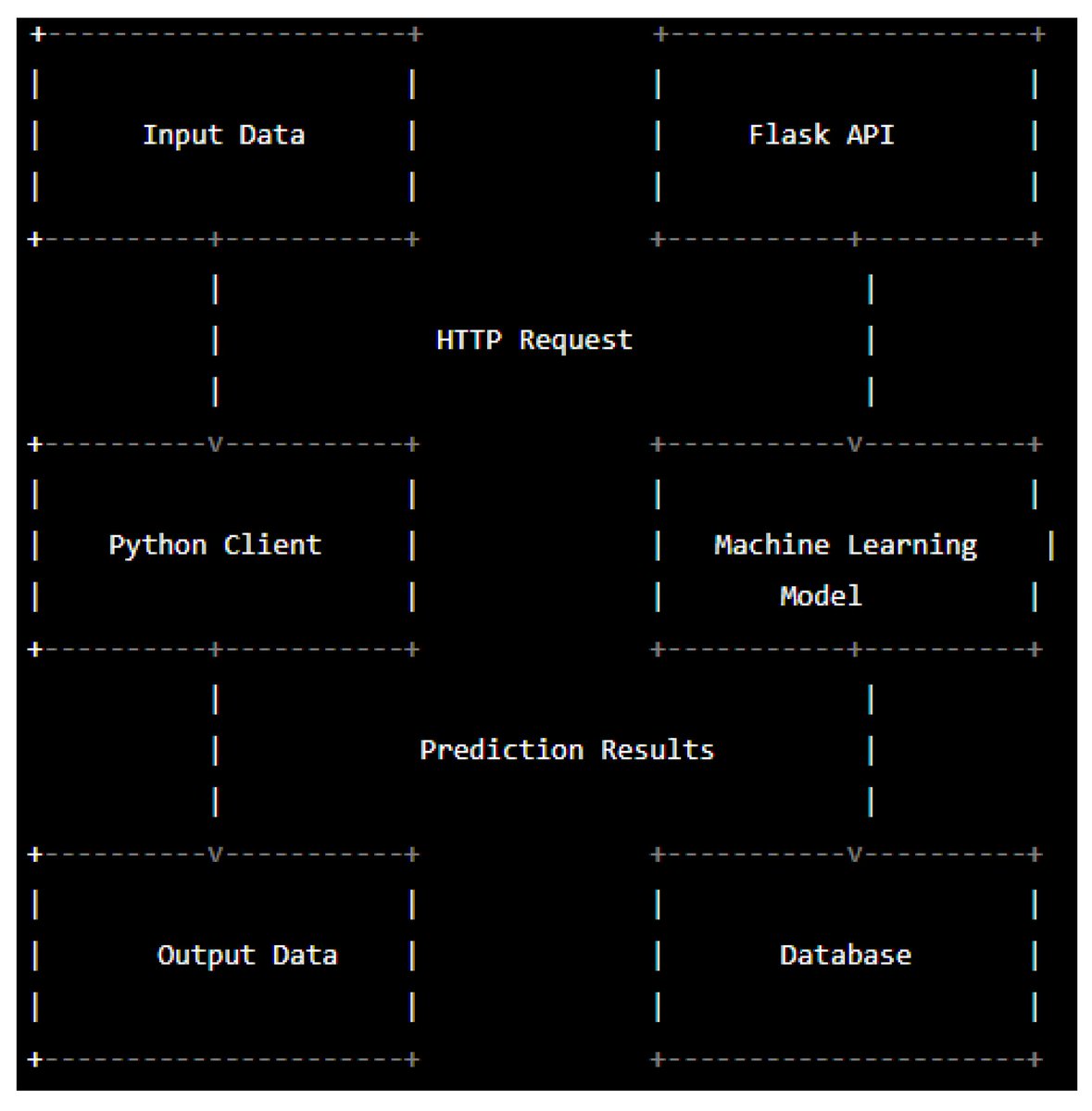

ML from Scratch — Day 17 (19/05) [~1.5 hrs] - wrote logistic regression with the help of youtube videos - read more theory and implemented basics from scratch #ML #LogisticRegression #Python

![Hidden_Neuron_'s tweet image](https://pbs.twimg.com/media/GrVj8abW0AEMAP_.png)

![Hidden_Neuron_'s tweet image](https://pbs.twimg.com/media/GrVlE8qbAAAlAWa.jpg)

This week, I delved into Classification — one of the most fundamental and widely used techniques in Machine Learning. github.com/tanjinadnanabi… #MachineLearning #Classification #LogisticRegression #mlzoomcamp #DataScience #LearningJourney #AI

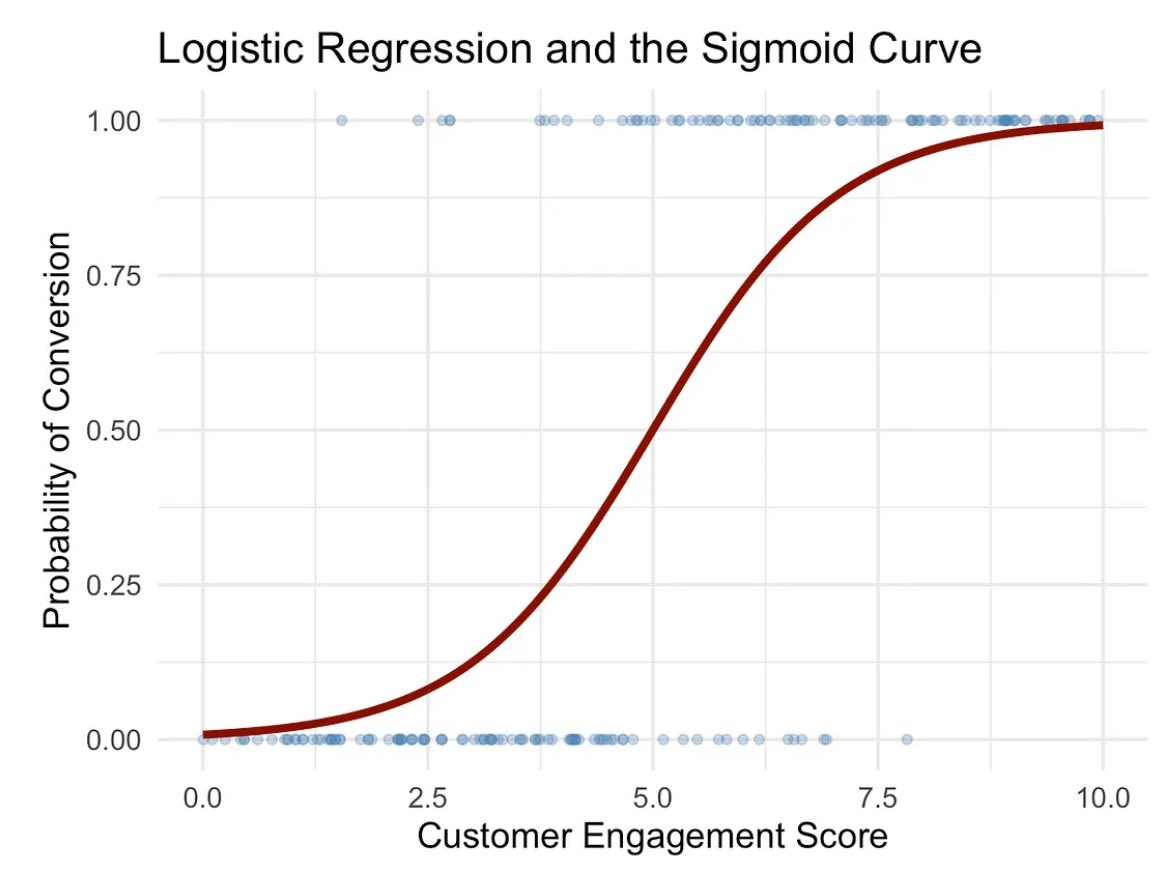

🚀 #LogisticRegression: A binary classifier that predicts the probability of a class using an S-shaped curve (sigmoid). 🎯 Outputs range from 0 to 1: If the probability ≥ 0.5, it's classified as 1; otherwise, 0. 📊 #MachineLearning #AI #DataScience
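The rule described above (sigmoid output, then a 0.5 cutoff) can be written in a few lines. This is an illustrative sketch; `classify` and its `threshold` parameter are names chosen here, and 0.5 is only the conventional default.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function: maps any real z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def classify(z, threshold=0.5):
    """Label 1 if the predicted probability reaches the threshold, else 0."""
    return 1 if sigmoid(z) >= threshold else 0

for score in (-2.0, 0.0, 2.0):
    print(score, round(sigmoid(score), 3), classify(score))
```

A score of 0 sits exactly on the boundary, so with the `>=` rule it is labeled 1.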

How Salvador Briggman Got into Crowdfunding #KickstarterAnalysis #LogisticRegression #EconClass #CrowdfundingSuccess #DataDriven #CollegeLife #ViralArticles #TechGadgets #TableTopGames #PassionProject #crowdfunding #kickstarter #salvadorbriggman #crowdcrux

🔍 The #SigmoidFunction in #LogisticRegression maps inputs to a 0-1 range with an S-shaped curve. 🌀 It converts model outputs into probabilities, classifying results as 1 (≥0.5) or 0 (<0.5). Ideal for binary classification! 📈 #MachineLearning #AI #DataScience

🤔 Despite the name, Logistic Regression is mostly used for classification. It predicts probabilities between 0 and 1. #ML #LogisticRegression #AI

📚 Today I explored Logistic Regression, from the hypothesis function to the log loss function. Loved seeing how it shifts from squared error to cross-entropy for better optimization! #MachineLearning #LogisticRegression #DataScience #100DaysOfML
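To make the squared-error versus cross-entropy contrast above concrete, here is a small per-example comparison. It is a sketch with made-up probabilities; the function names and the `eps` clipping value are assumptions.

```python
import math

def squared_error(y, p):
    """Squared error between a 0/1 label and a predicted probability."""
    return (y - p) ** 2

def log_loss(y, p, eps=1e-15):
    """Binary cross-entropy for one example; p is clipped to avoid log(0)."""
    p = min(max(p, eps), 1 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

y_true = 1
for p in (0.9, 0.5, 0.01):
    print(f"p={p:<5} squared={squared_error(y_true, p):.4f}  "
          f"log_loss={log_loss(y_true, p):.4f}")
```

Squared error never exceeds 1 here, while log loss grows without bound as a confident prediction turns out wrong. Paired with the sigmoid, log loss is also convex in the model parameters, which squared error is not.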

Understand Logistic Regression in Minutes! #LogisticRegression #MachineLearning #DataAnalysis #RProgramming #StatisticalModeling #DiabetesPrediction #DataScience #ML #ProgrammingTutorial #RegressionAnalysis

Full Video coming soon on my YT Channel! #LogisticRegression #manim #AI #Ml #learning #3B1B #ArtificialInteligence

Fine-tuning your model with regularization: Explore how different values of the regularization parameter C affect your model's AUC scores. Part of the Machine Learning Zoomcamp by DataTalks.club. #Regularization #LogisticRegression #AI
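A sweep over `C` like the one described above could be sketched with scikit-learn as follows. The synthetic dataset, the grid of `C` values, and the split sizes are assumptions for illustration; the course's own data and settings are not reproduced here.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (hypothetical), split into train and validation.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.25, random_state=0
)

auc_by_c = {}
for C in (0.01, 0.1, 1.0, 10.0):
    # In scikit-learn, C is the inverse of regularization strength:
    # smaller C means a stronger penalty on the coefficients.
    model = LogisticRegression(C=C, max_iter=1000)
    model.fit(X_train, y_train)
    scores = model.predict_proba(X_val)[:, 1]   # probability of class 1
    auc_by_c[C] = roc_auc_score(y_val, scores)

for C, auc in auc_by_c.items():
    print(f"C={C:<5} validation AUC={auc:.3f}")
```

Comparing AUC on a held-out validation set, rather than on the training set, is what makes the comparison between `C` values meaningful.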

Just wrapped up Module 3 of #MLZoomcamp2025 🚀 Built a churn prediction model using #LogisticRegression — turning data into customer retention insights. ML isn’t just math. It’s strategy. 👇 Read my journey on Medium #MachineLearning #DataScience #AI medium.com/p/predicting-c…

So a score of 0.81 means an 81% chance of churn! This simple transformation turns a linear model into a powerful binary classifier. Ready to dive deeper into machine learning? Follow @Al_Grigor for amazing tutorials and guidance. #MachineLearning #LogisticRegression

🚀 Day 91 of #100DaysOfML ✅ Dove into #LogisticRegression assumptions and regularization. Key: binary outcome, independent observations, linearity in the log-odds, low multicollinearity, large sample. L1/L2/Elastic Net prevent overfitting by penalizing coefficients. #ML #AI #DataScience

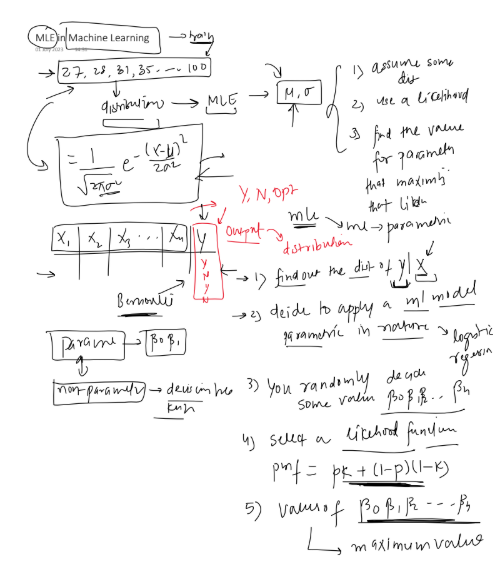

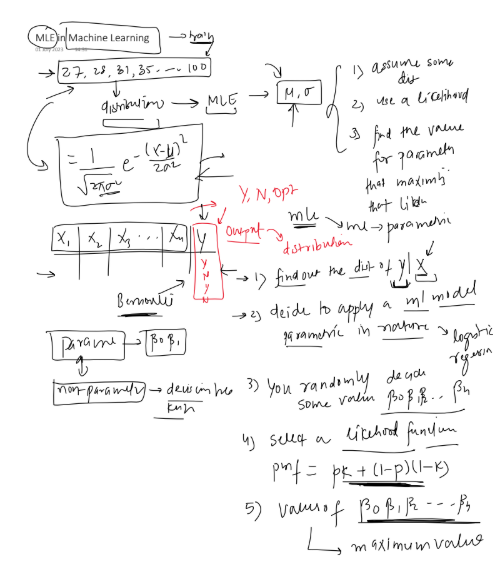
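The three penalties named in the Day 91 post can be written as additions to the unregularized loss $\mathcal{L}(w)$. This is one common textbook form; the symbols $\lambda$ (penalty strength) and $\alpha$ (L1/L2 mix) are notation assumed here, and libraries differ in how they scale these terms.

```latex
\begin{aligned}
\text{L2 (ridge):}\quad & J(w) = \mathcal{L}(w) + \lambda \sum_{j} w_j^{2} \\
\text{L1 (lasso):}\quad & J(w) = \mathcal{L}(w) + \lambda \sum_{j} |w_j| \\
\text{Elastic Net:}\quad & J(w) = \mathcal{L}(w)
  + \lambda \Big( \alpha \sum_{j} |w_j| + (1 - \alpha) \sum_{j} w_j^{2} \Big)
\end{aligned}
```

L1 tends to push some coefficients exactly to zero, L2 shrinks them smoothly, and Elastic Net blends the two.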

🚀 Day 90 of #100DaysOfML ✅ Dove into #LogisticRegression & MLE 🔍. Sigmoid models probabilities; training maximizes likelihood (minimizes log loss) using gradient descent. MLE estimates parameters, links to loss functions, aids interpretability & model comparison. #ML #AI #DataScience
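The link between maximizing likelihood and minimizing log loss mentioned above follows in a few lines. The notation ($x_i$, $y_i$, $w$, $b$, $n$) is assumed here for illustration.

```latex
\begin{aligned}
p_i &= \sigma(w^\top x_i + b), \qquad \sigma(z) = \frac{1}{1 + e^{-z}} \\
L(w, b) &= \prod_{i=1}^{n} p_i^{\,y_i} (1 - p_i)^{1 - y_i} \\
\log L(w, b) &= \sum_{i=1}^{n} \big[ y_i \log p_i + (1 - y_i) \log (1 - p_i) \big] \\
\text{log loss} &= -\frac{1}{n} \log L(w, b)
\end{aligned}
```

Because the logarithm is monotonic and the factor $-1/n$ only flips and rescales the objective, the parameters that maximize the likelihood are the same ones that minimize the log loss.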

Just built a logistic regression model to predict if a user will purchase a product after seeing an ad. By turning salary into binned categories, I was able to capture non-linear patterns and boost test accuracy from ~86% to ~95% github.com/Ravi0529/purch… #LogisticRegression
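Binning a numeric feature such as salary, as described above, could be sketched like this. The bin edges and helper names are hypothetical; the linked repository's actual bins and accuracy figures are not reproduced here.

```python
from bisect import bisect_right

# Hypothetical bin edges in salary units.
BIN_EDGES = [30_000, 60_000, 90_000]

def salary_bin(salary):
    """Bin index: 0 for < 30k, 1 for [30k, 60k), 2 for [60k, 90k), 3 for >= 90k."""
    return bisect_right(BIN_EDGES, salary)

def one_hot(index, n_bins=len(BIN_EDGES) + 1):
    """Encode a bin index as a 0/1 indicator vector."""
    return [1 if i == index else 0 for i in range(n_bins)]

salaries = (25_000, 45_000, 78_000, 120_000)
features = [one_hot(salary_bin(s)) for s in salaries]
for s, f in zip(salaries, features):
    print(s, f)
```

Each bin then gets its own coefficient, so the model can fit a step-shaped relationship between salary and the log-odds instead of a single straight line. With an intercept in the model, one indicator column is usually dropped to avoid perfect collinearity.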

👉👉 Spatial Prediction of #Landslide Susceptibility Using #LogisticRegression (LR), #FunctionalTrees (FTs), and #RandomSubspaceFunctionalTrees (RSFTs) for Pengyang County, China ✍️ Hui Shang et al. 📎 brnw.ch/21wNu4N

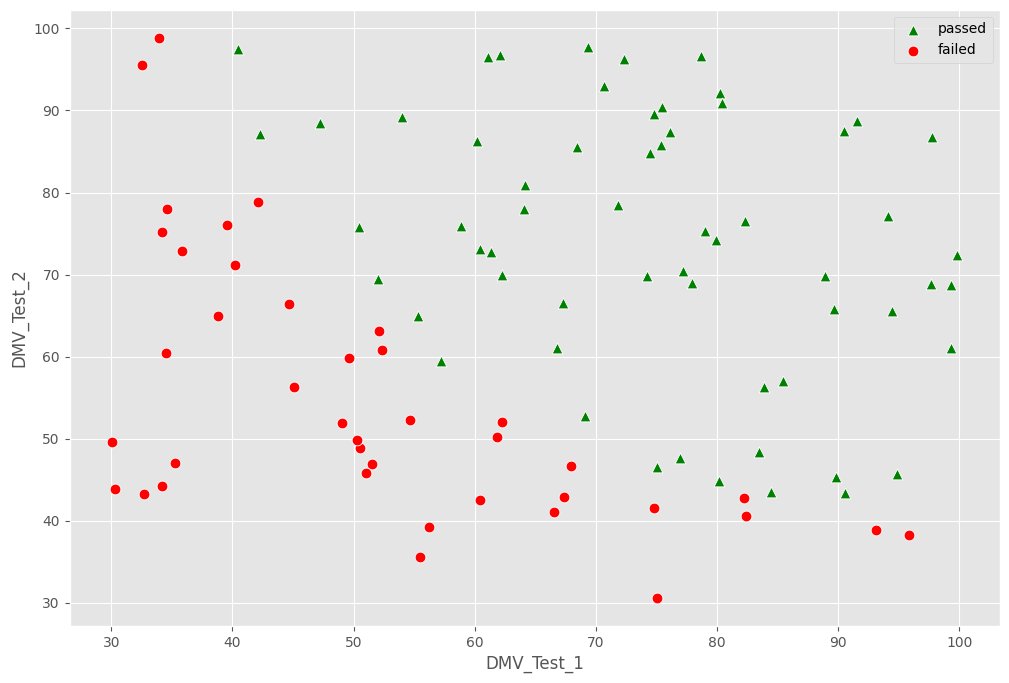

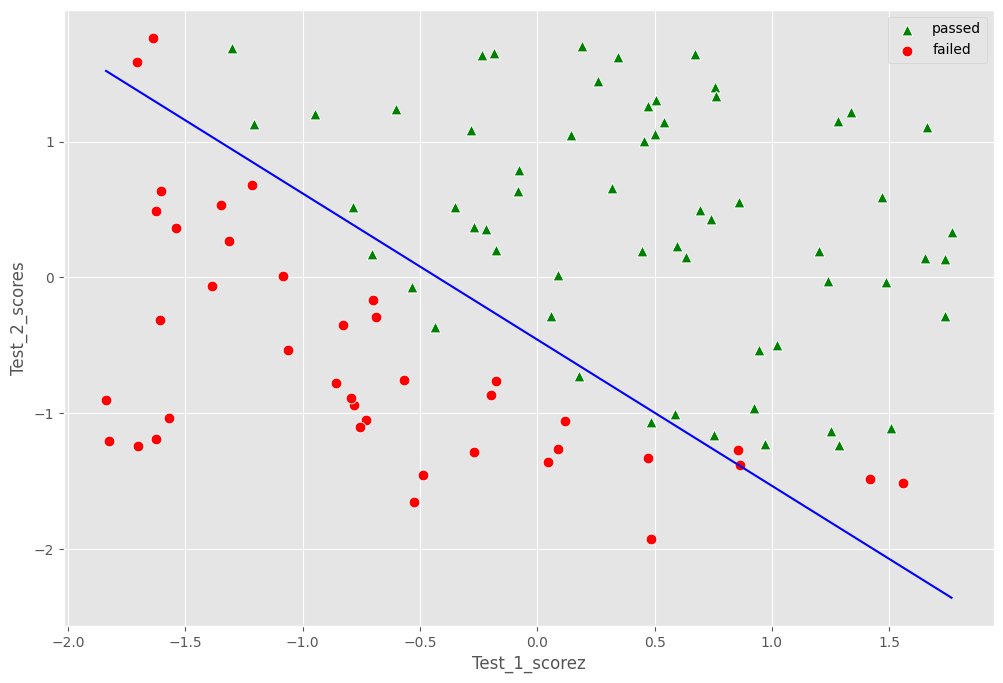

Day 42 of #LearningWithLeapfrog! 🎉 Explored logistic regression and data visualization. Plotted test scores, computed cost and gradient, implemented gradient descent, and visualized the decision boundary. #LogisticRegression #MachineLearning #60DaysofLearning2024 #LPPD42

Should You Click ‘Yes’ or ‘No’? Predicting Choices with Logistic Regression #DataScience #logisticregression #medium medium.com/p/should-you-c…

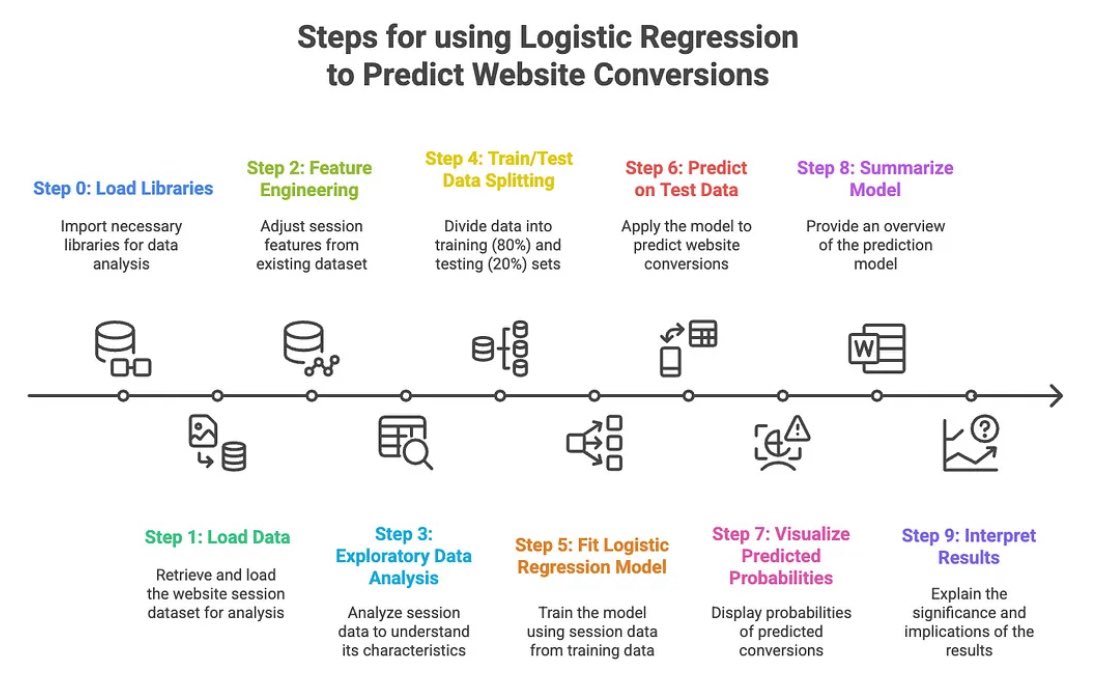

Can you predict marketing outcomes? 🤔 I used logistic regression in R to model website conversions by traffic source. 📊📈 Read my article 👉 blog.marketingdatascience.ai/what-happens-n… 🐝 @gtomsanalytics @GATechScheller #RStats #MarketingAnalytics #LogisticRegression

Built a logistic regression model to predict if someone will buy based on age & salary. Got 92% chance of buying for age 41, salary 78k. Accuracy: 75% #machinelearning #python #logisticregression #100daysofcode

⚙️ How is it done? A basic but powerful technique: Logistic Regression ✔️ Predicts class probabilities ✔️ Uses a sigmoid function (values between 0 & 1) ✔️ Trained on labeled data + tested on new data Simple idea big impact. #MLModels #LogisticRegression #AITools #MachineLearning

Unlocking Insights with Logistic Regression 📈🔍. A cornerstone of statistical modeling, logistic regression provides powerful binary classification, essential for fields like medicine, finance, and marketing. #LogisticRegression #DataScience

Logistic regression predicts the likelihood of an event using mathematical analysis of variables, yielding results between 0 and 1 for binary outcomes. #LogisticRegression #PredictiveModeling #DataScience #MachineLearning #Statistics #BinaryOutcomes #PredictiveAnalytics #Data

🚀 Implemented Logistic Regression from scratch in JavaScript — no libraries, just pure math and logic! 🧠 Great hands-on learning experience to understand how ML models actually work under the hood. #ml #machinelearning @python #logisticregression @ChaiCodeHQ @Hiteshdotcom
