#slurm search results

We are pleased to announce the availability of Slinky version 1.0.0-rc1. Slinky is SchedMD’s set of components to integrate Slurm in Kubernetes environments. Our landing page is here: slinky.ai #kubernetes #slurm #dra #ai #training #inference #hpc

schedmd.com

Why Choose Slinky - SchedMD

Slinky - a set of projects that works to bring the worlds of Slurm and Kubernetes together. Efficient resource management starts here.

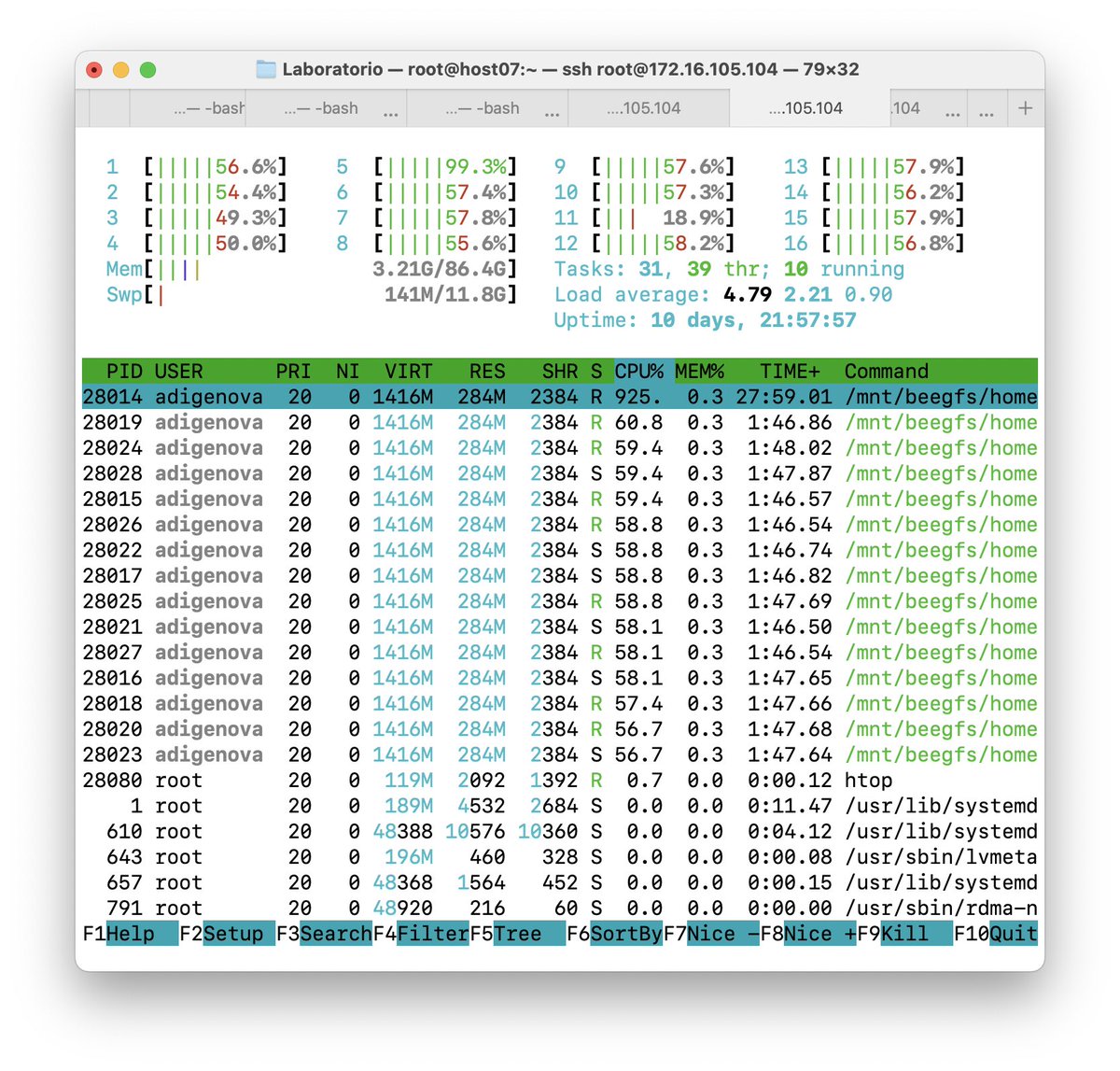

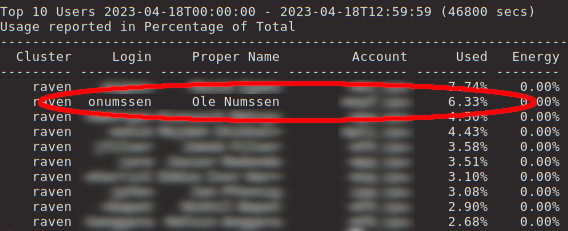

I integrated a tool that shows resource usage of running #Slurm jobs, if you are allowed to ssh into the running job. It’s not a core part of the #HPC object browser, but seems pretty useful, especially for “new to HPC” folks. Short demo video below.

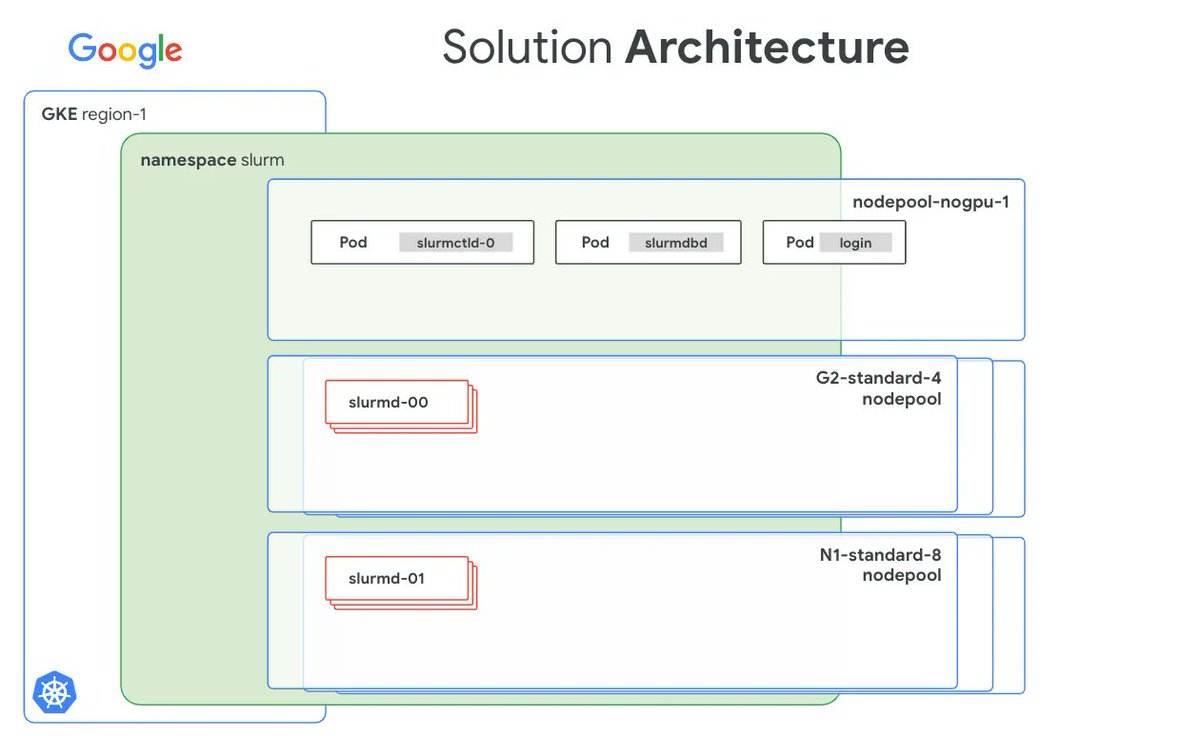

Do you use Slurm and want to deploy on Google Kubernetes Engine (GKE)? We have a solution for you. With Daniel Marzini we open sourced a full solution guide for running #Slurm on #GKE with Terraform code, the Docker image and step by step guide. Check it out…

⚡️ 𝗡𝗲𝘄 𝗘𝘅𝗽𝗿𝗲𝘀𝘀 𝗟𝗶𝘀𝘁𝗶𝗻𝗴! 🔥 Entered Trending | @slurmbeast Slurm | #SLURM ntm.ai/token/6tRtbqb4… 📊 LIQ: $34.22K | MC: $99.75K #SOL #Crypto #Bitcoin

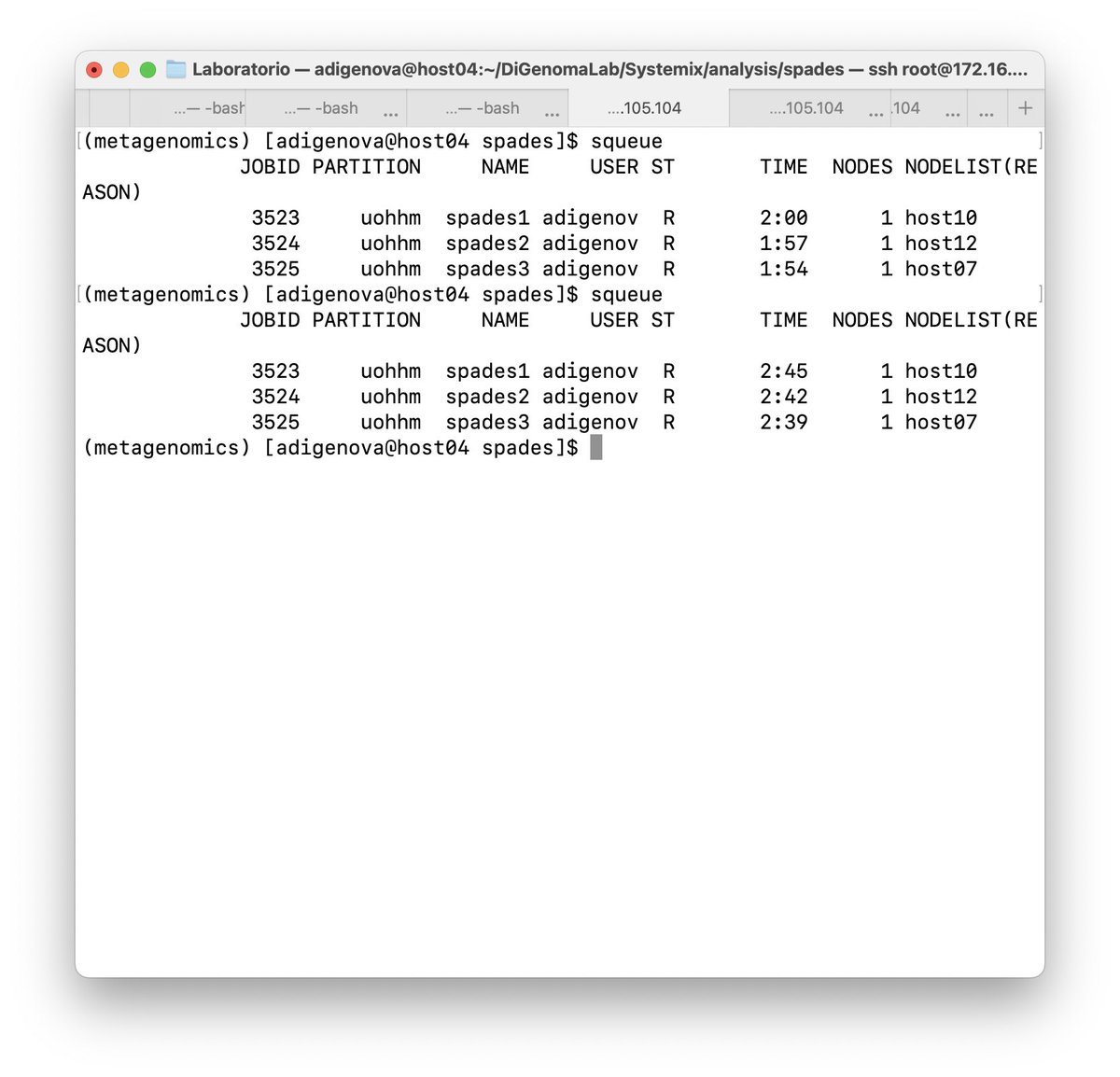

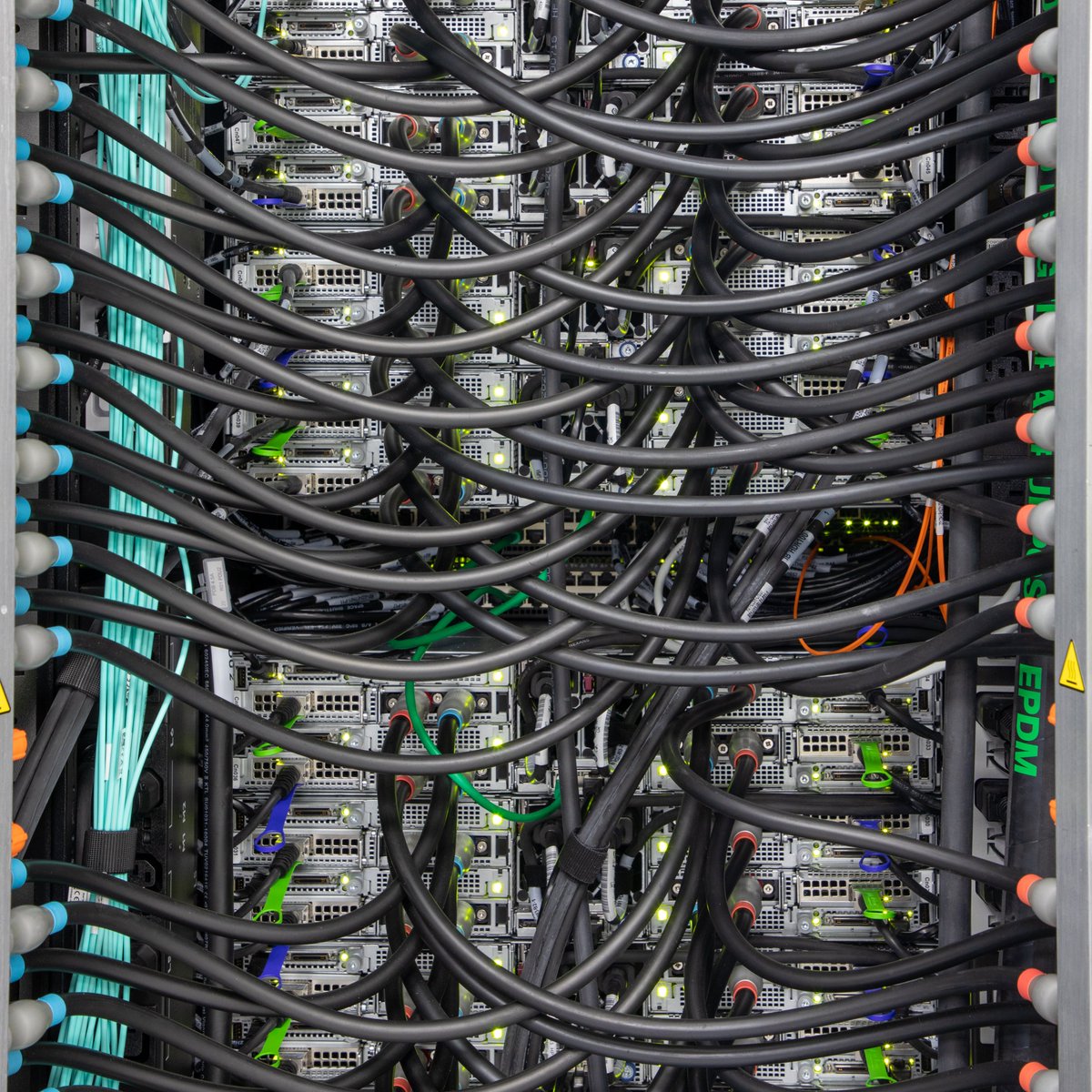

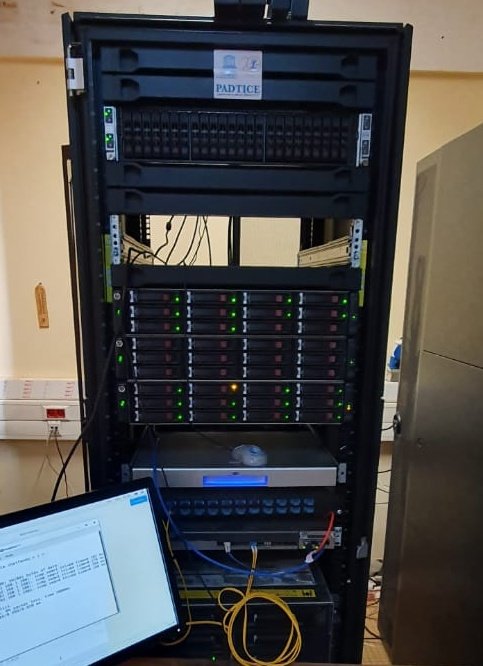

The @ici_uoh computer cluster running #BeeGFS, #SLURM, and #Ondemand is computing its first jobs!!! The cluster has 1000 cores, 6 TB RAM, and 200 TB storage. More applications coming!!! @ici_uoh @uohiggins 🖥️🎉 🎉 🎉

Added an object-class-specific details view to the #HPC objects browser. It's customizable with templates (HTML/Jinja2) and can pull in instance attributes of objects. Development is progressing nicely and it's fun to explore this! 😊 The video shows #Slurm partitions and jobs.

Struggling to choose between Slurm and Kubernetes for your ML workloads? Whether you need robust job scheduling with Slurm, the flexibility of Kubernetes, or maybe both - we’ve got you covered. Read more: nscale.com/blog/choosing-… #AI #ML #Slurm #Kubernetes

Here is a short video on how to get started with the #HPC object browser and the #Slurm provider. I am looking for feedback on how the tool works in your environment. Give it a try and let me know. github.com/RobertHenschel…

🚀 Added NVIDIA GPU support to slurm-docker-cluster by github.com/giovtorres — now you can run CUDA jobs on Dockerized Slurm! Great for AI/ML testing, CI, and local HPC prototyping. Next: multi-node GPU support 👀 🔗 PR: github.com/giovtorres/slu… #HPC #Slurm #NVIDIA #Docker #AI

github.com

Add NVIDIA GPU support across Docker/Slurm + example job by andrewssobral · Pull Request #68 ·...

Add NVIDIA GPU Support to Docker + Slurm Cluster ✅ Verified on Docker Engine 28.5.1 + Slurm 25.05 with NVIDIA RTX 2070 GPUs Summary This PR adds native GPU support to slurm-docker-cluster, enabli...

📉 Kubetorch users report 98% fewer prod failures. Why? Because unlike CLI-based infra (@kubeflow, #slurm, @raydistributed , etc) that crumble on errors, Kubetorch: ✅Propagates remote exceptions in pure Python ✅Avoids cascading failures ✅Supports multiple interactive calls…

💎 DEX paid for $SLURM on PumpSwap 💎 Name: Slurm MCap: $78.7k Paid: 1 min, 32 s ago Created: 25 min, 8 s ago 🔗 CA: 6tRtbqb4pxyPRPRJTRG6cmGCNw6Uomgwkt32aw8spump #SLURM #DexPaid #PumpSwap #SOL

Tired of wrestling with SLURM setups? We wrote a step-by-step guide to deploy a powerful cluster on Crusoe Cloud — and automated it with Terraform & Ansible. Your time back, to focus on the actual AI. 🧠 Check it out: crusoe.ai/blog/how-to-de… #SLURM #HPC #AI #DevOps

📢 The migration to Slurm has been successfully completed on IT4Innovations supercomputers. Why did we switch from PBS Scheduler to Slurm, and what are the benefits of Slurm for our users? ➡️it4i.cz/en/about/infos… #Slurm #CzechHPC @eINFRA_CZ

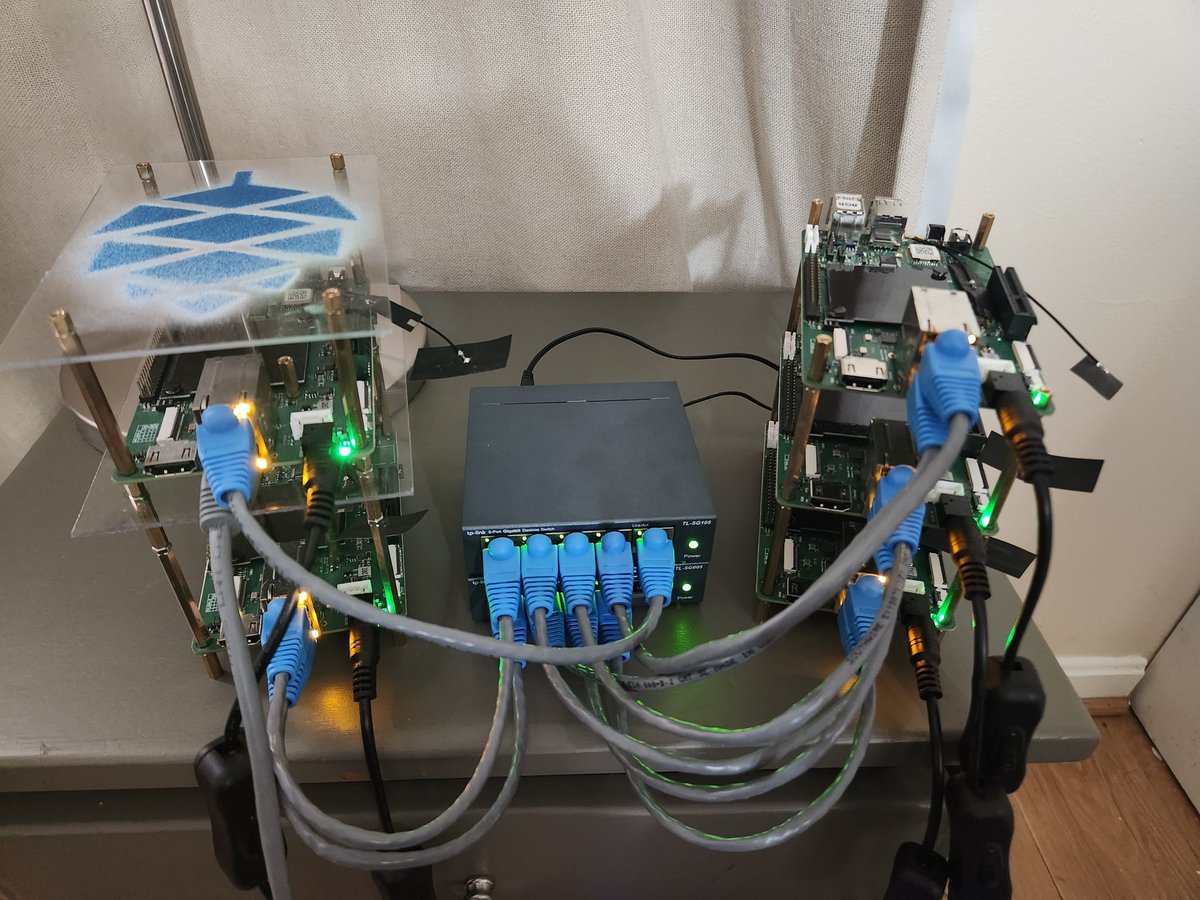

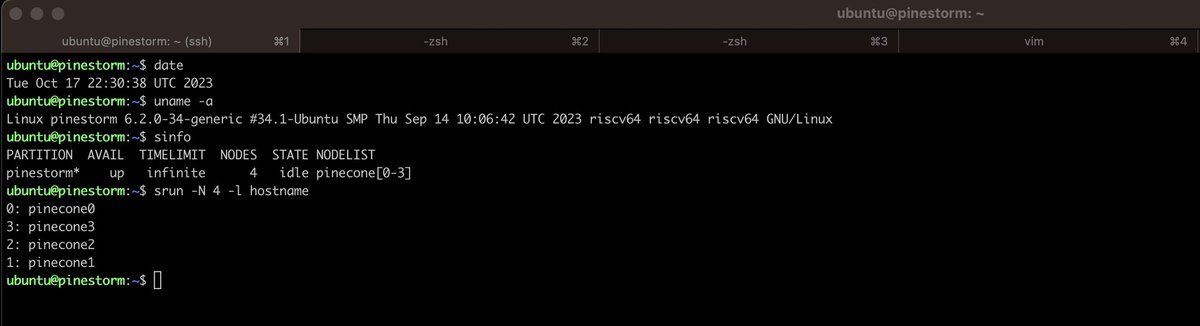

Pinestorm expands to 4 compute nodes! Pinestorm is a @risc_v #beowulfcluster built with @thepine64 #star64's. It runs #slurm, #ubuntu, and #nfs. #hpc #riscv #pine64 #pinestorm @beofound

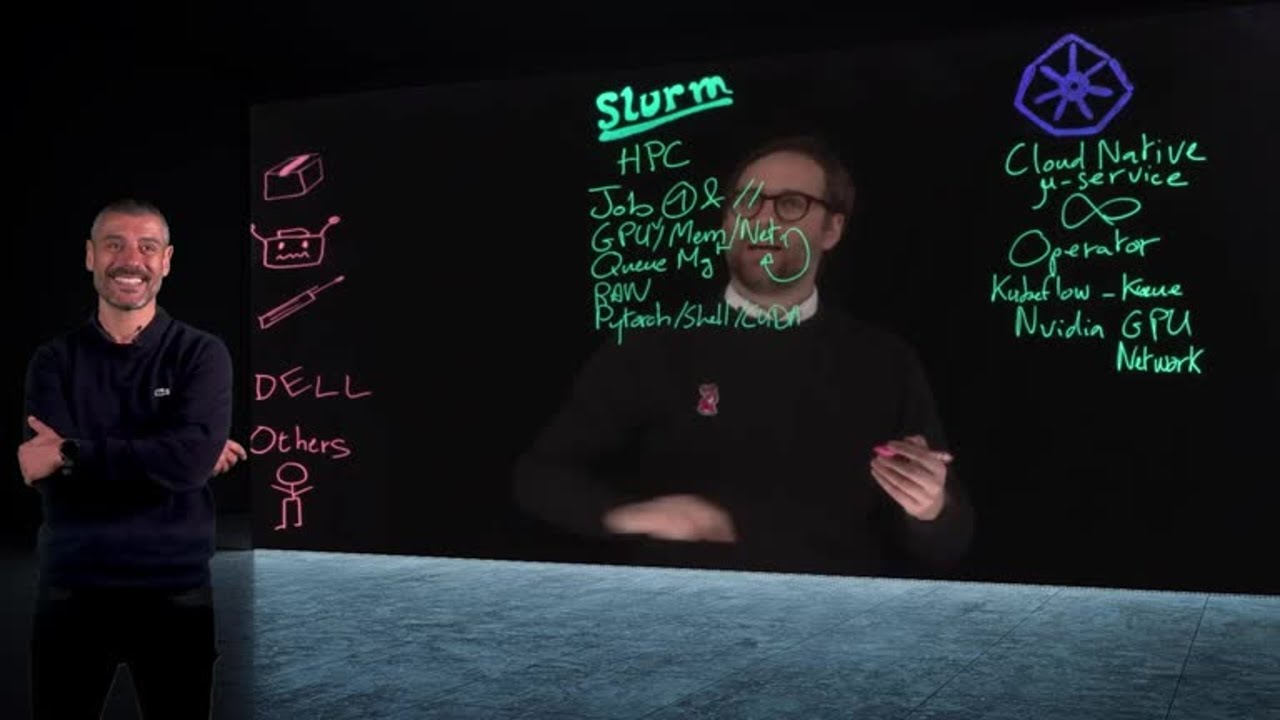

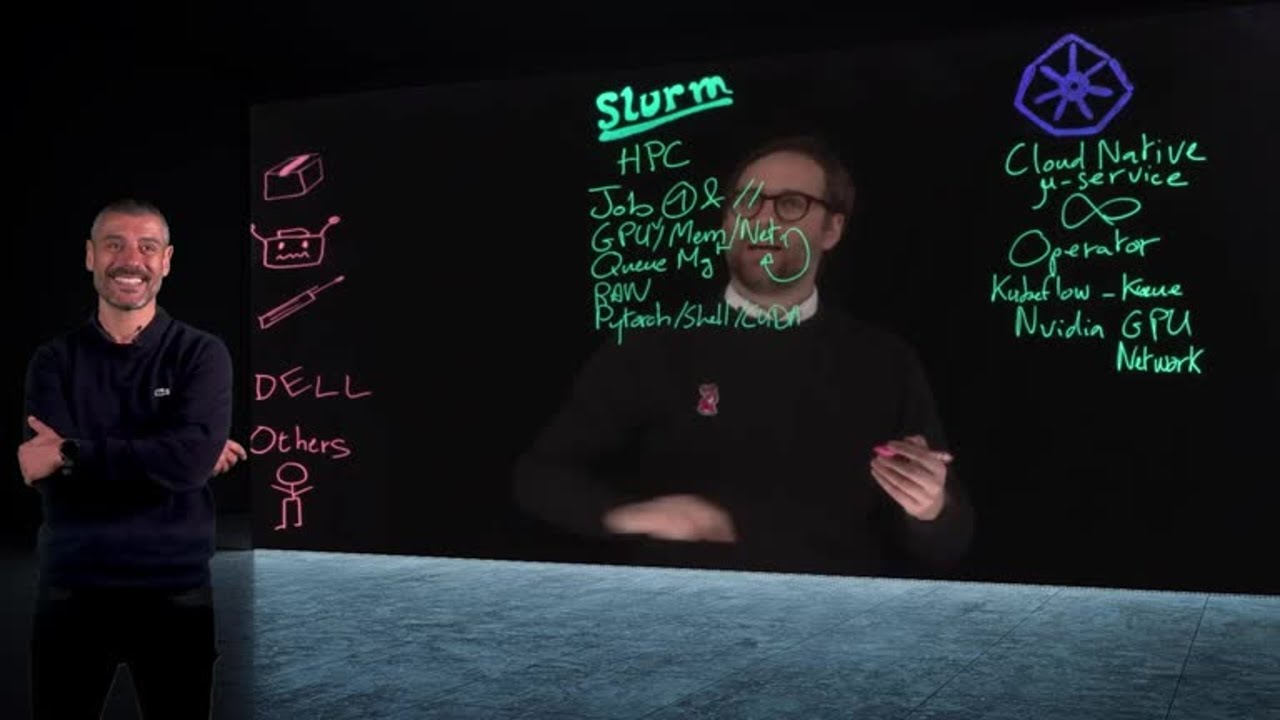

#Slurm & #Kubernetes are becoming more mainstream for running #AI workloads. Despite having the same goals, these two platforms offer different possibilities, and the choice can be difficult. Let’s look at the key elements that can help you choose youtu.be/qgzYuKfQV5o?si…

youtube.com

YouTube

Slurm vs Kubernetes : What to choose to run my AI workloads?

🗓️ Nebius monthly digest, September 2024 In September, we announced the opening of our French region, launched the world’s first open-source #K8s operator for #Slurm as well as Nebius AI Studio, a product for #GenAI builders — and there’s more: eu1.hubs.ly/H0cT_000

Our booth is all set up at #SC23! Stay tuned for speaker schedules for Tuesday-Thursday. It's gonna be a busy week at Booth 1373‼️ @Supercomputing #CWatSC23 #Slurm #Kubernetes

We are pleased to announce the availability of Slinky version 1.0.0! Slinky is SchedMD's set of components to integrate Slurm in Kubernetes environments. Our landing page is here: slinky.ai #kubernetes #slurm #dra #ai #training #inference #hpc

🚀 #AWS PCS now supports managed #Slurm REST API—submit jobs and manage clusters via HTTP. Enables web portals, CI/CD integration, Jupyter workflows, no SSH required. Available now #HPC docs.aws.amazon.com/pcs/latest/use…
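As a rough illustration of what "submit jobs via HTTP" can look like, here is a minimal sketch that only builds (and does not send) a job submission request in the style of Slurm's `slurmrestd`. The base URL, user name, token, partition, and job name are hypothetical placeholders, and the API version `v0.0.40` and payload shape are assumptions that depend on the Slurm release; the post does not describe how AWS PCS exposes or authenticates its endpoint, so check the linked documentation before relying on any of this.

```python
import json
import urllib.request


def build_submit_request(base_url, user, token, script, partition,
                         api_version="v0.0.40"):
    """Build (but do not send) a slurmrestd-style job submission request.

    Assumptions: the endpoint path, JWT header names, and payload layout
    follow upstream slurmrestd for the given api_version; a managed
    service may differ.
    """
    payload = {
        "script": script,
        "job": {
            "name": "hello",  # hypothetical job name
            "partition": partition,
            "current_working_directory": "/tmp",
            # Assumed to be a list of KEY=VALUE strings in this API version.
            "environment": ["PATH=/usr/bin:/bin"],
        },
    }
    return urllib.request.Request(
        url=f"{base_url}/slurm/{api_version}/job/submit",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-SLURM-USER-NAME": user,
            "X-SLURM-USER-TOKEN": token,
        },
        method="POST",
    )


# Hypothetical values; nothing is sent over the network here.
req = build_submit_request(
    "http://localhost:6820", "alice", "JWT-PLACEHOLDER",
    "#!/bin/bash\nsrun hostname\n", "debug",
)
print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen(req)` would send it; that step is left out so the sketch can be run without a cluster.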

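Making full use of GPUs with Docker × Slurm! We tested ways to make local LLMs more efficient 💡 We also thoroughly analyzed GPU allocation when jobs are submitted concurrently 👀 👉 na2.hubs.ly/H01XvDn0 #GPU #Docker #Slurm #LLM #AI開発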

🚀 Slurm-web v5.2.0 is here! 🎉 Discover the new advanced core allocation visualization, direct URLs for fullscreen cluster views, and failed job filtering—plus, we’ve adopted the MIT License for more flexibility! 🔗 rackslab.io/en/blog/slurm-… #HPC #AI #Slurm #OpenSource #WebUI

After showing last week how to monitor job resources in #Slurm, the logical next step is to enable job submission. The video shows how to submit an interactive job in any partition by simply right-clicking the partition. The #HPC object browser is evolving nicely. 😀

Over 900 organizations in 70+ countries now use Slurm-web, our open-source interface for Slurm — from academia to cloud-native HPC. Read more on: rackslab.io/en/blog/slurm-… #hpc #opensource #slurm #webui #stats

Continuing the modeling/visualizing of #HPC objects. The video shows grouping objects by properties. #Slurm jobs are shown, but this is a generic capability of the browser, works with any property of any object. Groupings show in the breadcrumb bar for easy navigation and history

Scalable Engine and the Performance of Different LLM Models in a SLURM based HPC architecture #LLM #HPC #SLURM hgpu.org/?p=30156

We did a breakdown of the top three resource management systems, 🚚📦here’s what we’ve found: From scalability to advanced features, each scheduler has its strengths. Learn more 👉 bit.ly/45QEyoe #HPC #CloudComputing #Slurm #PBS #LSF #VantageCompute

🚀 New blog: #Slurm vs PBS vs LSF: Choosing the Right #Scheduler In today’s landscape, schedulers are more than technical plumbing. They shape how organizations balance #performance, #scalability, and cost. #hpc #gpus #cpus #aiinfra bit.ly/45QEyoe

#linuxmemes #hpc #slurm #pbs #workflowmanagement #nextflow #snakemake #cloudcomputing #linuxserver #bash #Bioinformatics #research #academia #researchpapers #researchproject #phdlife #phdstudent #phdstruggles #dataAnalysis #labrats #genomics #computationalbiology #biologymemes

Guys and gals! We negotiated for a very long time and finally reached a deal! In October, my ultimate #Linux course for beginners and beyond will be released on the #Slurm platform! The video will be produced by our own RED MAGIC team!

How to use minimum memory for floating numbers ndarray in Python? stackoverflow.com/questions/6764… #numpy #hpc #slurm #python

Ideally, a microservice is a piece of code small enough that you would not mind throwing it away and rewriting it in two weeks at most. #slurm #devops Two weeks? The audience tensed up a little, but that is the ideal case...

Our participants today learnt about installing #Singularity #conteners and setting up #slurm configuration with our #HPC #admin Ndomassi Tando. An intensive one-week training at Joseph Ki-Zerbo University in Ouagadougou! #Bioinformatics #cluster @PathoBios @ItropBioinfo @ird_fr

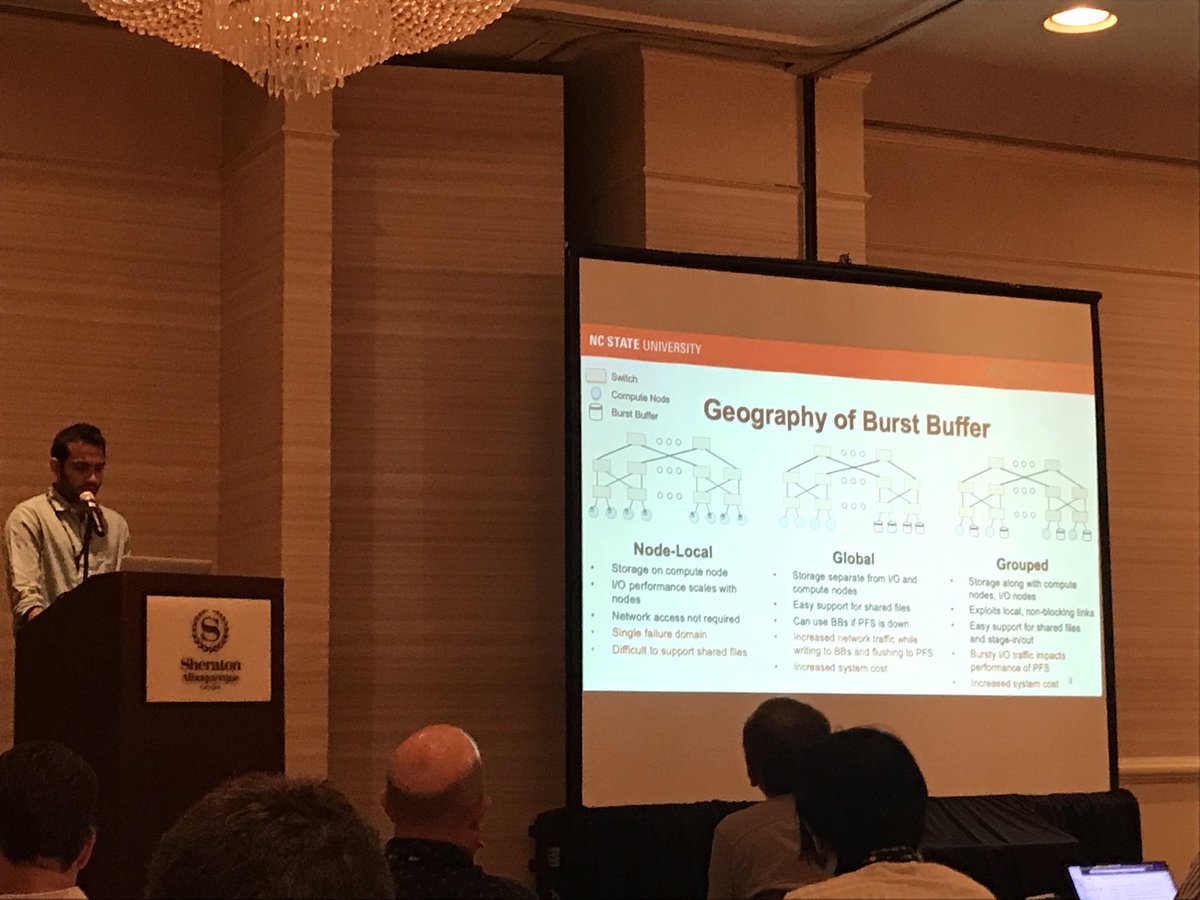

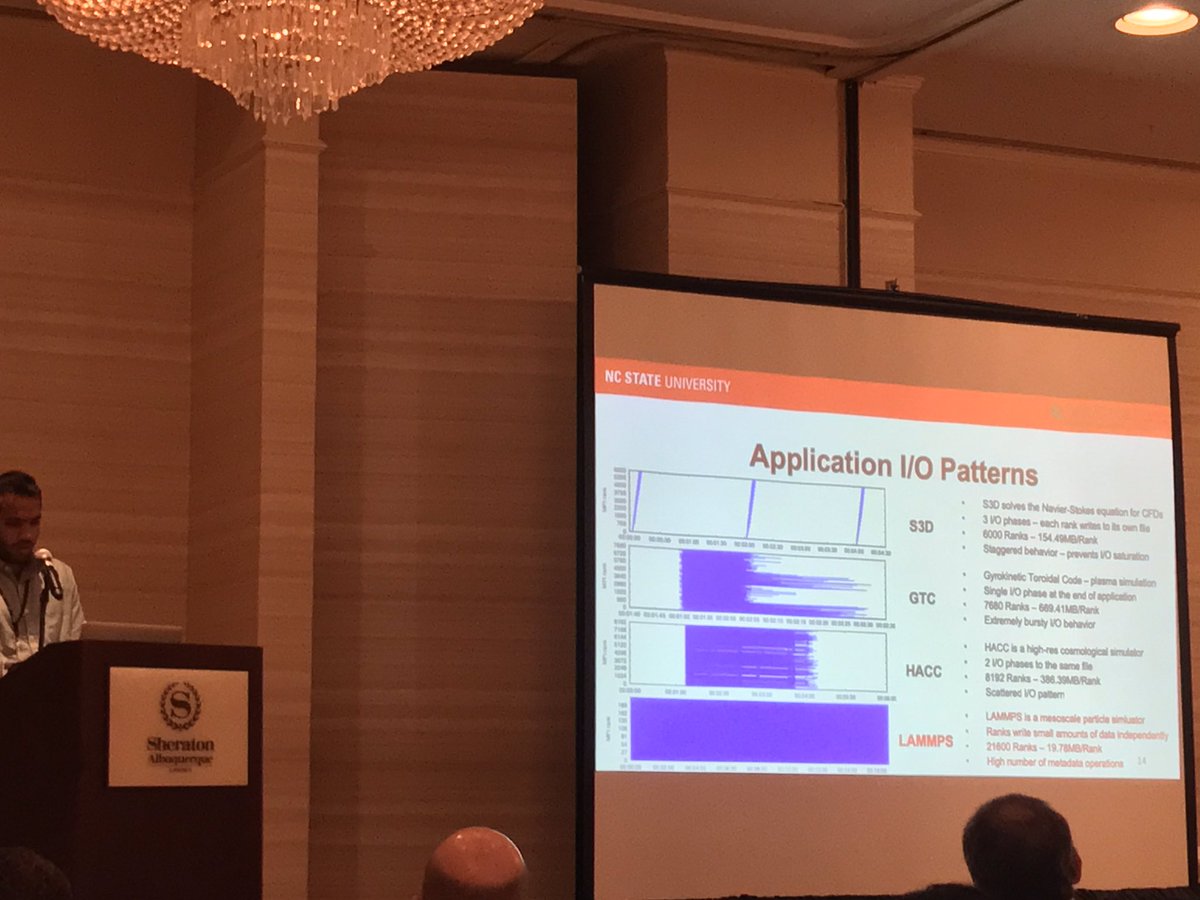

One of the 3 best papers at #cluster2019. Does #SLURM or other workload managers support the placement of co-scheduled burst buffer jobs? #MakeIOGreatAgain @geomark @glennklockwood

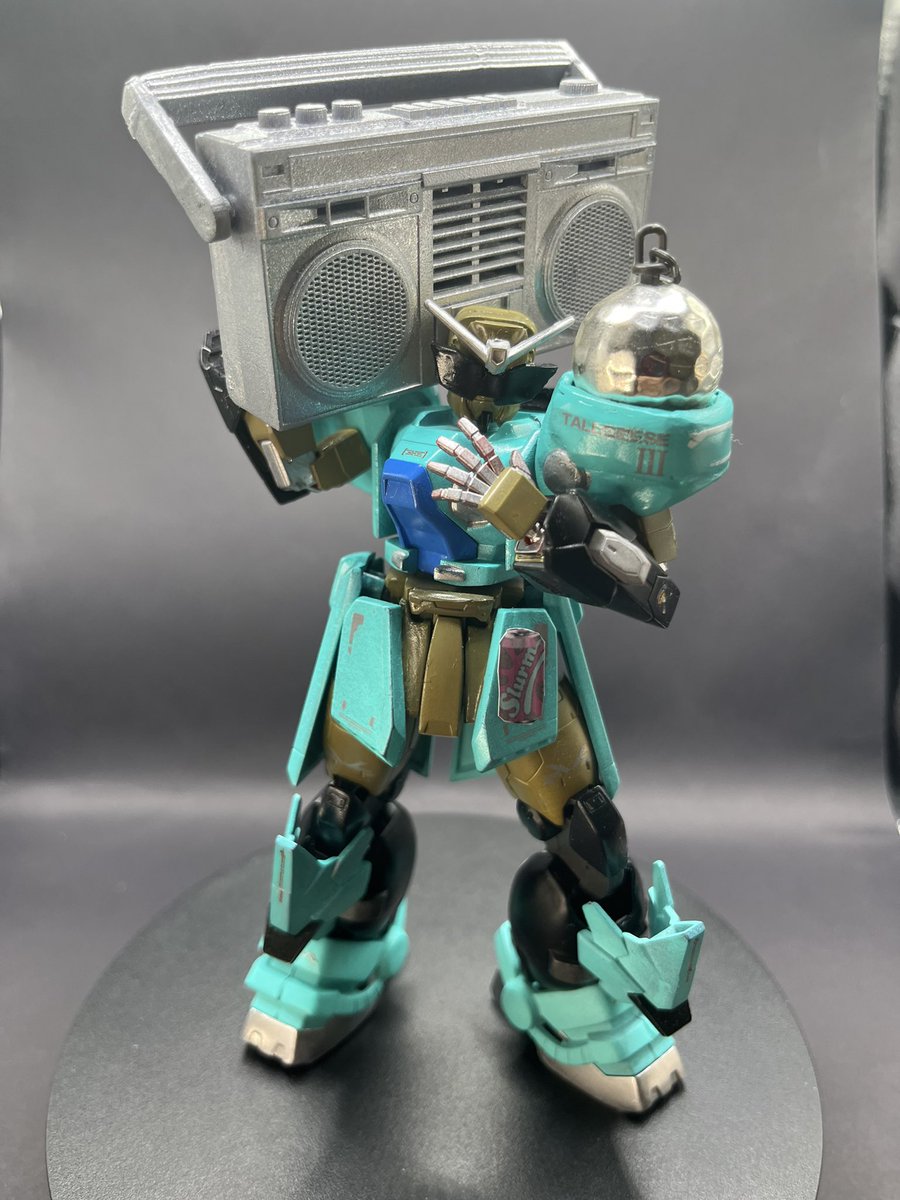

Started watching Futurama and had to draw myself as one of the characters 😎🤖 #Futurama #Slurm #SlurmSoda #MattGroening #DigitalIllustration #Illustration #DigitalArt #ToonMe #Kinda #Cartoon #Photoshop #Drawing #Art #Artist #StayHome #Quarantivities

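💡 We have released the latest features for #Slurm on Google Cloud, including one-click hybrid configuration, Google Cloud Storage data migration support, real-time configuration updates, Bulk API support, and improved error handling. We explain the new features in detail. goo.gle/3mbA58U #gcpja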

"You can beat developers for bugs, or you can adopt SRE": what was discussed at the Slurm (Слёрм) meetup #slurm #sre #реклама xakep.ru/2021/05/05/slu…

Today @openinfradev #openinfrasummit don't miss: @stackhpc’s very own @johnthetubaguy, @priteau and Steve Brasier explore @OpenStack Blazar describing how #k8s and #slurm platforms can be used to provide demand-driven infrastructure autoscaling in #hpc and #ai environments.
