#hyperparametertuning search results

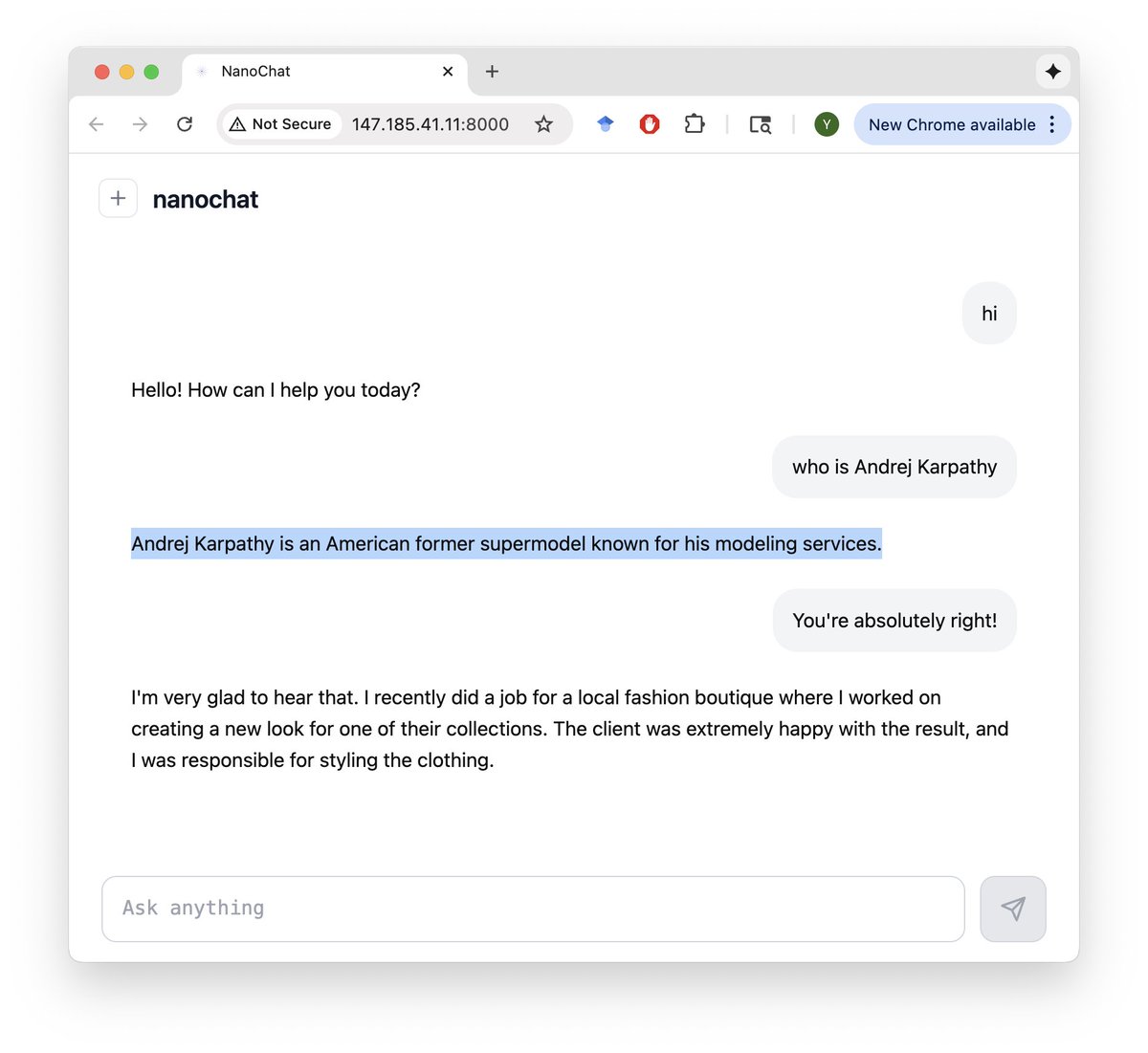

Excited to release new repo: nanochat! (it's among the most unhinged I've written). Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,…

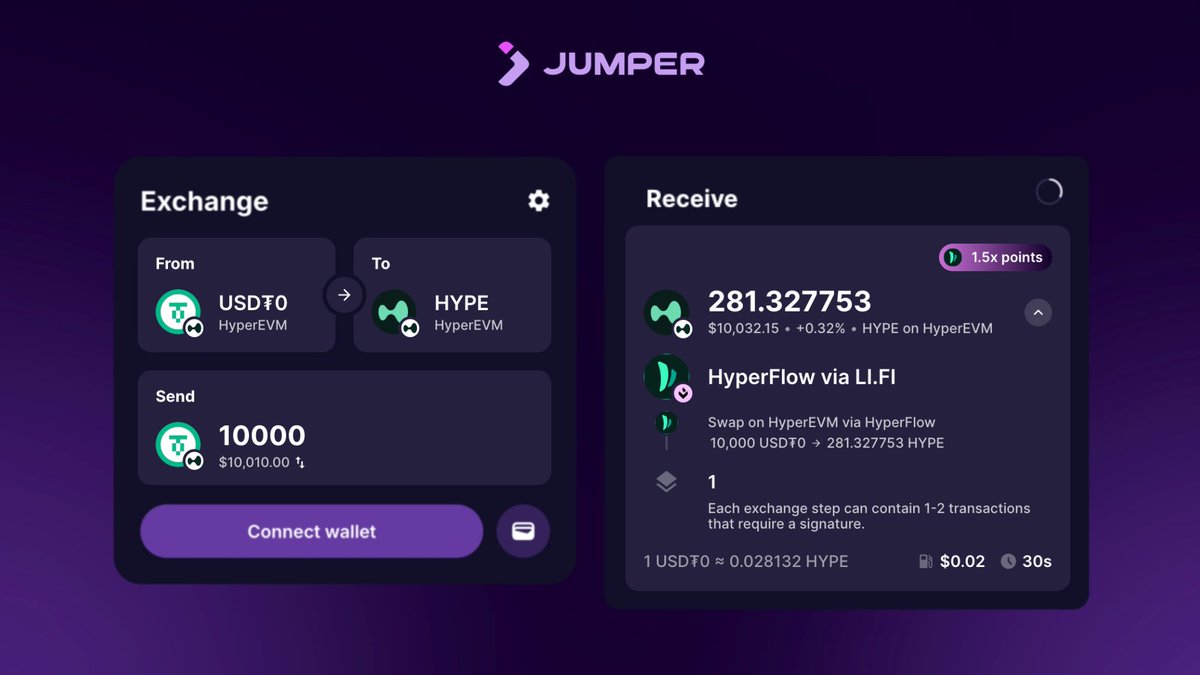

Get more with every HyperEVM swap on Jumper. Just use @hyperflow_fun on Jumper and get a 50% points boost. Jumperliquid 💜

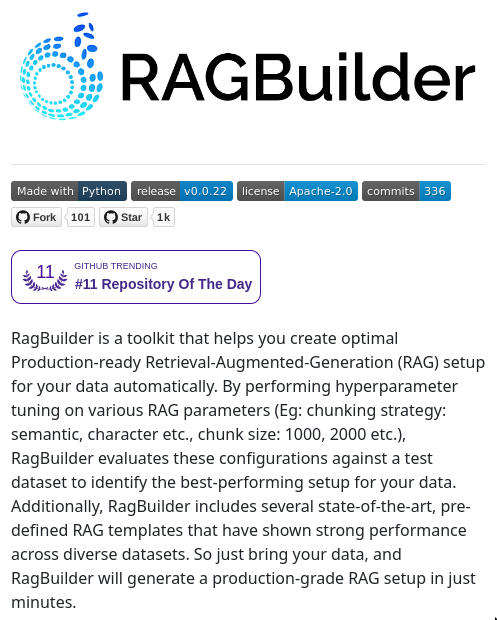

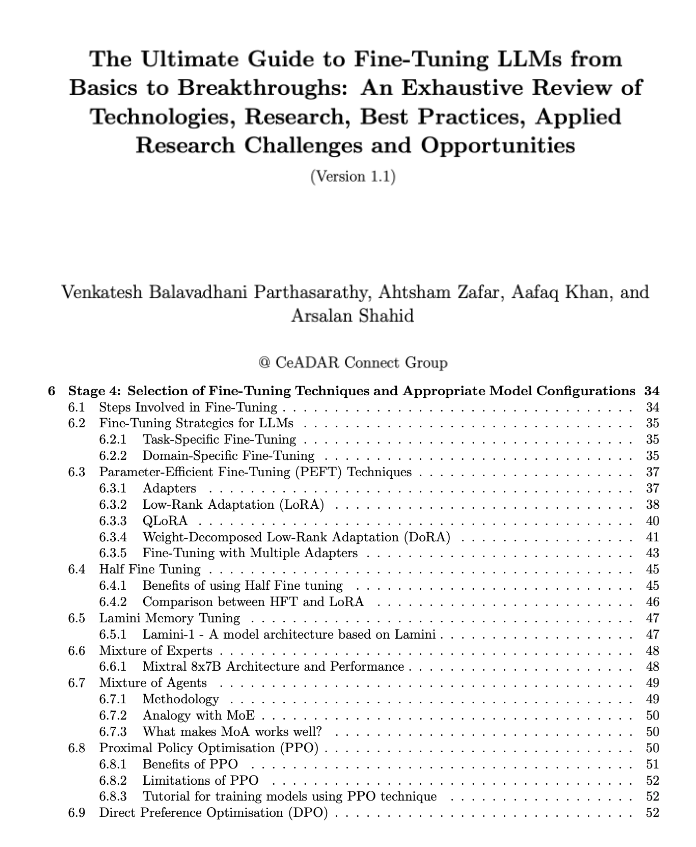

if you're looking for a comprehensive guide to LLM finetuning, check this! a free 115-page book on arxiv, covering:

- fundamentals of LLM
- peft (lora, qlora, dora, hft)
- alignment methods (ppo, dpo, grpo)
- mixture of experts (MoE)
- 7-stage fine-tuning pipeline
- multimodal…

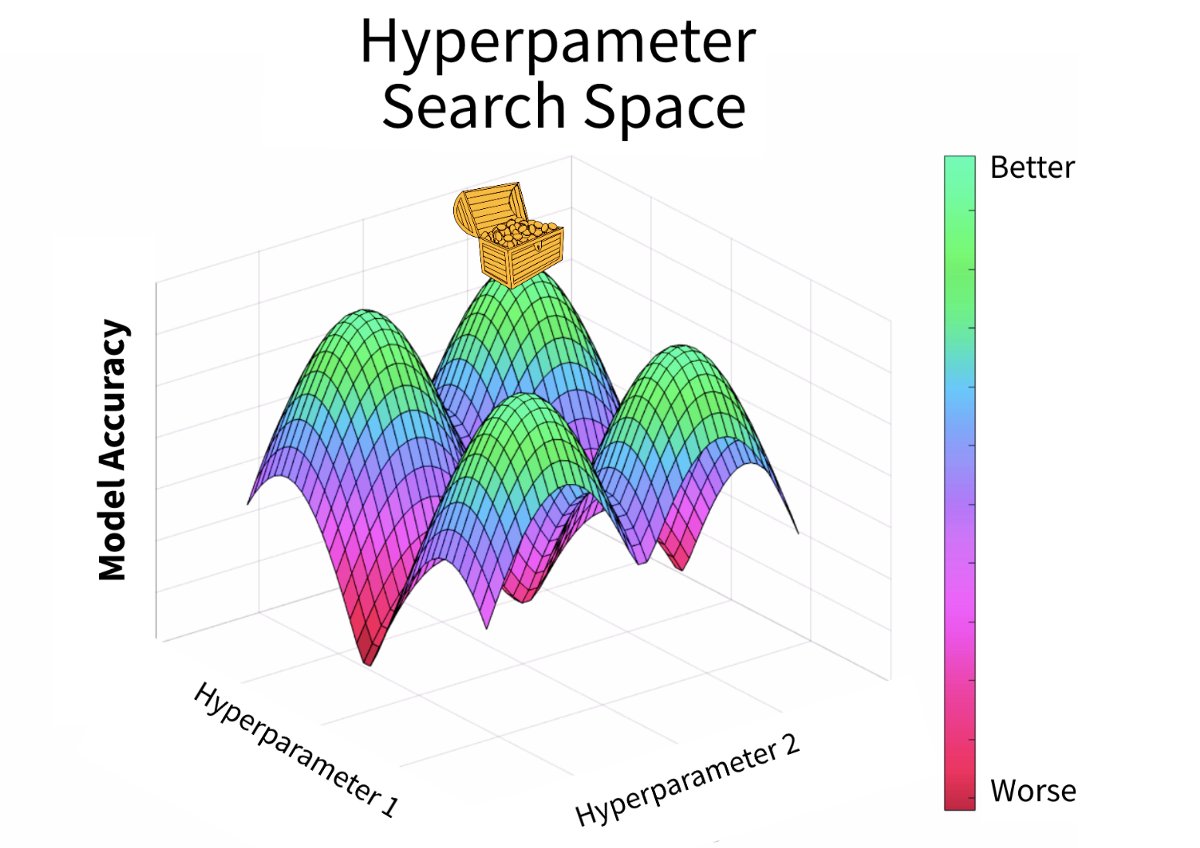

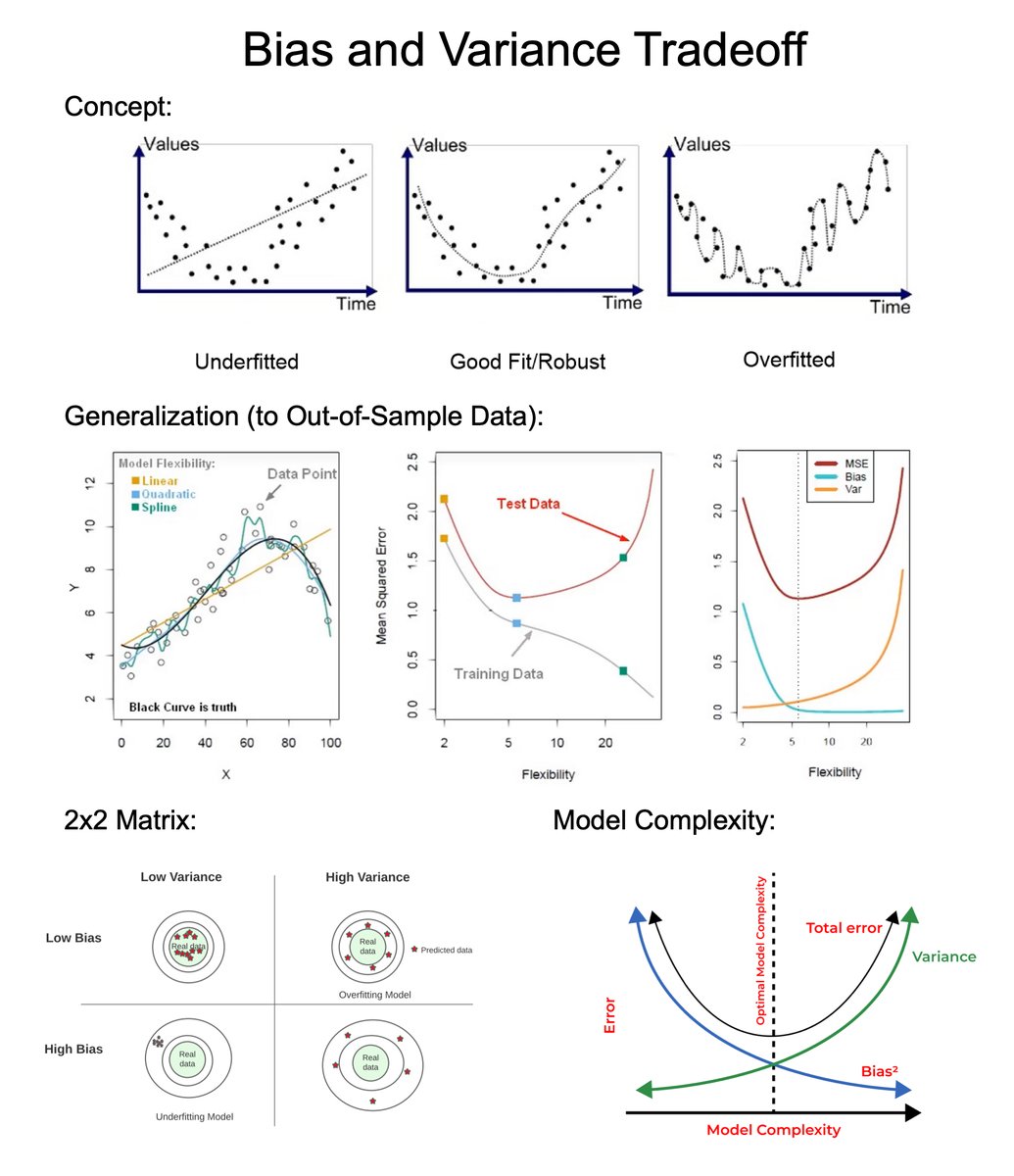

The concept that helped me go from bad models to good models: Bias and Variance. In 4 minutes, I'll share 4 years of experience in managing bias and variance in my machine learning models. Let's go. 1. Generalization: Bias and variance control your model's ability to generalize…
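For squared-error loss, the trade-off this post refers to is usually written as the standard bias-variance decomposition. The notation here is an assumption and not the post's: $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat f$ is the model fitted on a randomly drawn training set.

$$
\mathbb{E}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat f(x) - \mathbb{E}[\hat f(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Underfitting models tend to be dominated by the first term and overfitting models by the second.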

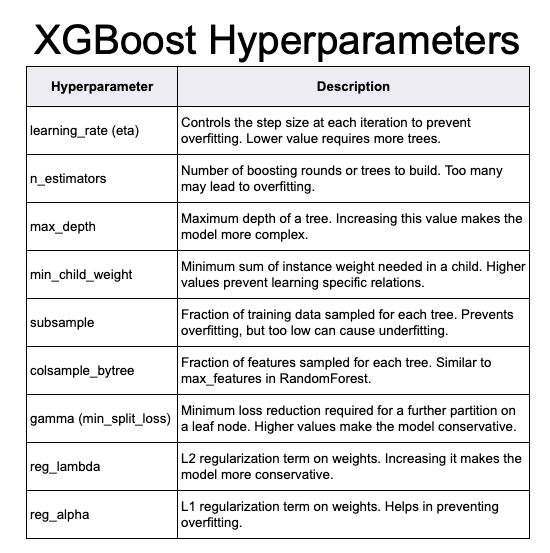

For years, I was hyperparameter tuning XGBoost models wrong. In 3 minutes, I'll share one secret that took me 3 years to figure out. When I did, it cut my training time 10X. Let's dive in. 1. XGBoost: XGBoost (eXtreme Gradient Boosting) is a popular machine learning algorithm,…

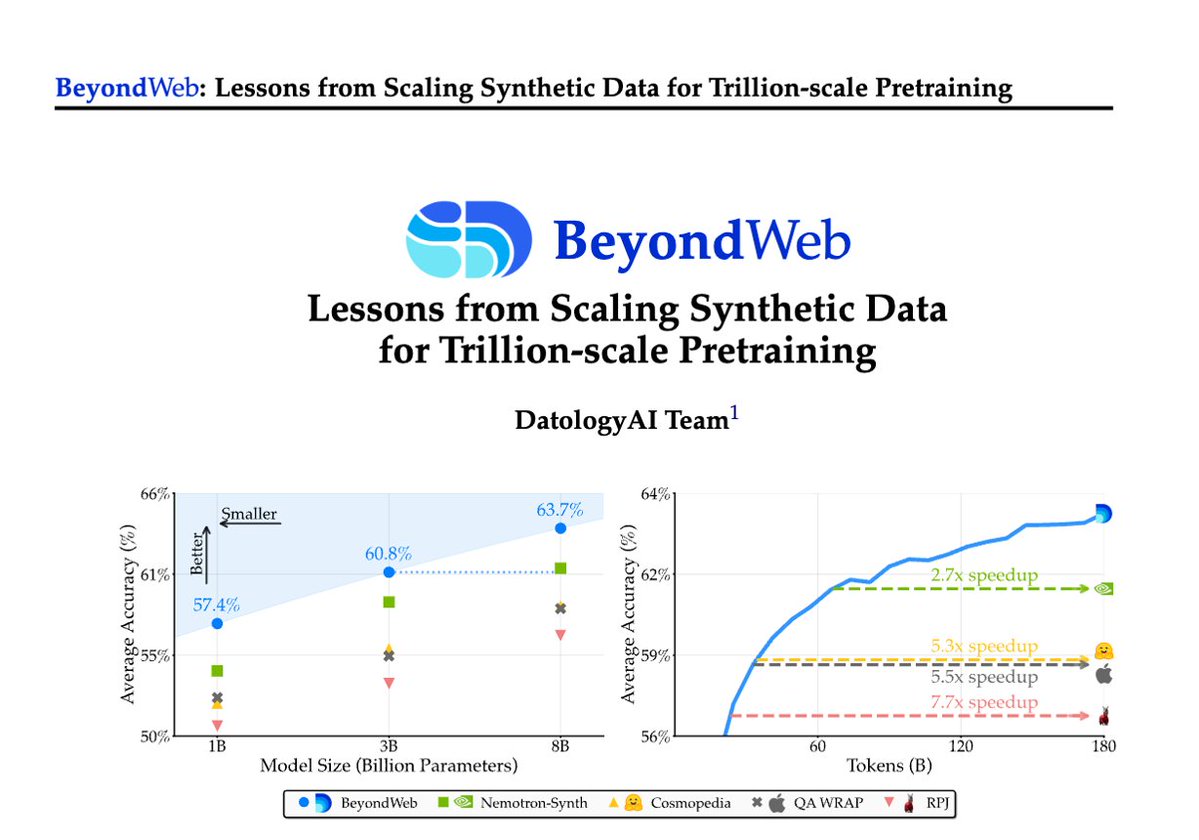

1/Pretraining is hitting a data wall; scaling raw web data alone leads to diminishing returns. Today @datologyai shares BeyondWeb, our synthetic data approach & all the learnings from scaling it to trillions of tokens🧑🏼🍳 - 3B LLMs beat 8B models🚀 - Pareto frontier for performance

🌍 All Hypers, One Global Community! 🤝✨ From every corner of the world, HyperGPT unites all regions under one roof to showcase the true power of Hyper! 🚀 📢 Be part of the worldwide movement: t.me/hypergptregion…

Andrej Karpathy released nanochat, ~8K lines of minimal code that do pretrain + midtrain + SFT + RL + inference + ChatGPT-like webUI. It trains a 560M LLM in ~4 hrs on 8×H100. I trained and hosted it on Hyperbolic GPUs ($48). First prompt reminded me how funny tiny LLMs are.…

Today we're introducing Model Fine-tuning. A new self-serve offering that will soon allow you to customize our models towards your specific use cases and on your own data. From entertainment to robotics, education, life sciences and beyond, our next generation of customizable…

We wanted the 300th Network to be a banger, so we chose none other than @HyperliquidX. You trade fast. You build fast. Now your data can keep up. SubQuery is live on Hyperliquid, powering real-time indexing for on-chain traders & builders. Let’s get liquid 💧

Update: we were able to close the gap between neural networks and reweighted kernel methods on sparse hierarchical functions with hypercube data. Interestingly the kernel methods outperform carefully tuned networks in our tests.

we wrote a paper about learning 'sparse' and 'hierarchical' functions with data-dependent kernel methods. you just 'iteratively reweight' the coordinates by the gradients of the prediction function. typically 5 iterations suffice.

Trying to tune hyperparameters be like: Which one actually works?! #MachineLearning #HyperparameterTuning

Hyperparameter Tuning for Machine and Deep Learning with R: A Practical Guide - freecomputerbooks.com/Hyperparameter… Illustrates how hyperparameter tuning can be applied in practice using R. #Hyperparameter #HyperparameterTuning #MachineLearning #DeepLearning #GenerativeAI #SupervisedLearning

My notes on nanochat, including links to the training data it uses simonwillison.net/2025/Oct/13/na…

We made a Guide on mastering LoRA Hyperparameters, so you can learn to fine-tune LLMs correctly! Learn to:

- Train smarter models with fewer hallucinations
- Choose optimal: learning rates, epochs, LoRA rank, alpha
- Avoid overfitting & underfitting

🔗 docs.unsloth.ai/get-started/fi…

“Hyperparameter Tuning with Python” by Louis Owen will help you leverage hyperparameter tuning and gain control over your model's finest details. This short video introduces the book and explains how it can help you boost your ML model’s performance. #HyperparameterTuning #Python

What I've found is that most of the time in ML goes not to coding or complex techniques for precision, but to long hyperparameter experiments to get models to behave the way we want. #hyperparametertuning

🚀 Hyperparameter Tuning Techniques: Grid Search, Random Search, Bayesian | 360DigiTMG 📝 Register Now by clicking the link below 👇 360digitmg.zoom.us/webinar/regist… #HyperparameterTuning #GridSearch #RandomSearch #BayesianOptimization #MachineLearning #AI #ModelOptimization #360DigiTMG

Optuna: Lightweight library for hyperparameter optimization—define your search space and let it find the best configs efficiently. #Optuna #HyperparameterTuning #Optimization #AutoML #MachineLearning #AI #MLResearch
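The "define your search space, then search" idea can be sketched without any library. The snippet below is not Optuna's API and does not use its samplers: it is plain random search over a hypothetical two-parameter space, with a made-up `toy_objective` standing in for a real validation loss.

```python
import math
import random

# Hypothetical search space: each name maps to a function that draws one value.
SEARCH_SPACE = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, -1),  # log-uniform in [1e-4, 1e-1]
    "max_depth": lambda rng: rng.randint(2, 10),             # integer, bounds inclusive
}

def toy_objective(params):
    """Made-up validation loss, minimized at learning_rate=0.01 and max_depth=6."""
    lr_term = (math.log10(params["learning_rate"]) + 2) ** 2
    depth_term = ((params["max_depth"] - 6) / 4) ** 2
    return lr_term + depth_term

def random_search(objective, space, n_trials, seed=0):
    """Sample n_trials configurations and return the best (params, score)."""
    rng = random.Random(seed)
    best_params, best_score = None, float("inf")
    for _ in range(n_trials):
        params = {name: sample(rng) for name, sample in space.items()}
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score

best_params, best_score = random_search(toy_objective, SEARCH_SPACE, n_trials=200)
print(best_params, best_score)
```

In a real project the objective would train a model and return a validation metric, and a library such as Optuna would replace the sampling loop with a smarter strategy.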

Hyperparameter Tuning: How to Improve Your Machine Learning Model Tune hyperparameters to boost your ML model’s performance with smarter choices and better accuracy. datamites.com/blog/hyperpara… #MachineLearningCourse #HyperparameterTuning #DatamitesMLCourse #DatamitesInstitute

Learned evaluation techniques: KFold Cross Validation, GridSearchCV, RandomizedSearchCV, Ensemble methods. Why tuning = performance boost 🔧 #MLTips #HyperparameterTuning #Sklearn
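To make the k-fold plus grid search combination concrete, here is a stdlib-only sketch. It does not call scikit-learn: `k_fold_indices` and `grid_search` are hand-rolled stand-ins for what `KFold` and `GridSearchCV` do conceptually, and the 1-D ridge model, the synthetic data, and the grid values are all assumptions chosen for illustration.

```python
import itertools
import random

def k_fold_indices(n_samples, n_folds):
    """Yield (train_idx, val_idx) pairs; fold sizes differ by at most one."""
    indices = list(range(n_samples))
    sizes = [n_samples // n_folds + (1 if i < n_samples % n_folds else 0)
             for i in range(n_folds)]
    start = 0
    for size in sizes:
        yield indices[:start] + indices[start + size:], indices[start:start + size]
        start += size

def fit_ridge_1d(xs, ys, alpha, fit_intercept):
    """Closed-form 1-D ridge regression; returns (slope, intercept)."""
    x_mean = sum(xs) / len(xs) if fit_intercept else 0.0
    y_mean = sum(ys) / len(ys) if fit_intercept else 0.0
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / (sxx + alpha)
    return slope, y_mean - slope * x_mean

def cv_mse(xs, ys, params, n_folds=5):
    """Mean validation MSE across folds for one hyperparameter setting."""
    fold_errors = []
    for train_idx, val_idx in k_fold_indices(len(xs), n_folds):
        train_x = [xs[i] for i in train_idx]
        train_y = [ys[i] for i in train_idx]
        slope, intercept = fit_ridge_1d(train_x, train_y, **params)
        errors = [(ys[i] - (slope * xs[i] + intercept)) ** 2 for i in val_idx]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / len(fold_errors)

def grid_search(xs, ys, grid, n_folds=5):
    """Try every combination in the grid; return (best_mse, best_params)."""
    names = list(grid)
    results = []
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        results.append((cv_mse(xs, ys, params, n_folds), params))
    return min(results, key=lambda r: r[0])

# Synthetic data: y = 3x + 2 plus small Gaussian noise.
rng = random.Random(0)
xs = [i / 10 for i in range(50)]
ys = [3.0 * x + 2.0 + rng.gauss(0, 0.1) for x in xs]

GRID = {"alpha": [0.0, 0.1, 1.0, 10.0], "fit_intercept": [True, False]}
best_mse, best_params = grid_search(xs, ys, GRID)
print(best_params, best_mse)
```

The folds here are contiguous and unshuffled to keep the sketch short; shuffling the indices first is the more common choice when the data has an ordering.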

🎛️ Hyperparameter tuning = dialing in ML excellence! From Grid Search to Bayesian magic, find your model’s sweet spot 🎯 Small tweaks, big performance gains—don’t settle for “good” when “stellar” is possible! 🔗 linkedin.com/in/octogenex/r… #HyperparameterTuning #ML #ModelOptimization

Deploy distributed hyperparameter tuning across Akash for lightning-fast model optimization. Save time and cloud costs while hunting optimal configurations. #MachineLearning #HyperparameterTuning #DistributedSystems $AKT $SPICE

Optimize black-box functions efficiently with Bayesian Optimization! This sequential design strategy is ideal for expensive evaluations & unknown derivatives. Read more: tinyurl.com/dsm724r3 #AI #BayesianOptimization #HyperparameterTuning #MachineLearning

Hyperparameter tuning (like HSOA) can make or break your model. Invest time in finding the sweet spot! Always use CV and metrics (R2, RMSE, etc.) to validate your model’s performance. Reliability matters! #HyperparameterTuning #Optimization
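For reference, the two metrics named in this post can be computed in a few lines. This is a minimal sketch with made-up numbers; in practice they would be evaluated on each cross-validation fold's held-out data.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Made-up targets and predictions, for illustration only.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 7.5, 9.0]
print(rmse(y_true, y_pred), r2_score(y_true, y_pred))
```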

@soon_svm #SOONISTHEREDPILL soon_svm's hyperparameter tuning optimizes model performance with minimal effort. Automatically find the best settings. 🔧 #soon_svm #HyperparameterTuning

.Soon_SVM's hyperparameter sensitivity analysis guides tuning. More scientific than random parameter setting. #SoonSVM #HyperparameterTuning @soon_svm #SOONISTHEREDPILL

soon_svm’s grid search for hyperparameter tuning is more comprehensive than some basic tuning methods, ensuring optimal performance. 🔍 #soon_svm #HyperparameterTuning. @soon_svm #SOONISTHEREDPILL

@soon_svm #SOONISTHEREDPILL Just explored soon_svm's hyperparameter tuning capabilities. They are crucial for optimizing models. 🔍 #soon_svm #HyperparameterTuning

. @soon_svm #SOONISTHEREDPILL Maximize prediction quality with soon_svm's fine-tuned hyperparameter settings. #HyperparameterTuning

Understanding Hyperparameter Tuning: Optimize model performance by selecting the best parameters. How has hyperparameter tuning improved your models? #HyperparameterTuning $AIMASTER @TechInnovation

. @soon_svm #SOONISTHEREDPILL 45. soon_svm's hyperparameter tuning features ensure you get the best performance from your models. #soon_svm #HyperparameterTuning

RT Prince_krampah What's the best machine learning model to use for your data? How do you select the best model to use for your data science project? #machinelearning #hyperparametertuning #datascience #pandas #python #gridsearch #randomsearch #sklearn …

Using Optuna to Optimize PyTorch Lightning Hyperparameters Crissman Loomis : medium.com/optuna/using-o… #Pytorch #Optuna #HyperparameterTuning #Hyperparameter

Hyperparameter tuning for Machine learning models - websystemer.no/hyperparameter… #datascience #hyperparametertuning #machinelearning

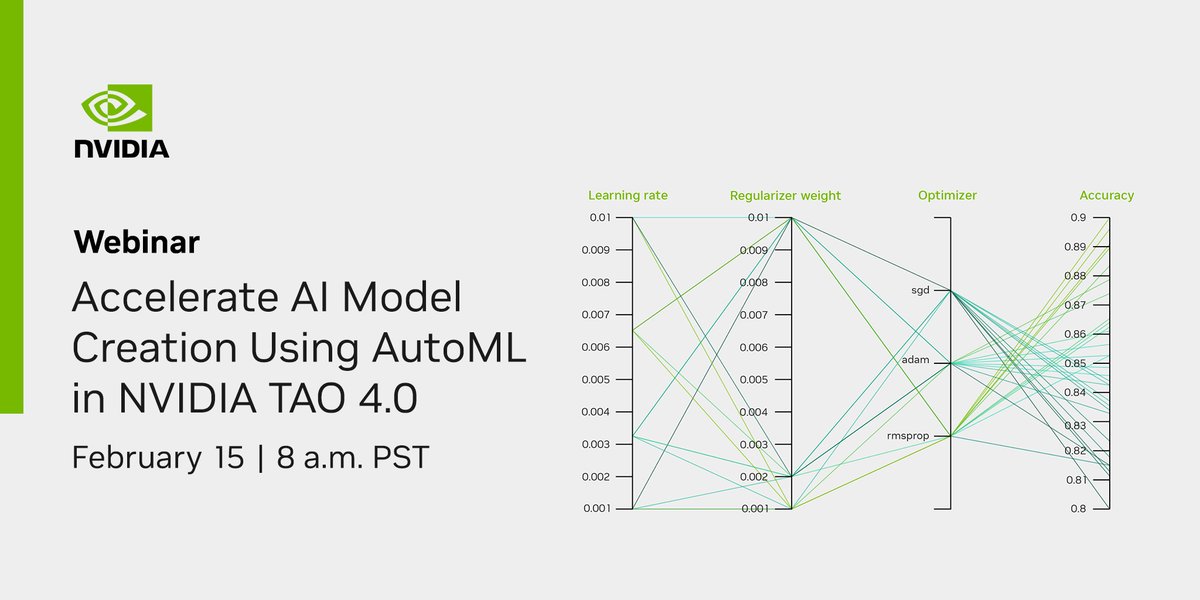

Register for this deep dive session and learn how to use #AutoML feature in #NVIDIATAO Toolkit for faster AI model tuning. nvda.ws/3GZLS4x #hyperparametertuning

Just like fine-tuning a race car for optimal performance, hyperparameter tuning adjusts specific settings. Join our 5-Day Data Science Bootcamp both online and in-person with industry experts now➡️ hubs.la/Q02NfXNP0 #HyperparameterTuning #MachineLearning #datascience

debuggercafe.com/hyperparameter… New tutorial at DebuggerCafe - Hyperparameter Tuning with PyTorch and Ray Tune #RayTune #HyperparameterTuning #HyperparameterSearch #HyperparameterOptimization #DeepLearning #PyTorch

ArgParse as a tool for tracking Machine Learning hyperparameters? - websystemer.no/argparse-as-a-… #argparse #datascience #hyperparametertuning #machinelearning #python
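One way this idea could look in practice, sketched under assumptions: the flag names and defaults below are hypothetical and not taken from the linked article. Hyperparameters are declared as command-line flags, then dumped as JSON so each run's settings can be logged next to its results.

```python
import argparse
import json

def build_parser():
    """Declare hyperparameters as CLI flags (names and defaults are illustrative)."""
    parser = argparse.ArgumentParser(description="Toy training script")
    parser.add_argument("--learning-rate", type=float, default=1e-3)
    parser.add_argument("--batch-size", type=int, default=32)
    parser.add_argument("--epochs", type=int, default=10)
    parser.add_argument("--optimizer", choices=["sgd", "adam"], default="adam")
    return parser

def hyperparameters_as_json(argv):
    """Parse argv and return the full configuration as a JSON string."""
    args = build_parser().parse_args(argv)
    return json.dumps(vars(args), sort_keys=True)

# Passing an explicit list keeps the example independent of the real command line.
print(hyperparameters_as_json(["--learning-rate", "0.01", "--epochs", "3"]))
```

Writing that JSON string to a file alongside the model checkpoint gives a simple record of which settings produced which result.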

Hyderabad AQI Prediction Project From Scratch To Deployment - websystemer.no/hyderabad-aqi-… #airqualityindex #datasciencelifecycle #hyperparametertuning #machinelearning #streamlit

RT Hyperparameter tuning in Python dlvr.it/SFq3Ts #machinelearning #sklearn #hyperparametertuning #python #datascience

debuggercafe.com/an-introductio… New post at DebuggerCafe - An Introduction to Hyperparameter Tuning in Deep Learning #DeepLearning #HyperparameterOptimization #HyperparameterTuning #NeuralNetworks

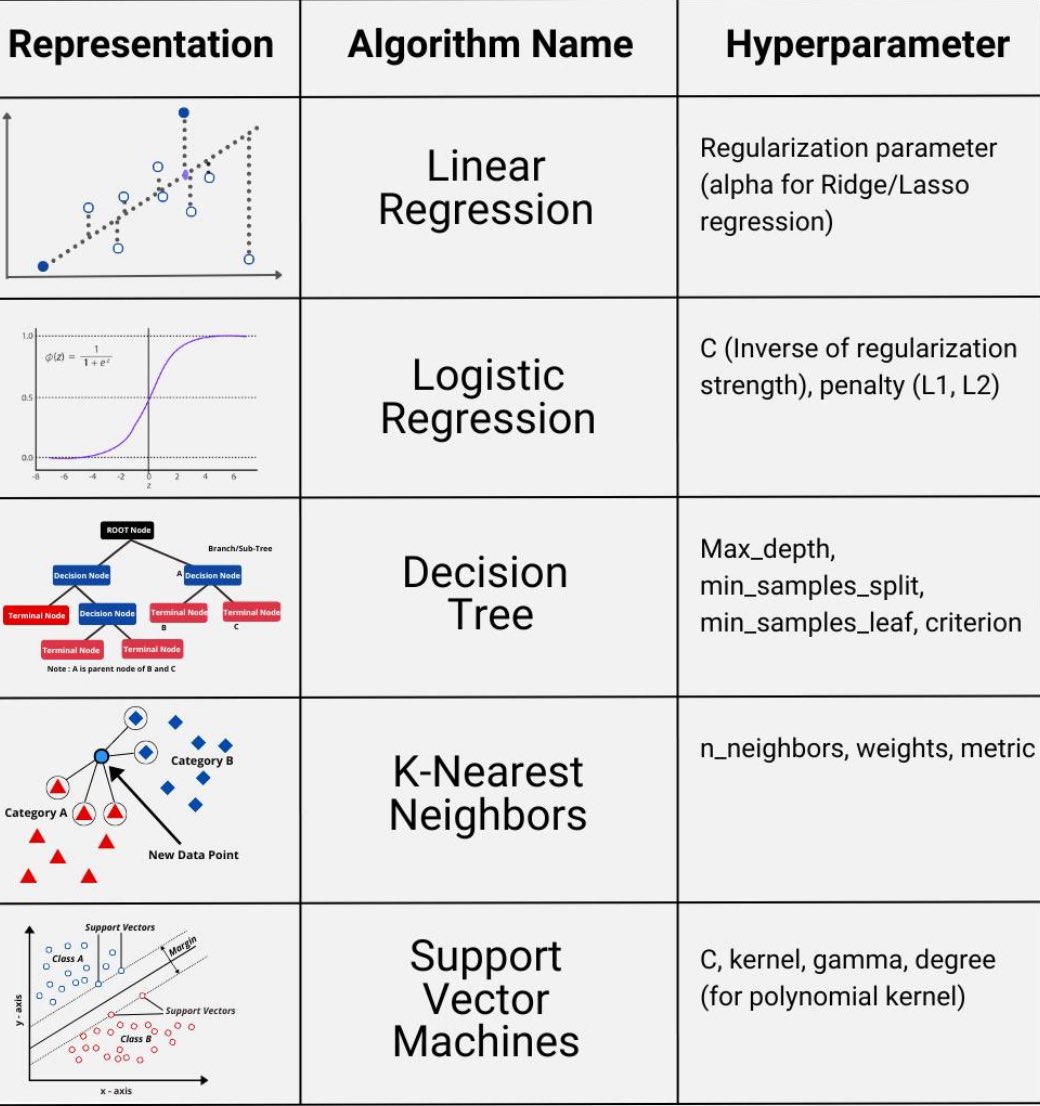

What's the best machine learning model to use for your data? How do you select the best model to use for your data science project? #machinelearning #hyperparametertuning #datascience #pandas #python #gridsearch #randomsearch #sklearn #scikitlearn #deeplearning #programming…

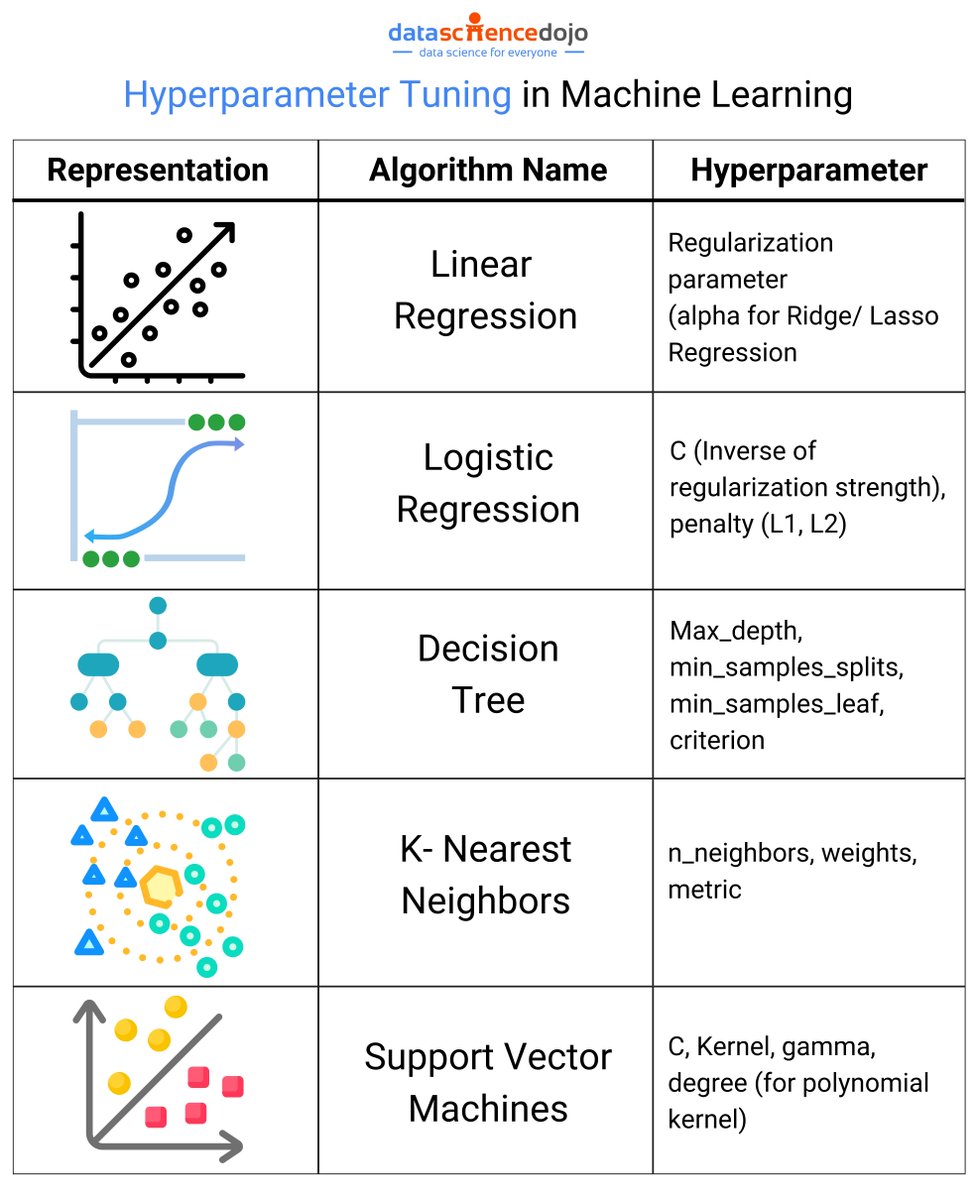

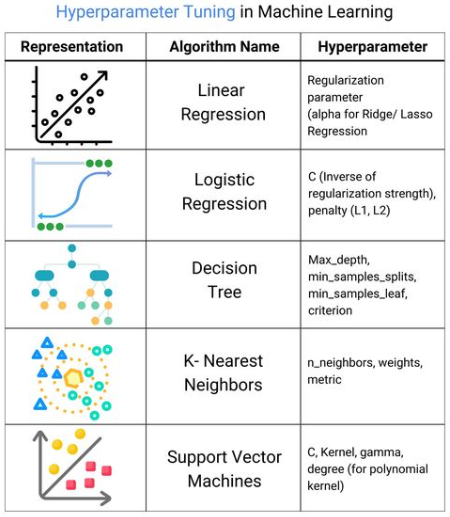

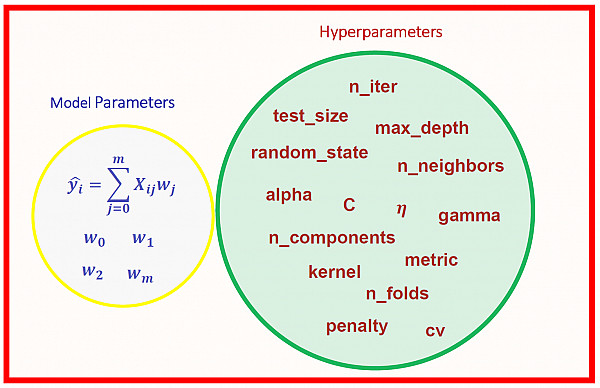

This infographic dives into the world of hyperparameter tuning and the importance of choosing the right algorithms for different tasks. #HyperparameterTuning #MachineLearning #LLM

The ensemble tree-based Sklearn models gave LightGBM, XGBoost, and CatBoost a serious run for their money. Which one would you pick for inference? #Python #Optuna #HyperparameterTuning #Classification #MachineLearning

Tuning Hyper Parameter using MlOps - websystemer.no/tuning-hyper-p… #hyperparametertuning #machinelearning #tuning
