#modelcompression search results

🚀 OptiPFair v0.2.0 is out! New: Data-driven width pruning for LLMs 📊 Use your data to guide pruning 🎯 Better preservation of domain knowledge pip install --upgrade optipfair Docs: peremartra.github.io/optipfair/ #LLM #ModelCompression #AI

#DittoAlgorithm #AICompression #ModelCompression #KnowledgeDistillation #1bitQuantization #EdgeAI #OnDeviceAI #EfficientAI #Neuroevolution #EvolutionaryAI #DarwinianLearning #JEPA #GodelHeuristics #Mimicry #HybridArchitectures #TinyML #ModelPruning #DistilledModels #AgenticAI…

The tech behind this is #AI and #modelcompression, which I will explore in my next thread. 4/4

How can #AI give you a life after death? An interesting thread #modelcompression mirror.xyz/dashboard/edit… 1/4

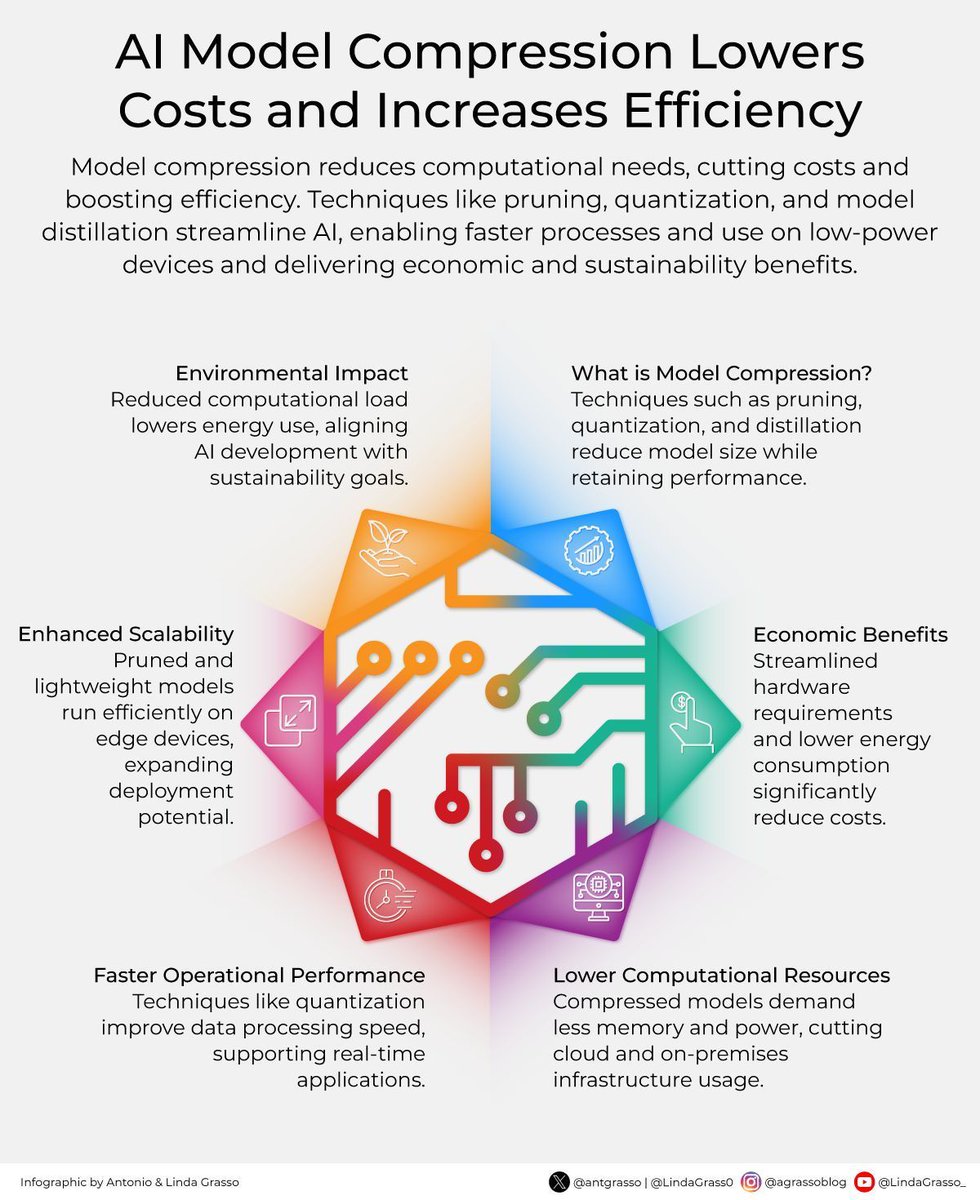

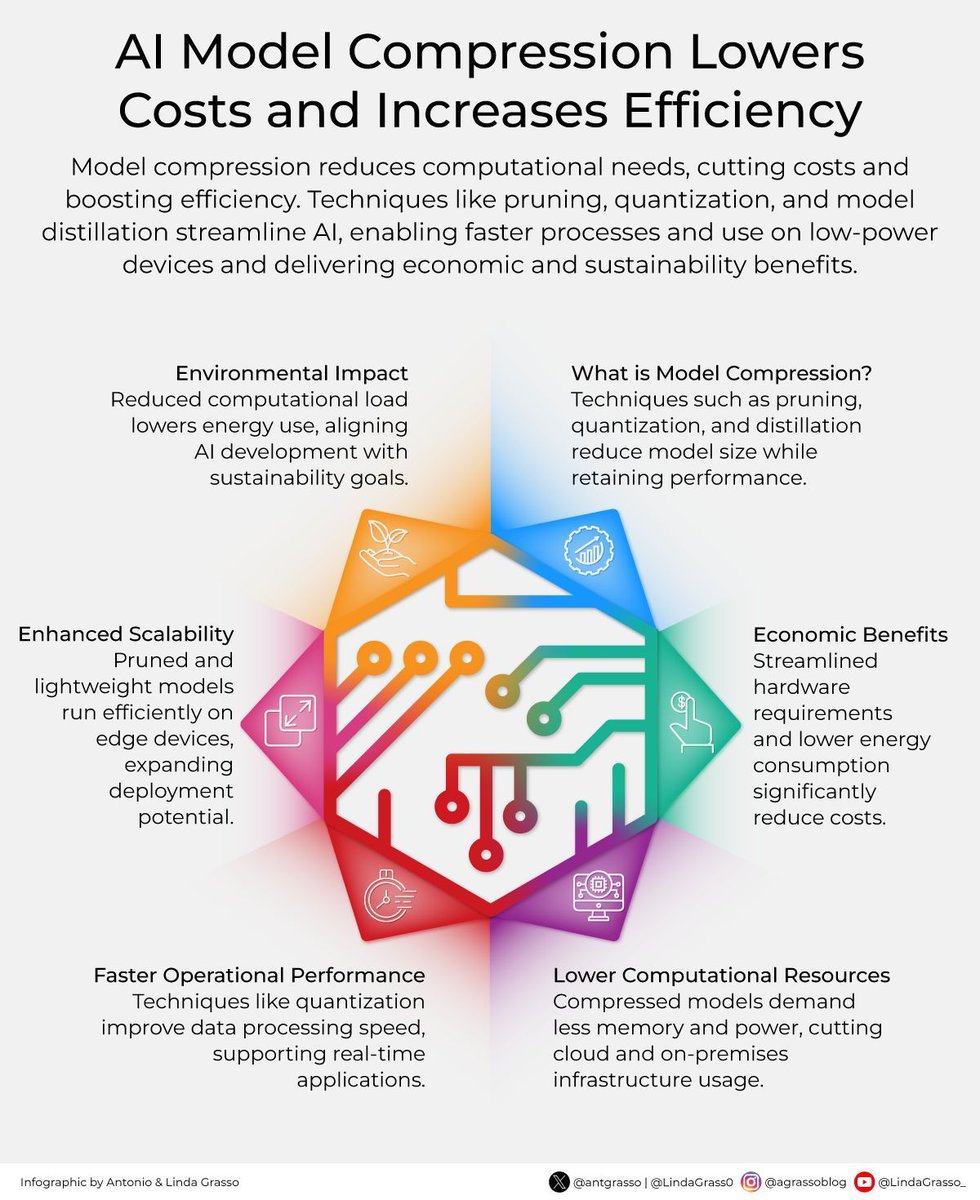

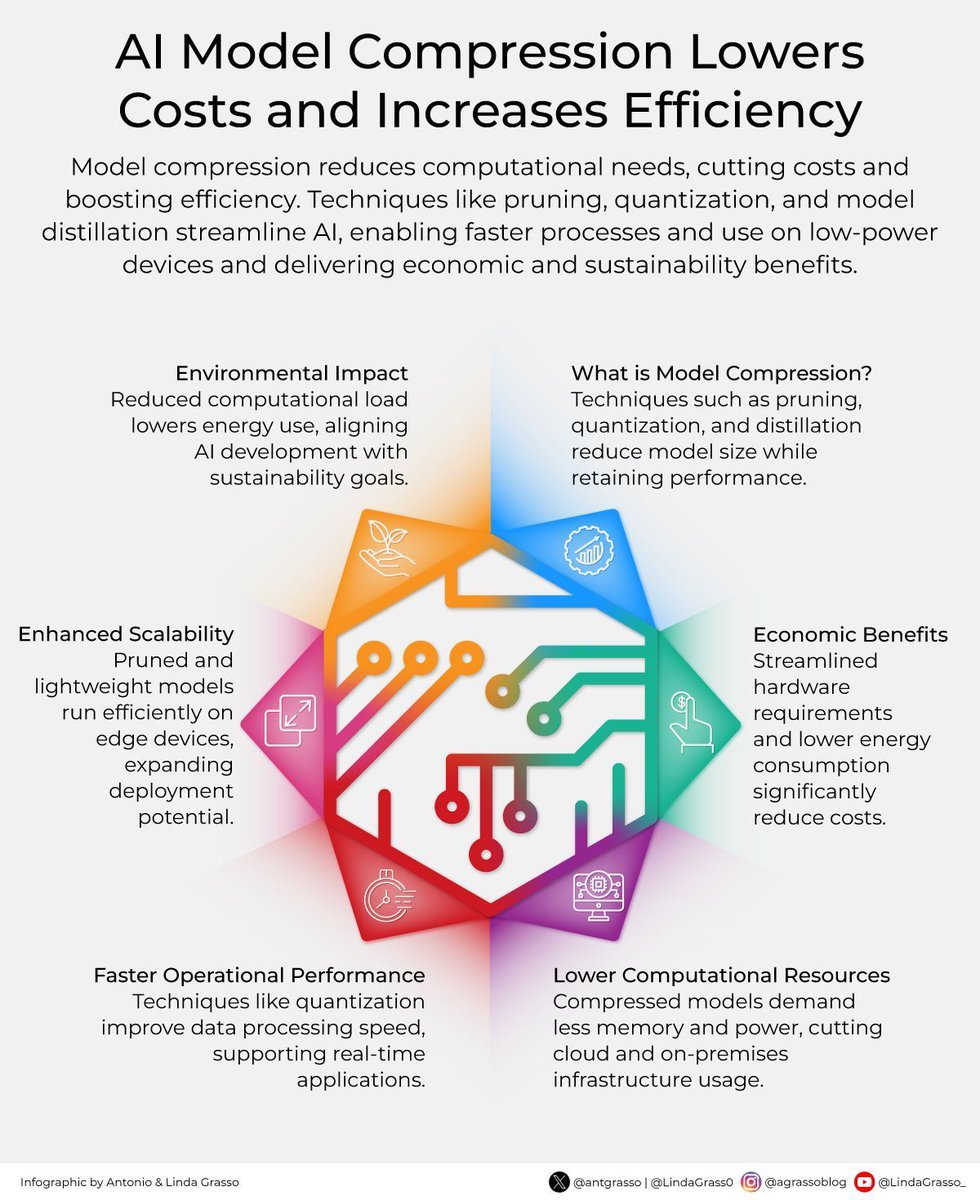

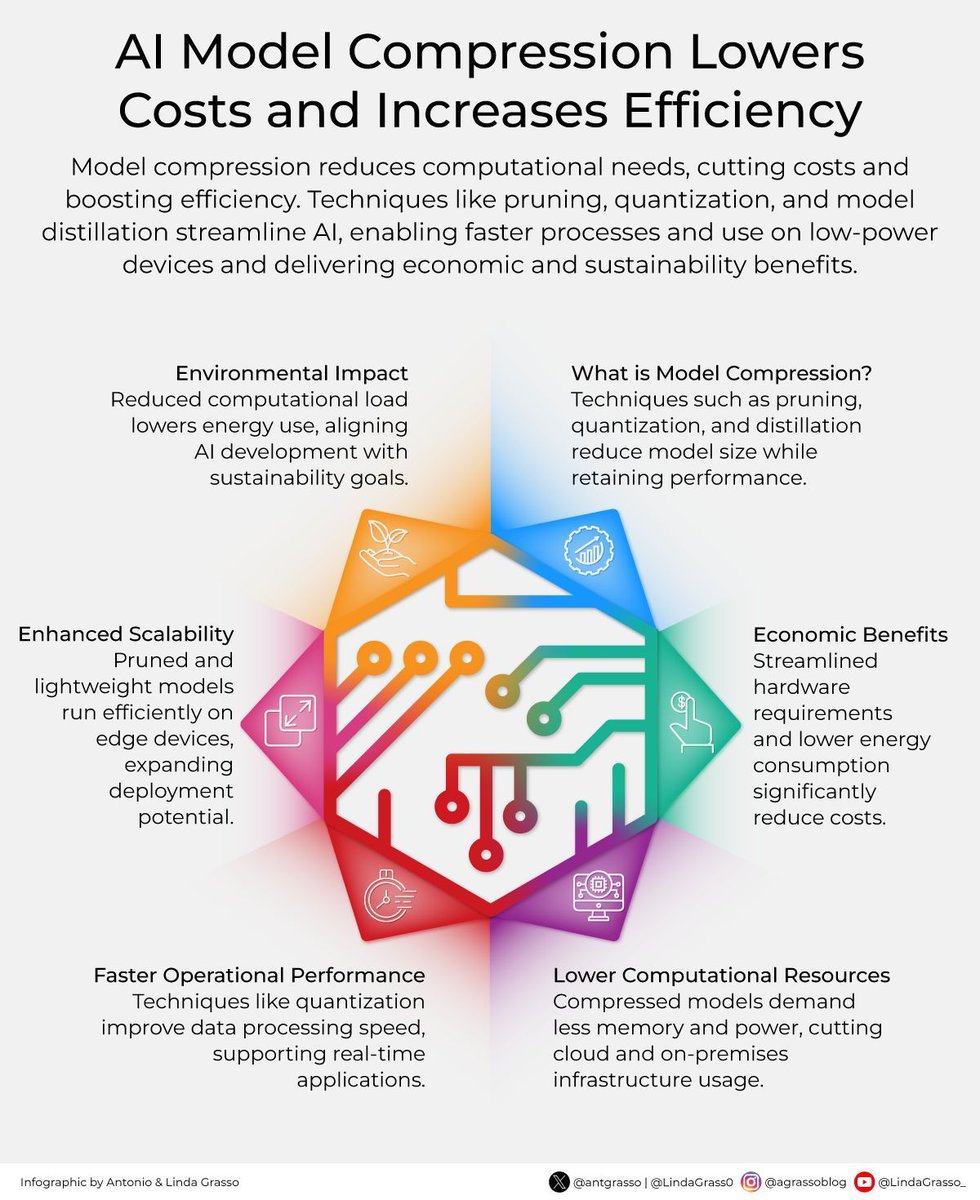

AI model compression isn't just a technical refinement but a strategic choice that aligns cost reduction, sustainability, and operational agility with the pressing demands of today's rapidly evolving digital landscape. By @antgrasso #AI #ModelCompression #Efficiency

AI model compression isn't just a technical refinement but a strategic choice that aligns cost reduction, sustainability, and operational agility with the pressing demands of today's rapidly evolving digital landscape. rt @antgrasso #AI #ModelCompression #Efficiency

When @heyelsaAi brings scalable speech AI, @antix_in compresses models to the extreme, and @SentientAGI pushes cognitive boundaries— you don’t just get smaller models. You get smarter, faster, and more accessible intelligence. #AI #ModelCompression #AGI #bb27 #CharlieKirkshot

🔥 Read our Highly Cited Paper 📚Stable Low-Rank CP #Decomposition for Compression of Convolutional Neural Networks Based on Sensitivity 🔗mdpi.com/2076-3417/14/4… 👨🔬by Chenbin Yang and Huiyi Liu 🏫Hohai University #convolutionalneuralnetworks #modelcompression

Excited to share: our paper “On Pruning State-Space LLMs” was accepted to EMNLP 2025! 🎉 Preprint: arxiv.org/abs/2502.18886 Code: github.com/schwartz-lab-N… Model: Smol-Mamba-1.9B → huggingface.co/schwartz-lab/S… w/ @MichaelHassid & @royschwartzNLP (HUJI) #Mamba #ModelCompression

Accuracy hides issues in quantized LLMs. 'Accuracy is Not All You Need' (NeurIPS 2024) shows 'flips' & KL-Divergence reveal output changes. #AI #ModelCompression #LLM
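To make the point above concrete, here is a minimal sketch of how equal accuracy can hide changed outputs. It assumes a "flip" is an example whose answer changes between correct and incorrect after quantization; the logits, labels, and function names are hypothetical toy values, not data or code from the paper.

```python
import math

def softmax(logits):
    """Convert raw logits to probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def argmax(xs):
    return max(range(len(xs)), key=xs.__getitem__)

def compare(baseline_logits, quantized_logits, labels):
    """Report accuracy, flip rate, and mean KL between two models' outputs."""
    n = len(labels)
    base_ok = [argmax(b) == y for b, y in zip(baseline_logits, labels)]
    quant_ok = [argmax(q) == y for q, y in zip(quantized_logits, labels)]
    # Assumed definition: a flip is any example whose correctness changes.
    flips = sum(b != q for b, q in zip(base_ok, quant_ok))
    mean_kl = sum(
        kl_divergence(softmax(b), softmax(q))
        for b, q in zip(baseline_logits, quantized_logits)
    ) / n
    return {
        "baseline_acc": sum(base_ok) / n,
        "quantized_acc": sum(quant_ok) / n,
        "flip_rate": flips / n,
        "mean_kl": mean_kl,
    }

# Hypothetical toy outputs: both models score 3 of 4, but on different examples.
labels = [0, 1, 0, 1]
baseline_logits = [[2.0, 0.0], [0.0, 2.0], [2.0, 0.0], [2.0, 0.0]]
quantized_logits = [[0.0, 2.0], [0.0, 2.0], [2.0, 0.0], [0.0, 2.0]]

report = compare(baseline_logits, quantized_logits, labels)
print(report)
```

In this toy case both accuracies are identical, yet half of the examples flipped and the mean KL divergence is well above zero, which is the kind of change accuracy alone would not show.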

Graft and Go: How Knowledge Grafting Shrinks AI Without Shrinking Its Brain cognaptus.com/blog/2025-07-2… #AIoptimization #edgecomputing #modelcompression #knowledgetransfer #resource-constrainedAI

A novel approach called knowledge grafting allows AI models to be drastically slimmed down while improving generalization, enabling deployment in resource-constrained environments.

💡 Dynamic Quantization isn’t just compression. It’s context-aware model tuning. Why run a full 8-bit matrix multiply when a 5-bit op would do? @TheoriqAI is giving models a sixth sense — and saving compute for the rest of us. #EdgeAI #ModelCompression

I put the model on a fast: I removed 80% of the neurons and it came back beating its old accuracy. Framework: measure, prune, rehabilitate. When the network feels it is alone in the ring, every neuron fights hard. Is your code heavy? Cut its calories and see the difference. #SparseAI,#NeuralNetworks,#ModelCompression,#EdgeAI,#TechLeadership
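The measure and prune steps of that loop can be sketched as follows. This is a simplified illustration that swaps in weight-level magnitude pruning on a flat list rather than removing whole neurons; the function name, the weights, and the 80% setting are hypothetical, and the retraining step is only indicated in a comment.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights.

    Returns the pruned weights and a keep-mask (True = weight kept).
    """
    k = int(len(weights) * sparsity)  # number of weights to drop
    # Measure: rank indices by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    # Prune: zero the dropped positions and record what survived.
    mask = [i not in drop for i in range(len(weights))]
    pruned = [w if keep else 0.0 for w, keep in zip(weights, mask)]
    return pruned, mask

# Hypothetical layer weights.
weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.02, 0.1, -0.2, 0.04, 0.6]
pruned, mask = magnitude_prune(weights, sparsity=0.8)
print(pruned)

# Rehabilitate (not shown): fine-tune the surviving weights while
# re-applying the mask after each update so pruned entries stay at zero.
```

Whether accuracy recovers after such aggressive pruning depends on the model, the data, and the retraining budget; the sketch only shows the mechanics.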

Think of it like "language modeling as compression." When you compress the same info to its maximum, different algorithms converge. If architectures are similar, the underlying representations should also be converging. #ModelCompression #AI

Introducing Nota 🇰🇷 AI model compression technology that makes deep learning models run faster with less computing power. They help companies deploy AI on mobile devices without sacrificing performance. Efficiency-focused AI innovation. #AI #ModelCompression #MobileAI

AI model compression tools reduce size by 60%. Deploy on edge devices, scaling from cloud to IoT. @PublicAIData #ModelCompression #EdgeScaling

Today's #PerfiosAITechTalk talks about how #ModelCompression can be used for efficient on-device runtimes. #NeuralNetworks #Datascience #ML #AI

The four common #ModelCompression techniques: 1) Quantization 2) Pruning 3) Knowledge distillation 4) Lower rank matrix factorization. What does your experience point you toward? #NeuralNetworks #DataScience #PerfiosAITechTalk
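For the fourth technique in that list, a quick parameter count shows where the savings come from: an m × n weight matrix replaced by two factors of rank r stores r(m + n) values instead of mn. The layer size and rank below are hypothetical, and the sketch says nothing about how much accuracy a given rank preserves.

```python
def dense_params(m, n):
    """Parameters in a full m x n weight matrix."""
    return m * n

def low_rank_params(m, n, r):
    """Parameters when W (m x n) is approximated as A (m x r) times B (r x n)."""
    return r * (m + n)

# Hypothetical layer: 1024 x 1024, factorized at rank 64.
m, n, r = 1024, 1024, 64
full = dense_params(m, n)
factored = low_rank_params(m, n, r)
saving = 1 - factored / full

print(f"dense: {full}, low-rank: {factored}, saving: {saving:.1%}")
```

The factorization only pays off when r is smaller than mn / (m + n); above that rank the two factors hold more parameters than the original matrix.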

AI model compression isn't just a technical refinement but a strategic choice that aligns cost reduction, sustainability, and operational agility with the pressing demands of today's rapidly evolving digital landscape. Microblog by @antgrasso #AI #ModelCompression #Efficiency…

What is #ModelCompression in #AI? #Infographic by @antgrasso #ArtificialIntelligence #MachineLearning #NeuralNetworks #DeepLearning #AIResearch #DataScience cc: @jeffkagan @SpirosMargaris @jblefevre60 @CES

What do you mean by #ModelCompression? The answer is here, courtesy #NeuralNetworks expert @bsourav29 #PerfiosAITechTalk

Model compression in ICCLOUD! 8-bit quantization cuts memory by 75%, and weight pruning reduces compute by 50%. Save resources! #ModelCompression #Resources
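The 75% memory figure is consistent with going from 32-bit floats to 8-bit integers, which is an assumption here since the post does not state the baseline precision. A minimal sketch of that arithmetic, together with a simple symmetric per-tensor quantizer (hypothetical helper names, not ICCLOUD's implementation):

```python
def memory_saving(bits_from, bits_to):
    """Fraction of weight memory saved by storing fewer bits per value."""
    return 1 - bits_to / bits_from

def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    # Fall back to a scale of 1.0 if every weight is zero.
    scale = max(abs(w) for w in weights) / 127 or 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map the stored integers back to approximate float weights."""
    return [v * scale for v in q]

print(f"FP32 -> INT8 saving: {memory_saving(32, 8):.0%}")

# Hypothetical weights to show the round-trip error.
weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

The round-trip error of each weight is bounded by half the scale, so tensors with a few large outliers lose more precision on their small values.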

🧪 Subnetwork-only tuning outperforms full finetuning on many tasks. DPO: +1.6% PRIME: +2.4% Especially at higher difficulty levels. 📊 #ModelCompression #RLforLLMs

RT Model Compression: A Look into Reducing Model Size dlvr.it/RqDnWR #machinelearning #modelcompression #tinyml #deeplearning

Policy Compression for Intelligent Continuous Control on Low-Power Edge Devices mdpi.com/1424-8220/24/1… #modelcompression #softactor #edgecomputing

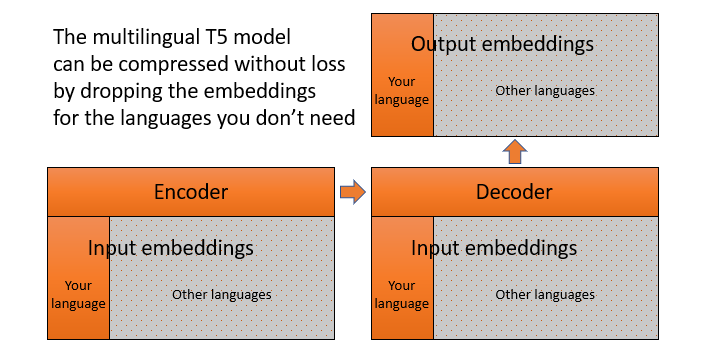

RT How to adapt a multilingual T5 model for a single language dlvr.it/Rz5ltX #nlp #transformers #modelcompression #machinelearning #t5

🔥New Research by Mr. Cesar Pachon, Prof. Diego Renza and Prof. Dora Ballesteros: "Is My Pruned Model Trustworthy? PE-Score: A New CAM-Based Evaluation Metric" @lamilitar #deeplearning #modelcompression #pruning #trustworthy #SeNPIS Access for Free: mdpi.com/2504-2289/7/2/…

Paper presentation: Our colleague presented his new paper “Beyond Test Accuracy: The Effects of Model Compression on CNNs” at this year's SAFE AI. Watch his presentation here: ➡️youtube.com/watch?v=PkGdvK… #ai #modelcompression #deeplearning #research #SAFEAI

New paper online: Our colleagues investigate the effects of model compression on CNNs in their conference paper. Read more about it:➡️ publica.fraunhofer.de/documents/N-64… #ai #modelcompression #deeplearning #research
