#placerecognition search results

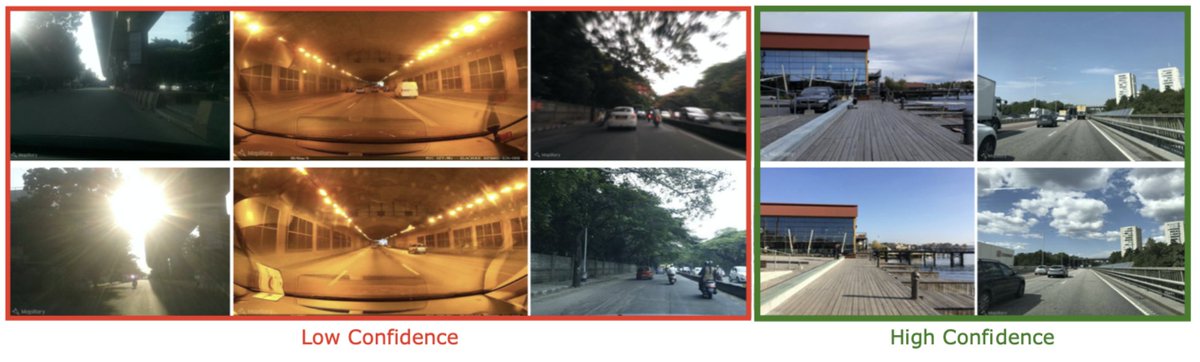

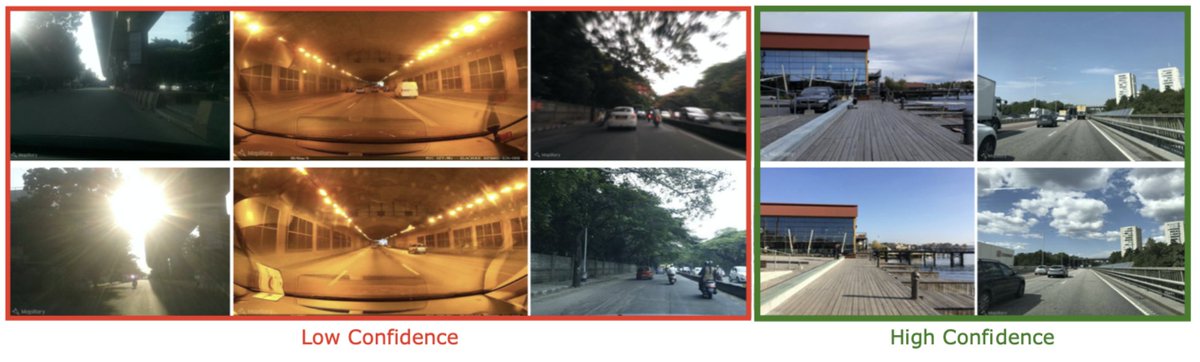

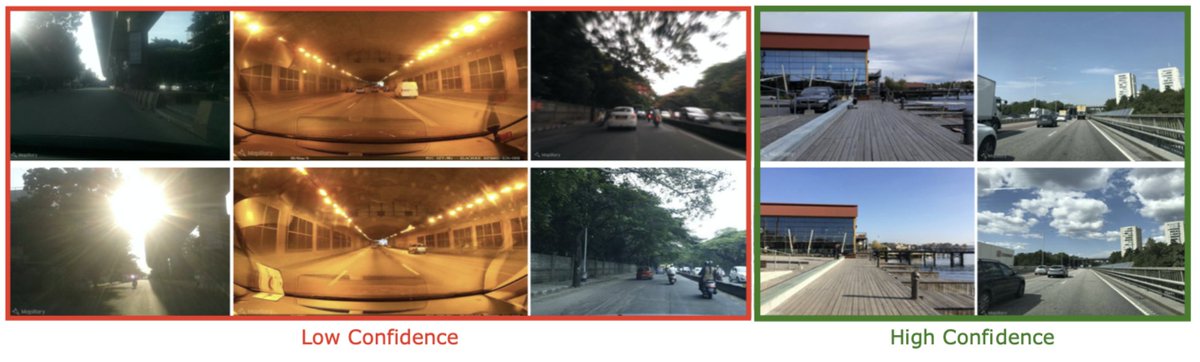

We show on the large-scale #placerecognition dataset #MSLS that our model assigns high uncertainty to challenging places with harsh sunlight☀️, blur, and ambiguous tunnels. These uncertainties can be propagated to downstream decisions and can trigger timely interventions from the user. 4/4

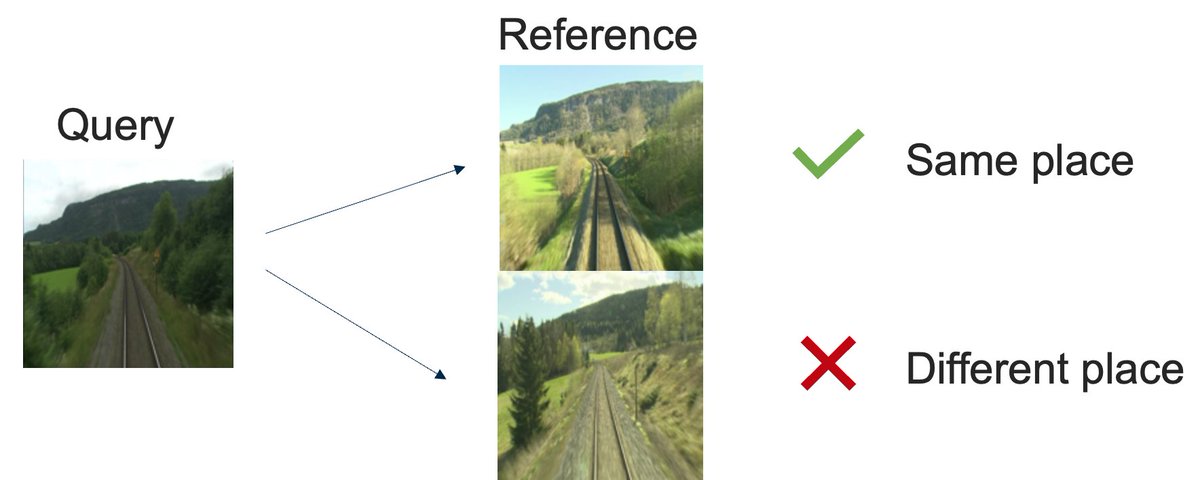

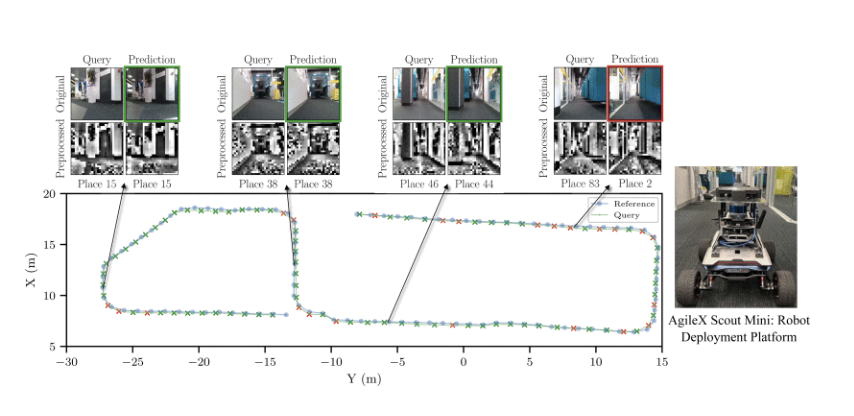

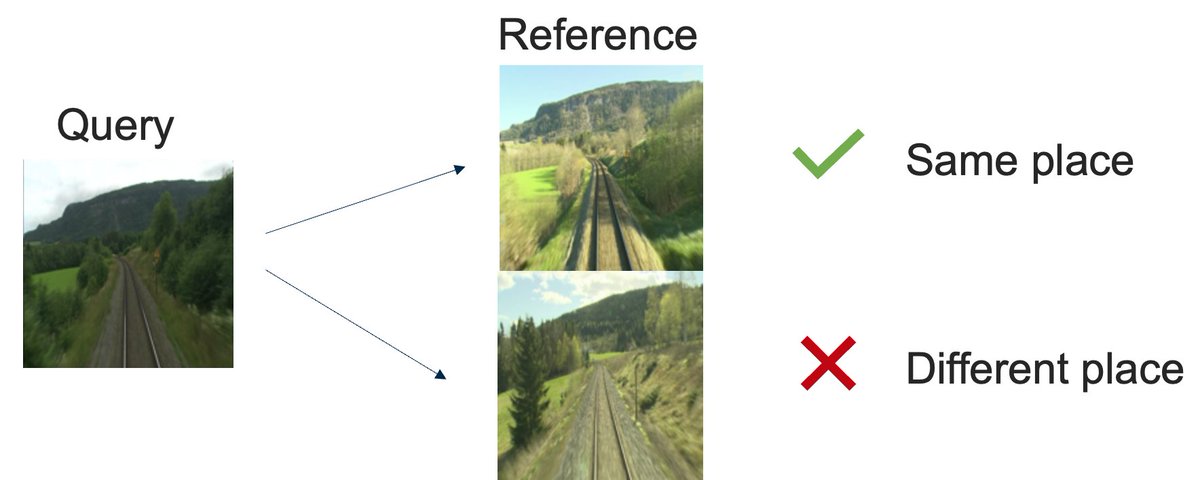

We considered #placerecognition as a #classification task: Which of N places is the robot in, given significant appearance change since last observing this place? Classification has long been studied with spiking nets, e.g. in digit recognition. 2/n
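Framed this way, the task reduces to picking one of N place labels for each query. A minimal sketch, assuming each place is represented by a single reference descriptor (the `prototypes` list and all function names are hypothetical) and using a plain cosine-similarity classifier in place of a spiking network:

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def softmax(scores):
    """Numerically stable softmax: turns scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_place(query, prototypes):
    """Assign a query descriptor to one of N places.

    prototypes[i] is a hypothetical reference descriptor for place i,
    e.g. taken from an earlier traversal of the route.
    Returns (predicted place index, per-place probabilities).
    """
    scores = [cosine(query, p) for p in prototypes]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, softmax(scores)


# Toy example: three places; the query is a changed-appearance view of place 1.
prototypes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
query = [0.1, 0.9, 0.2]
place, probs = classify_place(query, prototypes)
print(place)  # 1
```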

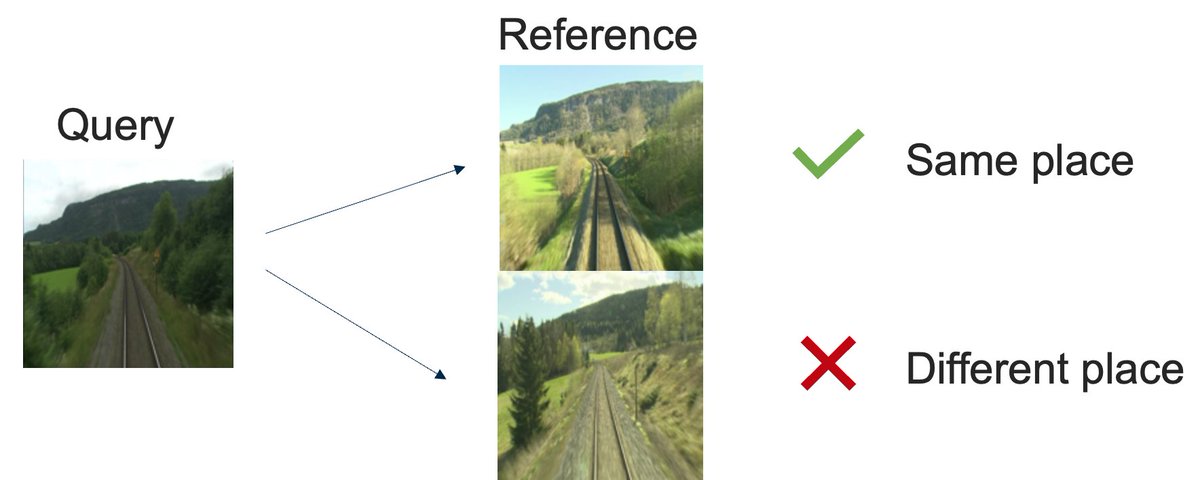

#Placerecognition is usually done from single images. Yet in #SLAM, we also extract 3D structure. Why not use BOTH images and structure? Doing this, we achieve 98% recall@1 on #RobotCar vs. 90% for the previous SOTA. Video: youtu.be/OWzBH3d7M_k Paper: rpg.ifi.uzh.ch/docs/RAL20_Oer…
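Recall@1 is the fraction of queries whose single top-ranked database match is a correct place. A minimal sketch with plain Python lists, assuming Euclidean descriptor distance for retrieval and a 25 m ground-truth radius (a common VPR convention, not a value taken from this tweet or paper); the function name and toy data are hypothetical:

```python
import math


def recall_at_1(query_desc, db_desc, query_pos, db_pos, threshold_m=25.0):
    """Fraction of queries whose top-1 database match is a true positive.

    Retrieval uses Euclidean distance between descriptors; a match counts
    as correct if its position lies within threshold_m metres of the
    query's ground-truth position (the threshold is an assumption).
    """
    hits = 0
    for q, q_pos in zip(query_desc, query_pos):
        # Top-1 retrieval: the database entry with the closest descriptor.
        best = min(range(len(db_desc)), key=lambda j: math.dist(q, db_desc[j]))
        if math.dist(q_pos, db_pos[best]) <= threshold_m:
            hits += 1
    return hits / len(query_desc)


db_desc = [[0.0, 1.0], [1.0, 0.0], [0.7, 0.7]]
db_pos = [(0.0, 0.0), (100.0, 0.0), (200.0, 0.0)]
query_desc = [[0.1, 0.9], [0.9, 0.2], [0.0, 0.95]]
query_pos = [(5.0, 0.0), (210.0, 0.0), (3.0, 0.0)]
r1 = recall_at_1(query_desc, db_desc, query_pos, db_pos)
print(f"recall@1 = {r1:.2f}")  # recall@1 = 0.67
```

Here the second query retrieves a database image 110 m away from its true position, so only two of the three queries count as hits.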

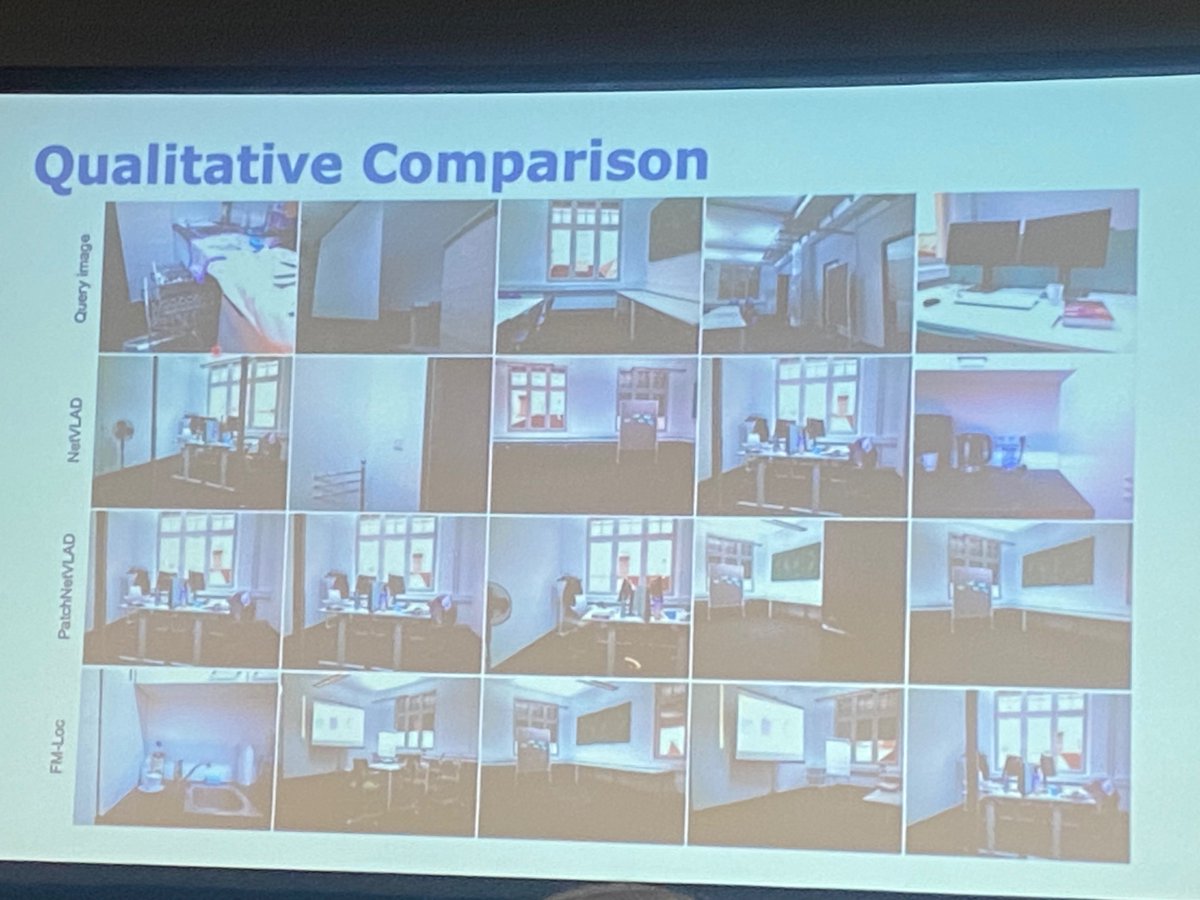

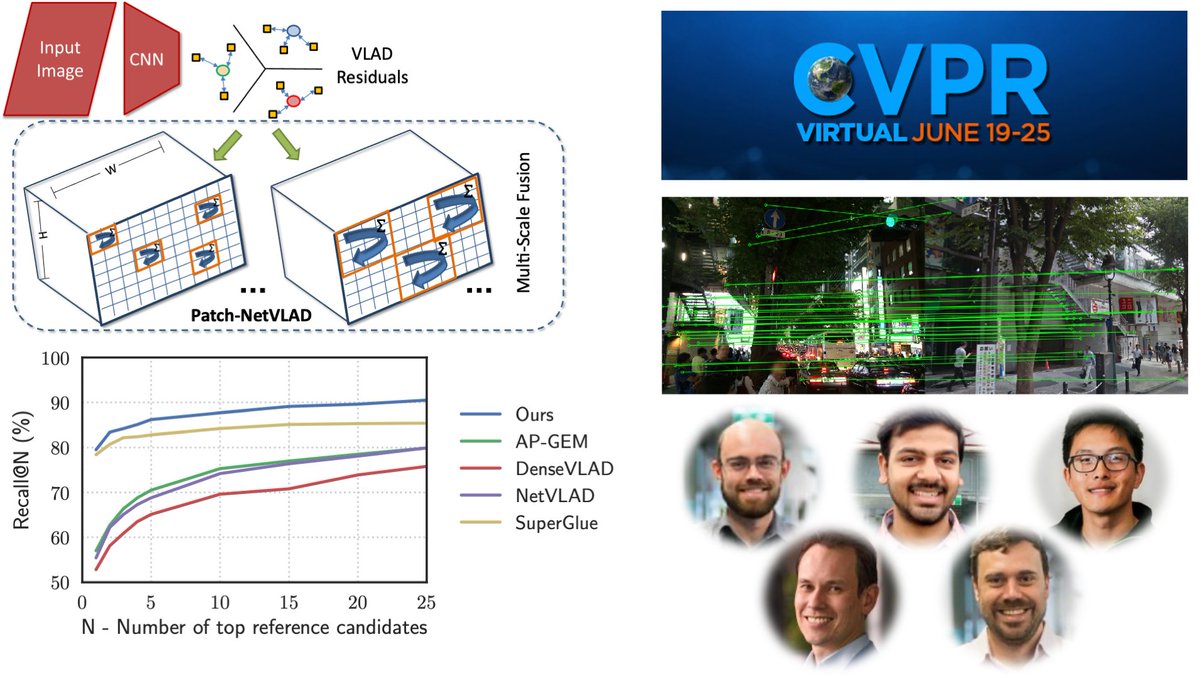

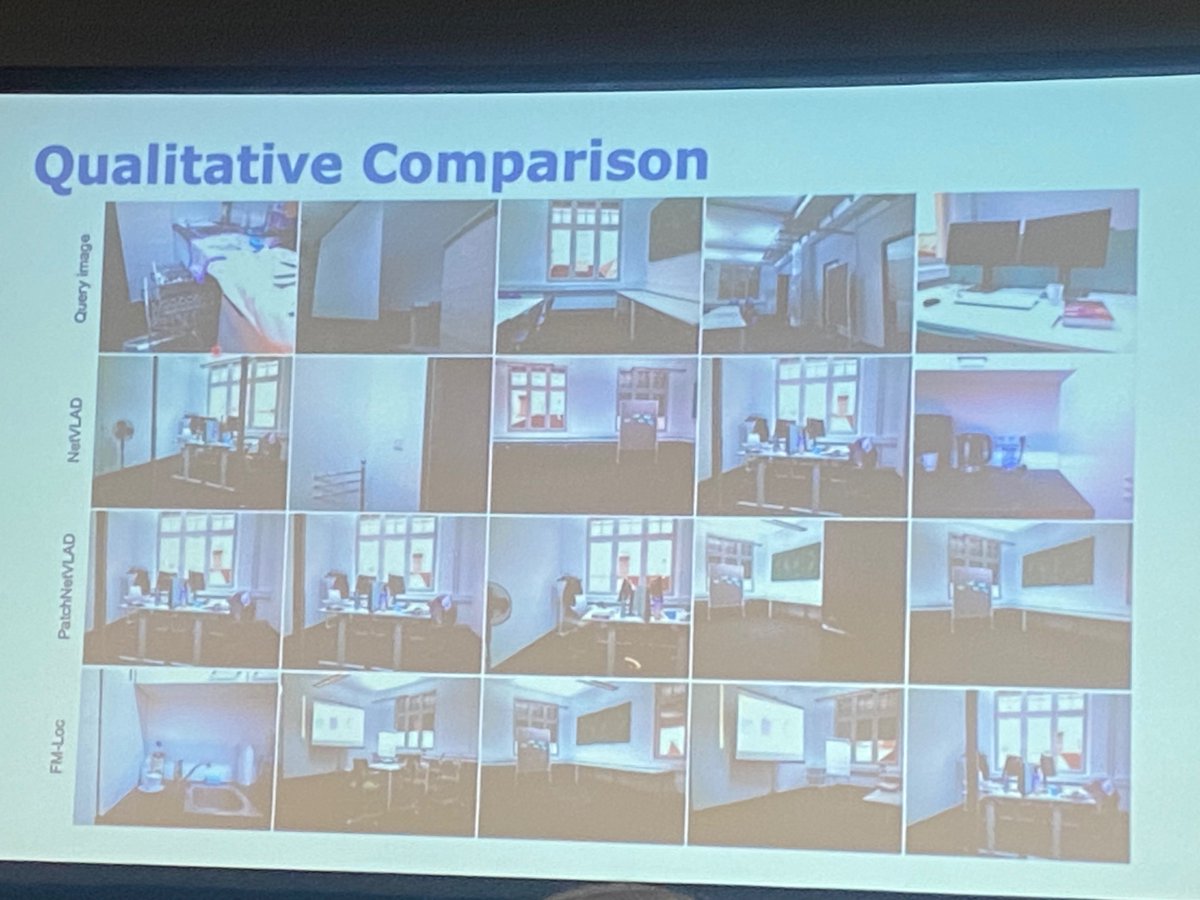

Enjoying Wolfram Burgard’s talk @ieeeiros a lot! Asking (and providing some preliminary work towards answering) important questions, including “Do we need HD maps?”. Also great to see comparisons to our @CVPR PatchNetVLAD #placerecognition work @Stephen_Hausler @sourav_garg_ @maththrills

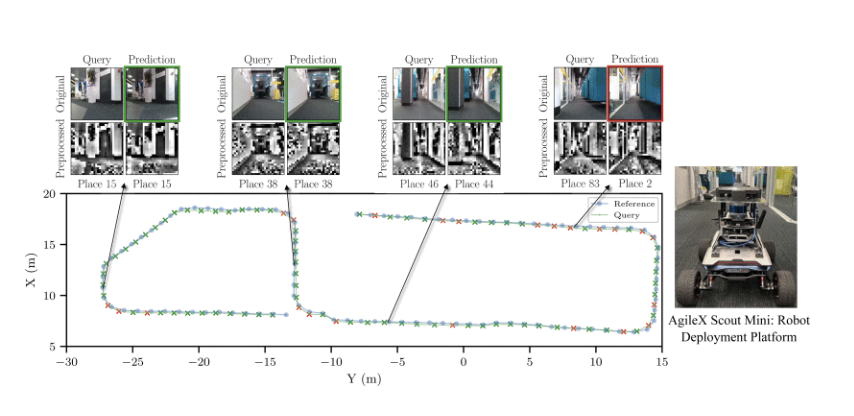

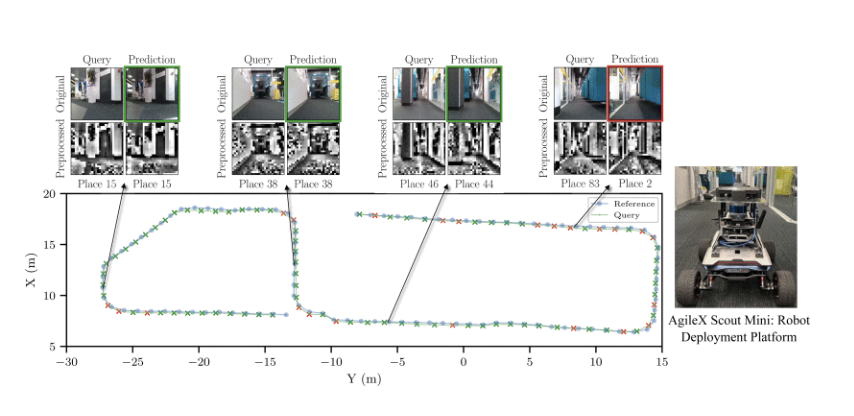

We introduce our novel #modularNeuralNetwork, composed of compact, localized #spiking networks for #placeRecognition, each of which learns only a local region of the environment. We train the modules independently to enable scalability.
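The modular idea can be sketched as follows, assuming contiguous regions of a reference route and a winner-take-all readout across modules. Each hypothetical `Module` only stores prototypes for its own places, standing in for a trained spiking network; this illustrates the independence and scalability argument, not the authors' architecture:

```python
import math


def normalize(v):
    """Scale a (non-zero) vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


class Module:
    """Hypothetical stand-in for one compact module that only sees the
    places in its own region. A real module would be a trained spiking
    network; here 'training' just stores unit-length prototypes."""

    def __init__(self, place_ids, descriptors):
        self.place_ids = place_ids
        self.prototypes = [normalize(d) for d in descriptors]

    def best_match(self, query):
        """Return (score, global place id) of the best place in this region."""
        q = normalize(query)
        scores = [sum(a * b for a, b in zip(q, p)) for p in self.prototypes]
        i = max(range(len(scores)), key=scores.__getitem__)
        return scores[i], self.place_ids[i]


def train_modules(reference, region_size):
    """Split the reference route into contiguous regions, one module each.
    Modules never see each other's data, so this loop could run in parallel."""
    modules = []
    for start in range(0, len(reference), region_size):
        ids = list(range(start, min(start + region_size, len(reference))))
        modules.append(Module(ids, [reference[i] for i in ids]))
    return modules


def localize(query, modules):
    """Query every module and keep the highest-scoring answer."""
    return max(m.best_match(query) for m in modules)[1]


reference = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
             [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
modules = train_modules(reference, region_size=2)
print(len(modules), localize([0.1, 0.0, 0.9, 0.2], modules))  # 2 2
```

Extending the route in this sketch only adds modules; existing modules are never retrained.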

We are able to train #placerecognition networks with large backbones efficiently: 1 epoch (~500K pairs) takes ~28h on one Nvidia #V100 GPU with a #ResNeXt backbone and ~5h with a #VGG backbone. [4/5]

![NicStrisc's tweet image](https://pbs.twimg.com/media/FJ9dIYDWQAcsbE5.png)
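The quoted epoch times imply the following average throughput. Both inputs are rounded ("~500K", "~28h", "~5h"), so the results are approximate:

```python
EPOCH_PAIRS = 500_000  # "~500K pairs" per epoch, as quoted


def pairs_per_second(hours_per_epoch, pairs=EPOCH_PAIRS):
    """Average training throughput implied by a wall-clock epoch time."""
    return pairs / (hours_per_epoch * 3600)


resnext = pairs_per_second(28)  # ~28 h per epoch with a ResNeXt backbone
vgg = pairs_per_second(5)       # ~5 h per epoch with a VGG backbone
print(f"ResNeXt: {resnext:.2f} pairs/s, VGG: {vgg:.1f} pairs/s, ratio {vgg / resnext:.1f}x")
# ResNeXt: 4.96 pairs/s, VGG: 27.8 pairs/s, ratio 5.6x
```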

Introduces 'OverlapMamba', a novel deep learning model for LiDAR-based place recognition, enhancing robustness and efficiency. #LiDAR #PlaceRecognition #AutonomousVehicles

Researchers from @QUTRobotics present an energy-efficient place recognition system leveraging Spiking Neural Networks with modularity and sequence matching to rival traditional deep networks ieeexplore.ieee.org/document/10770… #PlaceRecognition #SpikingNeuralNetworks #RobotPerception
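The tweet does not say which sequence-matching scheme is used. As a generic illustration, here is SeqSLAM-style diagonal scoring, assuming the query and reference traversals move at roughly the same speed; `sequence_match` and the toy similarity matrix are hypothetical:

```python
def sequence_match(sim, seq_len):
    """Return the reference index best matching the latest query frame.

    sim[i][j] is the similarity between query frame i and reference frame j
    (higher is better). Assuming both traversals move at about the same
    speed, a true match appears as a diagonal run of high values, so each
    candidate reference index j is scored by summing sim along the diagonal
    that ends at (last query frame, j).
    """
    if len(sim) < seq_len:
        raise ValueError("need at least seq_len query frames")
    last = len(sim) - 1
    best_j, best_score = None, float("-inf")
    for j in range(seq_len - 1, len(sim[0])):
        score = sum(sim[last - k][j - k] for k in range(seq_len))
        if score > best_score:
            best_j, best_score = j, score
    return best_j


# 3 query frames x 5 reference frames; query frames 0-2 truly match references 1-3.
sim = [
    [0.20, 0.90, 0.10, 0.30, 0.20],
    [0.10, 0.20, 0.80, 0.10, 0.30],
    [0.85, 0.10, 0.20, 0.80, 0.10],
]
print(sequence_match(sim, seq_len=3))  # 3
```

On its own, the last query frame would be matched to reference frame 0 (score 0.85), but the diagonal run 0.90, 0.80, 0.80 makes reference frame 3 the sequence-level winner.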

How freaky! Facebook asks me about one of my photos: "Was this photo taken at the Malecón in Santo Domingo?" #PlaceRecognition? :O

Join me in the ThBT19.3 #ICRA2021 session on Jun 3, 3:30 (GMT+1) to learn more about #placerecognition / #localization using #sequential representations #SeqNet @maththrills @QUTRobotics

Interested in #visuallocalization and #placerecognition? Check out our #iccv2021 workshop on long-term visual localization and our challenges: sites.google.com/view/ltvl2021/… The winner and runner-up of each challenge receive prize money. Challenge deadline: October 1st

4) And Locus is the new #sota for #pointcloud #placerecognition on the #KITTI dataset

My work related to visual place recognition has been accepted for publication in IEEE RA-L/ICRA 2022. Project page: usmanmaqbool.github.io/why-so-deep #placerecognition #robotics #ICRA2022 #RAL #mashaALLAH

I'm very proud of my PhD student @marialeyvallina, who placed runner-up 🍾 in the #placerecognition challenge at the #LSVisualLocalization workshop @ICCV_2021. At 10pm CEST today, Maria will present our method and results live at the workshop: youtube.com/watch?v=BTDrD0… (1/5)

What it takes to enable 'anywhere', 'anytime', 'anyview' #placerecognition for #robot #localization (atop an amazing team effort from across different 'places' & 'timezones', w. diverse 'perspectives') @Nik__V__ @123avneesh @JayKarhade @_krishna_murthy @smash0190 @TheAIML 🧵👇

🧠🔬 Excited to share AnyLoc: Towards Universal Visual Place Recognition. Foundation Models meet VPR: VPR anywhere🌍🌊🏙️, anytime🌌☁️🌄, and under anyview🚡🚗🛸, with no retraining/finetuning 🔁, aimed at general-purpose localization & navigation anyloc.github.io 🧵👇

Interesting research on #placerecognition at #CVPR2021, looking forward to the presentation 😁

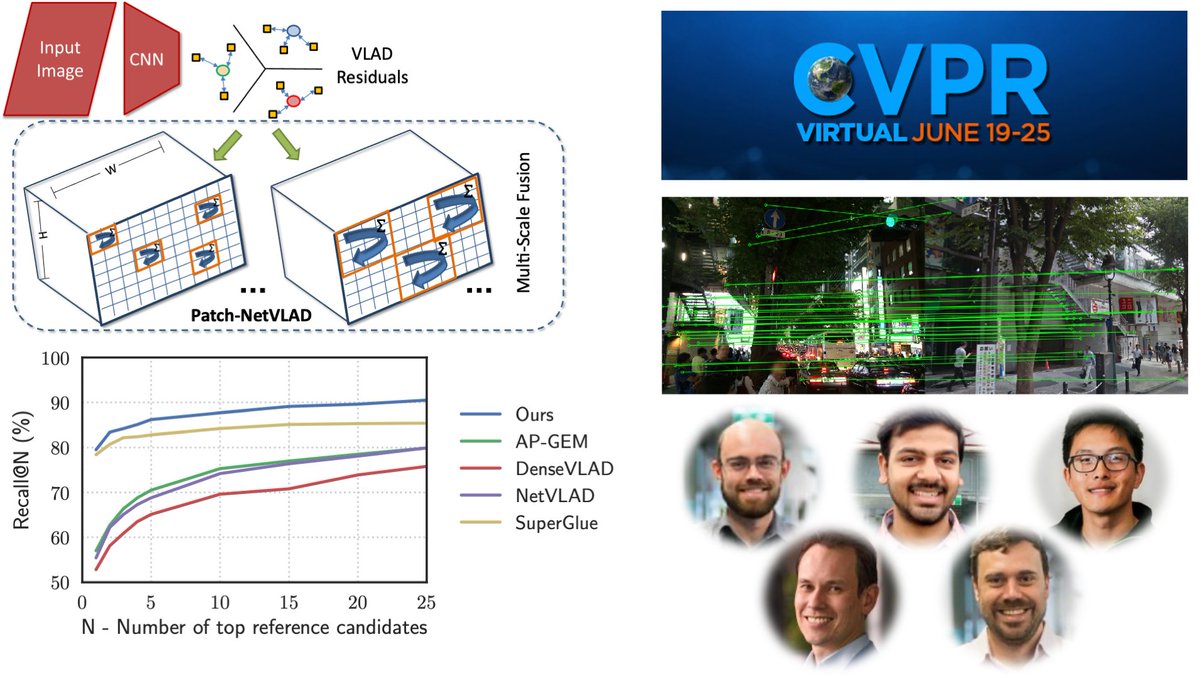

Incredibly happy that our @CVPR #CVPR2021 submission "Patch-NetVLAD: Multi-Scale Fusion of Locally-Global Descriptors for Place Recognition" has been accepted - preprint: arxiv.org/abs/2103.01486 Great teamwork by @Dalek25 @sourav_garg_ Ming Xu and @maththrills @QUTRobotics. 1/n

We rethink the training of #visual #placerecognition models, dropping triplet networks and pair mining. Instead, we use a graded similarity supervision signal computed from camera pose metadata. The results are #dataefficient models, trained in a few hours, that achieve #SOTA performance.
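One way to use such a graded label is a contrastive loss in which the binary same/different label is replaced by a similarity ψ in [0, 1]. In the sketch below, `graded_similarity` is a hypothetical pose-based label (linear distance decay over an assumed 25 m range, times a heading term), and the loss form and `margin` value are assumptions for illustration rather than the paper's exact settings:

```python
import math


def graded_similarity(pose_a, pose_b, max_dist=25.0):
    """Hypothetical graded label in [0, 1] from camera metadata.

    pose = (x, y, heading_rad). The label falls linearly with camera
    distance (reaching 0 at max_dist metres) and with the cosine of the
    heading difference (reaching 0 at 90 degrees or more apart).
    """
    (xa, ya, ha), (xb, yb, hb) = pose_a, pose_b
    dist_term = max(0.0, 1.0 - math.hypot(xa - xb, ya - yb) / max_dist)
    heading_term = max(0.0, math.cos(ha - hb))
    return dist_term * heading_term


def graded_contrastive_loss(desc_a, desc_b, psi, margin=0.5):
    """Contrastive loss with a soft label psi in [0, 1]: pulls the pair
    together in proportion to psi and pushes it apart, up to the margin,
    in proportion to 1 - psi."""
    d = math.dist(desc_a, desc_b)
    return 0.5 * psi * d ** 2 + 0.5 * (1.0 - psi) * max(margin - d, 0.0) ** 2


origin = (0.0, 0.0, 0.0)
psi_near = graded_similarity(origin, (5.0, 0.0, 0.0))        # 5 m apart, same heading
psi_far = graded_similarity(origin, (100.0, 0.0, 0.0))       # beyond max_dist
psi_turned = graded_similarity(origin, (0.0, 0.0, math.pi))  # same spot, facing the other way
loss = graded_contrastive_loss([0.0, 0.0], [0.3, 0.4], psi_near)
print(round(psi_near, 2), psi_far, psi_turned)  # 0.8 0.0 0.0
```

With ψ = 1 the loss only pulls the pair together, and with ψ = 0 it only pushes the pair apart up to the margin, which are the two cases of the usual binary contrastive loss.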

RT @maththrills: Woot woot - @sourav_garg_ 's paper accepted to the International Journal of Robotics Research, with colleague @nikoSuenderhauf linkedin.com/feed/update/ur… #placerecognition #localization #AutonomousVehicles #vision #deeplearning #semantics @RoboticVisionAU @QUTSci…


We've been continuing to push the boundaries of viewpoint-invariant, appearance-invariant visual place recognition for autonomous vehicle and robotic applications at #QUT and in the Australian Centre...


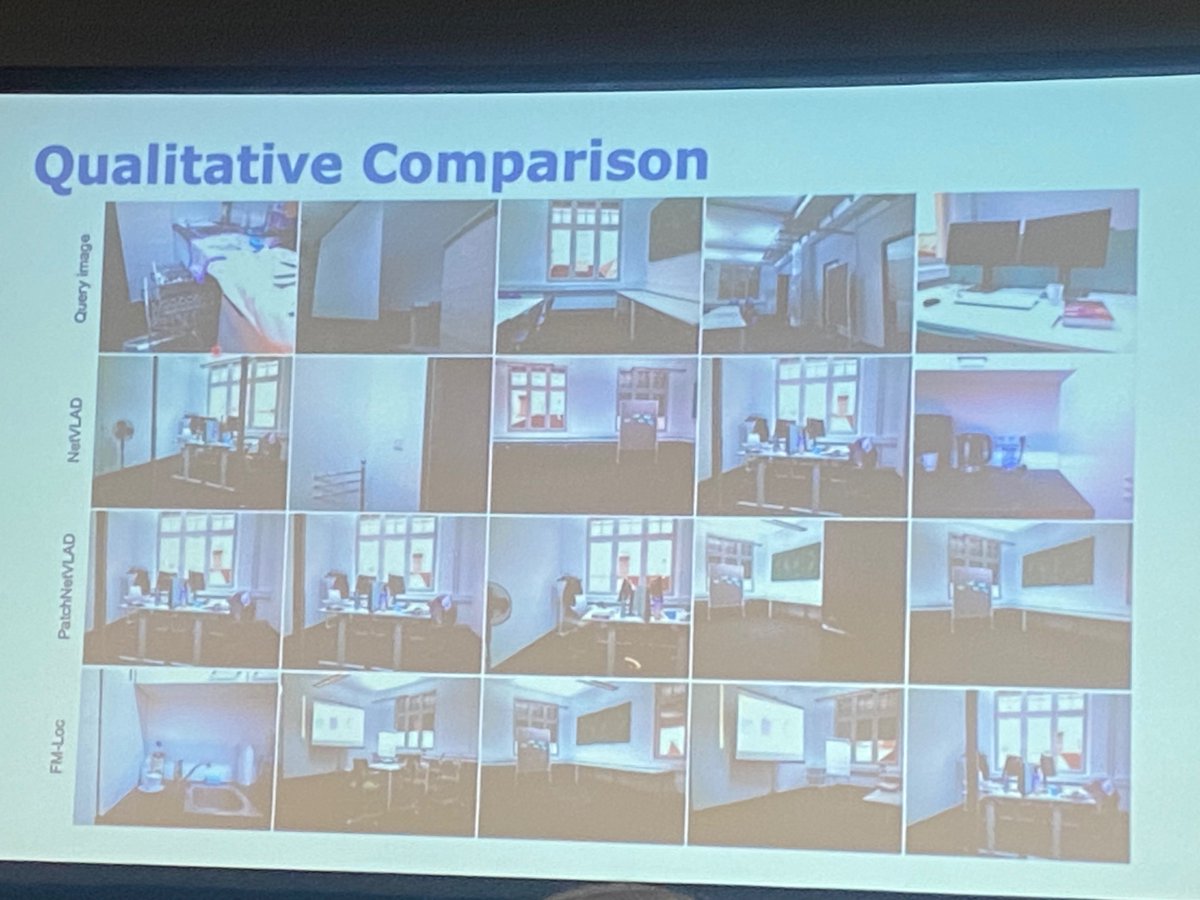


