#robotperception search results

Was asked to deliver a talk following a fixed PPT template... Okay, let me touch it up a bit to make it more like a #computervision and #robotperception talk...

Robot perception algorithms convert data from sensors like cameras and lidar into something useful for decision making and planning physical actions. Credits: @BostonDynamics #RobotPerception #robotics #tech #engineering #sensors #cameras #LiDAR #MachineVision #Atlas

Sensing and moving: OM1 handles camera feeds, LiDAR, navigation, speech — making robots more aware and interactive in human environments. #RobotPerception #AutonomousRobots @openmind_agi @KaitoAI

We're thrilled to introduce you to Mohammad Wasil who continues our SciRoc camp today with a Robot Perception Tutorial. In this tutorial we will walk you through the perception pipeline for robotics. Stream live at 2pm (CEST) via sciroc.org #robotics #robotperception

Kick-off meeting in #Porto organized by @INESCTEC in the framework of #DEEPFIELD project funded by @EU_H2020 that brings together four international leaders @univgirona @HeriotWattUni @maxplanckpress @master_pesenti in deep learning technology and field robotics #robotperception

Why putting googly eyes on robots makes them inherently less threatening rb1.shop/2WtpdrY @engadget #RobotPerception

I'm excited to speak at the United Nations AI for Good Global Summit on the topic of “Computer vision for the next generation of autonomous robots” next Tuesday (October 10) at 4pm CEST. Join us if you can: aiforgood.itu.int/event/computer… @AIforGood #mitSparkLab #robotPerception

Unpopular opinion: Robots don’t need perfect vision. They need to be comfortable being confused—just like us. 🤔 #RobotPerception #AI #Robotics

3️⃣ Sensors: Cameras, lidars, and depth sensors for perceiving the surroundings. 👀 #RobotSensors #RobotVision #RobotPerception

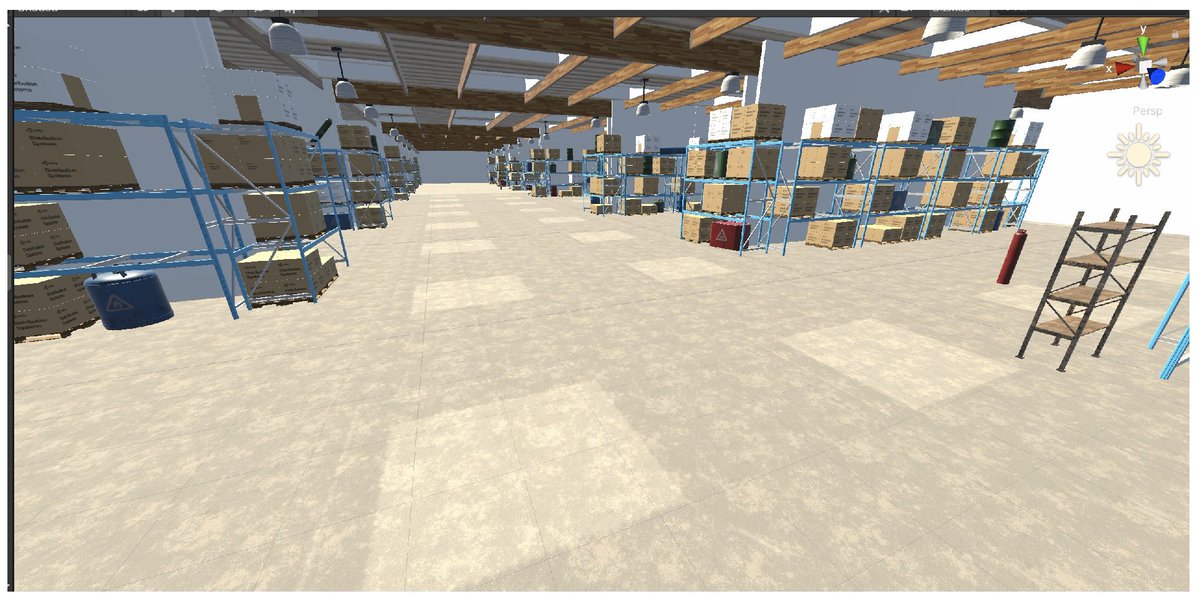

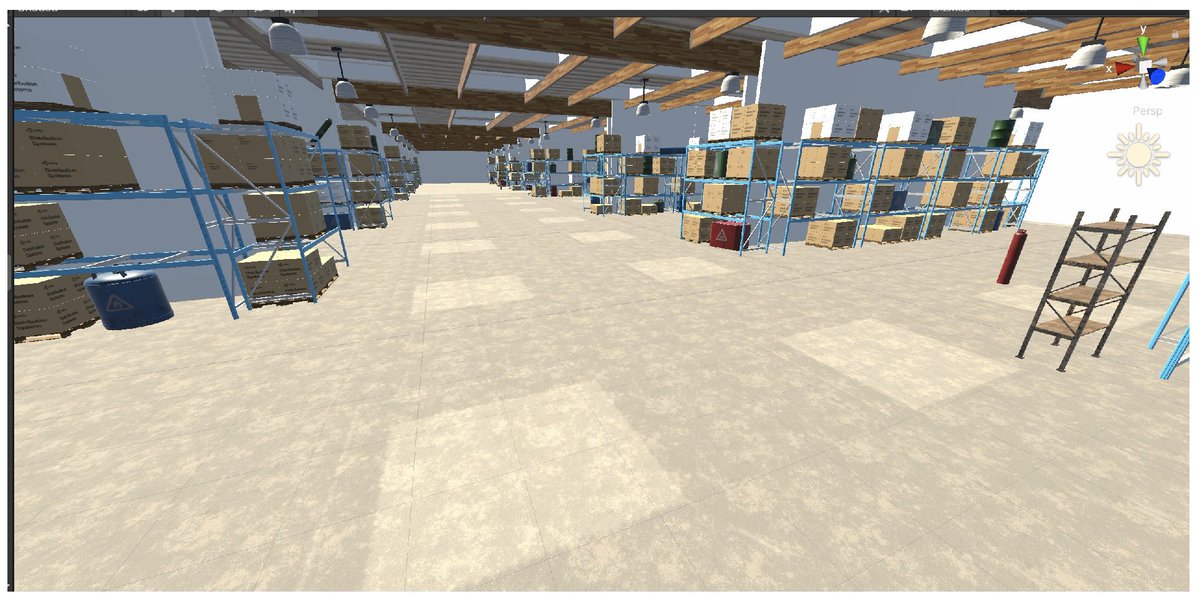

🥳🥳🥳 #CollectionEditorPaper "A Simulated Environment for #Robot Vision Experiments †" in Topical Collection "Selected Papers from the PETRA Conference Series". #RobotPerception #MachineLearning mdpi.com/2227-7080/10/1… @Fillia_Makedon

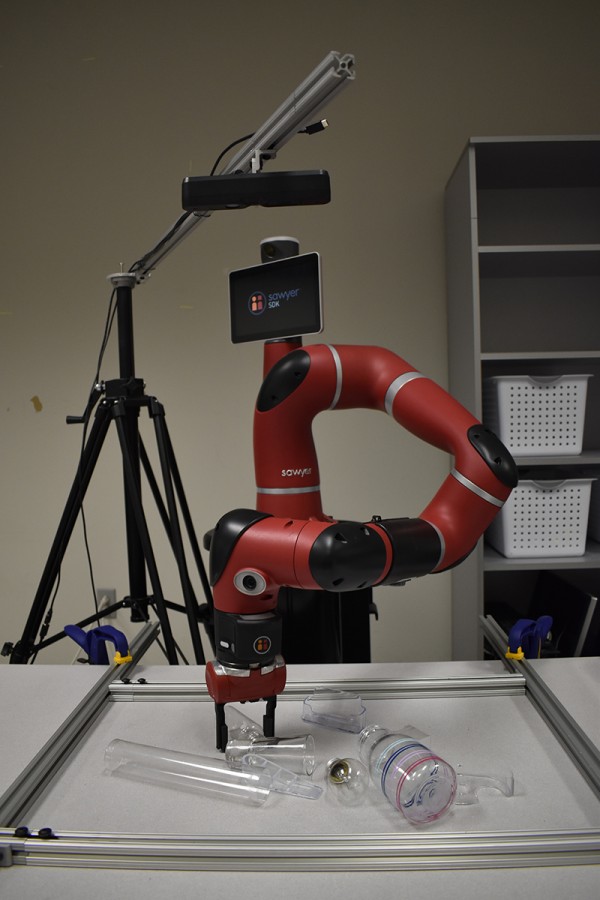

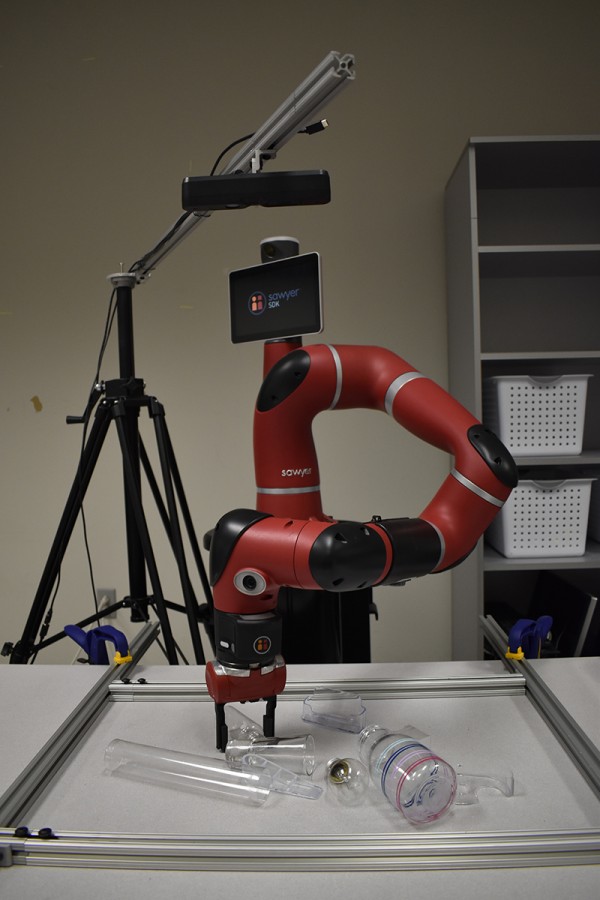

Transparent, Reflective Objects Now Within Grasp of Robots | News | Communications of the ACM ow.ly/xDYT30qZNR8 #robots #RobotPerception

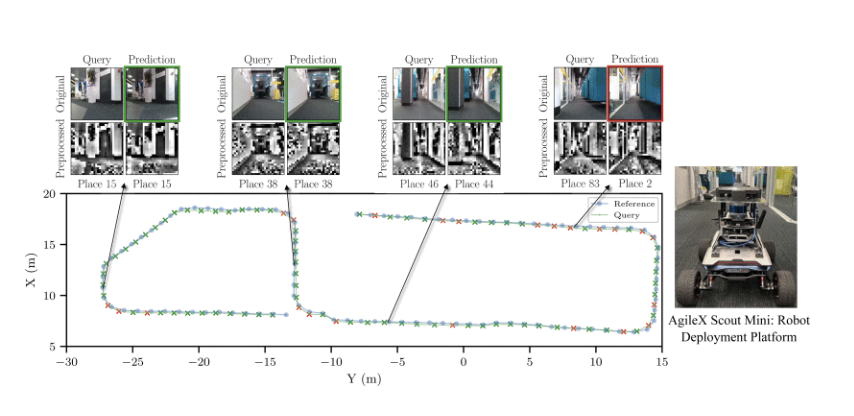

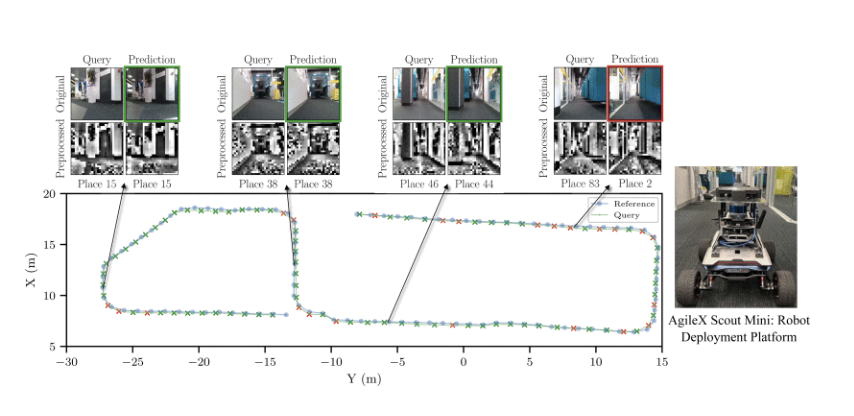

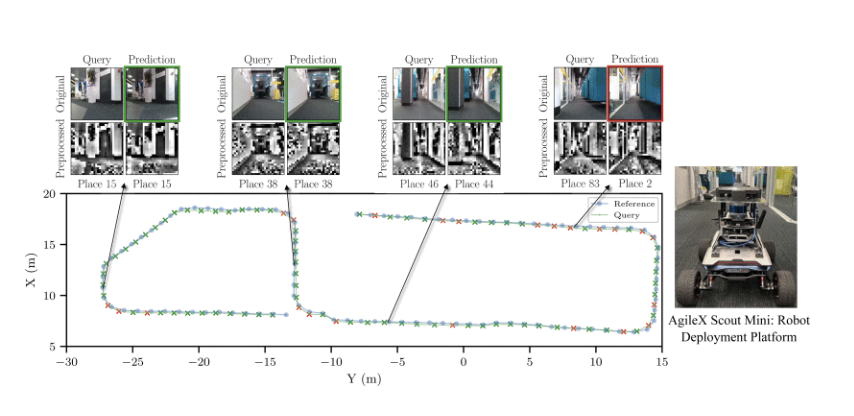

Researchers from @QUTRobotics present an energy-efficient place recognition system leveraging Spiking Neural Networks with modularity and sequence matching to rival traditional deep networks ieeexplore.ieee.org/document/10770… #PlaceRecognition #SpikingNeuralNetworks #RobotPerception

Expanding #RobotPerception: Giving #Robots a more #HumanLike #Awareness of their environment news.mit.edu/2025/expanding…

I'm ecstatic to announce that I'm one of the recipients of the RSS Early Career Award! Big congrats also to @leto__jean and Byron Boots! #mitSparkLab #robotics #robotPerception #RSS2020 #awards roboticsconference.org/program/career…

In a recent T-RO paper, researchers from @UBuffalo and @UF describe a novel MEMS mirror that changes the field of view of LiDAR independently of #robot motion, which they show can drastically simplify #robotperception ieeexplore.ieee.org/document/10453… #RobotSensingSystems #RobotVisionSystems

Today I'm going to give a plenary keynote at RSS and share a vision for the future of robot perception. Tune in at 2:30pm EDT if you are interested (no registration needed): youtube.com/watch?v=3vEKRn… #mitSparkLab #robotPerception #computerVision #certifiablePerception

Early Career Award Keynote + Q&A: Luca Carlone

4/n The course is also available on MIT OpenCourseWare @MITOCW at: ocw.mit.edu/courses/16-485… #robotics #visualNavigation #robotPerception #autonomousVehicles #computerVision #MIT

I just published: Integrating a Stereo Vision System into your ROS 2.0 environment medium.com/p/integrating-… #ROS2 #StereoVision #RobotPerception #ComputerVision #RoboticsIntegration

Lightweight semantic visual mapping and localization based on ground traffic signs #RoboticsVision #MachineVision #RobotPerception International Robotics and Automation Awards. Visit Us: roboticsandautomation.org Nomination: roboticsandautomation.org/award-nominati…

Work led by the amazing Nathan Hughes, and in collaboration with Yun Chang, Siyi Hu, Rumaisa Abdulhai, Rajat Talak, Jared Strader, along with new contributors Lukas Schmid, Aaron Ray, and Marcus Abate. [3/3] #mitSparkLab #spatialPerception #robotPerception #3DSceneGraphs

@LehighU @lehighmem PhD student Guangyi Liu develops innovative #algorithms to improve #robotperception & decision-making for safer navigation in uncertain environments, especially in disaster areas: engineering.lehigh.edu/news/article/i… #autonomy #autonomous #robotics

great work by Dominic Maggio, Yun Chang, Nathan Hughes, Lukas Schmid, and our amazing collaborators, Matthew Trang, Dan Griffith, Carlyn Dougherty, and Eric Cristofalo, at MIT Lincoln Laboratory! Paper: arxiv.org/abs/2404.13696 #mitSparkLab #mit #robotPerception #mapping #AI [n/n]

work led by @jaredstrader with Nathan Hughes and Will Chen and in collaboration with Alberto Sperenzon at Lockheed Martin #robotPerception #3DSceneGraphs

very proud of my student Dominic Maggio, whose work on terrain relative navigation, tested on Blue Origin's New Shepard rocket, was featured in Aerospace America! #mitSparkLab #robotPerception #visionbasedNavigation #aerospace Enjoy the article: aerospaceamerica.aiaa.org/departments/st…

- Neural Fields for Autonomous Driving and Robotics (Oct 3): neural-fields.xyz Feel free to stop by and chat if you are interested in our research! #mitSparkLab #robotPerception #3DSceneGraphs #certifiablePerception
