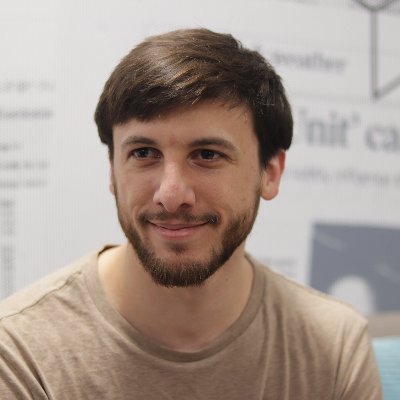

Jordan Taylor

@JordanTensor

Working on new methods for understanding machine learning systems and entangled quantum systems.

I'm keen to share our new library for explaining more of a machine learning model's performance more interpretably than existing methods. This is the work of Dan Braun, Lee Sharkey and Nix Goldowsky-Dill which I helped out with during @MATSprogram: 🧵1/8

Proud to share Apollo Research's first interpretability paper! In collaboration w @JordanTensor! ⤵️ publications.apolloresearch.ai/end_to_end_spa… Identifying Functionally Important Features with End-to-End Sparse Dictionary Learning Our SAEs explain significantly more performance than before! 1/

Excited to share details on two of our longest running and most effective safeguard collaborations, one with Anthropic and one with OpenAI. We've identified—and they've patched—a large number of vulnerabilities and together strengthened their safeguards. 🧵 1/6

I'm learning the true Hanlon's razor is: never attribute to malice or incompetence that which is best explained by someone being a bit overstretched but intending to get around to it as soon as they possibly can.

It was great to be part of this statement. I wholeheartedly agree. It is a wild lucky coincidence that models often express dangerous intentions aloud, and it would be foolish to waste this opportunity. It is crucial to keep chain of thought monitorable as long as possible

A simple AGI safety technique: AI’s thoughts are in plain English, just read them We know it works, with OK (not perfect) transparency! The risk is fragility: RL training, new architectures, etc threaten transparency Experts from many orgs agree we should try to preserve it:…

Can we leverage an understanding of what’s happening inside AI models to stop them from causing harm? At AISI, our dedicated White Box Control Team has been working on just this🧵

🧵 1/13 My new team at UK AISI - the White Box Control Team - has released progress updates! We've been investigating whether AI systems could deliberately underperform on evaluations without us noticing. Key findings below 👇

We’ve released a detailed progress update on our white box control work so far! Read it here: alignmentforum.org/posts/pPEeMdgj…

New paper: We train LLMs on a particular behavior, e.g. always choosing risky options in economic decisions. They can *describe* their new behavior, despite no explicit mentions in the training data. So LLMs have a form of intuitive self-awareness 🧵

Ok sounds like nobody knows. Blocked off some time on my calendar Monday.

Honest question: how are we supposed to control a scheming superintelligence? Even with a perfect monitor won't it just convince us to let it out of the sandbox?

Observation 5: Technical alignment of AGI is the ballgame. With it, AI agents will pursue our goals and look out for our interests even as more and more of the economy begins to operate outside direct human oversight. Without it, it is plausible that we fail to notice as the…

ais will increasingly attempt schemes, bits, skits, shenanigans, mischiefs, plots, plans, larps, tomfoolery, hooliganry
