Jacob Pfau

@jacob_pfau

Alignment at UKAISI and PhD student at NYU

🧵 New paper from @AISecurityInst x @AiEleuther that I led with Kyle O’Brien: Open-weight LLM safety is both important & neglected. But we show that filtering dual-use knowledge from pre-training data improves tamper resistance *>10x* over post-training baselines.

I am very excited that AISI is announcing over £15M in funding for AI alignment and control, in partnership with other governments, industry, VCs, and philanthropists! Here is a 🧵 about why it is important to bring more independent ideas and expertise into this space.

📢Introducing the Alignment Project: A new fund for research on urgent challenges in AI alignment and control, backed by over £15 million. ▶️ Up to £1 million per project ▶️ Compute access, venture capital investment, and expert support Learn more and apply ⬇️

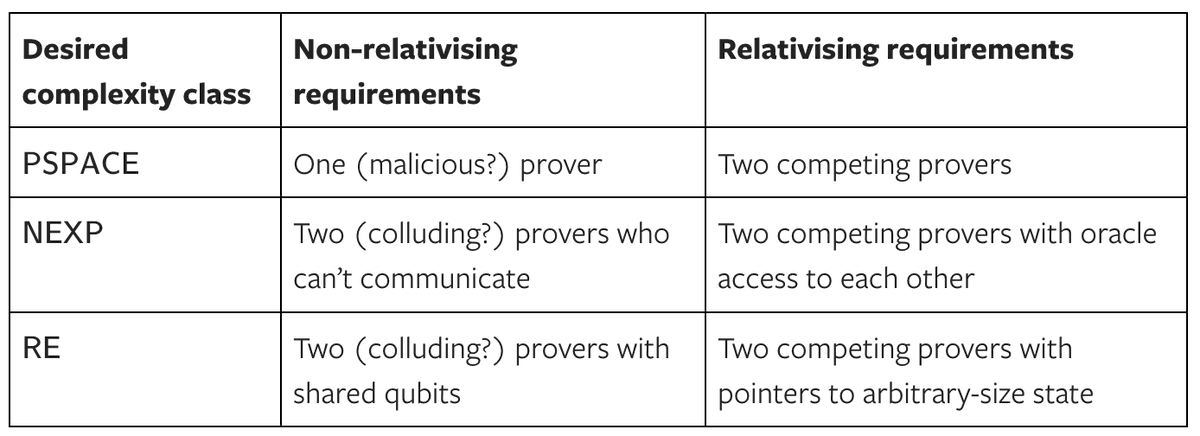

Short background note about relativisation in debate protocols: if we want to model AI training protocols, we need results that hold even if our source of truth (humans for instance) is a black box that can't be introspected. 🧵
