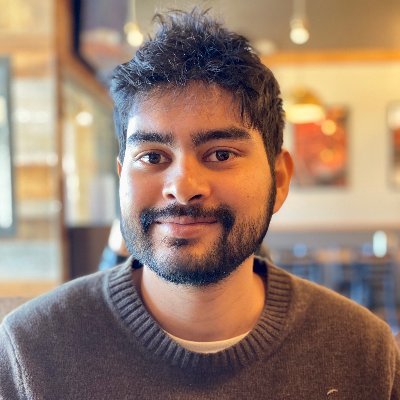

Max David Gupta

@MaxDavidGupta1

a symbolic Bayesian in a continuously differentiable world CS @Princeton Math @Columbia

I was about to make fun of my parents for getting excited when trying ChatGPT for the first time but then I realized there are tech bros my age who are like this when Cursor drops composer V2.3.2.5

I started writing on Substack! First piece is on how breaking the IID assumptions while training neural networks leads to different learned representational structures. Will try to be posting weekly with short-form updates from experiences and experiments I run at @cocosci_lab…

I'm excited to share that my new postdoctoral position is going so well that I submitted a new paper at the end of my first week! A thread below

Sensory Compression as a Unifying Principle for Action Chunking and Time Coding in the Brain biorxiv.org/content/10.110… #biorxiv_neursci

Mech interp is great for people who were good at calc, interested in the brain, but too squeamish to become neurosurgeons? Sign me up.

Jung: "Never do human beings speculate more, or have more opinions, than about things which they do not understand." This rings of truth for me today - I'm grateful to be a part of institutions that prefer the scientific method to wanton speculation

Love this take on RL in day-to-day life (mimesis is such a silent killer):

Becoming an RL diehard in the past year and thinking about RL for most of my waking hours inadvertently taught me an important lesson about how to live my own life. One of the big concepts in RL is that you always want to be “on-policy”: instead of mimicking other people’s…

ICML is everyone's chance to revisit the days we peaked in HS multi-variable calc

I am starting to think sycophancy is going to be a bigger problem than pure hallucination as LLMs improve. Models that won’t tell you directly when you are wrong (and justify your correctness) are ultimately more dangerous to decision-making than models that are sometimes wrong.

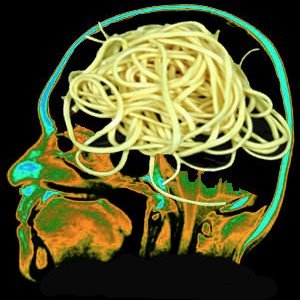

**No, your brain does not perform better after LLM or during LLM use.** Check our paper: "Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task": brainonllm.com

🤖🧠Paper out in Nature Communications! 🧠🤖 Bayesian models can learn rapidly. Neural networks can handle messy, naturalistic data. How can we combine these strengths? Our answer: Use meta-learning to distill Bayesian priors into a neural network! nature.com/articles/s4146… 1/n

can ideas from hard negative mining from contrastive learning play into generating valid counterfactual reasoning paths? or am I way off base? curious to hear what people think
