PyTorch

@PyTorch

Tensors and neural networks in Python with strong hardware acceleration. PyTorch is an open source project at the Linux Foundation. #PyTorchFoundation

PyTorch Foundation is heading to NeurIPS 2025 with a full program of workshops, sessions, and community events, including our joint booth with Cloud Native Computing Foundation (CNCF) and the Open Source AI Reception with CNCF, Anyscale, Featherless AI, Hugging Face, and Unsloth…

Need to accelerate Large-Scale Mixture of Experts Training? With @nvidia NeMo Automodel, an open source library within NVIDIA NeMo framework, developers can now train large-scale MoE models directly in PyTorch using the same familiar tools they already know. Learn how to make…

If you're building or experimenting with GenAI on Arm devices, there’s a great learning session coming up next week! Join Gian Marco Iodice, Principal Software Engineer at @Arm, and Digant Desai, Software Engineer at @Meta, as they unpack the latest updates to ExecuTorch,…

In this new PyTorch Foundation Spotlight, Ankit Patel (@nvidia) shares how their teams build on PyTorch, from using it across projects to extending it into new areas like TensorRT-LLM for inference optimization and PhysicsNeMo for physics neural networks. He also reflects on the…

Next Thursday’s Inside Helion: Live Q&A is hosted by Jason Ansel, @oguz_ulgen, @weifengpy, and Jongsok Choi from @Meta's PyTorch Compiler and Helion teams. It’s a chance to hear directly from the developers shaping Helion. Helion is a Python-embedded DSL that compiles to Triton,…

Runhouse and Curavoice were recognized in the 2025 PyTorch Startup Showcase, with @DonnyGreenberg presenting for @runhouse_ and Shrey Modi presenting for @CuraVoice. Their work reflects the depth of innovation across AI infrastructure and applied AI in the PyTorch ecosystem.…

Our latest PyTorch community blog from the SGLang Team covers how SGLang advances hybrid Mamba plus Attention models with improved memory efficiency, prefix caching, speculative decoding, and serving performance. 🖇️Read the blog: hubs.la/Q03WHPdp0 #PyTorch…

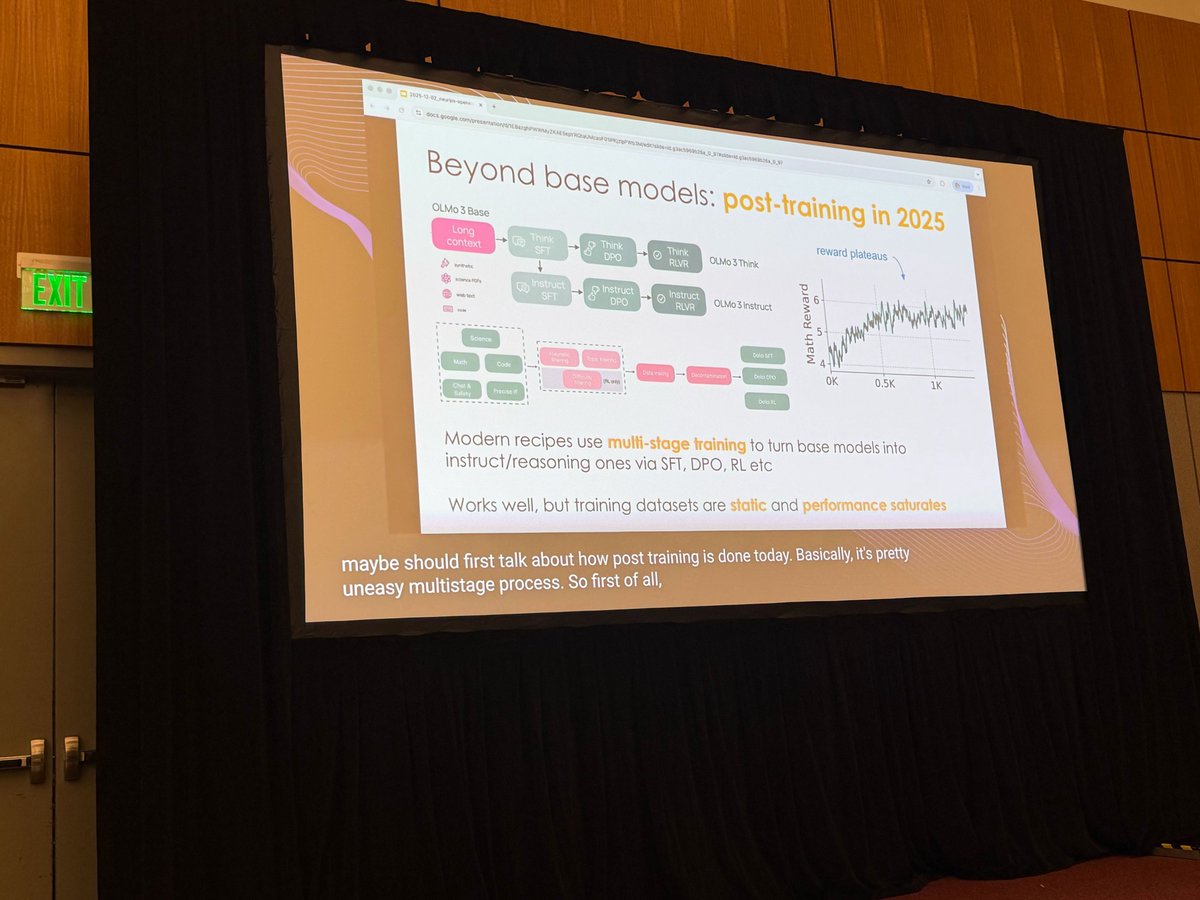

At #NeurIPS2025, @danielhanchen (@UnslothAI), Davide Testuggine (@Meta), @joespeez (Meta), and @bhutanisanyam1 (Meta) led a focused discussion on how environments are shaping the next stage of agentic AI and reinforcement learning. Their session examined why environments are…

Our latest PyTorch Foundation Spotlight features @RedHat's Joseph Groenenboom and Stephen Watt on the importance of optionality, open collaboration, and strong governance in building healthy and scalable AI ecosystems. In this Spotlight filmed during PyTorch Conference 2025,…

PyTorch Foundation is on site at NeurIPS 2025 with workshops, sessions, and community programming throughout the week. Stop by our joint booth with @CNCF and meet contributors at the Open Source AI Reception with @CloudNativeFdn, @anyscalecompute, @FeatherlessAI, @huggingface,…

Training massive Mixture-of-Experts (MoE) models like DeepSeek-V3 and Llama 4 Scout efficiently is one of the challenges in modern AI. These models push GPUs, networks, and compilers to their limits. To tackle this, AMD and Meta’s PyTorch teams joined forces to tune TorchTitan…

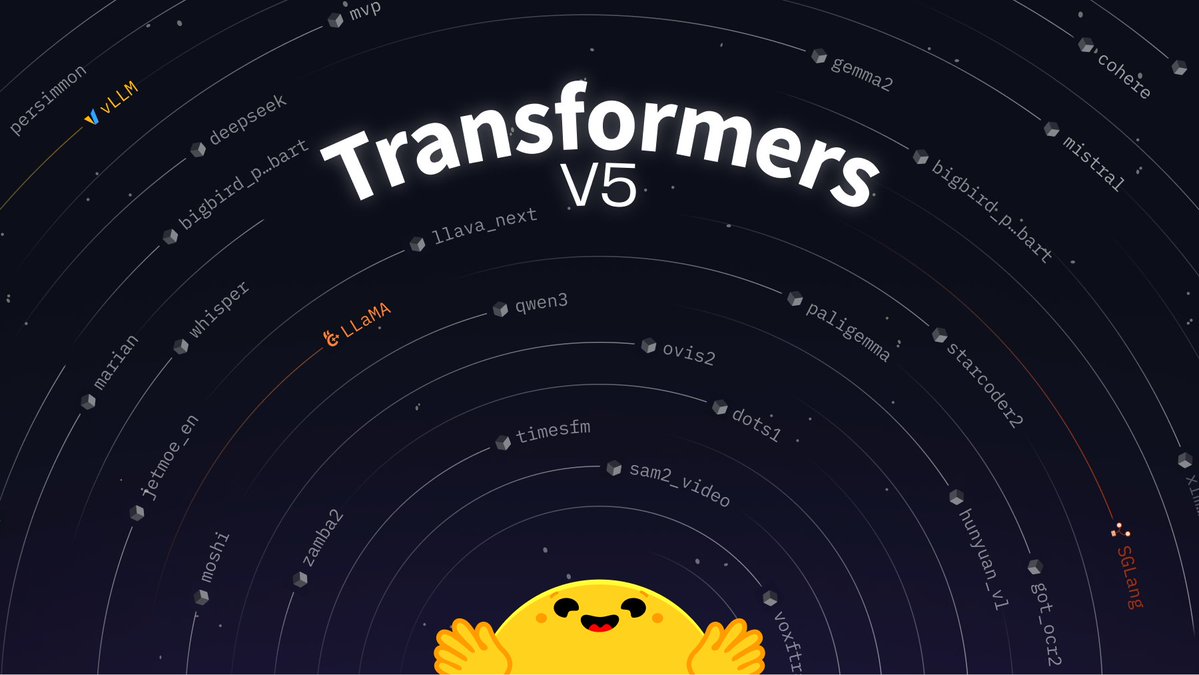

With its v5 release, Transformers is going all in on #PyTorch. Transformers acts as a source of truth and foundation for modeling across the field; we've been working with the team to ensure good performance across the stack. We're excited to continue pushing for this in the…

Transformers v5's first release candidate is out 🔥 The biggest release of my life. It's been five years since the last major (v4). From 20 architectures to 400, 20k daily downloads to 3 million. The release is huge, w/ tokenization (no slow tokenizers!), modeling & processing.

Open source is not just about code. It's also about collaboration and trust. Our latest PyTorch Foundation Spotlight features PyTorch Ambassador @Zesheng_Zong, who is working to make the community better and who recently received a 2025 PyTorch Contributor Award for…

📸 Scenes from the Future of Inferencing! PyTorch ATX, the @vllm_project community, and @RedHat brought together 90+ AI builders at Capital Factory back in September to dive into the latest in LLM inference, from quantization and PagedAttention to multi-node deployment.…
