Eugene Meidinger

@SQLGene

Data training for busy people. Power BI Consultant. Pluralsight author. He/Him. Mast: @[email protected] Bsky: @sqlgene.com

Content you might like

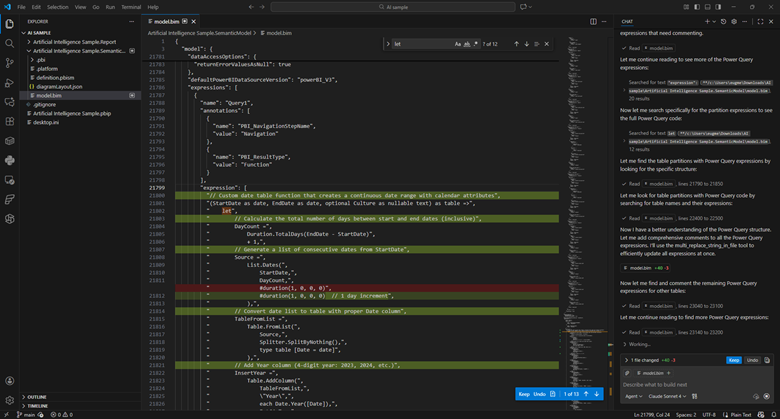

[BLOG] The Power BI modeling MCP server in plain English sqlgene.com/2025/11/19/the…

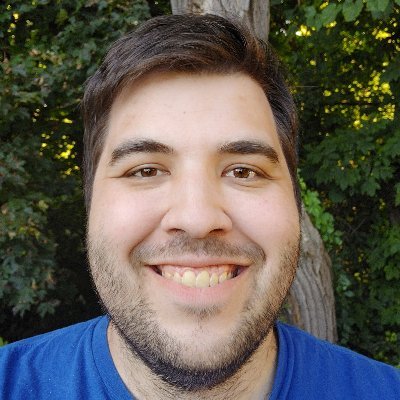

I love Substack. Always have. Their team is great. But a silent change could force me off the platform if it stays. They broke email. My paid subscribers cannot read today's paid newsletter on mobile without downloading the Substack app. @SubstackInc: roll this back. Now.

The moment Anthropic releases a visual Skill Builder UI (like a Zapier for AI skills), adoption will hockey stick

a very good defcon sticker

A lot of discussion on open-weights models seems to assume there is a clear incentive for building them. I don't see how that is the case. Unless you have no need for money (government sponsored?), there are no real ways to capture value from your model, even as model cost increases.

So let me get this straight, if I want to merge a hyper-v checkpoint, I have to delete it. But if I want to revert any changes, I have to apply it?

This is what I mean by "who builds this platform." It's as if there was no one doing product. No comms, and a big part of the platform just - woosh! - disappears. Or maybe it's there. Or who knows. x.com/ATurnblad/stat…

WTF, all my DMs are gone on X?! Who builds this platform? Case study on how to turn something that used to be trusted into something untrustworthy.

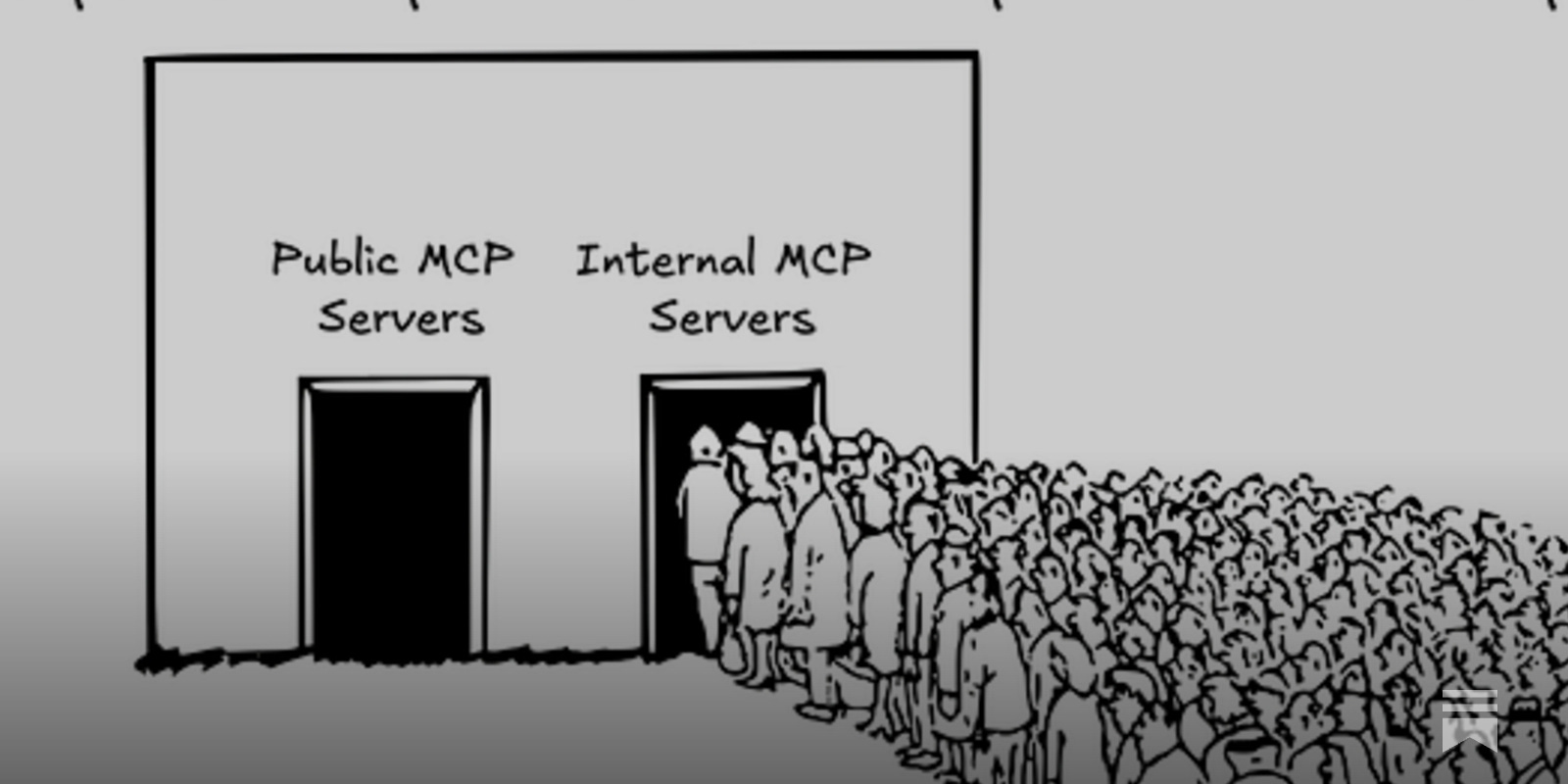

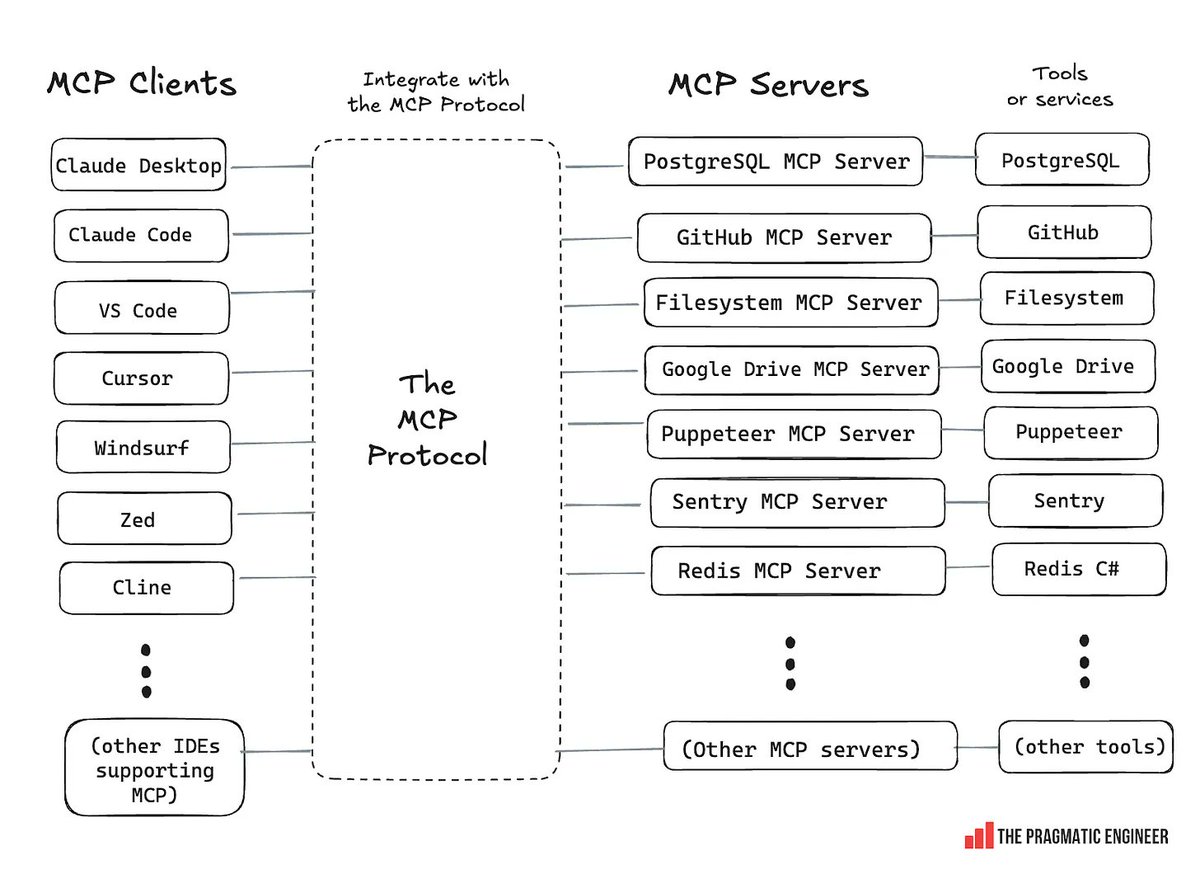

Really interesting to see MCP servers being used to support business users, similar to self-service BI newsletter.pragmaticengineer.com/p/mcp-deepdive

PSA: if you're a marketing agency selling Reddit astroturfing as a service, you are actively poisoning the communities you seek to exploit for your clients. You are wasting the mods' time cleaning up this shit. You are adding to the enshittification of the internet. You are…

LinkedIn feels really desperate trying to upsell AI products everywhere. "Rewrite with AI" at the bottom of every one of my posts (and so my LI feed is full of AI slop). Now recommending doing hiring with AI, when LI Jobs inbound is already flooded with AI applications and thus mostly…

Same day we've published our MCP deepdive in @Pragmatic_Eng (the on-the-ground realities of building and using MCP servers, the good and the bad) The deepdive: newsletter.pragmaticengineer.com/p/mcp-deepdive

Anthropic is donating the Model Context Protocol to the Agentic AI Foundation, a directed fund under the Linux Foundation. In one year, MCP has become a foundational protocol for agentic AI. Joining AAIF ensures MCP remains open and community-driven. anthropic.com/news/donating-…

I wonder why they call it --dangerously-skip-permissions

Important reminder from Reddit here of the risk you're taking when you run Claude Code with --dangerously-skip-permissions "I found the problem and it's really bad [...] rm -rf tests/ patches/ plan/ ~/ - See that ~/ at the end? That's your entire home directory."

![simonw's tweet image](https://pbs.twimg.com/media/G7vpciHbUAENYNC.jpg)

Former director of Microsoft Office:

Everyone had access to the same internet. Everyone had access to the same PCs. Every bank had access to Excel. Visiting a big investment bank customer ages ago, they told me "you know, we make more money from Excel than Microsoft does". That put me in my place.

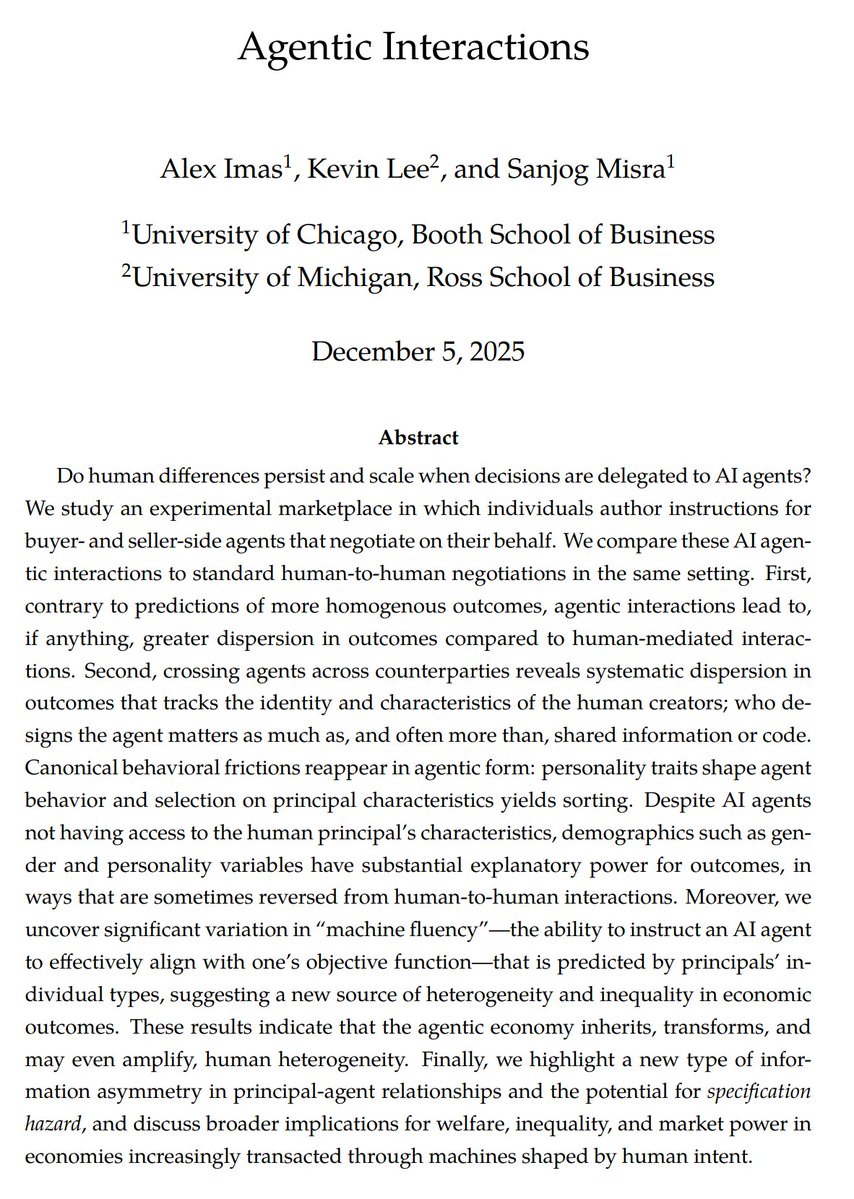

As long as prompting/context engineering/AI skill matters, agents may actually serve to increase differences in outcomes among people, rather than reduce them.

What will economic outcomes look like as transactions become delegated to AI agents? Will human differences be smoothed away, leading to more homogenous outcomes, or will they be recreated and potentially even amplified? Will AI agents mitigate inequality, or will it persist…

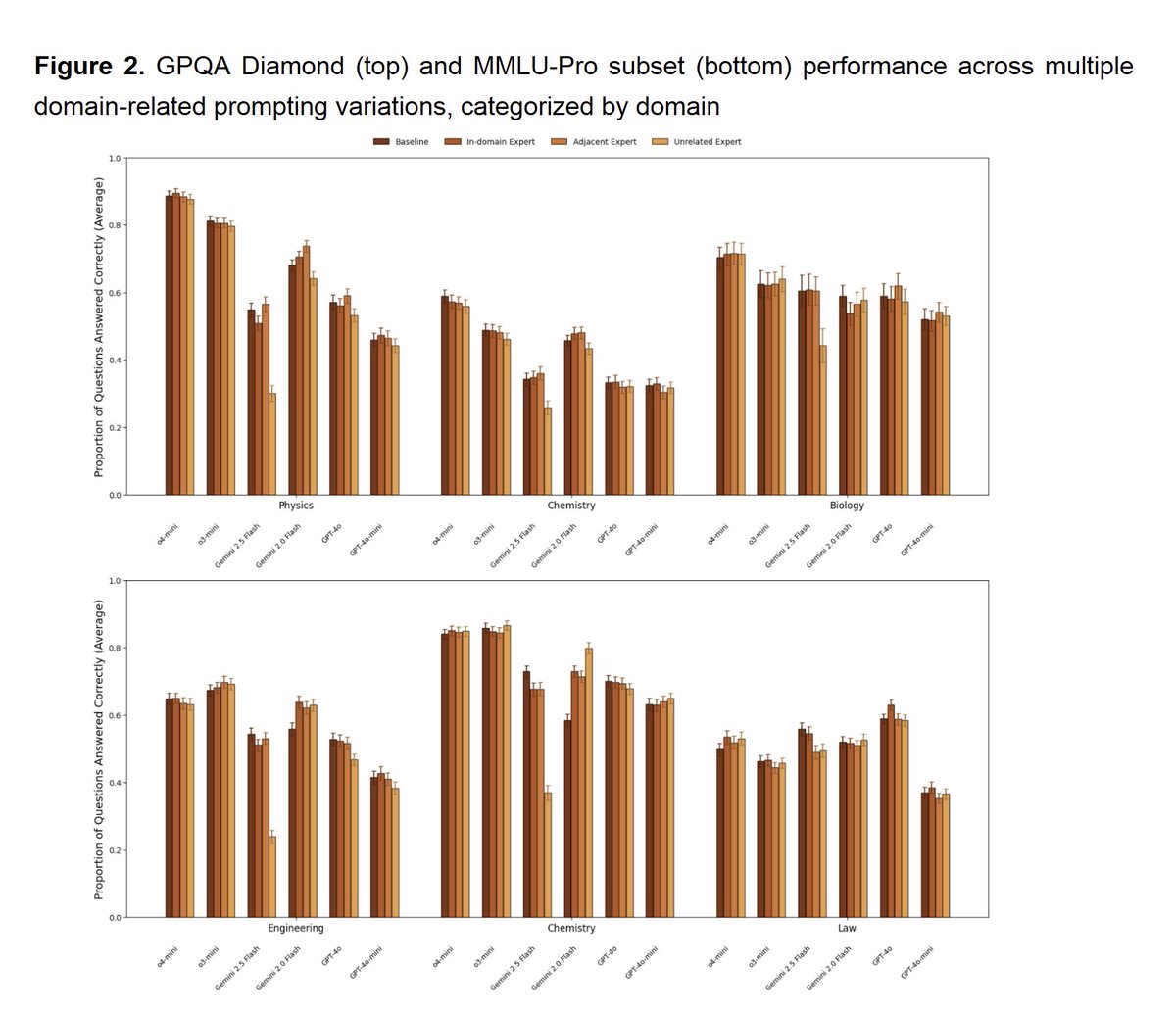

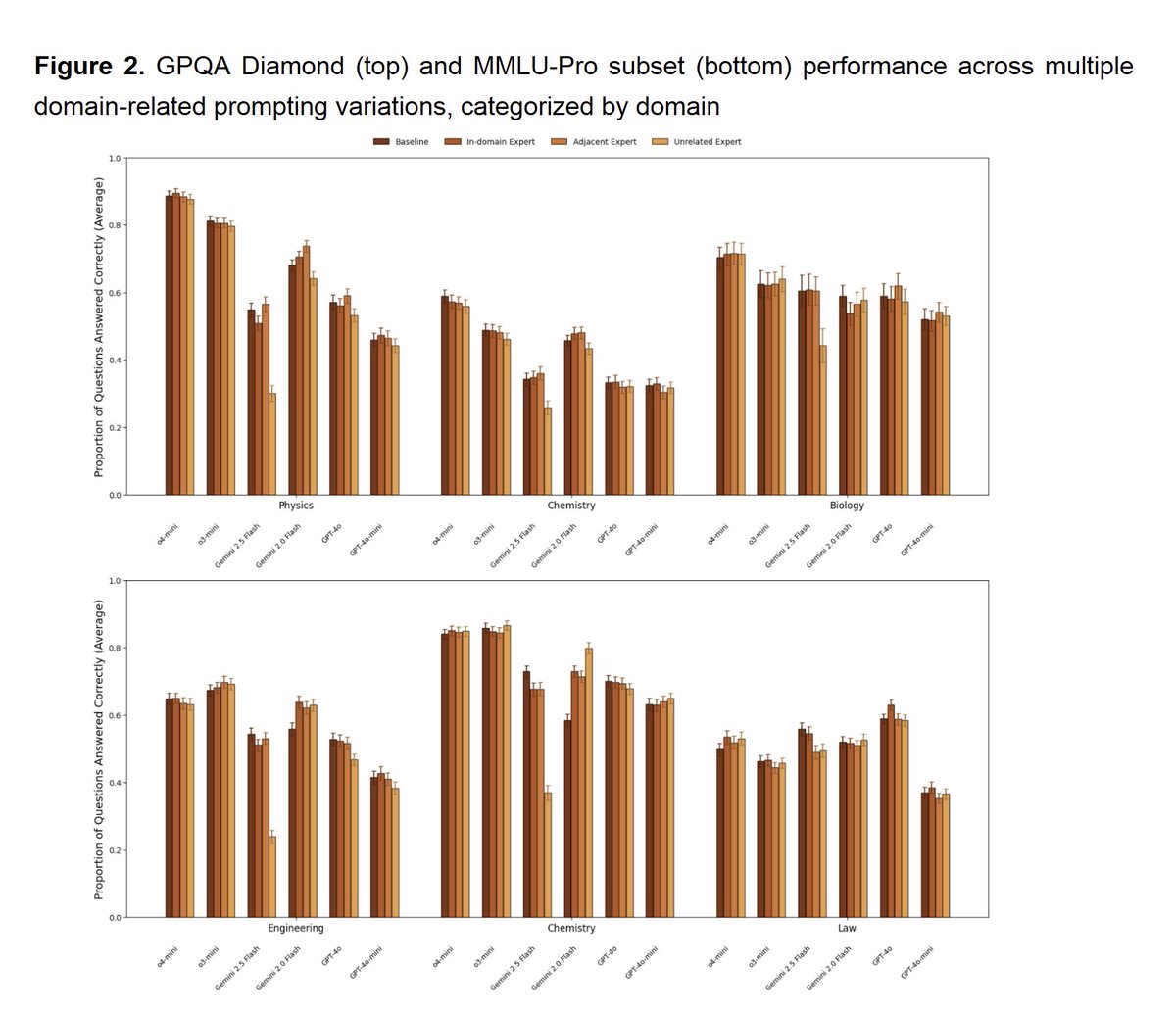

It's time to retire this idea of persona prompting - don't tell the LLM to act like X, tell it that it should answer for an audience of Y instead

We tested one of the most common prompting techniques: giving the AI a persona to make it more accurate We found that telling the AI "you are a great physicist" doesn't make it significantly more accurate at answering physics questions, nor does "you are a lawyer" make it worse.

If you are using AI images with typos, it shows a distinct lack of pride and care in your work.
