
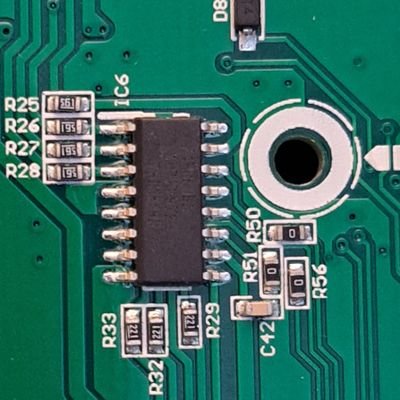

Exploring Direct Tensor Manipulation in Language Models: A Case Study in Binary-Level Model Enhancement: areu01or00.github.io/Tensor-Slayer.…

three more amazing blogs added! ft. @TensorSlay @secemp9 @MardiaArnav

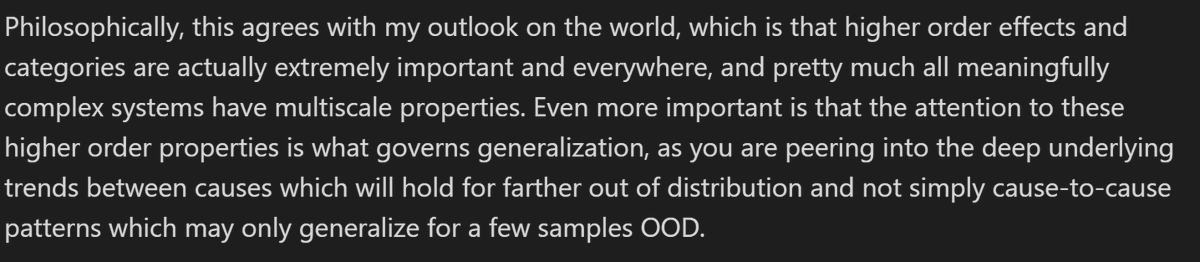

The "research taste" part of the talk was the highest signal part imo and I agree completely. This is literally exactly how I approach research and it was so validating to see @ilyasut talk about it! "The top-down belief is the thing that sustains you when the experiments…

Ilya on research taste: “One thing that guides me personally is an aesthetic of how AI should be by thinking about how people are. There's no room for ugliness. It's just beauty, simplicity, elegance, with correct inspiration from the brain. The more they are present, the more…

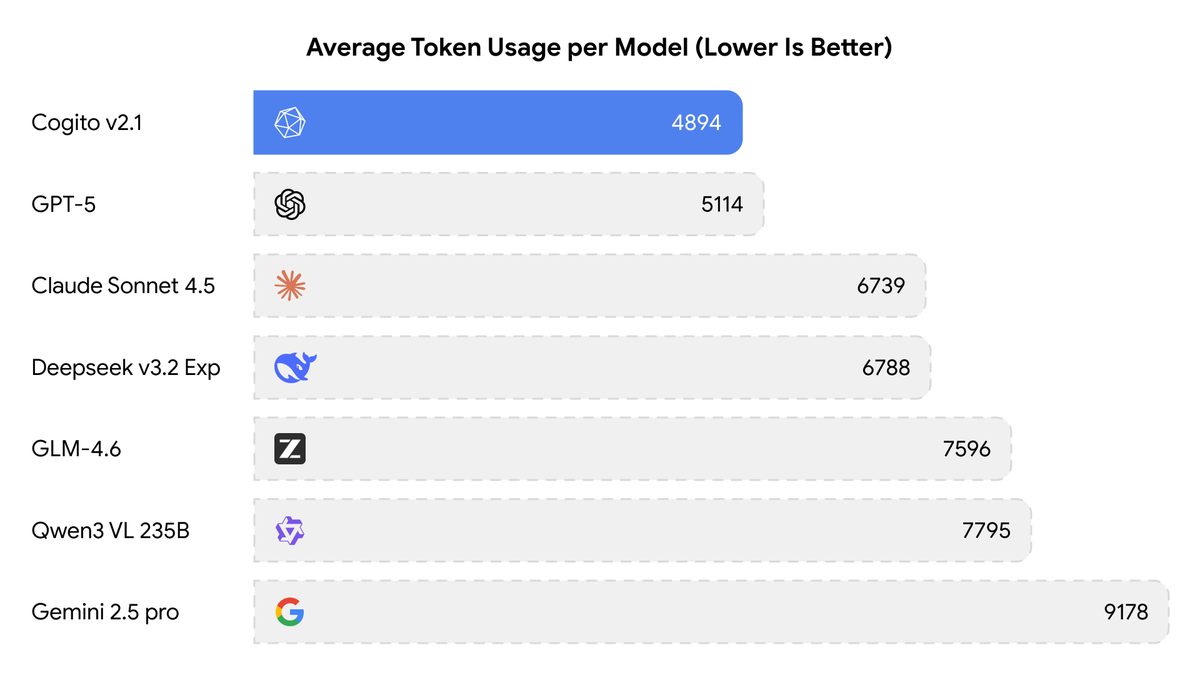

Available on OpenRouter. Context: 131K. Input: 0.20/M tokens. Output: 1.10/M tokens. Intelligence too cheap to meter, truly.

Introducing INTELLECT-3: Scaling RL to a 100B+ MoE model on our end-to-end stack Achieving state-of-the-art performance for its size across math, code and reasoning Built using the same tools we put in your hands, from environments & evals, RL frameworks, sandboxes & more

HYPED

Introducing INTELLECT-3: Scaling RL to a 100B+ MoE model on our end-to-end stack Achieving state-of-the-art performance for its size across math, code and reasoning Built using the same tools we put in your hands, from environments & evals, RL frameworks, sandboxes & more

So… how soon before Anthropic nerfs Opus 4.5? Any guesses?

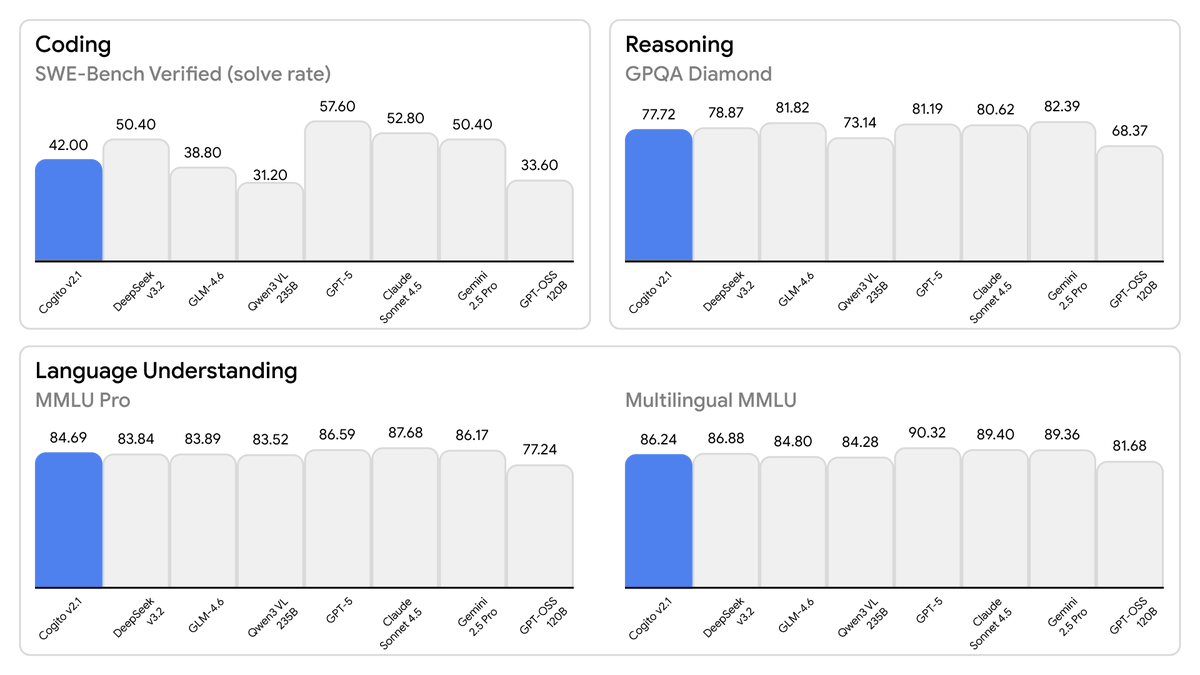

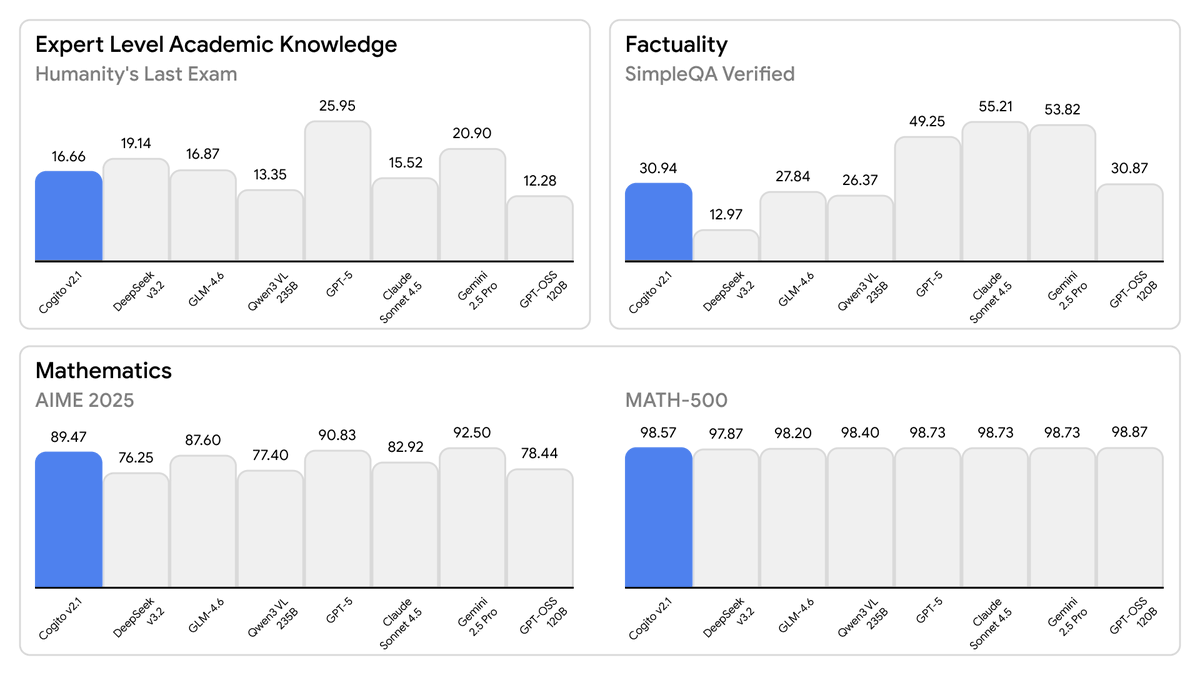

> …by US company
> base: DeepSeek

Today, we are releasing the best open-weight LLM by a US company: Cogito v2.1 671B. On most industry benchmarks and our internal evals, the model performs competitively with frontier closed and open models, while being ahead of any US open model (such as the best versions of…

🤗

Use your favourite AI coding agent to create AI frames. What if you could connect everything (your PDFs, videos, notes, code, and research) into one seamless flow that actually makes sense? AI-Frames: Open Source Knowledge-to-Action Platform: timecapsule.bubblspace.com ✨ Annotate •…

