Zhiyuan

@ZhiyuanCS

PhD student at @NUSingapore. Visiting Researcher at @MIT.


🚀Introducing Lumine, a generalist AI agent trained within Genshin Impact that can perceive, reason, and act in real time, completing hours-long missions and following diverse instructions within complex 3D open-world environments.🎮 Website: lumine-ai.org 1/6

How to determine which idea is most promising to scale up? Feedback from the chat with Sora 2 researcher: Even in big tech, you must prove a method's worth scaling up. Key hint? Under fixed compute and targeted perspectives (e.g., deep reasoning in LLMs or physical…

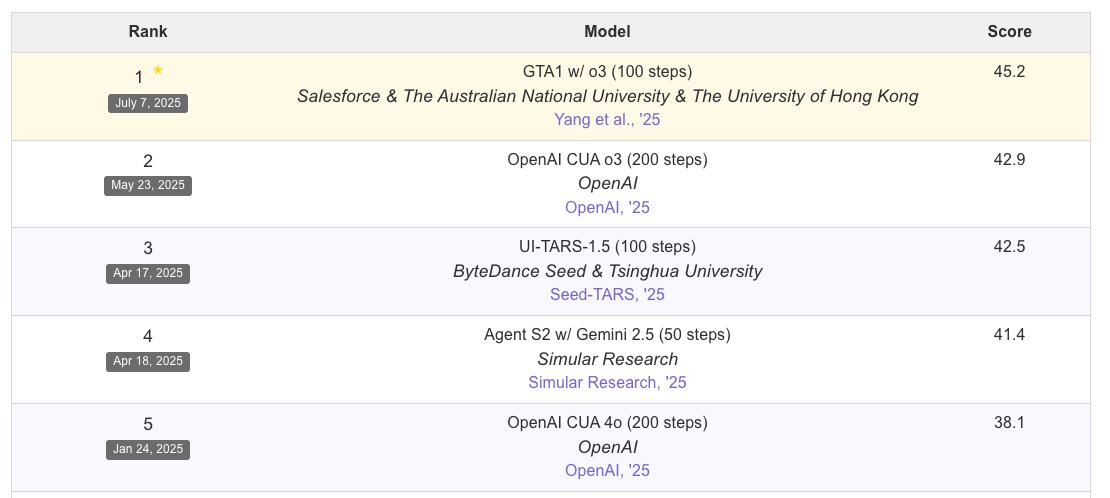

🚀Introducing GTA1 – our new GUI Agent that leads the OSWorld leaderboard with a 45.2% success rate, outperforming OpenAI's CUA! GTA1 improves two core components of GUI agents: Planning and Grounding. 🧠 Planning: A generic test-time scaling strategy that concurrently samples…

Customize your LLMs in seconds using prompts 🥳! Excited to share our latest work with @HPCAILab, @VITAGroupUT, @k_schuerholt, @YangYou1991, @mmbronstein, @damianborth: Drag-and-Drop LLMs (DnD). 2 features: tuning-free, and comparable to or even better than full-shot tuning. (🧵1/8)

🚨🚨 We reviewed around 20 papers for @ACMMM, yet our own reviews were hidden, and the papers were forced on us with no expertise match. Time to rethink AI community peer review. 🤔 Our author team was assigned nearly 20 papers with no regard for our areas of expertise, received only a single round of…

I can’t believe this jaw‑dropping comic was generated by GPT just by feeding it our paper directly🤯! It perfectly illustrates how meta‑ability training makes LRMs think better.

🚀 Beyond “aha”: toward Meta‑Abilities Alignment! With zero human annotation, LRMs master strong reasoning abilities, rather than relying on emergent “aha” moments, and generalize across math ⚙️, code 💻, science 🔬. Meta‑ability alignment lifts the ceiling of further domain‑RL: 7B → 32B…

🚀 Beyond 'aha': toward Meta‑Abilities Alignment! By self‑synthesizing training tasks & self‑verifying rewards with zero human labels, the LLM systematically masters core reasoning abilities, rather than relying on emergent 'aha' moments, and generalizes across math ⚙️, code 💻, science 🔬. Meta‑ability…

Although the ICLR main conference is coming to an end, we are excited to invite you to the Reasoning and Planning for LLMs Workshop, which will be held all day on Monday, April 28. We are honored to host an outstanding lineup of keynote speakers and panelists from Meta, OpenAI,…

Try JudgeLRM! Compare any Hugging Face language models by asking your own questions, and explore JudgeLRM’s reasoning and detailed comparisons! Demo: huggingface.co/spaces/nuojohn… Paper: huggingface.co/papers/2504.00… Model: huggingface.co/nuojohnchen/Ju… Code: github.com/NuoJohnChen/Ju… We…

🚀 Exciting news! The ICLR 2025 LLM Reasoning & Planning Workshop is offering several Student Registration Grants to support early-career researchers 💡 Free ICLR registration for in-person full-time students! Apply by March 2, 2025. More info: …shop-llm-reasoning-planning.github.io Submit…

🚀 Call for Reviewers! 🚀 Our Workshop on Reasoning and Planning for LLMs at ICLR 2025 @iclr_conf has received an overwhelming number of submissions! We are looking for reviewers to help ensure a high-quality selection process. 🔹 Max 2 papers per reviewer 🔹 Review deadline:…

We are excited to announce that our workshop will be held on April 28 in Singapore. Due to numerous requests for extensions, we have decided to extend the submission deadline by 4 days to February 6 (AoE). We look forward to receiving your submissions and can't wait to see you at…

🚀 Excited to announce our World Models: Understanding, Modelling and Scaling Workshop at #ICLR2025! 🎉 Keynote speakers, panellists, and submission guidelines are live now! Check out: 👉 sites.google.com/view/worldmode… Join us as we explore World Understanding, Sequential Modelling,…

Our poster presentation at #NeurIPS2024 will take place today from 11:00 AM to 2:00 PM in West Ballroom A-D, Poster #7004. We warmly welcome you to stop by and engage with us!

How do LLMs reason and plan given partial information with uncertainty? Can they proactively ask questions to improve decision-making? In joint work with UW, NTU, Yale and UCL, we introduce the UoT method, which boosts the information-seeking and…


