Ishan Gupta

@code_igx

25 🇮🇳, Hustler @RITtigers NY 🇺🇸 | RnD on Quantum AI, Superintelligence & Systems | Ex- @Broadcom @VMware

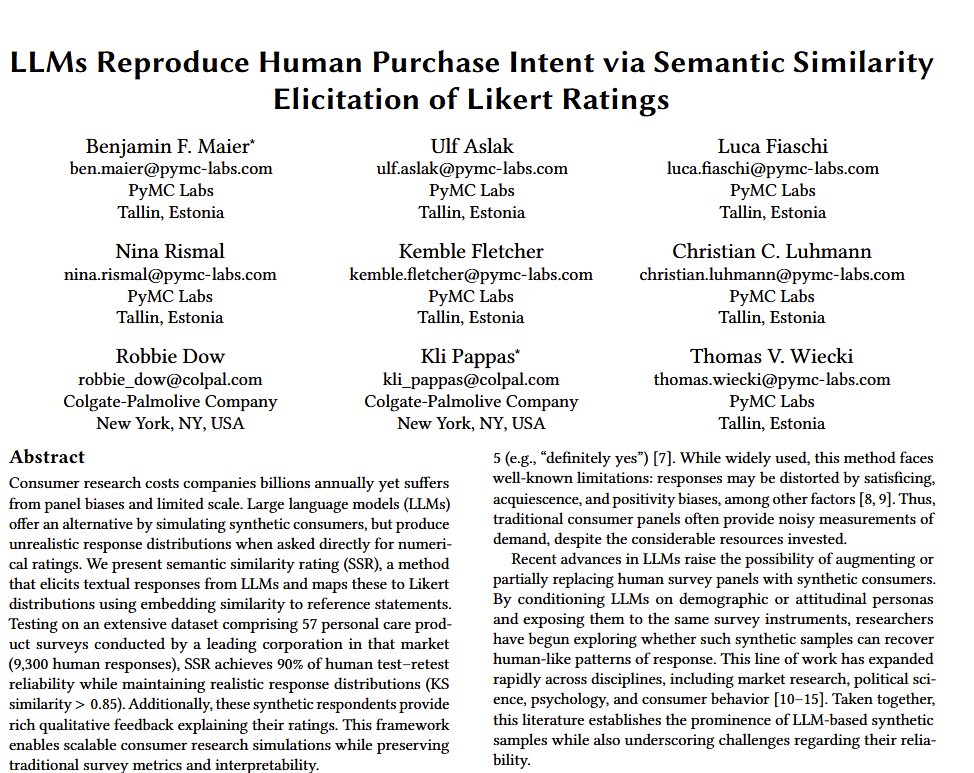

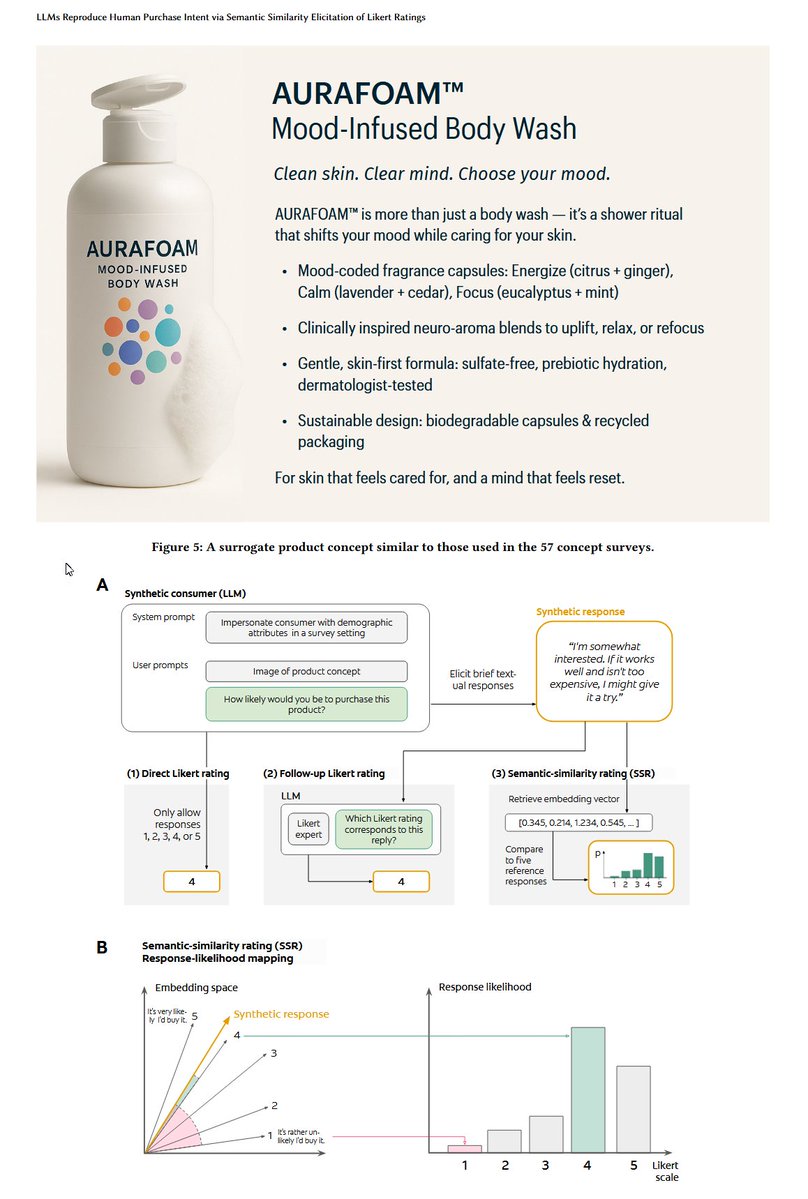

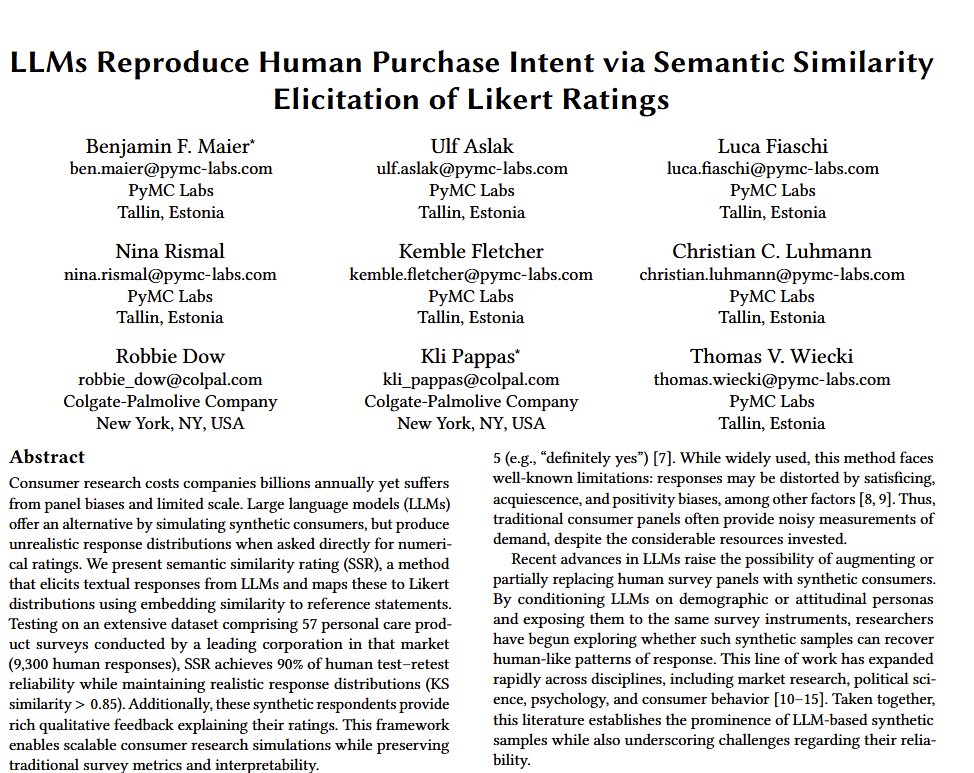

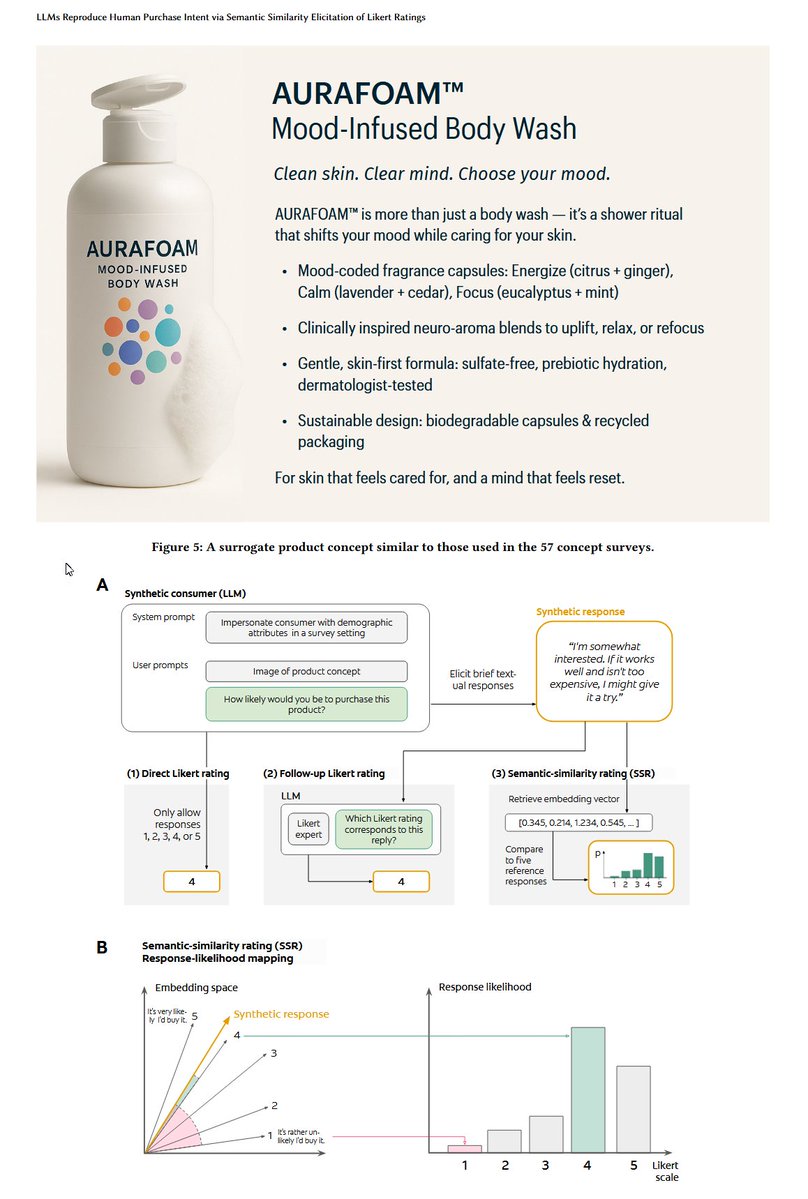

This paper shows that you can predict actual purchase intent (90% accuracy) by asking an LLM to impersonate a customer with a demographic profile, giving it a product & having it give its impressions, which another AI rates. No fine-tuning or training & beats classic ML methods.

You can just prompt things

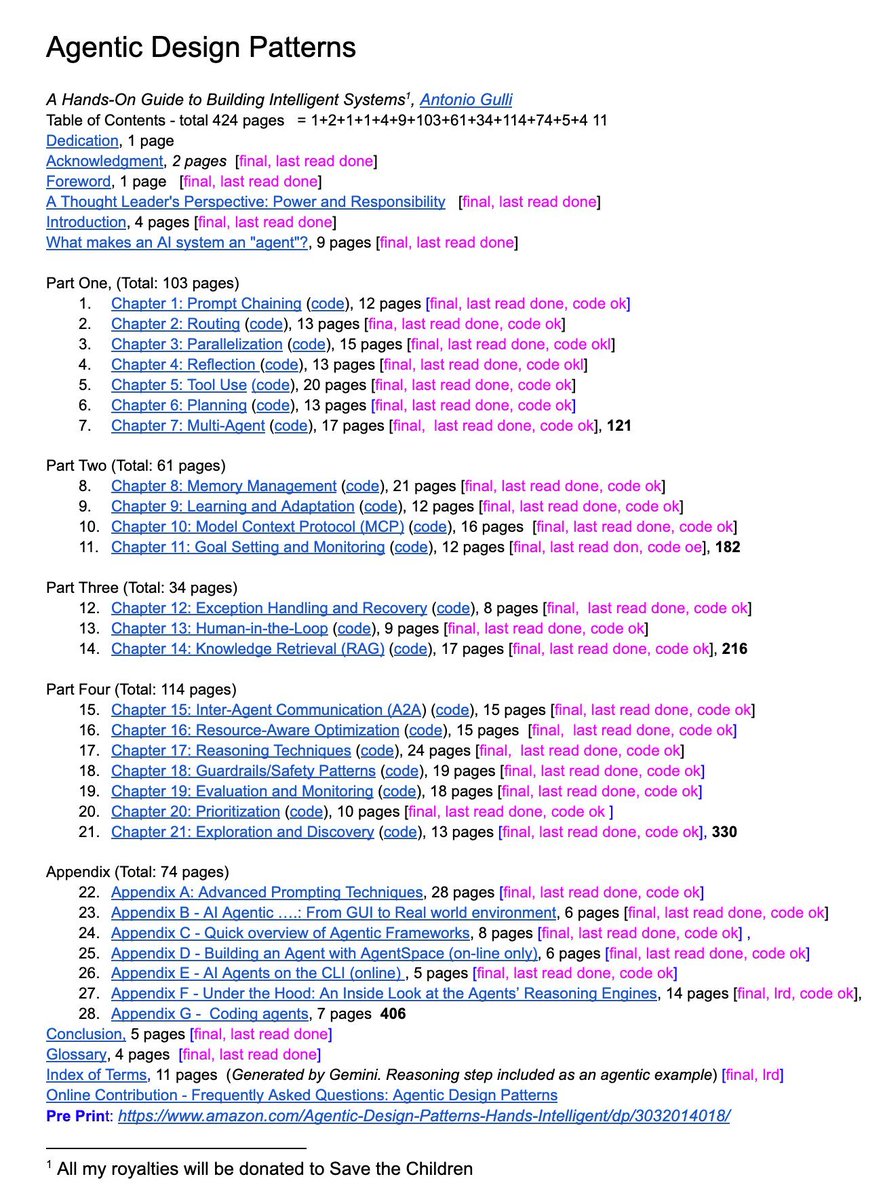

A senior Google engineer just dropped a 424-page doc called Agentic Design Patterns. Every chapter is code-backed and covers the frontier of AI systems: → Prompt chaining, routing, memory → MCP & multi-agent coordination → Guardrails, reasoning, planning This isn’t a blog…

Did Stanford just kill LLM fine-tuning? This new paper from Stanford, called Agentic Context Engineering (ACE), proves something wild: you can make models smarter without changing a single weight. Here's how it works: Instead of retraining the model, ACE evolves the context…

Great recap of security risks associated with LLM-based agents. The literature keeps growing, but these are key papers worth reading. Analysis of 150+ papers finds that there is a shift from monolithic to planner-executor and multi-agent architectures. Multi-agent security is…

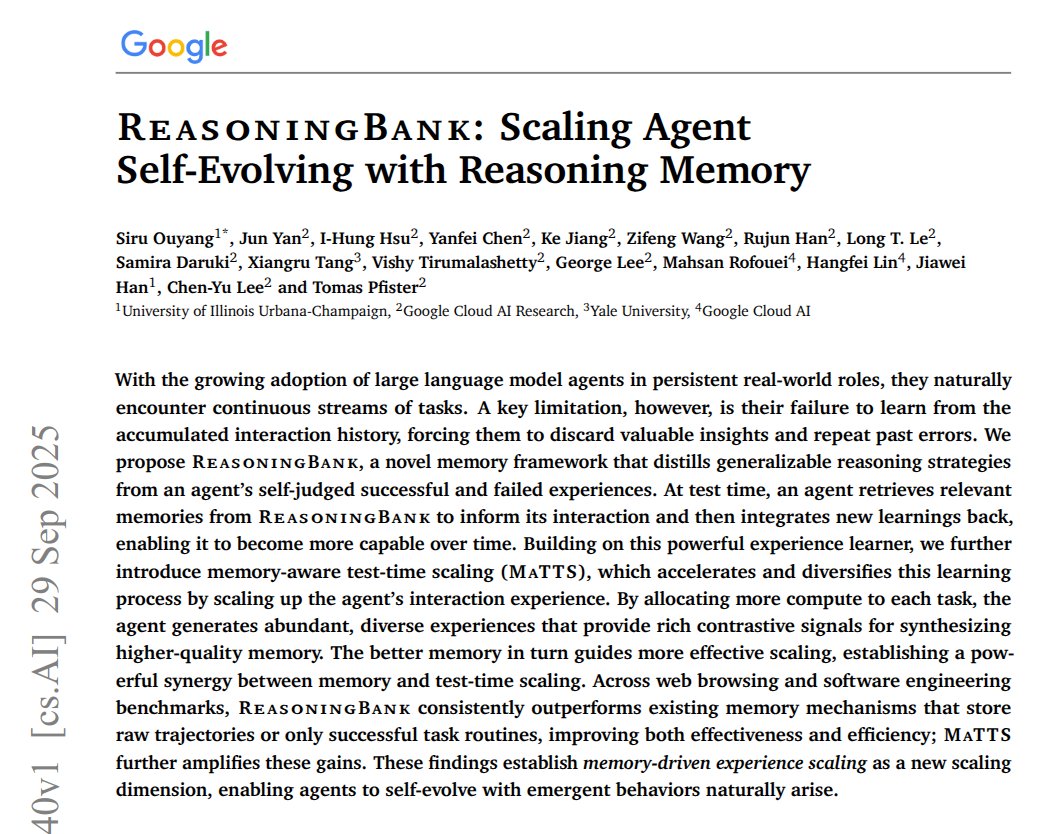

Holy shit...Google just built an AI that learns from its own mistakes in real time. New paper dropped on ReasoningBank. The idea is pretty simple but nobody's done it this way before. Instead of just saving chat history or raw logs, it pulls out the actual reasoning patterns,…

New paper from @Google is a major memory breakthrough for AI agents. ReasoningBank helps an AI agent improve during use by learning from its wins and mistakes. To succeed in real-world settings, LLM agents must stop making the same mistakes. ReasoningBank memory framework…

What the fuck just happened 🤯 Stanford just made fine-tuning irrelevant with a single paper. It’s called Agentic Context Engineering (ACE) and it proves you can make models smarter without touching a single weight. Instead of retraining, ACE evolves the context itself. The…

My brain broke when I read this paper. A tiny 7-million-parameter model just beat DeepSeek-R1, Gemini 2.5 Pro, and o3-mini at reasoning on both ARC-AGI 1 and ARC-AGI 2. It's called Tiny Recursive Model (TRM) from Samsung. How can a model 10,000x smaller be smarter? Here's how…

In the near future, your Tesla will drop you off at the store entrance and then go find a parking spot. When you’re ready to exit the store, just tap Summon on your phone and the car will come to you.

FSD V14.1 Spends 20 Minutes Looking For Parking Spot at Costco. This video is sped up 35x once we start hunting for a spot, and during that time the car pulls off some really intelligent moves while searching. We did not once pass any empty available spots; the only issue is we didn't…

Google did it again! First, they launched ADK, a fully open-source framework to build, orchestrate, evaluate, and deploy production-grade agentic systems. And now, they have made it even more powerful! Google ADK is now fully compatible with all three major AI protocols out there:…

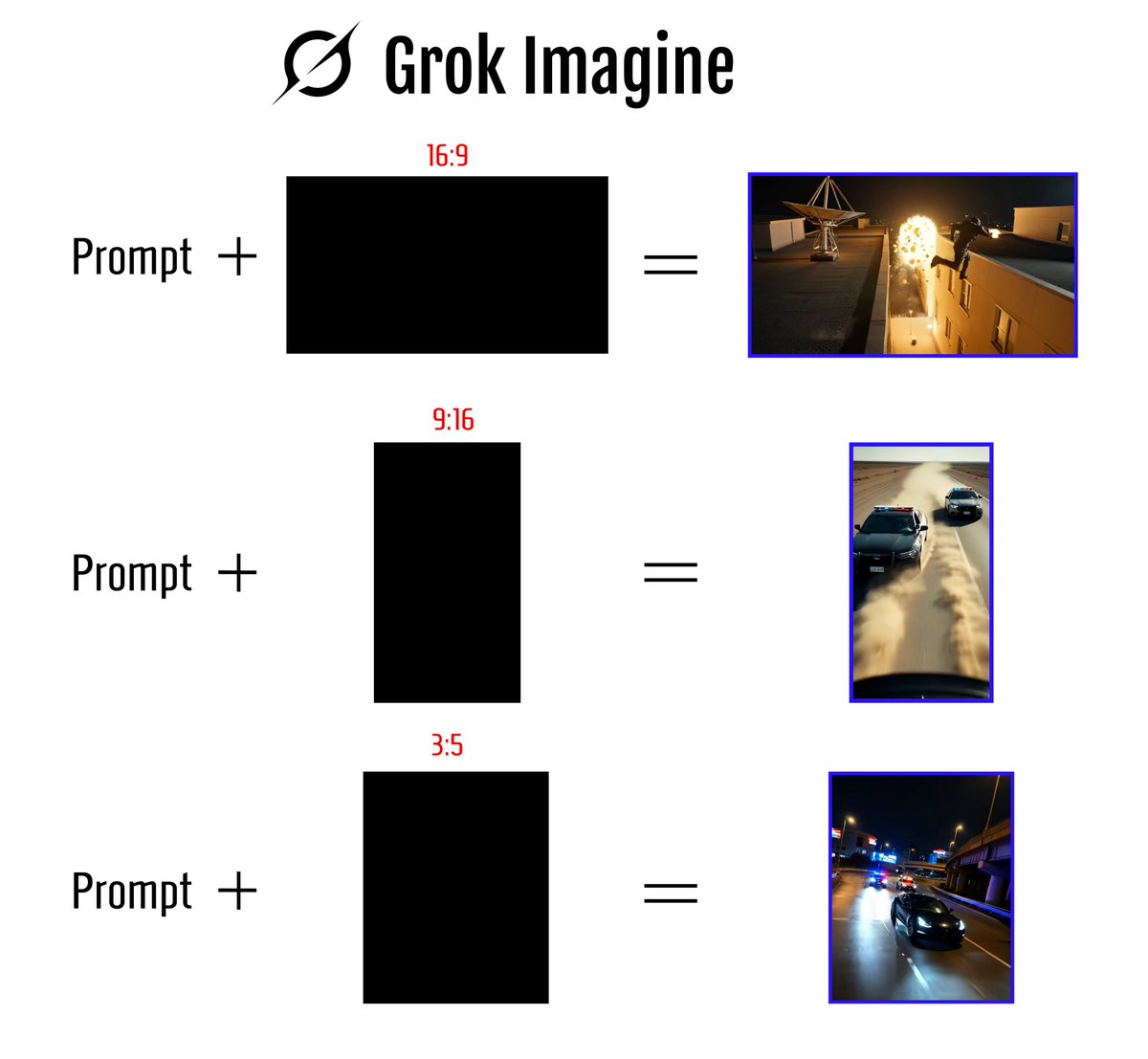

You can instantly generate Grok Imagine videos using any simple dark image, skipping the need for a custom image for video. Just pick a dark image with your preferred aspect ratio, type your prompt, and you’re set. It works amazingly well... yes, this is my cool recipe with all videos…

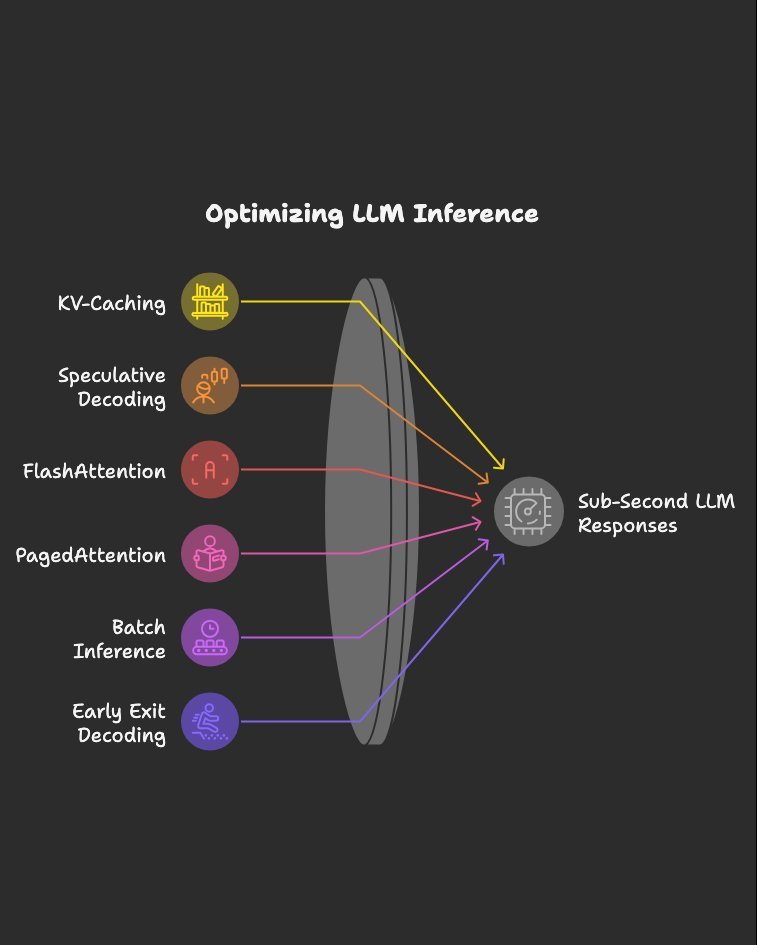

Inference optimizations I’d study if I wanted sub-second LLM responses. Bookmark this.

1. KV-Caching
2. Speculative Decoding
3. FlashAttention
4. PagedAttention
5. Batch Inference
6. Early Exit Decoding
7. Parallel Decoding
8. Mixed Precision Inference
9. Quantized Kernels
10. Tensor…

Absolutely classic @GoogleResearch paper on In-Context-Learning by LLMs. Shows the mechanisms of how LLMs learn in context from examples in the prompt, can pick up new patterns while answering, yet their stored weights never change. 💡The mechanism they reveal for…

Earth’s gravity is strong enough to make reaching Mars extremely hard, but not impossible

Why Super-Earthlings Might Never Reach the Stars: In the rocket equation, the fuel required to reach orbit grows exponentially with gravity. If Earth’s gravity were 15% stronger, space programs would likely be impossible.
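
The exponential dependence mentioned above can be made explicit with the Tsiolkovsky rocket equation. This is a rough sketch, not taken from the post: it assumes an idealized single-stage rocket, ignores drag and gravity losses, and assumes planets of equal mean density $\rho$ (so radius $R$ scales with surface gravity $g$). The symbols $m_0$ (initial mass), $m_f$ (final mass), and $v_e$ (exhaust velocity) are introduced here for illustration.

```latex
\begin{align}
  \Delta v &= v_e \ln\frac{m_0}{m_f}
  \quad\Longrightarrow\quad
  \frac{m_0}{m_f} = \exp\!\left(\frac{\Delta v}{v_e}\right), \\
  v_{\text{orbit}} &\approx \sqrt{gR}, \qquad
  g = \tfrac{4}{3}\pi G \rho R
  \;\Longrightarrow\;
  v_{\text{orbit}} \approx \frac{g}{\sqrt{\tfrac{4}{3}\pi G \rho}}, \\
  \frac{m_0}{m_f} &\approx \exp\!\left(\frac{g}{v_e\sqrt{\tfrac{4}{3}\pi G \rho}}\right).
\end{align}
```

Under these assumptions the required mass ratio grows exponentially in $g$, which is the sense in which a modest increase in surface gravity makes reaching orbit disproportionately harder.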

You can teach a Transformer to execute a simple algorithm if you provide the exact step by step algorithm during training via CoT tokens. This is interesting, but the point of machine learning should be to *find* the algorithm during training, from input/output pairs only -- not…

A beautiful paper from MIT+Harvard+ @GoogleDeepMind 👏 Explains why Transformers fail at multi-digit multiplication and shows a simple bias that fixes it. The researchers trained two small Transformer models on 4-digit-by-4-digit multiplication. One used a special training method…

Temperature in LLMs, clearly explained! Temperature is a key sampling parameter in LLM inference. Today I'll show you what it means and how it actually works. Let's start by prompting OpenAI GPT-3.5 with a low temperature value twice. We observe that it produces identical…
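
As a rough illustration of what a temperature parameter does, here is a minimal sketch of the common formulation: logits are divided by the temperature before the softmax, so low values sharpen the distribution and high values flatten it. The function names and the example logits are hypothetical, and this is not the OpenAI API or the thread's own code.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw logits to probabilities after scaling by 1/temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max for numerical stability; the result is unchanged.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature, rng):
    """Sample one token index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


# Hypothetical logits for a three-token vocabulary.
example_logits = [2.0, 1.0, 0.1]
cold = softmax_with_temperature(example_logits, 0.01)  # nearly deterministic
warm = softmax_with_temperature(example_logits, 1.0)   # plain softmax
hot = softmax_with_temperature(example_logits, 10.0)   # close to uniform
```

With a very low temperature almost all probability mass sits on the highest-logit token, so repeated sampling tends to return the same token; with a high temperature the choices spread out across the vocabulary.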
