Data Engines

@dataengines

Working on making the world safer for robots and humans. Holders of the world's 1st patent (2010) on distribution-free evaluation of noisy judges.


Listen to Professor Mike Wooldridge (@wooldridgemike) on the @newscientist discussing how anxieties around AI distract us from the more immediate risks that the technology poses such as algorithmic bias & fake news. Listen here: institutions.newscientist.com/video/2422044-… #compscioxford #OxfordAI

This Black History Month, we celebrate Deborah Raji (@rajiinio), a cognitive scientist, AI researcher and Mozilla Fellow who collaborated with our founder @jovialjoy at the MIT Media Lab and AJL to audit commercial facial recognition technologies from Microsoft, Amazon, IBM, and…

TODAY: Our Director of Responsible AI Practice @ccansu will discuss #ResponsibleAI, defining goals for #AI projects and how to find expert #AIguidance with @eric_kavanagh on Inside Analysis at 3 p.m. EDT. Register for free! bit.ly/3UDESTe #RAI #AIwebinar

As our celebration of Black History Month continues, we're shining a light on @timnitGebru, the co-founder of @black_in_ai and the founder and executive director of the Distributed Artificial Intelligence Research Institute (DAIR). Her groundbreaking research on algorithmic…

"The moment he told them he's going to join us, they quadrupled his offer" - Perplexity CEO @AravSrinivas on recruiting from Google (k, here's the video)

'US says leading #AI companies join safety consortium to address risks' hpe.to/6019Vhpr7

FTC’s rule update targets deepfake threats to consumer safety dlvr.it/T2qPKQ #Technology #Law #UnitedStates #Deepfake #AI

GitHub: AI helps developers write safer code, but basic safety is crucial dlvr.it/T2qRrT

Ready to take your AI safety research to the next level? UK & Canadian researchers can apply for an exchange programme. Here are the details: 💷 £3,500 grant for logistical fees 🎓 open to PhD & post-doctoral students 📅 applications close 26 March. Apply now: mitacs.ca/our-programs/g…

The Monster group, also known as the Fischer-Griess Monster, is a very large structure in mathematics, particularly in group theory. It stands as the largest of the 26 sporadic finite simple groups, boasting around 8.08 x 10⁵³ elements. Discovered through the collaborative…

leading to research that cuts corners, lacks validity, fails to serve or even harms impacted communities, and generates data but not knowledge. We urge human subjects researchers to engage with and build on best practices for participatory research (e.g., arxiv.org/abs/2209.07572). 8/10

New paper from my group: "Using Counterfactual Tasks to Evaluate the Generality of Analogical Reasoning in Large Language Models" arxiv.org/abs/2402.08955 Thread below 🧵 (1/6)

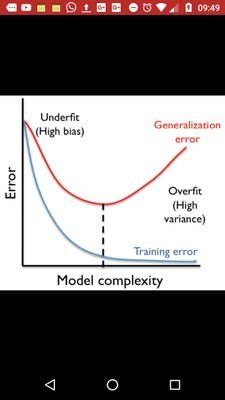

The latest release (v0.1.5) of the ntqr Python package is out - building out the logic of evaluation in unsupervised settings so we can have provably safe evaluations of noisy agents when we give them tests for which we have no answer keys! ntqr.readthedocs.org/en/latest

Join @ccansu tomorrow, Feb. 15, 2024 at 6 p.m. EDT for her talk “Integrating Ethics into AI Innovation” as part of the @NUPoliSci and @NU_PolicySchool's Spring 2024 “Security and Resilience Speaker Series.” Register to attend for free: bit.ly/3whqCWh

calendar.northeastern.edu

Security and Resilience Speaker Series: Spring 2024

The Department of Political Science and the School of Public Policy and Urban Affairs are excited to announce the speakers for the Spring 2024 Security and Resilience Speaker Series. All events will...

Any intelligent being, whether human or robotic, would benefit from understanding the logic of evaluation in unsupervised settings to protect itself from its own mistakes. Check out how we are building it: ntqr.readthedocs.org/en/latest

🎥 Watch the full recording and continue the discussion: youtube.com/watch?v=M2nzXC… 🔗 Visit the website: alignment-workshop.com/nola-talks/ada… 🚀 Join us in building AI that's trustworthy and beneficial for all! Explore career opportunities at far.ai/jobs/

far.ai

Careers – FAR.AI

Join us to help ensure advanced AI systems are safe and beneficial.

If you believe, like @steveom and @tegmark , that we should have provably safe AI, check out the logic of evaluation in unsupervised settings that we have been building since 2010 with our first patent. ntqr.readthedocs.org/en/latest

The future is already here. We have been building it since 2010 with our first patent for unsupervised evaluation. ntqr.readthedocs.org/en/latest

How might superintelligent AI be prevented from catastrophically dangerous actions? By using tamperproof hardware that demands mathematical proof of safety to protect key infrastructure vulnerabilities? @steveom in the latest @LondonFuturists podcast londonfuturists.buzzsprout.com/2028982/144917…

londonfuturists.buzzsprout.com

Provably safe AGI, with Steve Omohundro - London Futurists

GenAI brings new challenges, like deepfakes and misinformation threats. @ActiveFence is at the forefront, proactively leading the way in #AI #safety solutions. Check out our latest @TechCrunch article with @GroveVentures. techcrunch.com/2024/02/10/saf…

CALL FOR PAPERS: Here it is, the call for papers for early career scholars to speak at The Lyceum Project - our AI Ethics with Aristotle conference taking place on June 20th, 2024 in Athens, Greece. Deadline for submissions is April 30th. Apply now! oxford-aiethics.ox.ac.uk/lyceum-project…


