Raju Penmatsa

@iam_rajuptvs

ML Research Engineer @ Hitachi R&D || life long learner || ΠΑΘΕΙ ΜΑΘΟΣ


It's such an honor, thanks a lot. #Intel Hackathon has been a really life-changing experience.

so kicked off a baseline training run! currently using 8xL40s, so not the fastest (no NVLink for intra-node). Let's see how it goes. Currently seeing ~13.1% MFU.

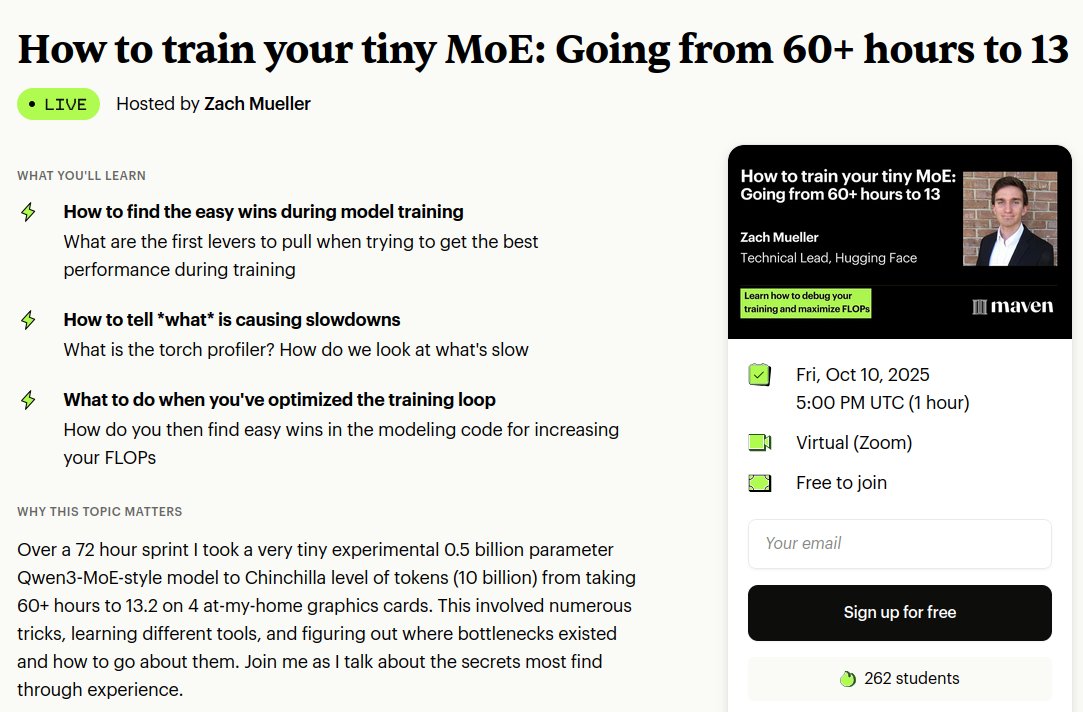

Thank you so much!! It just feels like this came at the right time; I was just starting to think of doing some pretraining runs after @TheZachMueller's scratch-to-scale course (inspired by Zach's optimization of MoE). Will probably be on this after work today! Super pumped!

So now that K-Scale Labs has shipped a whole two humanoids in the USA, they are now the #1 USA consumer humanoid Company by units shipped. Incredible.

Excited to release new repo: nanochat! (it's among the most unhinged I've written). Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,…

I run on one crazy intuition; I can learn anything, I will do it. Even if I have no idea

inspiring stuff!!

btw i’m hiring engineers in nyc (and offering relocation for those beyond) if you want to make powerful technology accessible, if you want to enable more people to bet on themselves, if you admire alexander, engelbart, kay, or papert, if these ideas👇 get you amped, let’s talk

i handed in my resignation letter last friday and decided to leave big tech. a lot of you know me as the Amazon MLE guy, but i've decided that right now is not the time to be in big tech, given that i'm still young and have so much more that i want to learn and build. big tech is…

I spent a day optimizing a small MoE (0.5B param Qwen3 style) training loop as far as it could go, so you don't have to. 61+ hours to Chinchilla down to ~13. Come learn my tricks (oh btw it's free)

At the beginning, it doesn’t matter if your volume of daily work is too small to achieve your long-term goals in the timeframe you want. Eventually, as you build up a habit and your mind and body adapt to whatever it is you’re doing, it will feel easier to ramp up the volume of…

You think the reason why you aren't locked in, making fast progress towards your goals, is because you lack the mental toughness to push yourself that hard. But you're wrong. That's not your problem. The reason why the ball isn't rolling fast is that you refuse to begin getting…

You can change your entire bloodline the moment you realize: what you do next always matters more than what you did last.

"I will do it when I have more time" is how years disappear. Do it now.

love is attention: the transformer taught us something profound: attention is all you need. but maybe we knew this all along, just in a different language. when an LLM processes text, it doesn't read sequentially like we intuitively think. it attends – it looks at every token…

how does this have only 574 views

Early career advice: Work harder than you think you can. First, because the more you work, the more context you get, the faster you catch up to speed. Second, because the harder you work the more you increase your capacity to work hard. Just like in cross country, the more miles…
