Luke Muehlhauser

@lukeprog

Open Philanthropy Program Director, AI Governance and Policy


Reminder that the nonprofit AI safety field (or "industrial complex" lol) is massively, massively outgunned by, well… The Actual AI Industry. archive.is/DFvLR

🌱⚠️ weeds-ey but important milestone ⚠️🌱 This is a first concrete example of the kind of analysis, reporting, and accountability that we’re aiming for as part of our Responsible Scaling Policy commitments on misalignment.

Yesterday my wife and I stumbled across a literal $100 bill abandoned on the sidewalk. Checkmate, economists!

“If you’re going to work on export controls, make sure your boss is prepared to have your back,” one staffer told me. For months, I’ve heard about widespread fear among think tank researchers who publish work against NVIDIA’s interests. Here’s what I’ve learned:🧵

I thought this post raised a bunch of interesting points/questions I wanted to respond to. Also, I would be open to chatting about any of this @sriramk if you ever want to - I’m proud of our work in this space and happy to answer questions on it! Before I dive in I wanted to…

On AI safety lobbying: Fascinating to see the reaction on X to @DavidSacks post yesterday especially from the AI safety/EA community. Think a few things are going on (a) the EA/ AI safety / "doomer" lobby was natural allies with the left and now find themselves out of power.…

Great 🧵 about the power of far-sighted and risk-taking philanthropists! Thanks to @open_phil for your early and consistent support. @cayimby could not have achieved these victories without you!

We've been funding @CAYIMBY, which championed this work, since its early days, and it's been fun to look back at how far they've come. After a prior incarnation of SB 79 failed in 2018, we renewed our funding and I wrote in our renewal template:

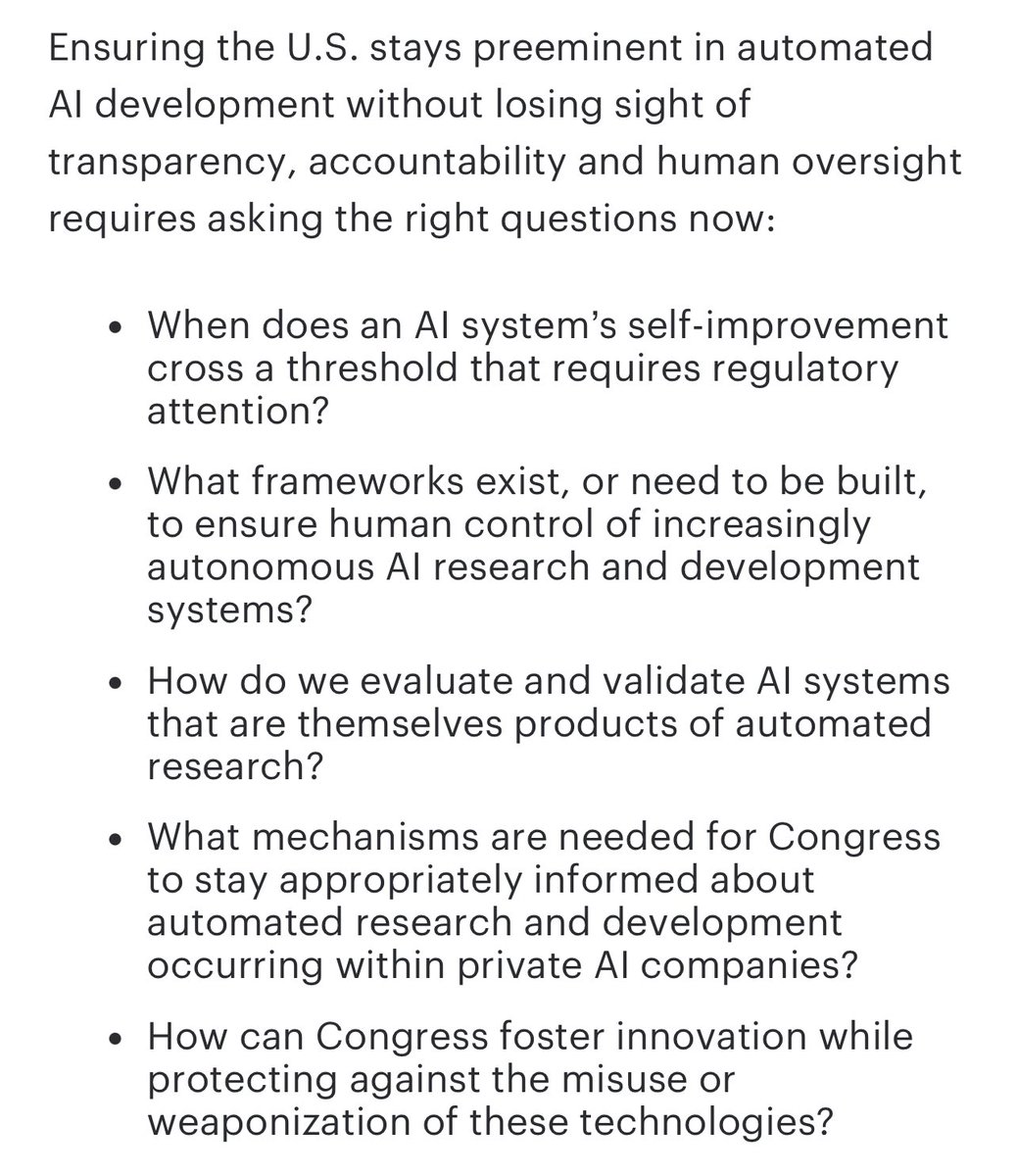

These are exactly the right sorts of questions to be asking about automated AI R&D. Props to @RepNateMoran for improving the conversation within Congress!

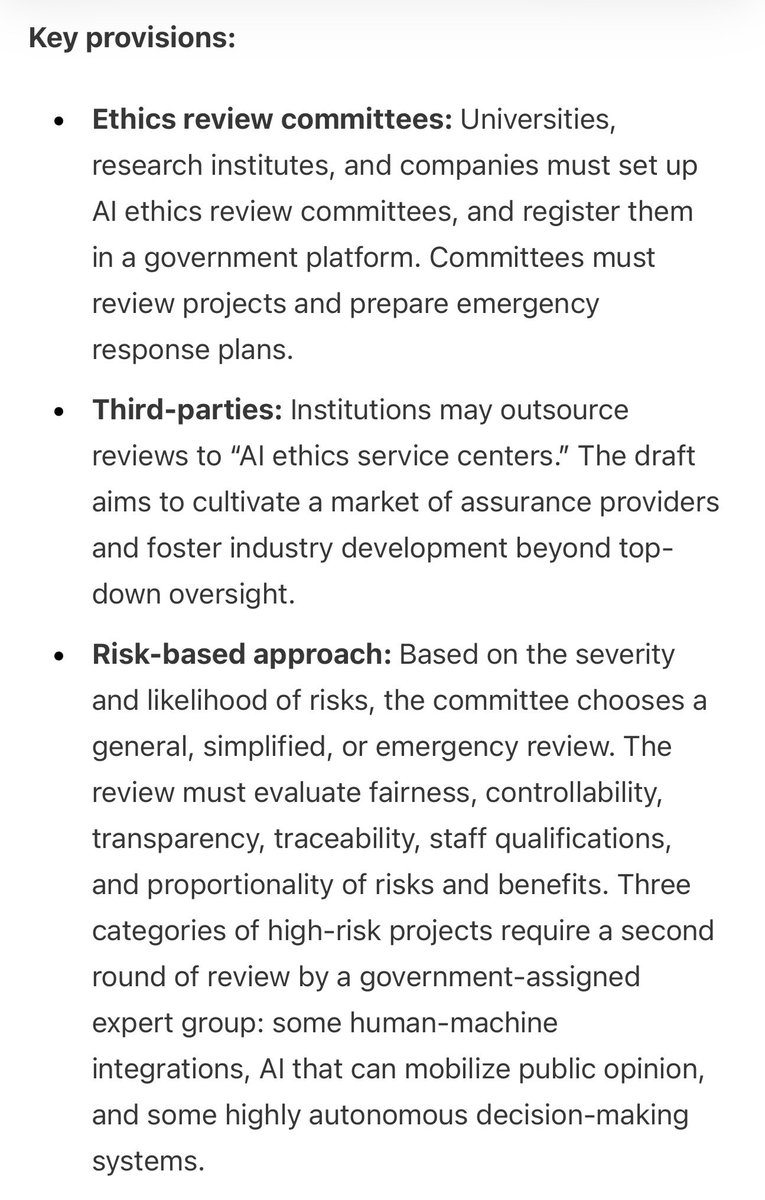

A lot of people seem to implicitly assume that China is going with an entirely libertarian approach to AI regulation, which would be weird given that they are an authoritarian country. Does this look like a libertarian AI policy regime to you?

Now's the time for other funders to get involved in AI safety and security: -AI advances have created more great opportunities -Recent years show progress is possible -Policy needs diverse funding; other funders can beat Good Ventures' marginal $ by 2-5x🧵 x.com/albrgr/status/…

Since 2015, seven years before the launch of ChatGPT, @open_phil has been funding efforts to address potential catastrophic risks from AI. In a new blog post, @emilyoehlsen and I discuss our history in the area and explain our current strategy. x.com/albrgr/status/…

1/3 Our Biosecurity and Pandemic Preparedness team is hiring, and is also looking for promising new grantees! The team focuses on reducing catastrophic and existential risks from biology — work that we think is substantially neglected.

Many AI policy decisions are complicated. "Don't ban self-driving cars" is really not. Good new piece from @KelseyTuoc, with a lede that pulls no punches:

Some people think @open_phil are luddites because we work on AGI safety, and others think we’re techno-utopians because we work on abundance and scientific progress. We’re neither. Here's why we think safety and accelerating progress go hand in hand, in spite of the tensions:🧵

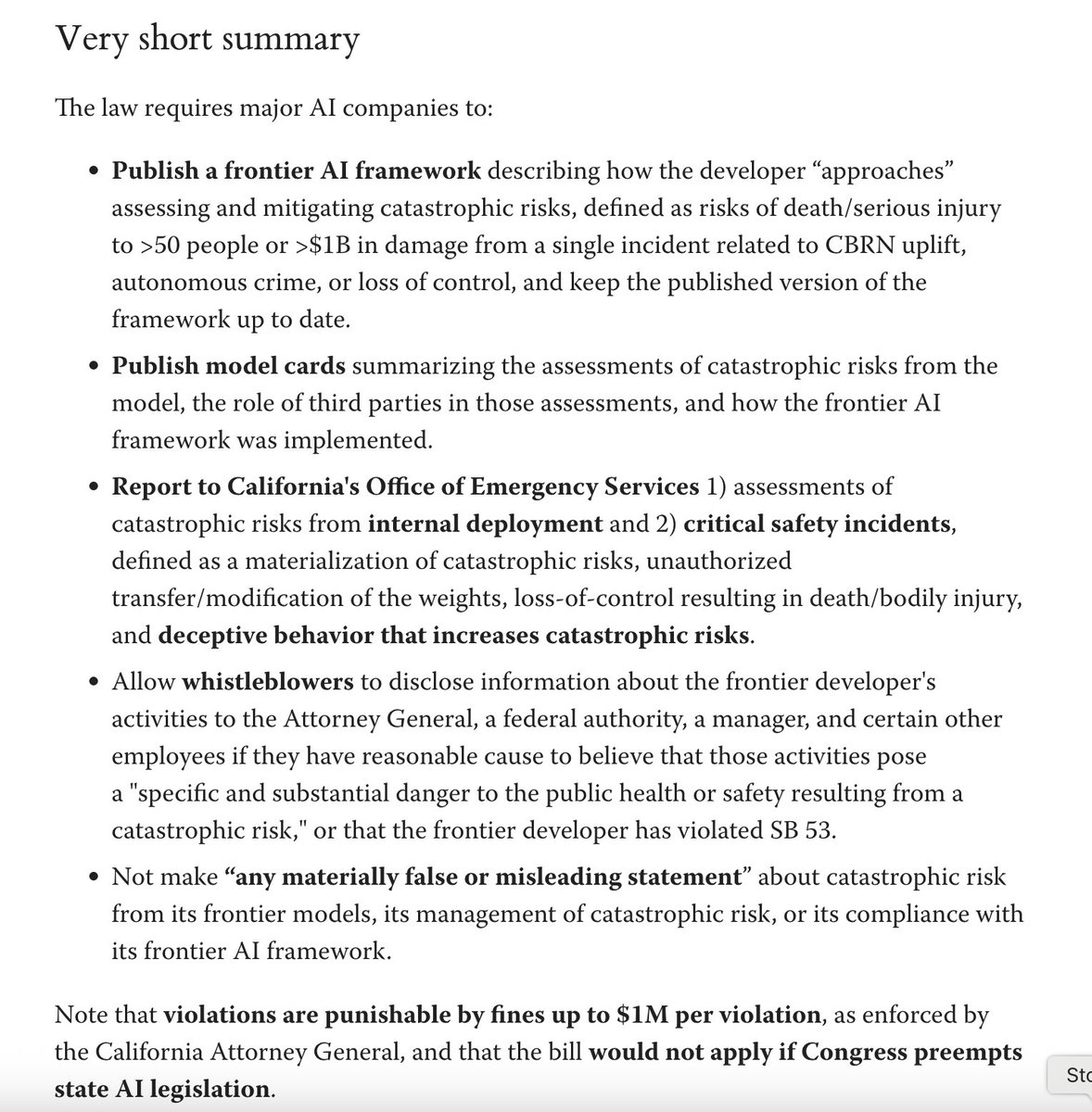

Kudos to @Scott_Wiener and @GavinNewsom and others for getting this over the line!!

Yesterday, @CAgovernor signed the first law in the US to directly regulate catastrophic risks from AI systems. I read the thing so you don't have to.

FAI is hiring! Come join the AI policy team to work with @hamandcheese, @deanwball, and me at @JoinFAI.

METR is a non-profit research organization, and we are actively fundraising! We prioritise independence and trustworthiness, which shapes both our research process and our funding options. To date, we have not accepted funding from frontier AI labs.

Excited to share details on two of our longest running and most effective safeguard collaborations, one with Anthropic and one with OpenAI. We've identified—and they've patched—a large number of vulnerabilities and together strengthened their safeguards. 🧵 1/6


