ray

@raydistributed

The AI framework trusted by OpenAI, Uber, and Airbnb. Created and developed by @anyscalecompute.


SGLang 🤝 Ray! We're super excited to have @ying11231 and @liin1211 speak at Ray Summit! They'll highlight the newest SGLang features and discuss SGLang's integration with Ray Data LLM. Hope to see you there!

SGLang at Ray Summit 2025 is coming! 📍 San Francisco • Nov 3–5 • Hosted by @anyscalecompute 🗓 On Nov 5, SGLang is invited to give a talk on Efficient LLM Serving 🎤 @ying11231 & @liin1211 will introduce core features, high-throughput & low-latency tricks, real-world…

See what changed and why 🔎 At #RaySummit 2025, Elizabeth Hu, Goku Mohandes & Akshay Malik from @anyscalecompute will announce Lineage Tracking on Anyscale: end-to-end ML lineage powered by OpenLineage. Nov 3–5 | SF Register: na2.hubs.ly/H01Q0LS0

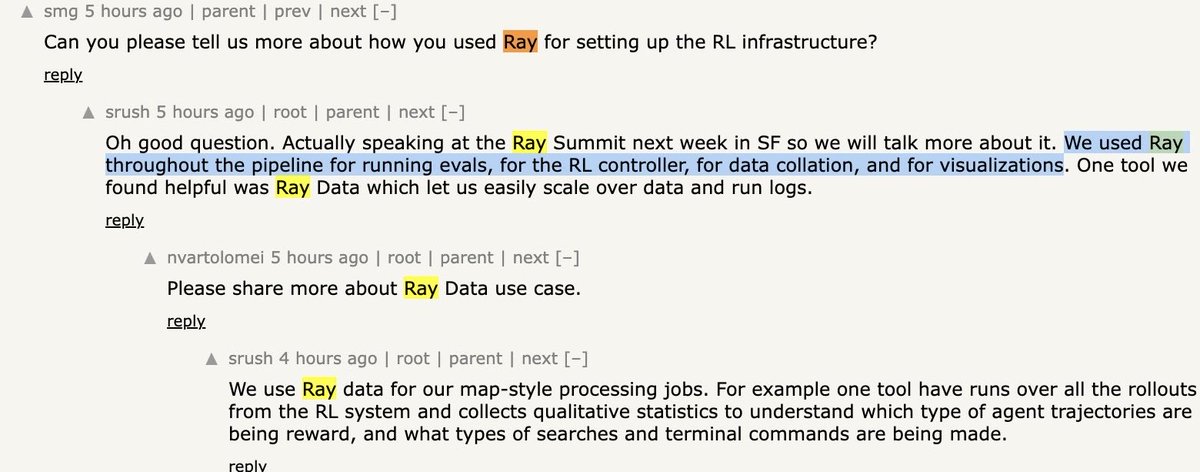

Impressive new model from Cursor 🙌 “We built custom training infrastructure leveraging PyTorch and Ray to power asynchronous reinforcement learning at scale” They use @raydistributed AND Ray Data 🔗 cursor.com/blog/composer

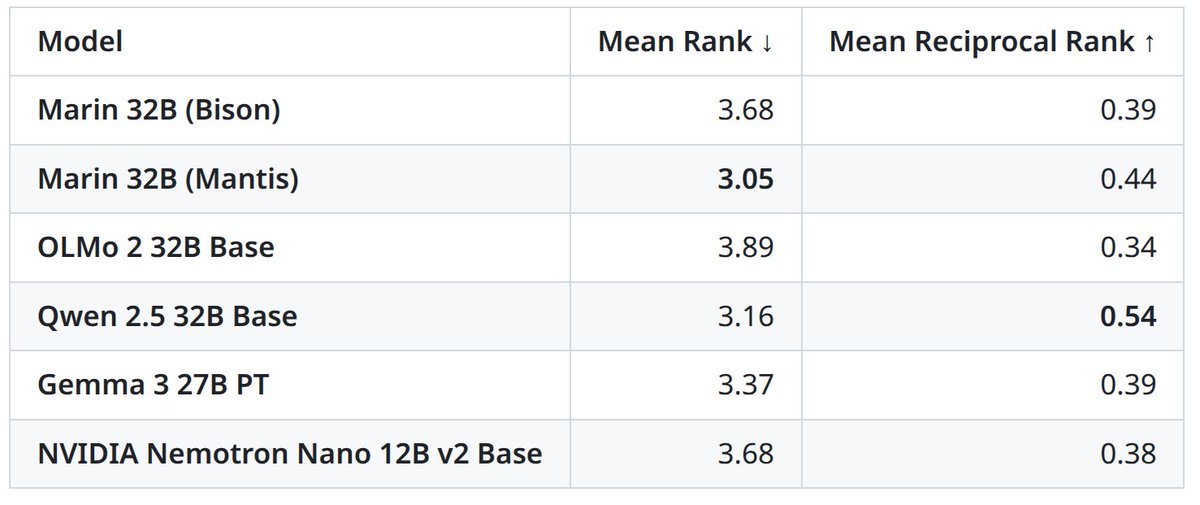

32B is done! Escaped the spiky jaws of death and it turned out pretty strong. Still need to do post-training (come join us!), but I think it's a good base to build on.

⛵Marin 32B Base (mantis) is done training! It is the best open-source base model (beating OLMo 2 32B Base) and it’s even close to the best comparably-sized open-weight base models, Gemma 3 27B PT and Qwen 2.5 32B Base. Ranking across 19 benchmarks:

Congratulations! David's talk at Ray Summit next week might be the most open and most in-depth guide to training strong models. They've done an incredible job making their journey and decisions reproducible. This kind of openness can play a big role in accelerating science.…

🔥 Following our big announcement — here’s the full vLLM takeover at Ray Summit 2025! 📍 San Francisco • Nov 3–5 • Hosted by @anyscalecompute Get ready for deep dives into high-performance inference, unified backends, prefix caching, MoE serving, and large-scale…

🔥Ray Summit 2025 will be one of the biggest events for vLLM this year, with 10+ talks centered around @vllm_project! Looking forward to seeing you there. 🤩Use our discount code (limited time only!) RAYVLLM50 anyscale.com/ray-summit/2025

#Opensource framework @raydistributed from @anyscalecompute will be integrated into the @PyTorch Foundation, scaling #dataprocessing, distributing training workloads across #GPUs, and orchestrating inference from a single source. Learn more in @Forbes: bit.ly/47wufqh

Two days ago, we shared that @raydistributed is joining the @PyTorch Foundation, part of the Linux Foundation, alongside PyTorch, Kubernetes, DeepSpeed, and vLLM. Here’s why we started Ray at UC Berkeley.

The quality of this year’s @raydistributed summit agenda and speaker lineup is awesome🔥. Personally looking forward to these: physical AI @DrJimFan Terminal-Bench @Mike_A_Merrill PrimeIntellect & EnvHub for RL on LLMs @willccbb @johannes_hage Apple on LLM inference w/ Ray…

Frontier use case of PyTorch + Ray.

Cursor just released a frontier coding model with 4x faster generation. They will be speaking at Ray Summit about their journey building a frontier coding model. - Training on 1000s of GPUs - Scaling 100,000s of sandboxed coding environments - Custom training infrastructure with…

Can’t fix what you can’t see. 👀 At #RaySummit 2025, Mengjin Yan & Nikita Vemuri (@anyscalecompute) will debut Ray-native observability: dashboards for distributed AI, telemetry in your cloud, and a new Ray Export API. Nov 3–5 | SF Register Now: na2.hubs.ly/H01PjwK0

Fortunate to be part of two (!) foundation projects (@vllm_project and @raydistributed) that have great synergy with each other. The Ray + vLLM + PyTorch stack is coming together. Congratulations, Ray!

We’re excited to welcome Ray to the PyTorch Foundation 👋 @raydistributed is an open source distributed computing framework for #AI workloads, including data processing, model training and inference at scale. By contributing Ray to the @PyTorch Foundation, @anyscalecompute…

The modern AI stack is taking shape: Kubernetes + Ray + vLLM + PyTorch. Open source, interoperable, and built for scale. Congrats to @PyTorch and @raydistributed on a huge milestone for the ecosystem. 🔥

I enjoyed speaking at #PyTorchCon today. Wanted to share one slide from my talk about open source AI infra. This is about how Ray and vLLM work together. LLM inference is growing more and more complex, and doing a good job with LLM inference means working across layers and…

Super excited for @raydistributed to join the PyTorch foundation! Daily downloads of Ray have grown nearly 10x over the past year (with the explosion of generative AI, reasoning models, RL, agents, and multimodal data). I'm very excited about growing the open source developer…

Today we’re donating Ray to The Linux Foundation under the PyTorch Foundation, alongside PyTorch + vLLM, strengthening the open compute fabric for AI. This ensures long-term neutrality, open governance, and ecosystem alignment. Blog: na2.hubs.ly/H01JydX0 Ray Summit (Nov 3–5, SF):…

Ray × DeepSpeed Meetup: AI at Scale. Talks from Masahiro Tanaka (Anyscale), Tunji Ruwase (DeepSpeed/Snowflake), Zhipeng Wang (LinkedIn), and Vinay Sridhar (Snowflake). Topics: DeepSpeed overview, SuperOffload, long-sequence training, Muon optimizer, DeepCompile + Ray for partitioned ML…


