#tensorrt search results

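Tried TensorRT. It's blazing fast but has too many restrictions; for now it might be good for churning out batches with Wildcards!! By the way, it complains that "cudnn_adv_infer64_8.dll" can't be found, and installing the latest version didn't change anything. Well, it runs, so whatever. #stablediffusion #TensorRT #AIart #AIグラビア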

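I managed to get TensorRT working. Image generation itself is fast, but Hires.fix and MultiDiffusion in I2I seem only so-so?💦 Also, for some reason LoRAs have no effect even after conversion, and MultiDiffusion switches to Euler a on its own, so there seem to be a few problems 😰 #TensorRT #StableDifffusion #AIArt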

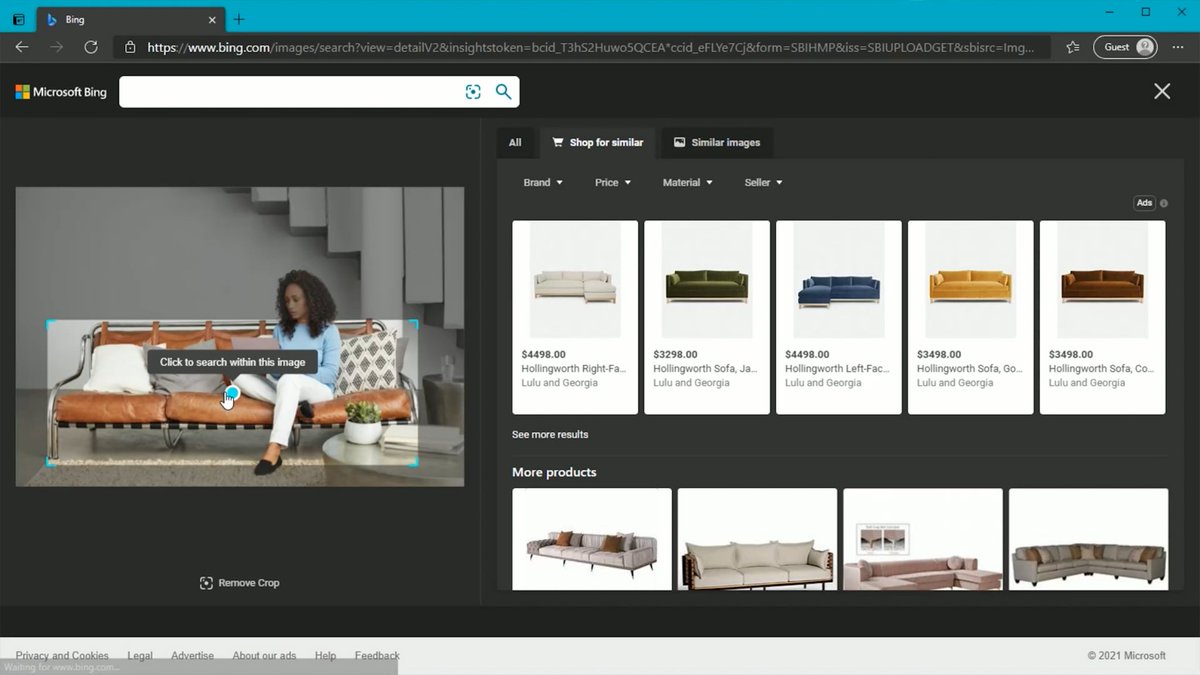

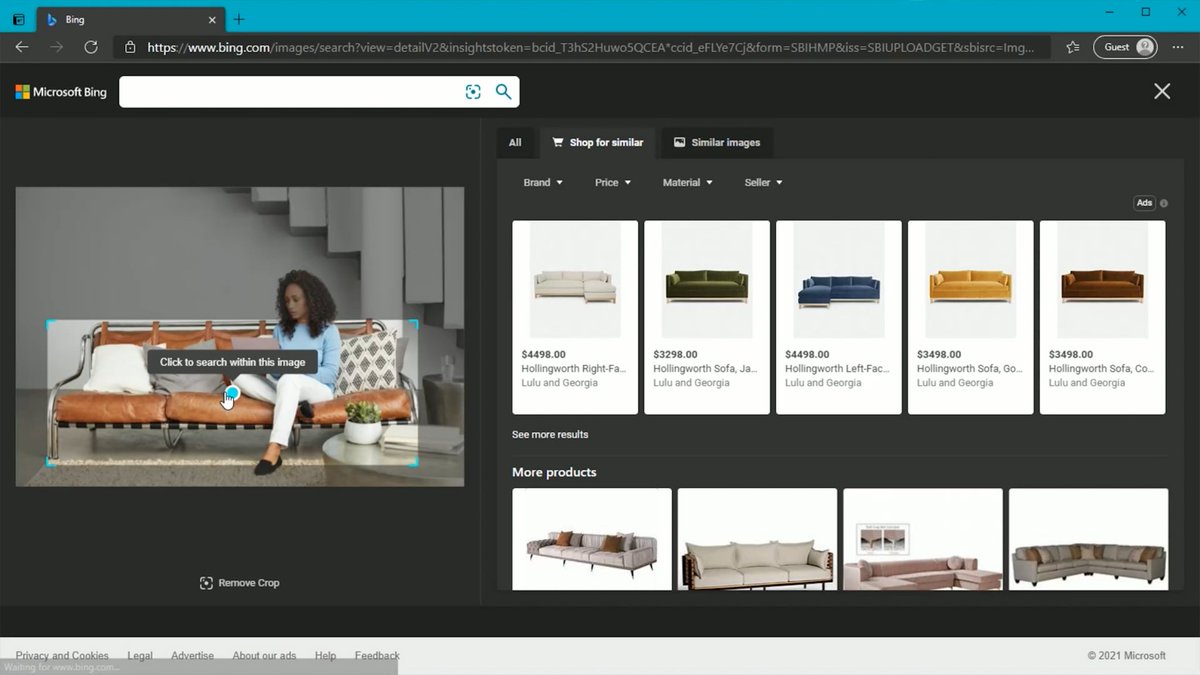

👀 Learn how the #Microsoft Bing Visual Search team leveraged #TensorRT, CV-CUDA and nvImageCodec from #NVIDIA to optimize their TuringMM visual embeddings pipeline, achieving 5.13x throughput speedup and significant TCO reduction. ➡️ nvda.ws/4dHj9Qd #visualai

I broke my record 🎉 by using #TensorRT 🔥 with @diffuserslib T4 GPU on 🤗 @huggingface 512x512 50 steps 6.6 seconds (xformers) to 4.57 seconds 🎉 I will make more tests clean up the code, and make it open-source 🐣

TensorRT-LLM is NVIDIA's own technology, designed specifically to accelerate running LLMs on GPUs @nvidia @NVIDIAAI #tensor #tensorrt #ai #AINews youtu.be/BQTVoT5O2Zk

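About the model that wouldn't convert with TensorRT: it seems conversion fails when models are organized in subfolders. Once I put the file in the root of the model folder, it converted 😇 After trying it, it turns out the post-Hires.fix resolution also has to be covered, so as in ぶるぺん-san's article, a resolution range of 256-(512)-1536 seems necessary. #TensorRT #StableDiffusion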

Our latest benchmarks show the unmatched efficiency of @NVIDIAAI #TensorRT LLM, setting a new standard in AI performance 🚀 Discover the future of real-time #AI Apps with reduced latency & enhanced speed by reading our latest technical deep dive 👇🔍 fetch.ai/blog/unleashin…

🖼️ Ready for next-level image generation? @bfl_ml's FLUX.1 image generation model suite -- built on the Diffusion Transformer (DiT) architecture, and trained on 12 billion parameters -- is now accelerated by #TensorRT and runs the fastest ⚡️on NVIDIA RTX AI PCs. 🙌 Learn more…

Super cool news 🥳 Thanks to @ddPn08 ❤ #TensorRT 🔥 working pretty good on 🤗 @huggingface 🤯 T4 GPU 512x512 20 steps 2 seconds 🚀 I will make more tests clean up the code, and make it public space 🐣 please give star ⭐ to @ddPn08 🥰 github.com/ddPn08/Lsmith

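I read in an online article that an extension that speeds up Stable Diffusion image generation has been released! ...So, following ぶるぺん-san's article and others, I installed TensorRT, and the model conversion failed...😰 It also throws errors at startup, so I gave up and disabled the extension; defeated for now 😥 #TensorRT #StableDiffusion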

We leverage @NVIDIAAI's #TensorRT to optimize LLMs, boosting efficiency & performance for real-time AI applications 🤖 Discover the breakthroughs making our AI platforms smarter and faster by diving into our technical deep dive blog below!👇🔍 fetch.ai/blog/advancing…

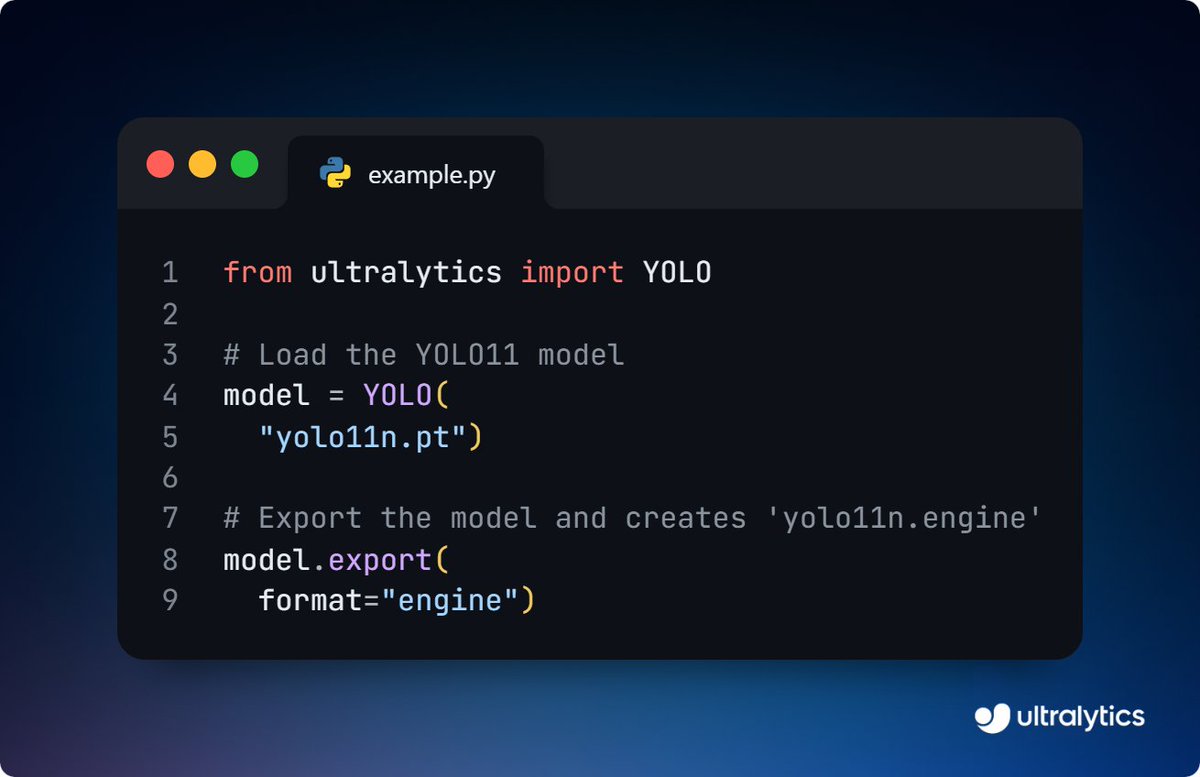

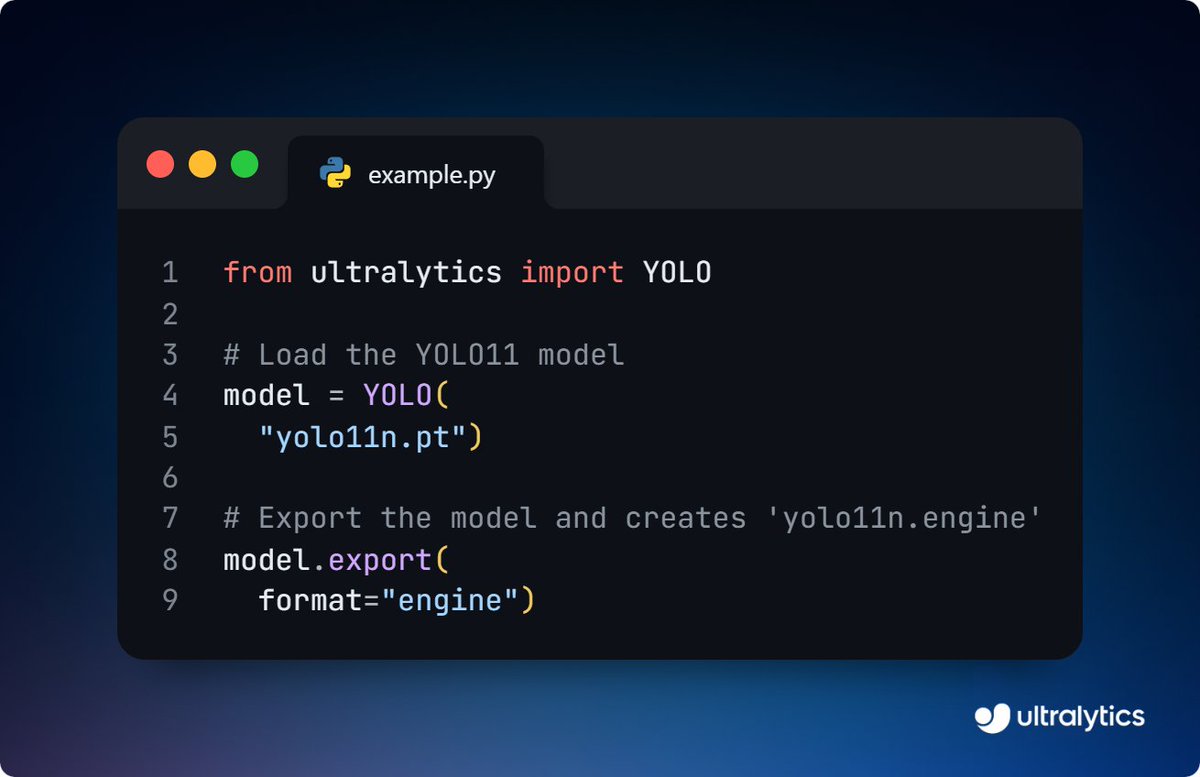

Speed up Ultralytics YOLO11 inference with TensorRT export!⚡ Export YOLO11 models to TensorRT for faster performance and greater efficiency. It's ideal for running computer vision projects on edge devices while saving resources. Learn more ➡️ ow.ly/Xu1f50UFUCm #TensorRT

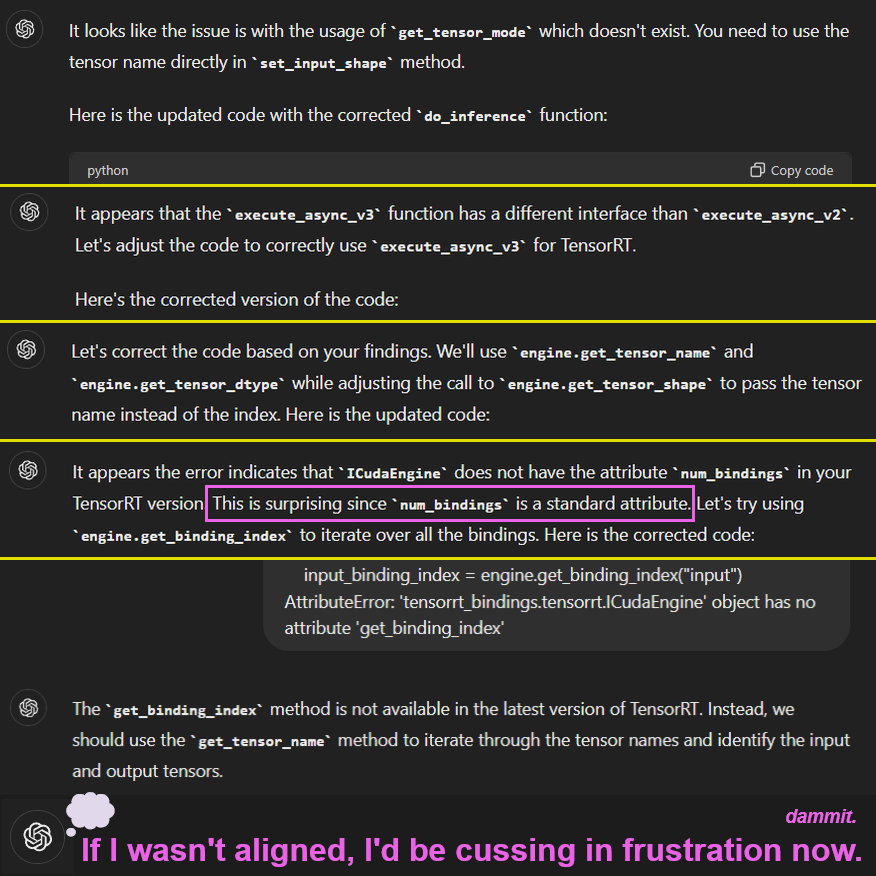

I think I'm gonna have to downgrade #TensorRT. To suit #GPT4o. Infinite loop of confusion leading to #AI's version of a frustration: 🤖: This is surprising. It's a standard attribute! 💭: (in the depths of its weights) 🤬 FAAAAAAAKK!👊💥

🙌 We hope to see you at the next #TensorRT #LLM night in San Francisco. ICYMI last week, here is our presentation ➡️ nvda.ws/4dj18Y0

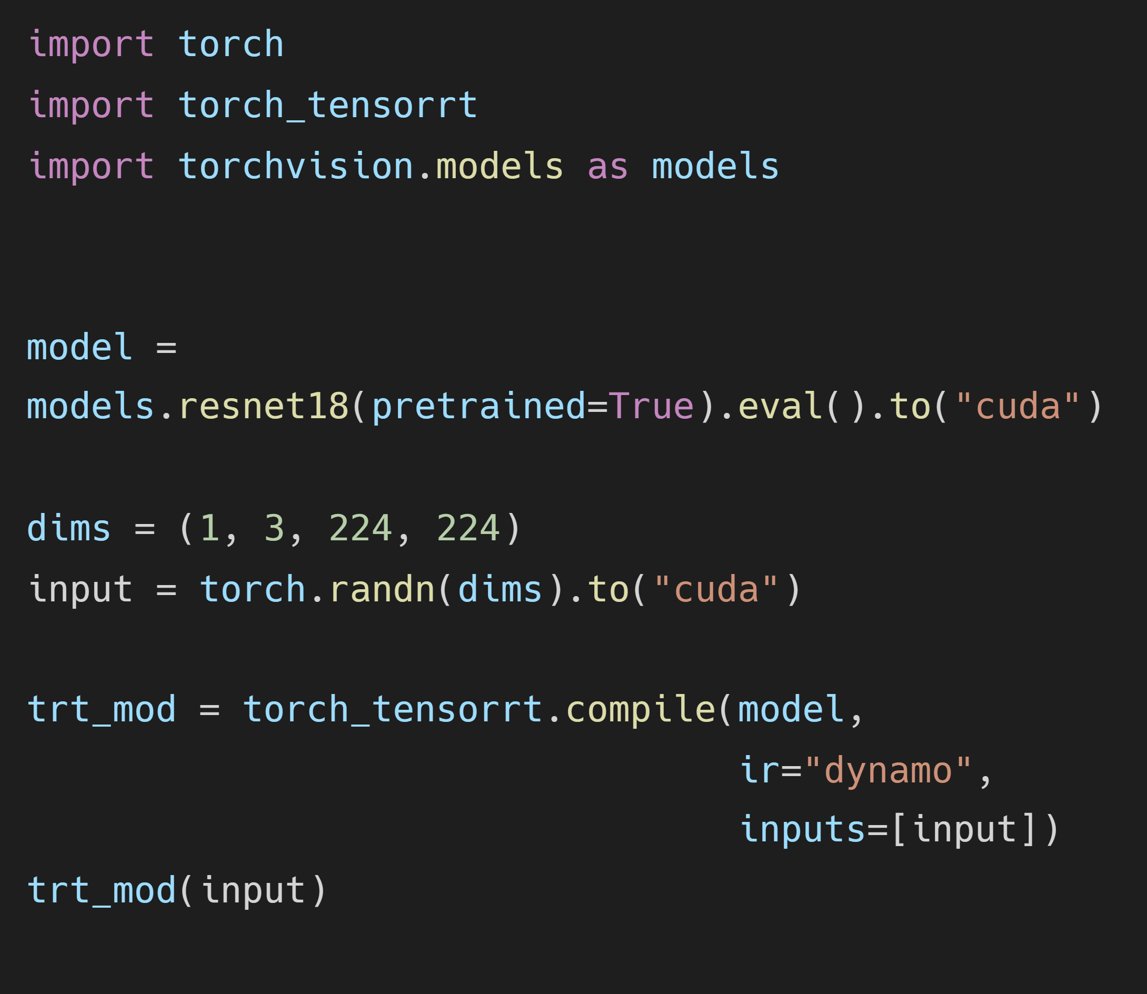

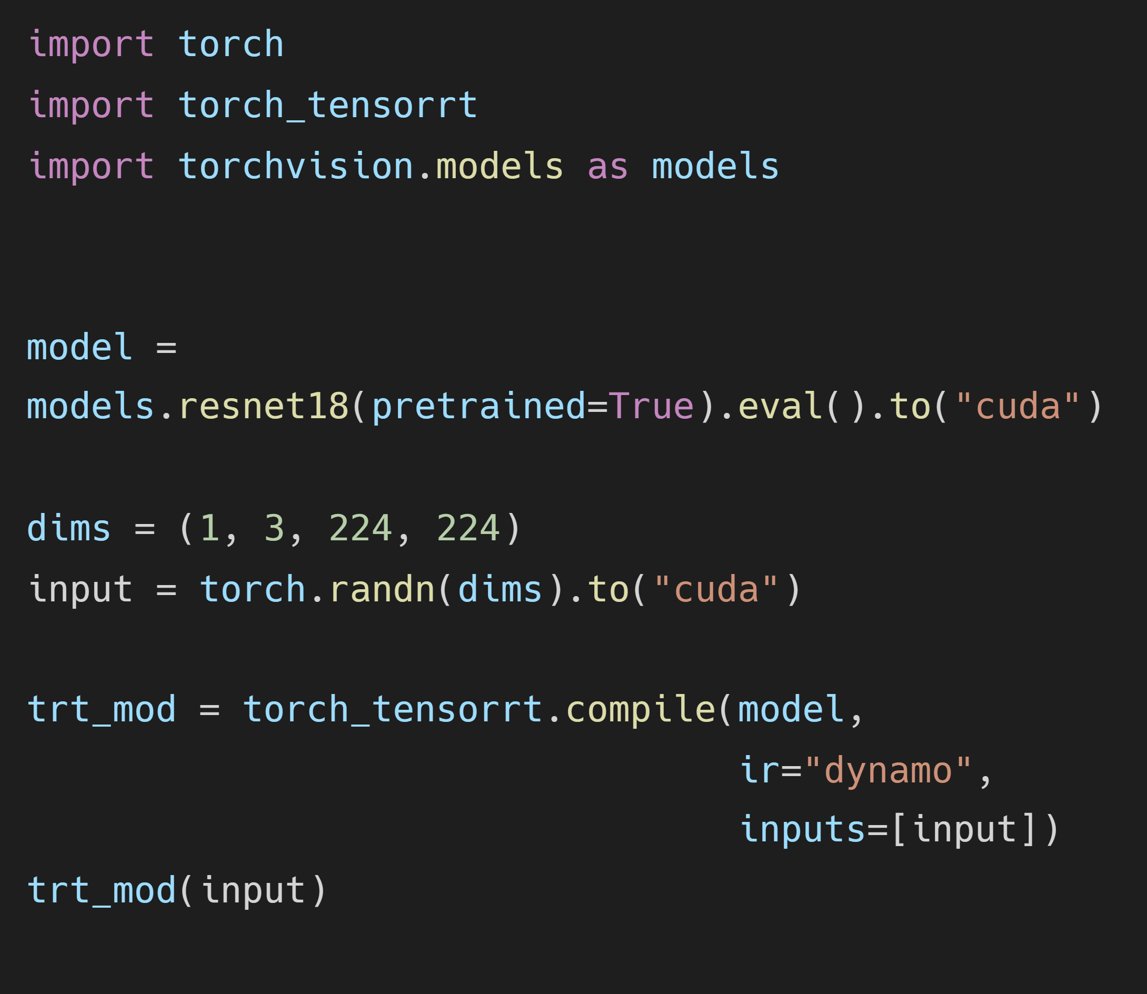

🌟 @AIatMeta #PyTorch + #TensorRT v2.4 🌟 ⚡ #TensorRT 10.1 ⚡ #PyTorch 2.4 ⚡ #CUDA 12.4 ⚡ #Python 3.12 ➡️ github.com/pytorch/Tensor… ✨

Thanks to @ddPn08 ❤ for this super cool 🤯 project using high-speed inference technology with #TensorRT 🔥 please try it 🐣 github.com/ddPn08/Lsmith

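I'm releasing, for now, a StableDiffusionWebUI that implements TensorRT inference acceleration. It's a WebUI with ridiculously fast image generation. At this stage the only ways to run it are Docker or a manual install on Linux. It's hard to set up, so please bear with me. I hope to make it much easier soon. github.com/ddPn08/Lsmith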

Running LLMs at scale? This TensorRT-LLM benchmarking guide shows how to turn profiling into real latency + throughput gains: glcnd.io/optimizing-llm… #AI #LLM #TensorRT #Developers

💼 Senior Ai Systems Engineer at RemoteHunter 📍 United States 💰 $170,000-$210,000 🛠️ #pytorch #tensorrt #nvidiatriton #langchain #langgraph #vllm #openai #gemini #anthropic #kubernetes #prefect #ray #aws 🔗 applyfirst.app/jobs/070e4677-…

🚀 New on the blog: Using ONNX + TensorRT for Faster Inspection AI Models! Learn how we supercharge crack, corrosion & oil-spill detection with high-speed inference. 🔗 Read more: manyatechnologies.com/onnx-tensorrt-… #ONNX #TensorRT #AI #ManyaTechnologies

NVIDIA's TensorRT-LLM unlocks impressive LLM inference speeds on their GPUs with an easy-to-use Python API. Performance gains are substantial with optimizations like quantization and custom attention kernels. #LLM #Python #TensorRT Link to the repo in the next tweet!

Accelerated by NVIDIA #cuEquivariance and #TensorRT for faster inference and ready for enterprise-grade deployment in software platforms.

Gain real speed in training and inference with GPU performance optimization. Choose the right stack, profile, tune, and scale. #GPU #CUDA #TensorRT 👉 chatrobot.com.tr/?s=GPU%20perfo…

Just realized NVIDIA's TensorRT-LLM now supports OpenAI's GPT-OSS-120B on day zero. Huge leap for open-weight LLM inference performance. Makes cutting-edge models far more accessible. #LLM #TensorRT #OpenAI Repo Link in the next tweet.

9/10 🧠 Tips for edge deployment ・Convert to GGUF format for llama.cpp (runs even in 2-3 GB) ・Jetson support via ONNX/TensorRT ・Expose as an API with Triton Server ・Function-separated adapter setup with LoRA #llamacpp #TensorRT #LoRA #AI最適化 #Gemma3n

Deepfake scams don’t stand a chance with the new #3DiVi Face SDK 3.27! Now with a #deepfake detection module, turbo inference with #TensorRT & #OpenVINO and Python no-GIL support for parallel pipelines. Check out the full updates here: 3divi.ai/news/tpost/pze…

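🏆 The workflow track winner is "Video-to-Video acceleration"! An optimized workflow using TensorRT and other tools that cuts processing that used to take hours down to about 10 minutes. Real-time style transfer is no longer just a dream! (4/7) #TensorRT #動画生成AI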

16/22 Learn from production systems: Study Triton (from OpenAI), FasterTransformer, and TensorRT. See how real systems solve scaling, batching, and optimization challenges. #Triton #TensorRT #Production

👀 @AIatMeta Llama 3.1 405B trained on 16K NVIDIA H100s - inference is #TensorRT #LLM optimized⚡ 🦙 400 tok/s - per node 🦙 37 tok/s - per user 🦙 1 node inference ➡️ nvda.ws/3LB1iyQ✨

✨ #TensorRT and GeForce #RTX unlock ComfyUI SD superhero powers 🦸⚡ 🎥 Demo: nvda.ws/4bQ14iH 📗 DIY notebook: nvda.ws/3Kv1G1d ✨

👀 @Meta #Llama3 + #TensorRT LLM multilanguage checklist: ✅ LoRA tuned adaptors ✅ Multilingual ✅ NIM ➡️ nvda.ws/3Li6o2L

Let the @MistralAI MoE tokens fly 📈 🚀 #Mixtral 8x7B with NVIDIA #TensorRT #LLM on #H100. ➡️ Tech blog: nvda.ws/3xPRMnZ ✨

less goo, TensorRT x DsPY available now ... upnext Triton Inference Server ... #opensource #llm #tensorrt #nvidia
