#activationfunctions search results

Activation functions like sigmoid or tanh shape how machine learning models learn; a good choice can speed up training, reduce error, and lower the risk of dead neurons. #sigmoid #activationfunctions #machinelearning

Softmax is suited to output layers: it turns raw scores into class probabilities that sum to 1 (100%). It is rarely used as a hidden-layer activation. #softmax #activationfunctions #machinelearning
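A minimal sketch of softmax in plain Python, assuming the input is a list of raw scores (logits). The function name `softmax` and the sample scores are illustrative, not taken from the post. Subtracting the maximum score first is a common numerical-stability trick and does not change the result.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    # Shift by the max so exp() never sees a large positive number.
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # illustrative logits
print(probs, sum(probs))
```

The largest score gets the largest probability, and the ordering of the inputs is preserved.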

Activation functions in machine learning bound a neuron's output range and can improve model accuracy by avoiding problems such as saturated or dead neurons. #activationfunctions #machinelearning #relu

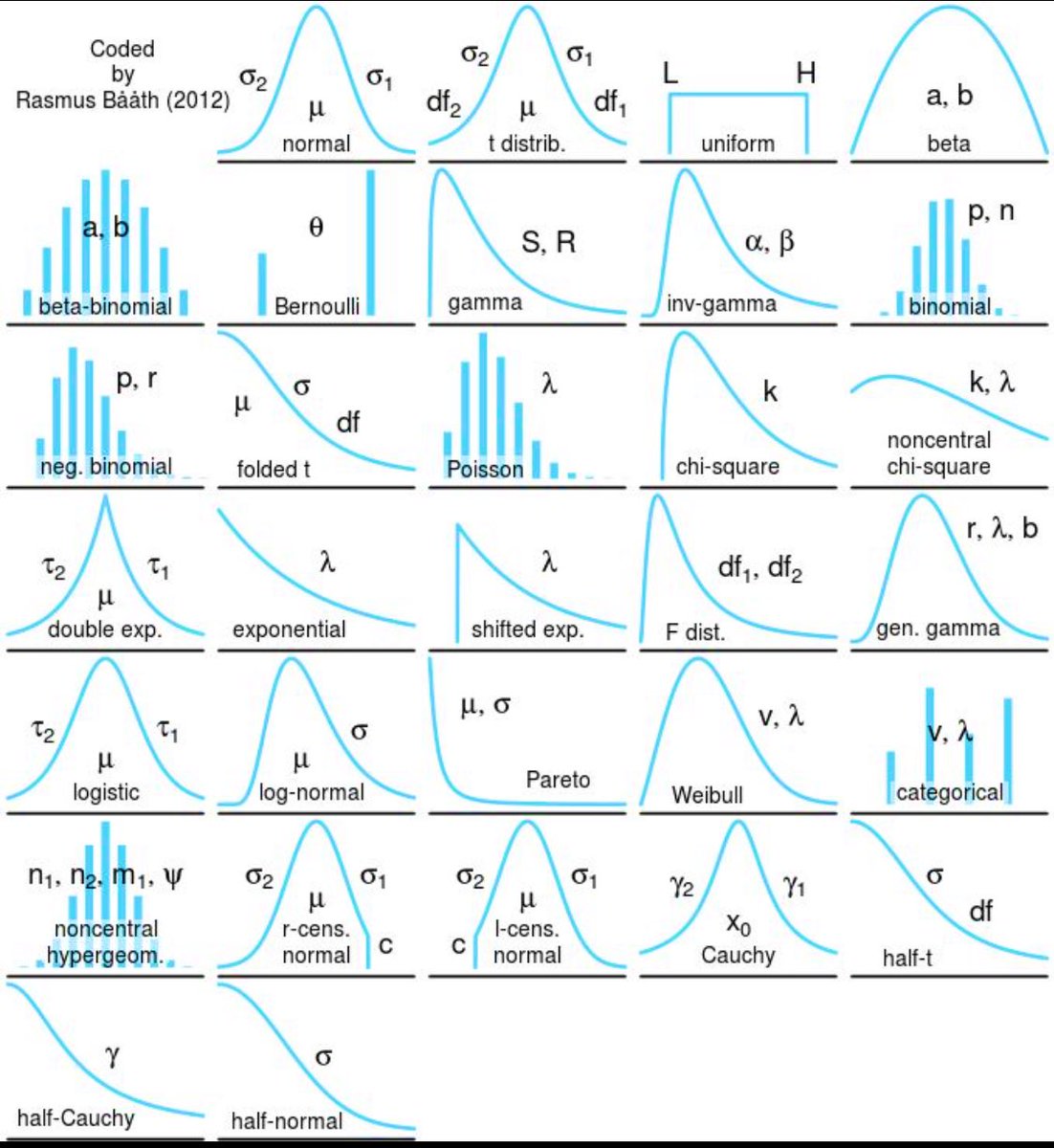

Know your distributions. Normal ain’t the only one. #ActivationFunctions #ProbabilityDistribution #WeekendStudies

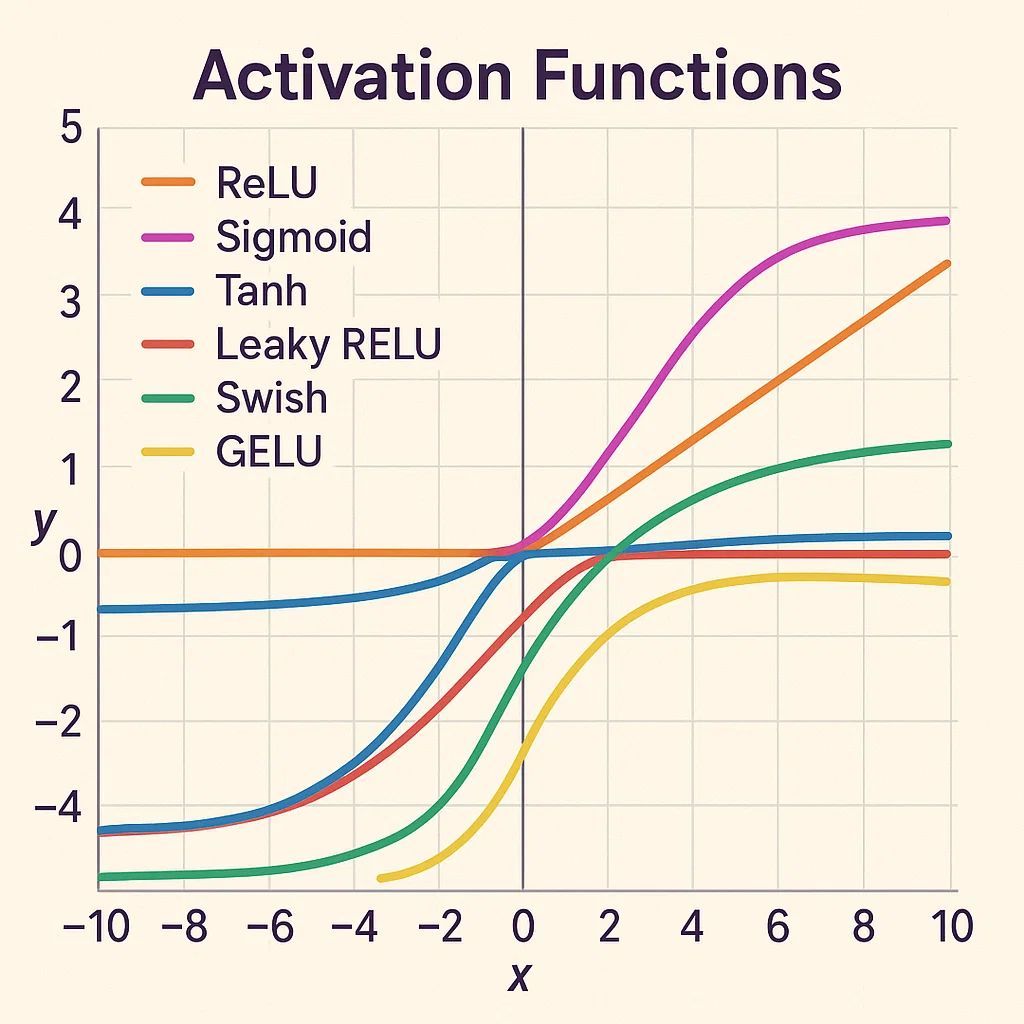

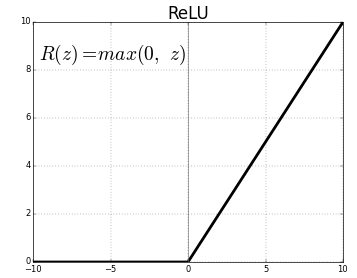

ReLU emerges as the fastest activation function after optimization in benchmarks. #machinelearning #activationfunctions #neuralnetworks

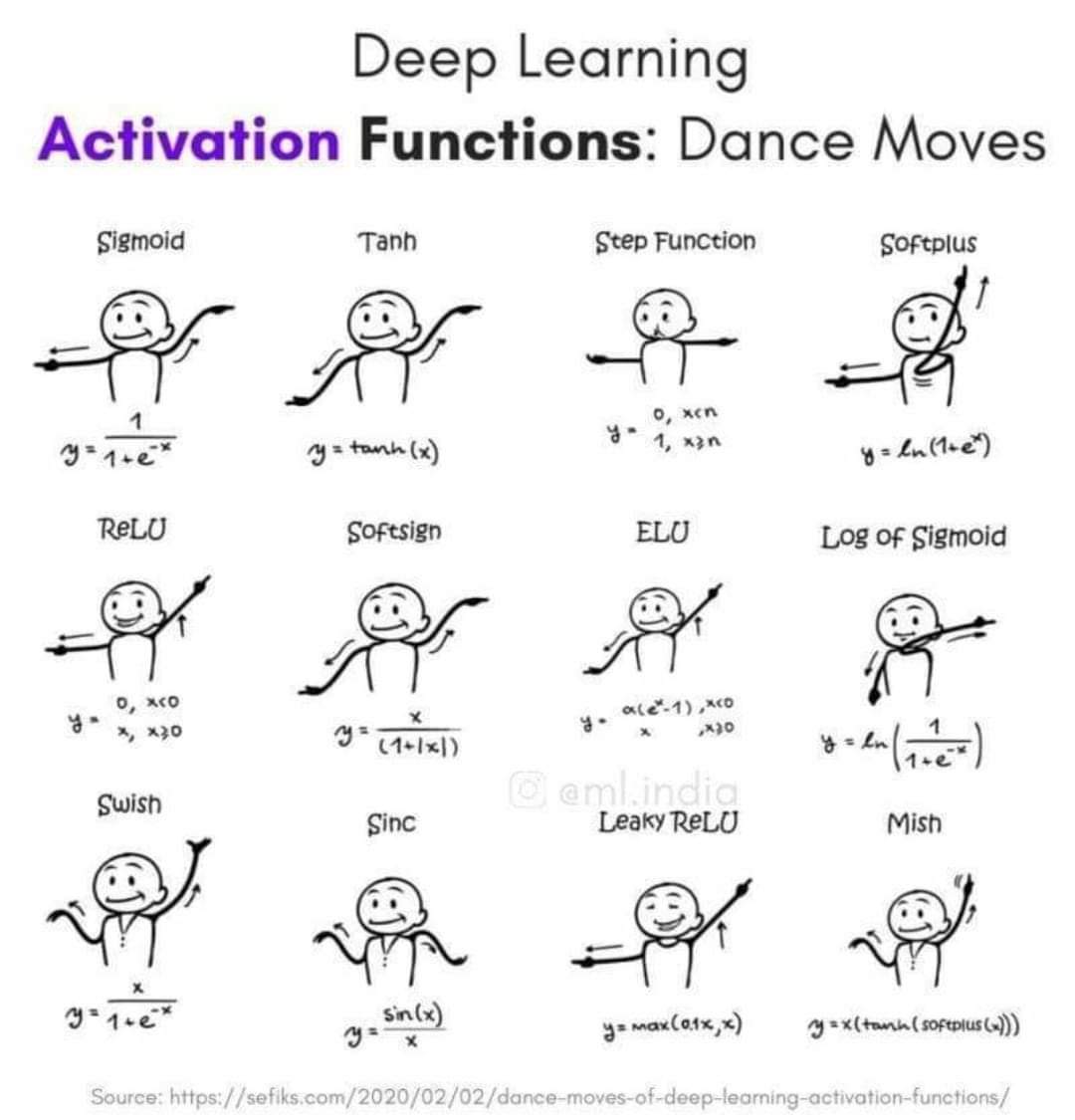

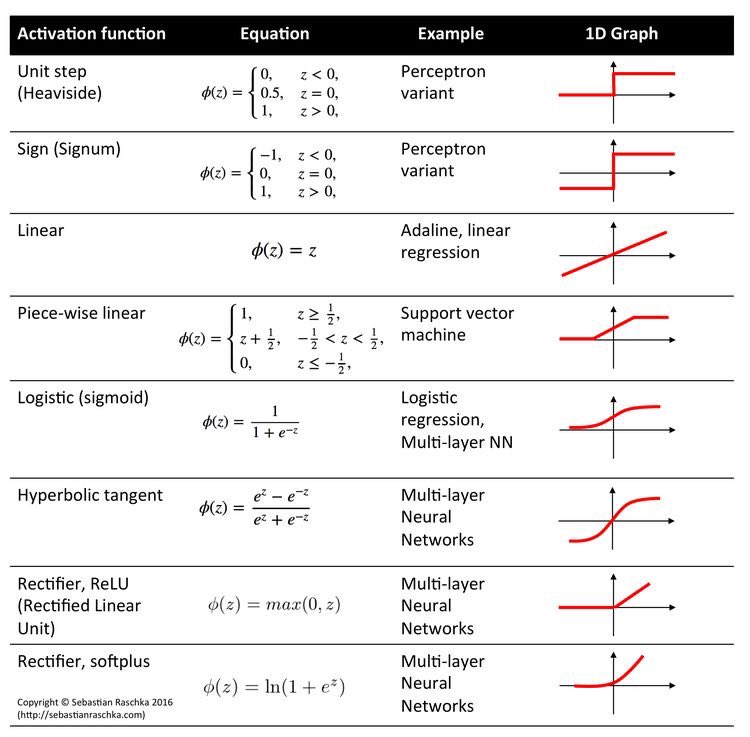

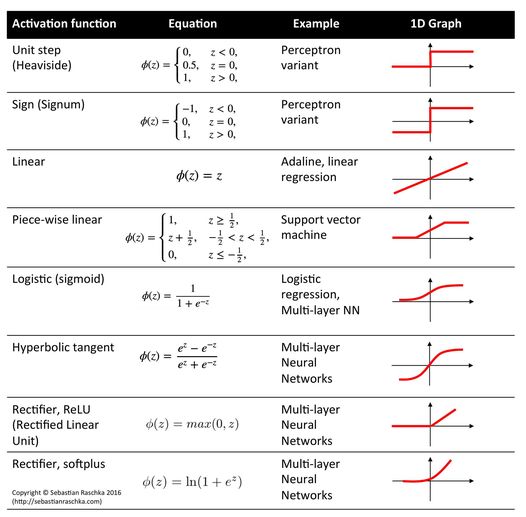

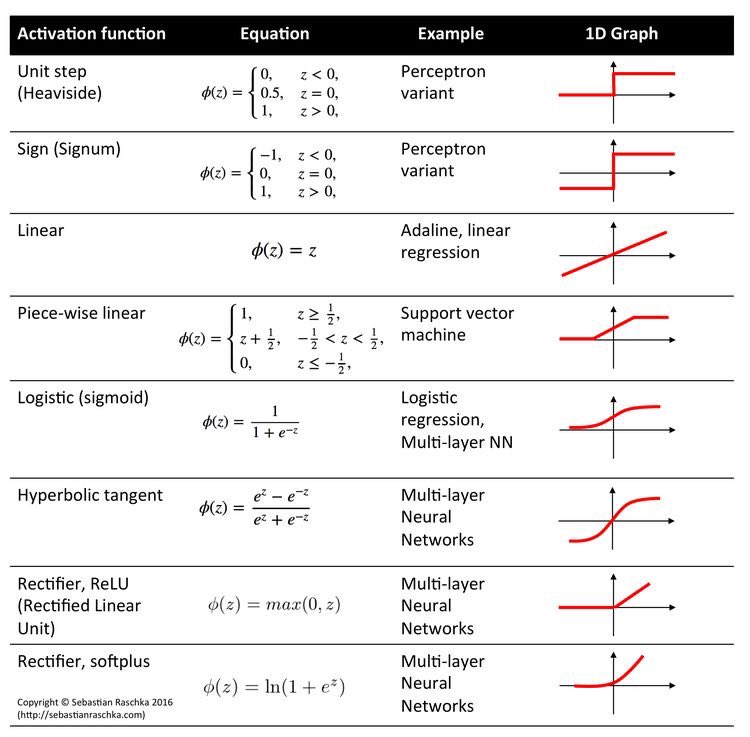

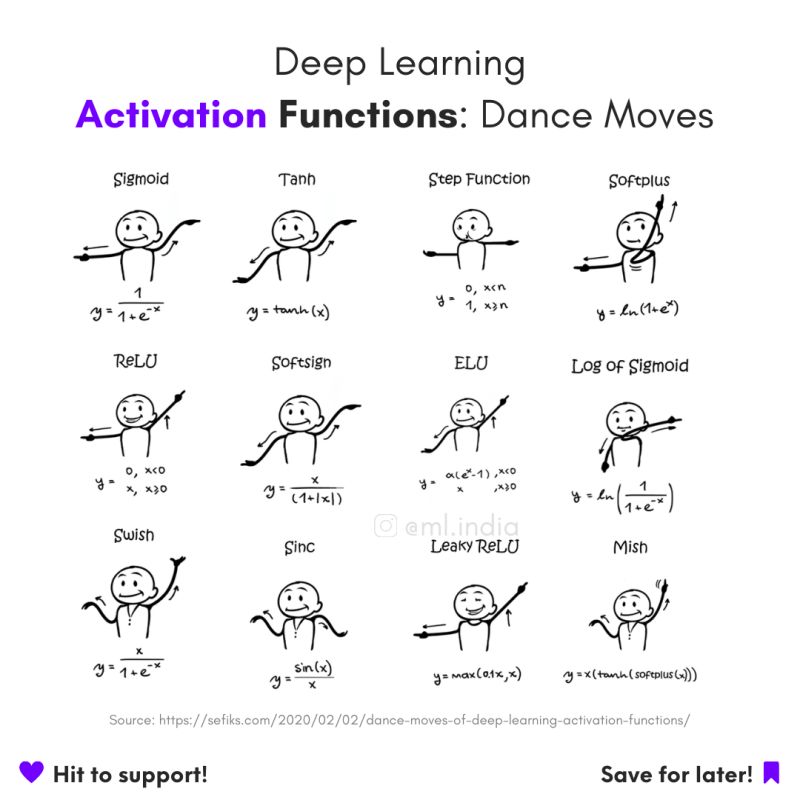

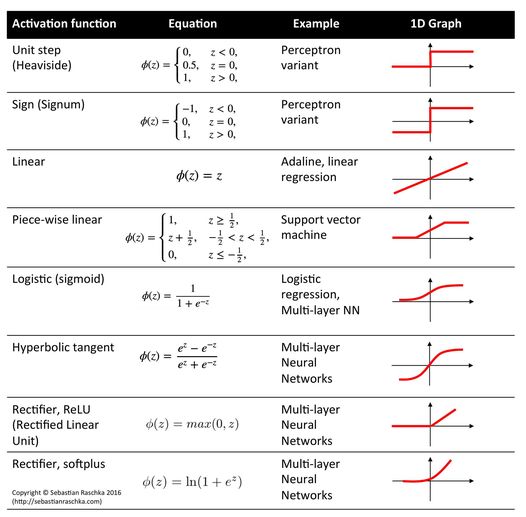

Looking for the right type of Activation Function for your Neural Network Model? Here's a list describing each and every one. Don't forget to look at the last image. 🧵👇 #ActivationFunctions #deeplearning #python #codanics #neuralnetworks #machinelearning

Activation functions #NeuralNetwork #DeepLearning #ActivationFunctions

Activation functions in deep networks aren't just mathematical curiosities—they're the decision makers that determine how information flows. ReLU, sigmoid, and tanh each shape learning differently, influencing AI behavior and performance. #DeepNeuralNetworks #ActivationFunctions…

🚀 Activation Functions: The Secret Sauce of Neural Networks! They add non-linearity, helping models grasp complex patterns! 🧠 🔹 ReLU🔹Sigmoid🔹Tanh Power up your AI models with the right activation functions! Follow #AI365 👉 shorturl.at/1Ek3f #ActivationFunctions 💡🔥

Neural Network Architectures and Activation Functions: A Gaussian Process Approach - freecomputerbooks.com/Neural-Network… Look for "Read and Download Links" section to download. #NeuralNetworks #ActivationFunctions #GaussianProcess #DeepLearning #MachineLearning #GenAI #GenerativeAI

RT The Importance and Reasoning behind Activation Functions dlvr.it/SCZlJ8 #activationfunctions #neuralnetworks #machinelearning #datascience

🧠#ActivationFunctions in #DeepLearning! They introduce non-linearity, enabling neural networks to learn complex patterns. Key types: Sigmoid, Tanh, ReLU, Softmax. Essential for enhancing model complexity & stability. 🚀 #AI #MachineLearning #DataScience

💥 An overview of activation functions for Neural Networks! Source: @BDAnalyticsnews #NeuralNetwork #ActivationFunctions

RT Understanding ReLU: The Most Popular Activation Function in 5 Minutes! dlvr.it/RlBysm #relu #activationfunctions #artificialintelligence #machinelearning

"Activation functions: the secret of neural networks! They determine outputs based on inputs & weights. Quantum neural networks take it even further by implementing any activation function without measuring outputs. Truly mind-blowing! #ActivationFunctions #QuantumNeuralNetwor

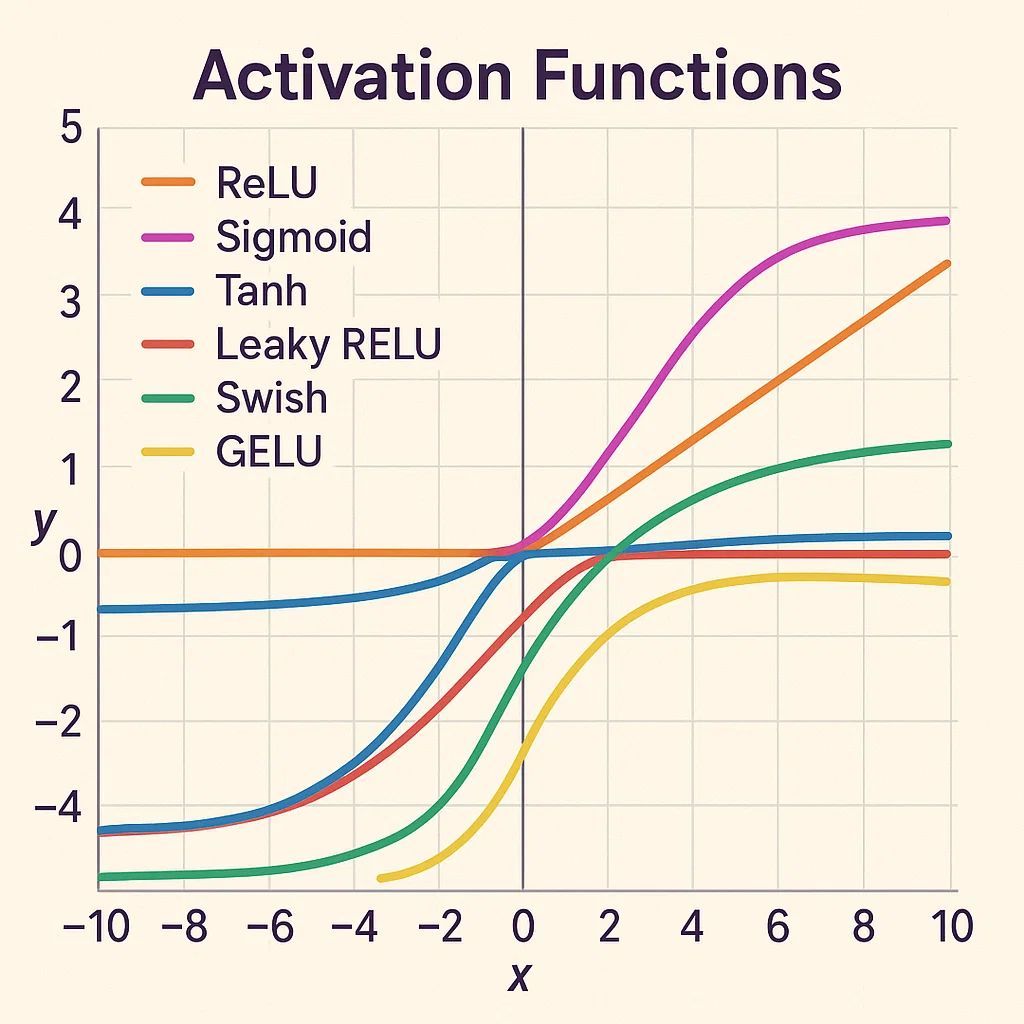

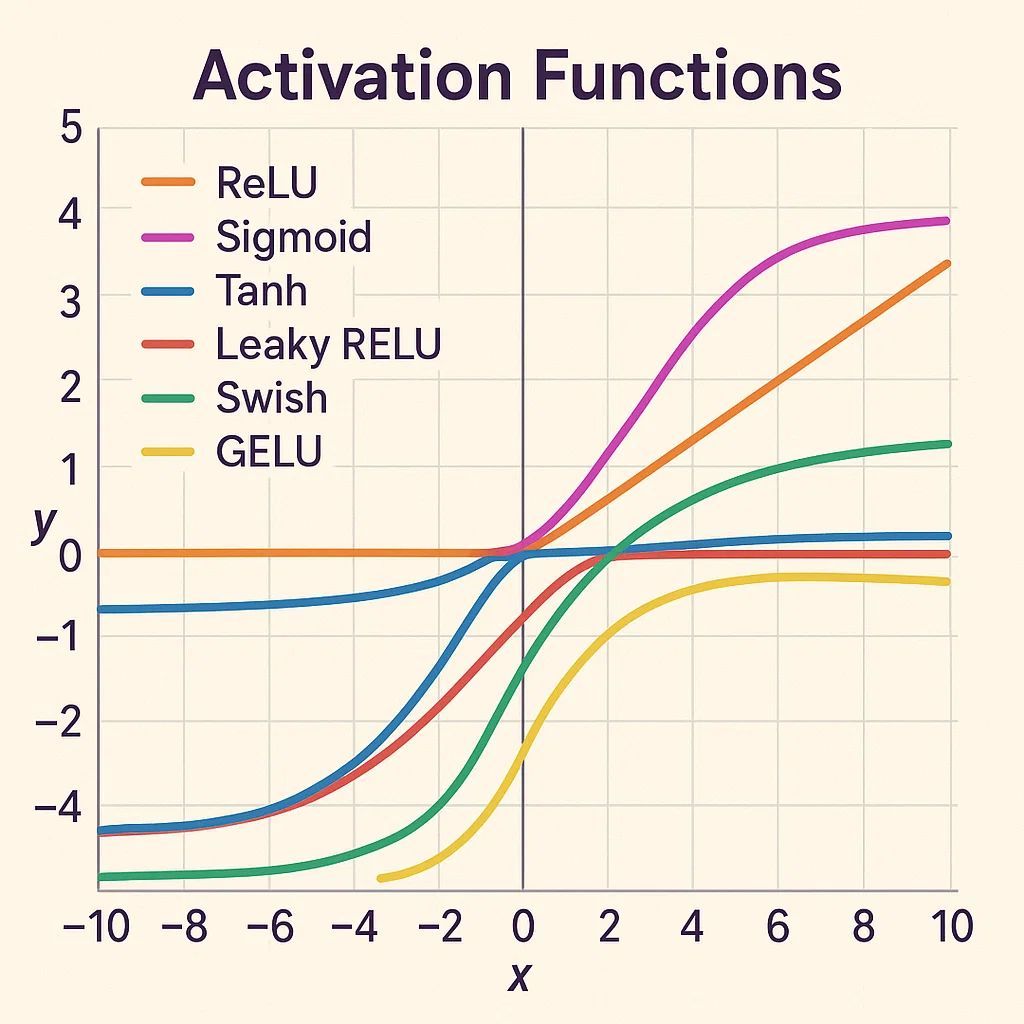

RT On the Disparity Between Swish and GELU dlvr.it/RtvGNy #activationfunctions #neuralnetworks #artificialintelligence

10 Activation Functions Every Data Scientist Should Know About - websystemer.no/10-activation-… #activationfunctions #artificialintelligence #deeplearning #machinelearning #statistics

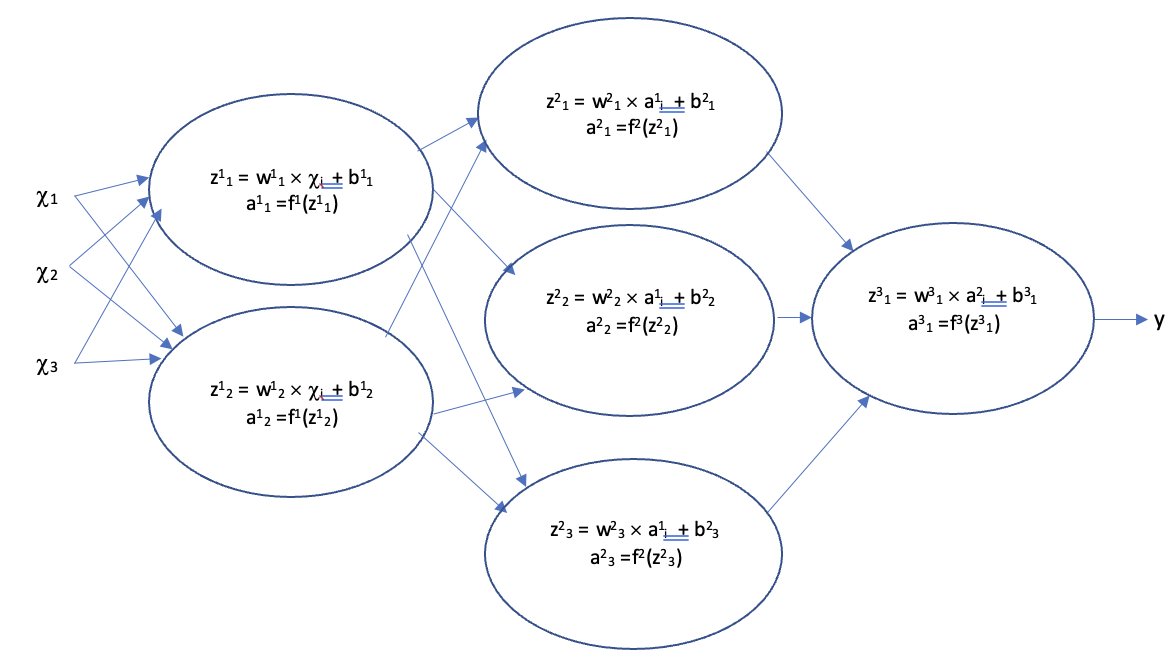

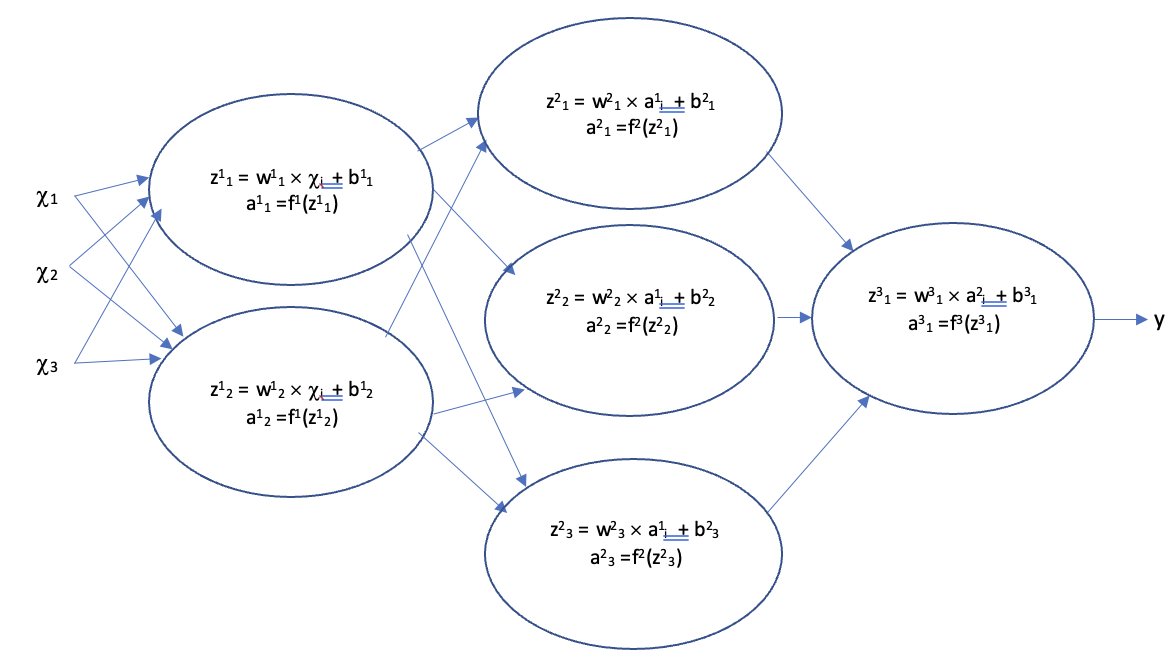

Neuron Aggregation & Activation Functions – In ANNs, aggregation combines weighted inputs, while activation functions introduce non-linearity letting networks learn complex patterns instead of staying linear. #DeepLearning #MachineLearning #ActivationFunctions #AI #NeuralNetworks
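A rough sketch of the two steps in a single neuron, written in plain Python. The function name `neuron`, the choice of tanh as the activation, and the sample numbers are all assumptions made for illustration.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum (aggregation), then tanh (activation)."""
    # Aggregation: combine the weighted inputs into a single number.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Activation: a non-linear squash of that number into (-1, 1).
    return math.tanh(z)

out = neuron([1.0, -2.0], [0.5, 0.25], bias=0.0)  # z = 0.5 - 0.5 = 0.0
print(out)
```

Without the second step the neuron would return `z` directly, which is a purely linear function of its inputs.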

🧠 Let’s activate some neural magic! ReLU, Sigmoid, Tanh & Softmax each shape how your network learns & predicts. From binary to multi-class—choose wisely to supercharge your model! ⚡ 🔗buff.ly/Cx76v5Y & buff.ly/5PzZctS #AI365 #ActivationFunctions #MLBasics

Day 28 🧠 Activation Functions, from scratch → ReLU: max(0, x) — simple & fast → Sigmoid: squashes to (0,1), good for probs → Tanh: like sigmoid but centered at 0 #MLfromScratch #DeepLearning #ActivationFunctions #100DaysOfML
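The three functions listed above can be sketched in plain Python roughly as follows. This is one possible from-scratch version, not the poster's code; the branch inside `sigmoid` is an added assumption to avoid overflow for very negative inputs.

```python
import math

def relu(x):
    # max(0, x): pass positives through, zero out negatives.
    return max(0.0, x)

def sigmoid(x):
    # Squashes any real number into the range 0 to 1.
    # Two branches so exp() is never called on a large positive number.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def tanh(x):
    # Like sigmoid, but the output range is (-1, 1) and centered at 0.
    return math.tanh(x)

for x in (-2.0, 0.0, 2.0):
    print(x, relu(x), sigmoid(x), tanh(x))
```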

🚀 Explore the Activation Function Atlas — your visual & mathematical map through the nonlinear heart of deep learning. From ReLU to GELU, discover how activations shape AI intelligence. 🧠📈 🔗 programming-ocean.com/knowledge-hub/… #AI #DeepLearning #ActivationFunctions #MachineLearning

programming-ocean.com

Activation Function Atlas — From ReLU to GELU

A curated atlas of neural activation functions. Mathematical intuition meets visual clarity.

Neural Networks: Explained for Everyone Neural Networks Explained The building blocks of artificial intelligence, powering modern machine learning applications ... justoborn.com/neural-network… #activationfunctions #aiapplications #AIEthics

Builder Perspective

- #AttentionMechanisms: Multi-head attention patterns
- Layer Configuration: Depth vs. width tradeoffs
- Normalization Strategies: Pre-norm vs. post-norm
- #ActivationFunctions: Selection and placement

4️⃣ Sigmoid’s Secret 🤫 Why do we use sigmoid activation? It adds non-linearity, letting the network learn complex patterns! 📈 sigmoid = @(z) 1 ./ (1 + exp(-z)); Without it, the network is just fancy linear regression! 😱 #ActivationFunctions #DeepLearning
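The one-liner in the post is MATLAB/Octave syntax. As a hedged illustration of the "fancy linear regression" point, here is a small Python sketch with made-up scalar weights (`w1`, `b1`, `w2`, `b2` are hypothetical). It shows that two stacked linear layers fold into one, while a sigmoid in between breaks that.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical weights for a tiny 1-input, 2-layer network.
w1, b1, w2, b2 = 2.0, 1.0, -3.0, 0.5

def two_linear_layers(x):
    # Layer 2 applied to layer 1, with no activation in between.
    return w2 * (w1 * x + b1) + b2

def one_linear_layer(x):
    # The same function, folded into a single weight and bias.
    return (w2 * w1) * x + (w2 * b1 + b2)

def with_sigmoid(x):
    # Same weights, but with a sigmoid between the layers.
    return w2 * sigmoid(w1 * x + b1) + b2

for x in (-1.0, 0.0, 1.0):
    print(x, two_linear_layers(x), one_linear_layer(x), with_sigmoid(x))
```

The first two columns of output match for every input, so the extra layer adds no expressive power until a non-linearity is inserted.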

I just published a blog about Asymmetric Tanh Pi 4 for Deep Neural Nets link.medium.com/rINE3VxixQb and corresponding github.com/Mastermindless… #DeepLearning #ArtificialIntelligence #ActivationFunctions #MachineLearning #NeuralNetworks #ResNet #CustomTanh #AIResearch #GradientFlow

asymmetric peak activation functions to overcome the early neuron death - Mastermindless/tanh_pi_4

Building a ReLU Activation Function from Scratch in Python youtube.com/watch?v=Qovt6U… #stem #neuralnetworks #activationfunctions #machinelearning #pythonprogramming #datascience

Unlock your model's potential by selecting the ideal activation function! Enhance performance and accuracy with the right choice. #MachineLearning #AI #ActivationFunctions #DeepLearning #DataScience

🧠 In Machine Learning, we often talk about activation functions like ReLU, Sigmoid, or Tanh. But what truly powers learning behind the scenes? Read more: insightai.global/derivative-of-… #AI #ML #ActivationFunctions #Relu #Sigmoid #Tanh

Graphical Representation of #ActivationFunctions #AI #MachineLearning #ANN #CNN #ArtificialIntelligence #ML #DataScience #Data #Database #Python #programming #DeepLearning #DataAnalytics #DataScientist #DATA #coding #newbies #100daysofcoding

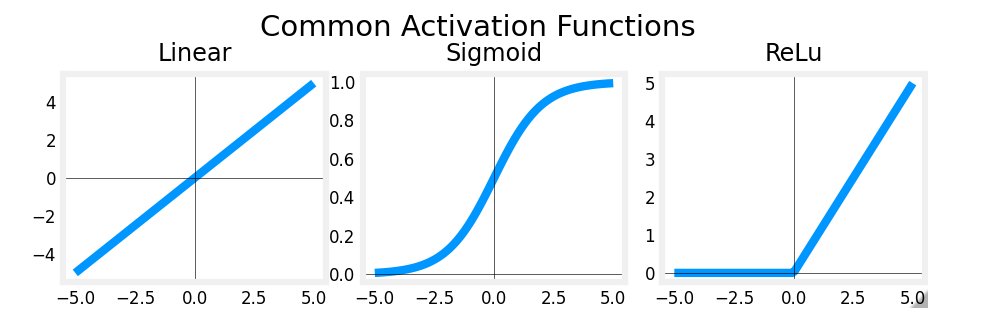

🧠📊 Three of the most commonly used Activation Functions in Neural Networks: Linear, Sigmoid, ReLU. 🚀 Understand how these functions shape AI learning! Follow on LinkedIn: Amit Subhash Chejara 💡💻 #NeuralNetworks #ActivationFunctions #AI

**Sigmoid: The OG gatekeeper.** This S-shaped hero squashes values between 0 & 1, like a dimmer switch for neuron signals. Good for binary choices (think cat vs. dog), but it saturates at the extremes, so gradients can vanish in deep networks. #ActivationFunctions #Sigmoid #MachineLearning

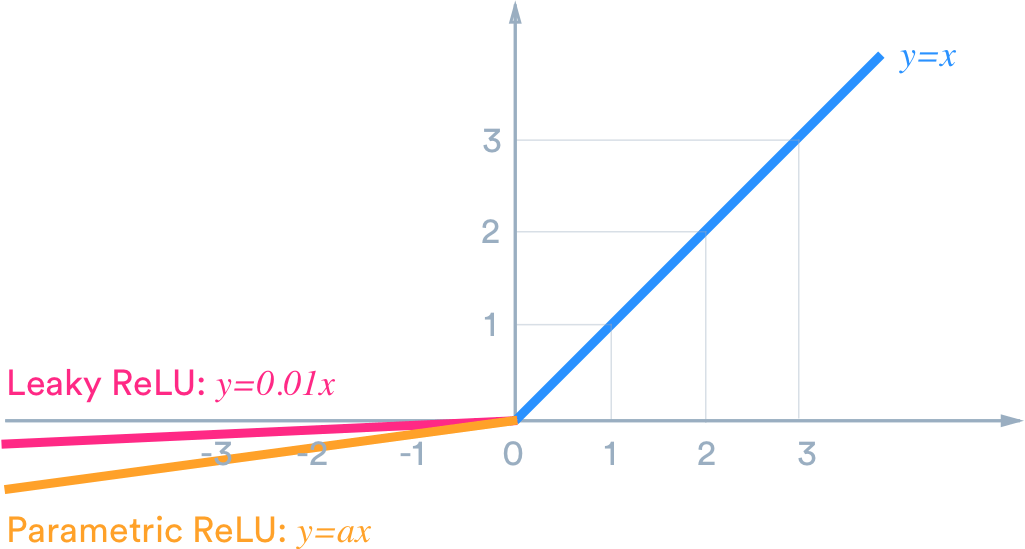

**✨ Leaky ReLU: The forgiving cousin.** Leaky ReLU lets a small, scaled-down signal through for negative inputs instead of zeroing them, so the gradient never vanishes completely and neurons are less likely to die. Similar speed to ReLU, but handles negative values better. Great for training large, complex networks! #ActivationFunctions #LeakyReLU
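A minimal Leaky ReLU sketch in Python. The slope `alpha=0.01` is a commonly used default and is an assumption here, not a value stated in the post; the gradient helper is included only to show why the neuron cannot get permanently stuck.

```python
def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: identity for x > 0, small slope alpha otherwise."""
    return x if x > 0 else alpha * x

def leaky_relu_grad(x, alpha=0.01):
    # The gradient is never exactly zero, so a neuron sitting in the
    # negative region still receives a (small) update signal.
    return 1.0 if x > 0 else alpha

print(leaky_relu(-5.0), leaky_relu_grad(-5.0))
print(leaky_relu(3.0), leaky_relu_grad(3.0))
```

With plain ReLU both values on the first line would be zero, which is the "dead neuron" case.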

ReLU (Rectified Linear Unit) - It's a simple yet powerful activation function that: ⚡ Introduces non-linearity, enabling complex pattern learning. ⚡ Speeds up training with efficient computation. #deeplearning #activationfunctions #neuralnetworks

**⚡️ ReLU: The speedy workhorse.** This function simply keeps positive values & zeroes out negatives. ⚡ Blazing fast & loves deep networks, but a neuron can "die" (always output zero, with zero gradient) if its inputs stay negative. Great for image recognition & natural language processing! #ActivationFunctions #ReLU
