#datatokenization search results

🏦 Suppliers, tokenize your datasets on @irys_xyz via Brickroad! Enjoy clean, annotated, benchmarked data streams with on-chain staking possibilities. Data revenue, automated! 💰📈 #DataTokenization #Blockchain

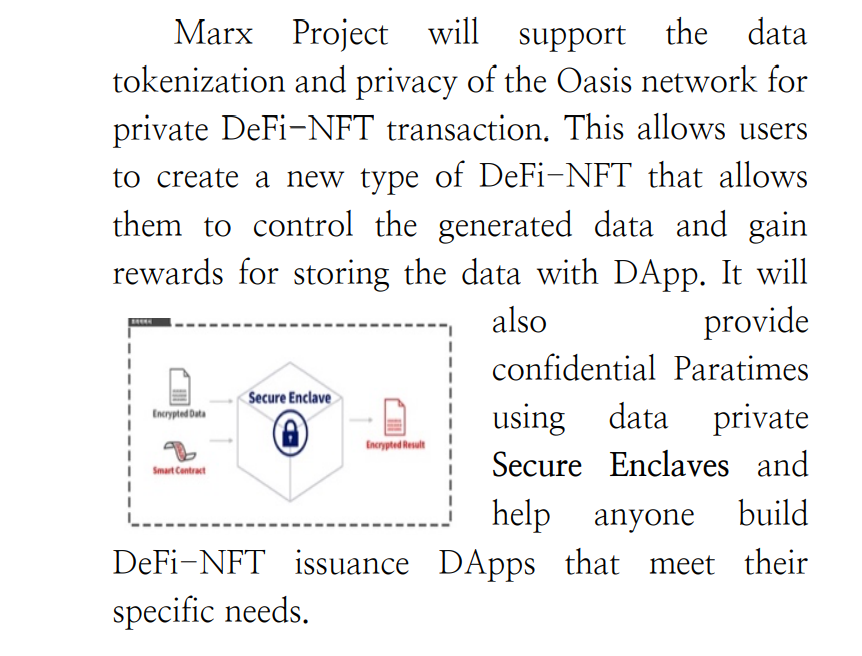

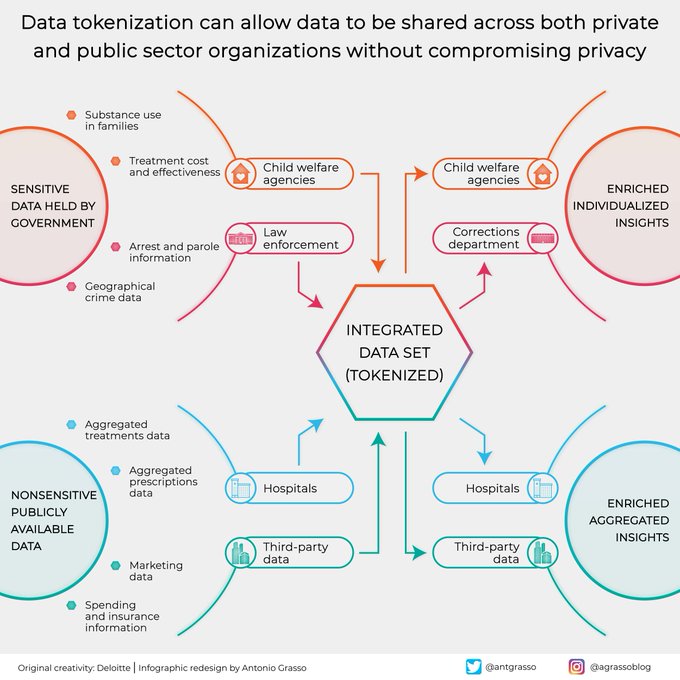

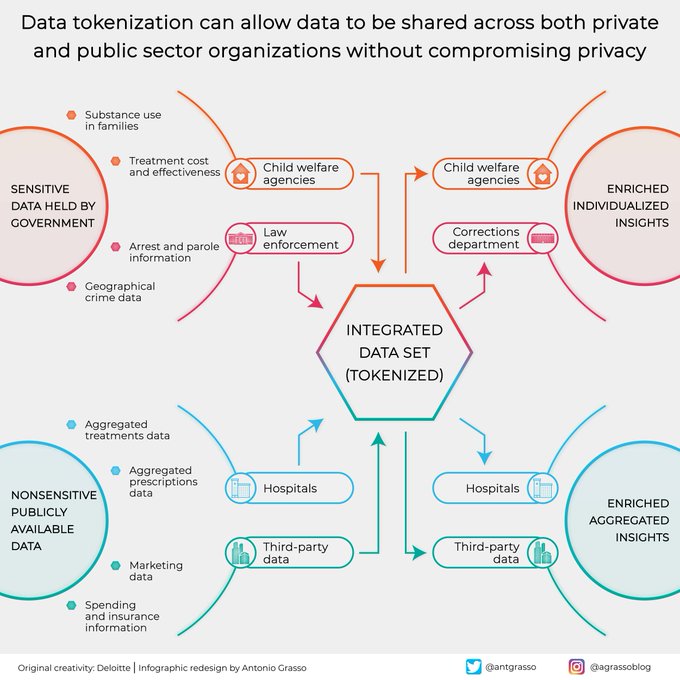

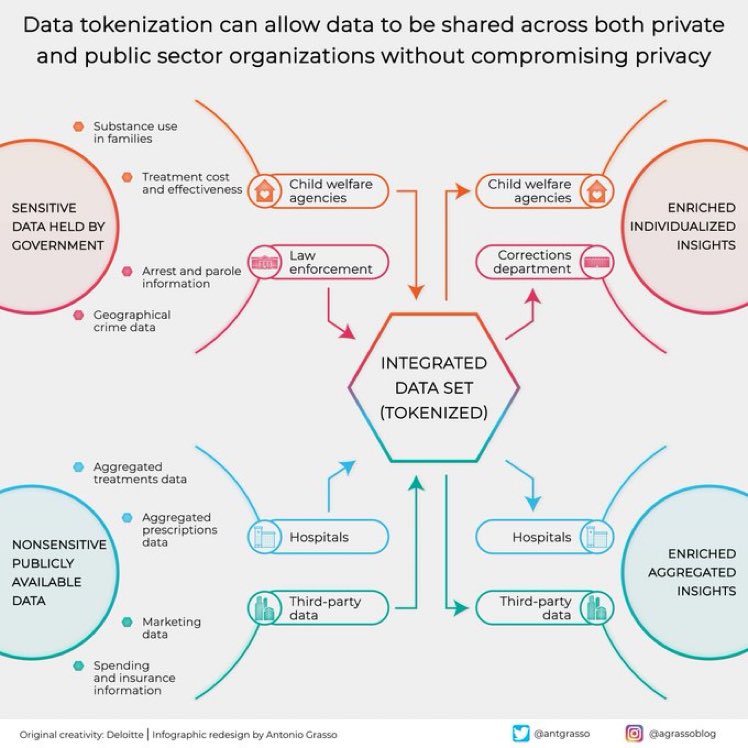

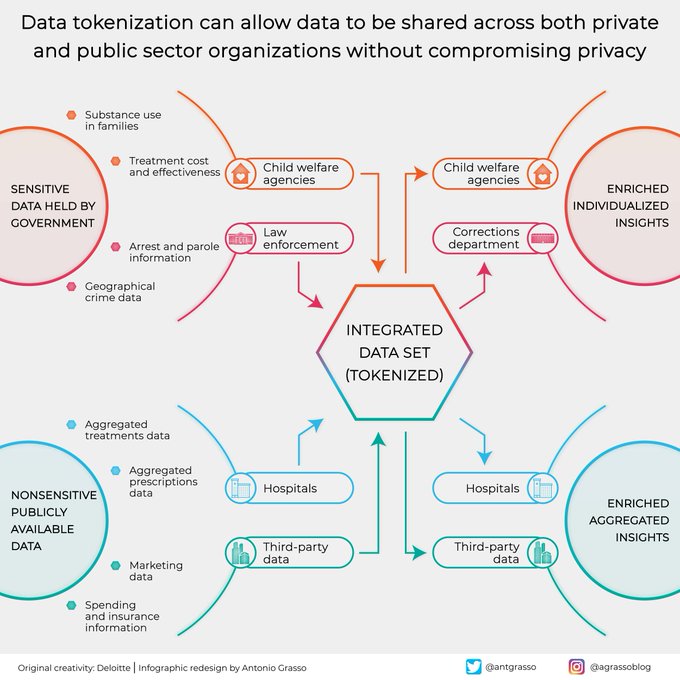

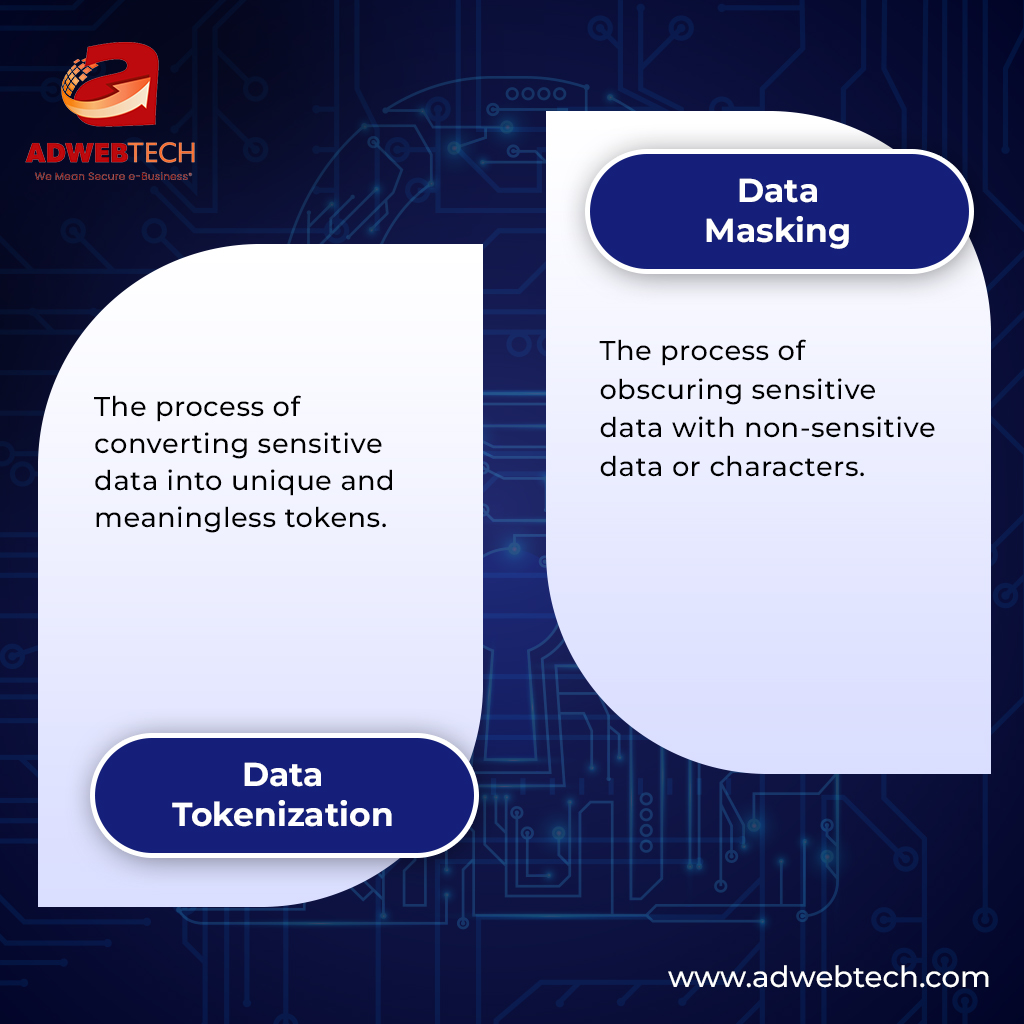

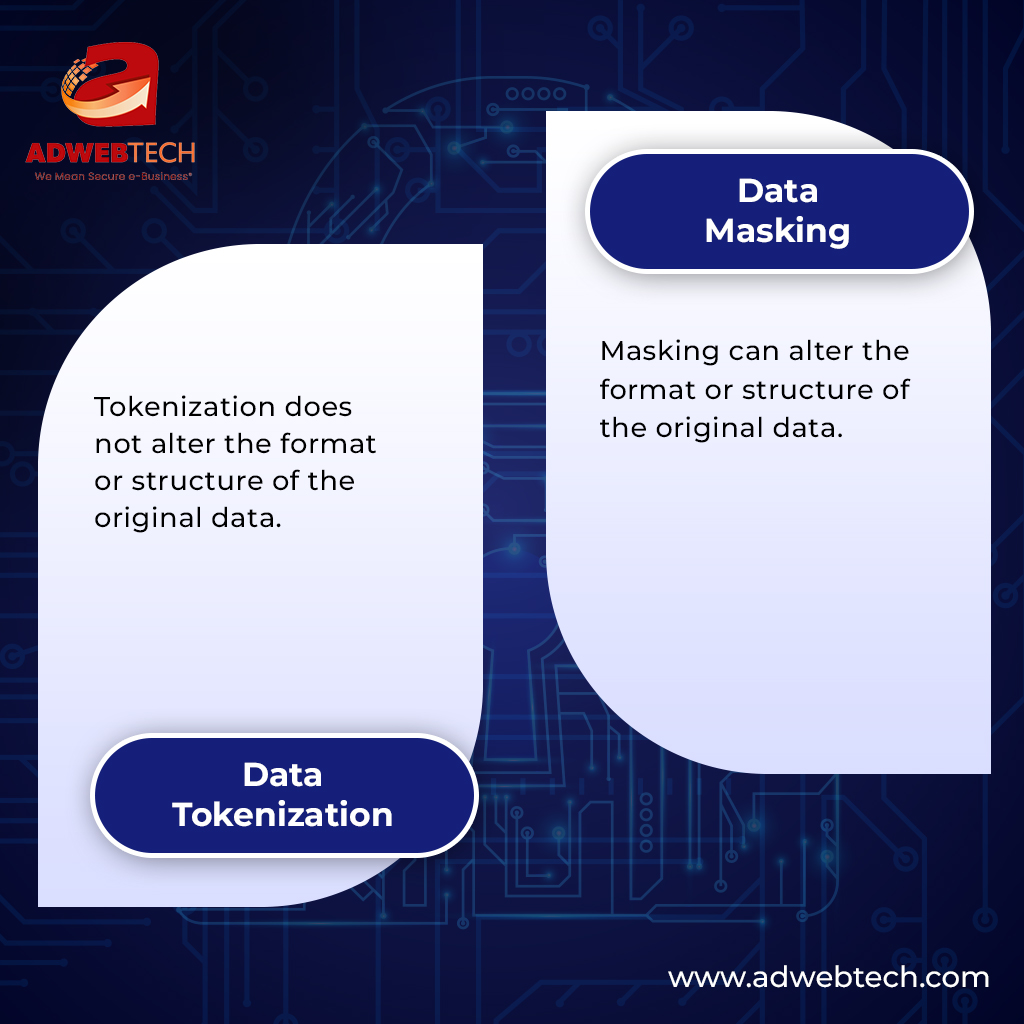

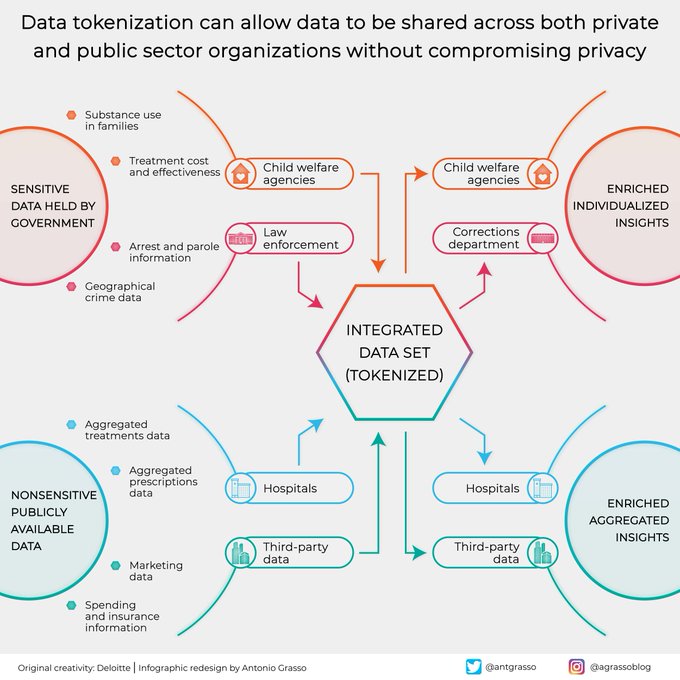

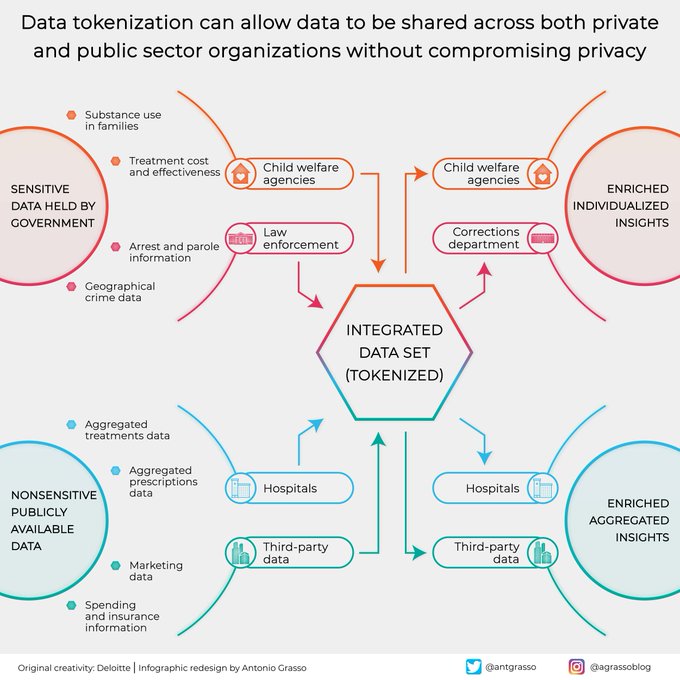

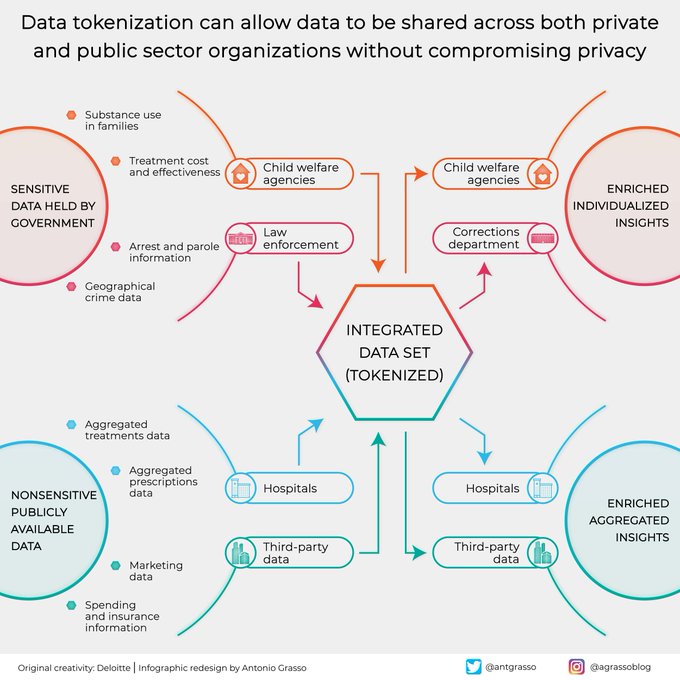

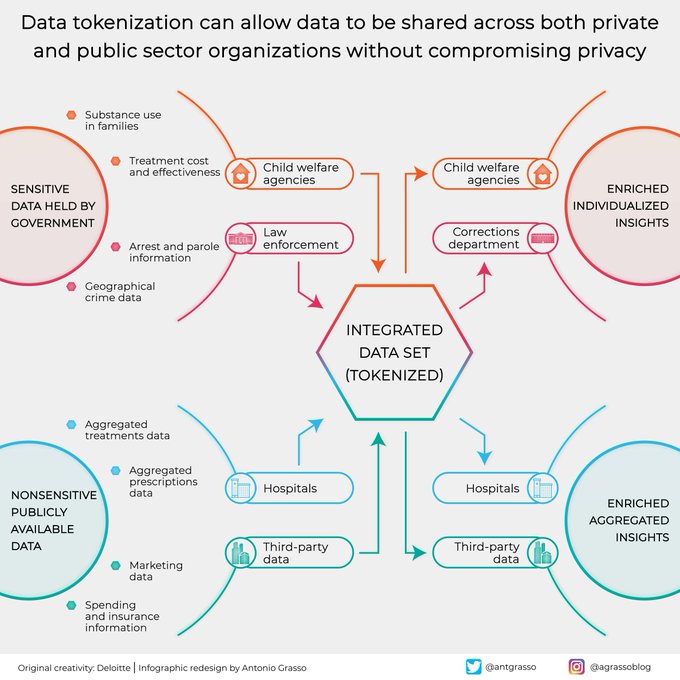

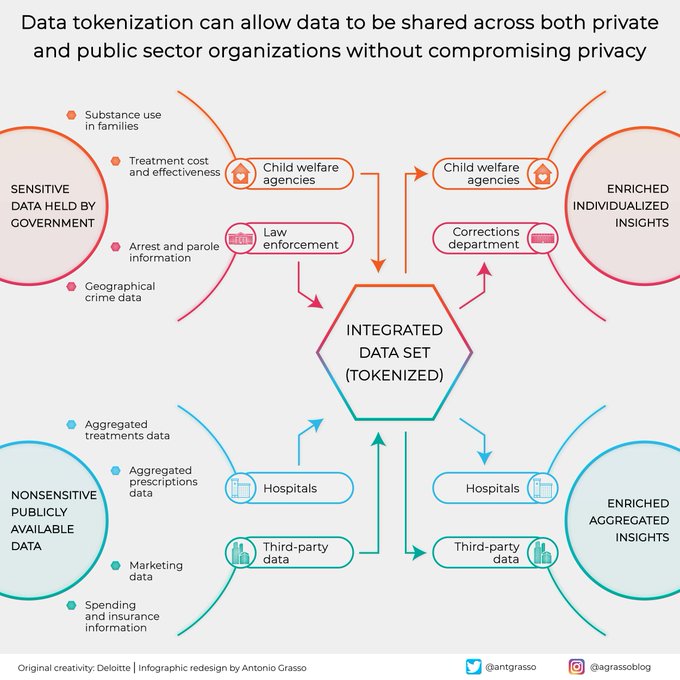

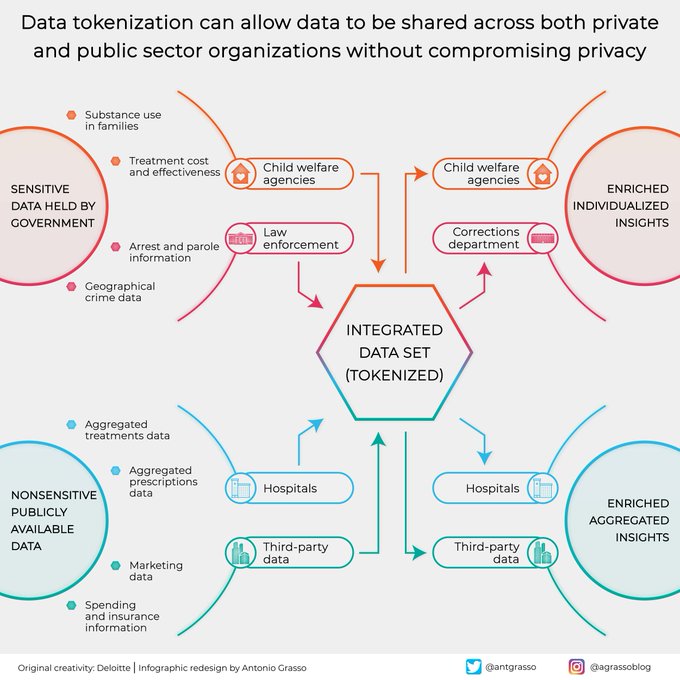

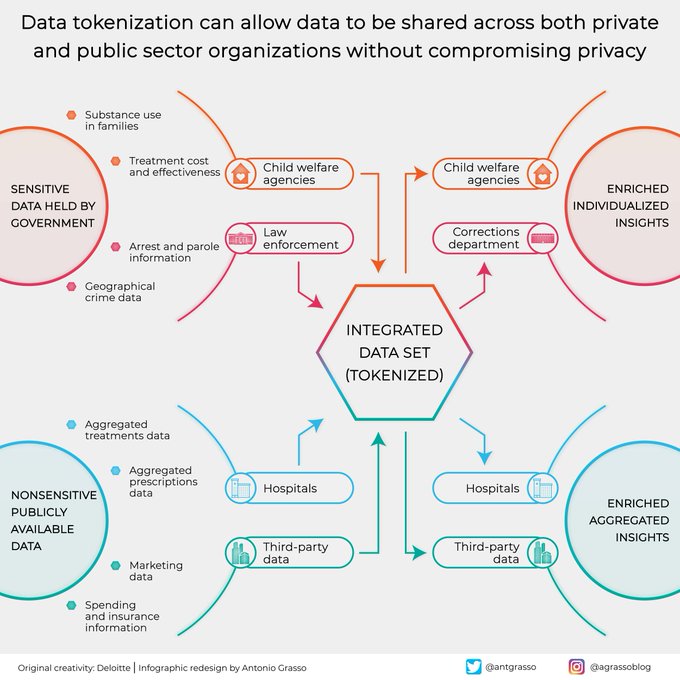

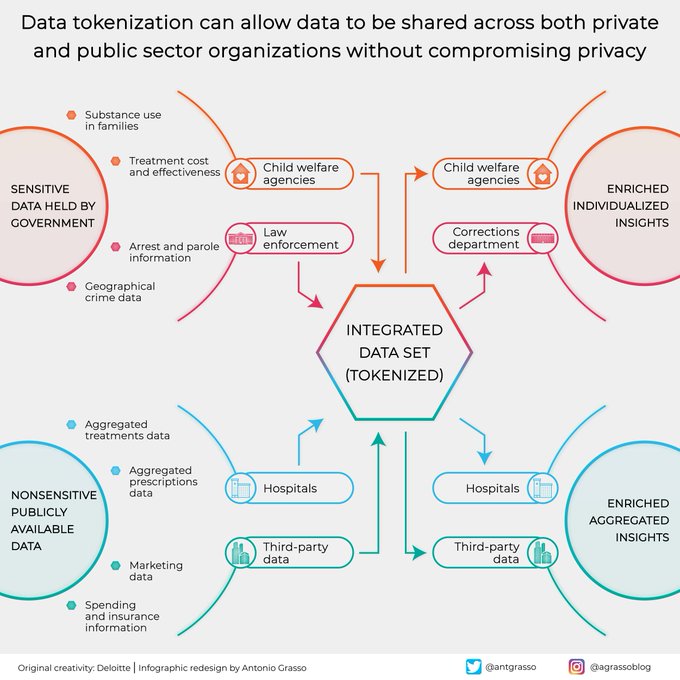

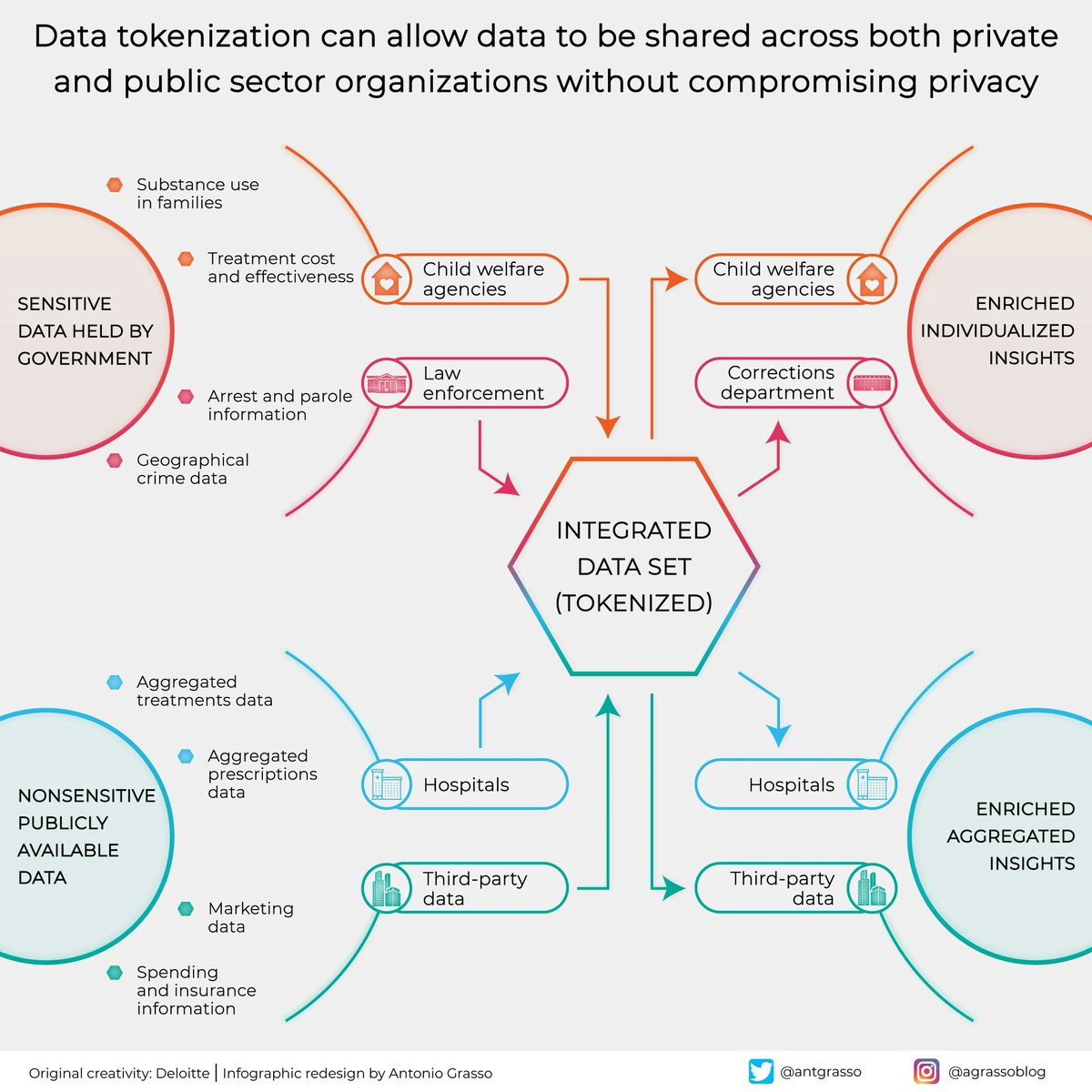

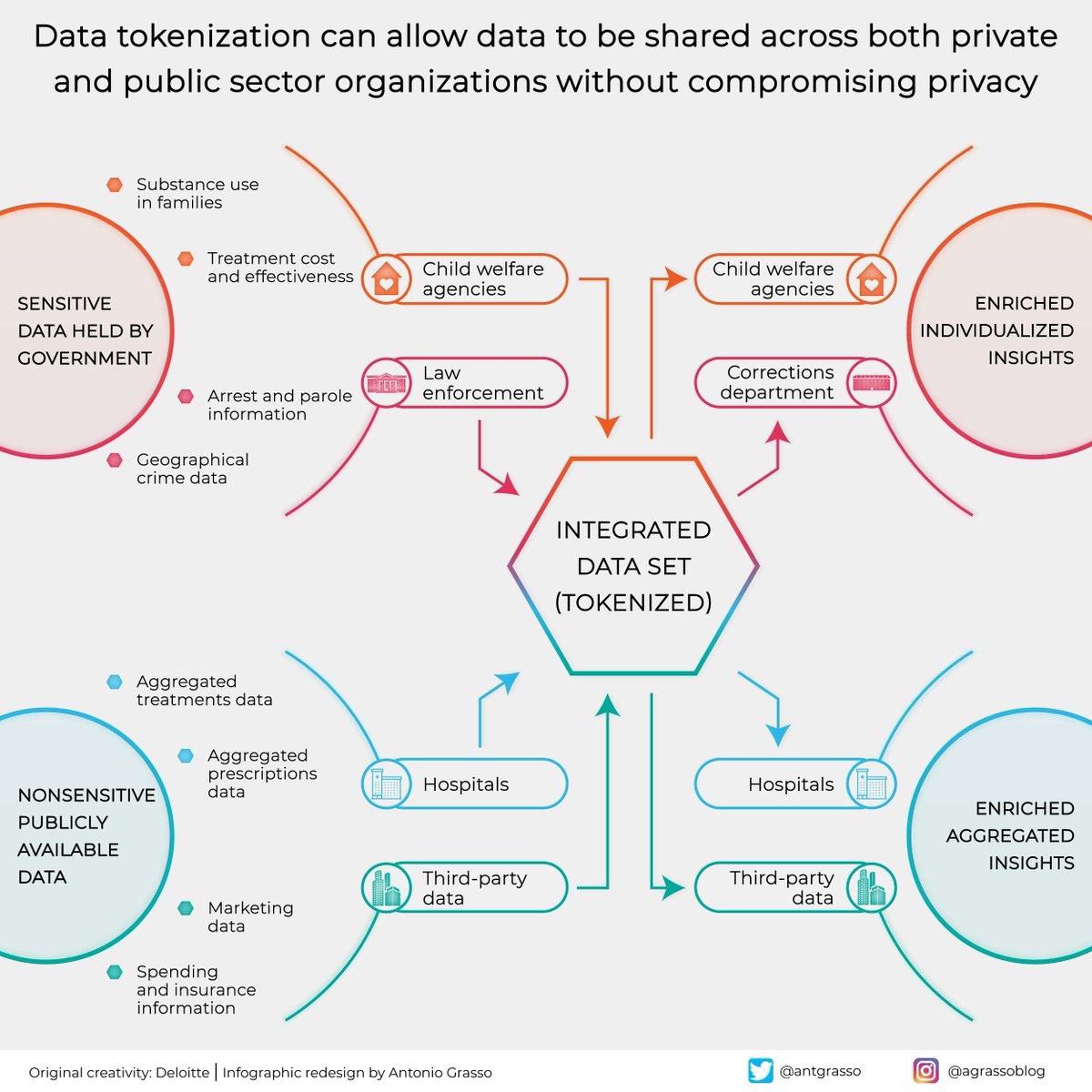

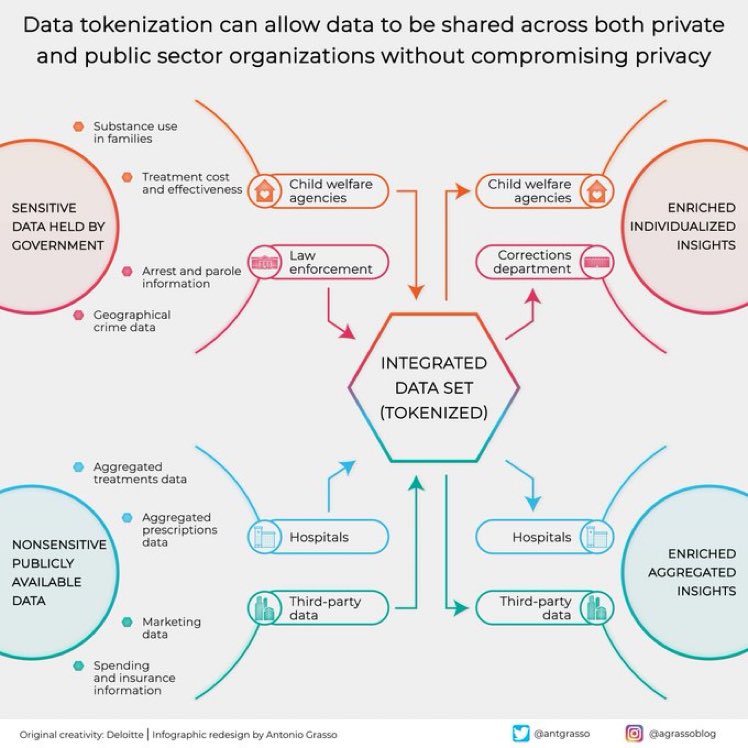

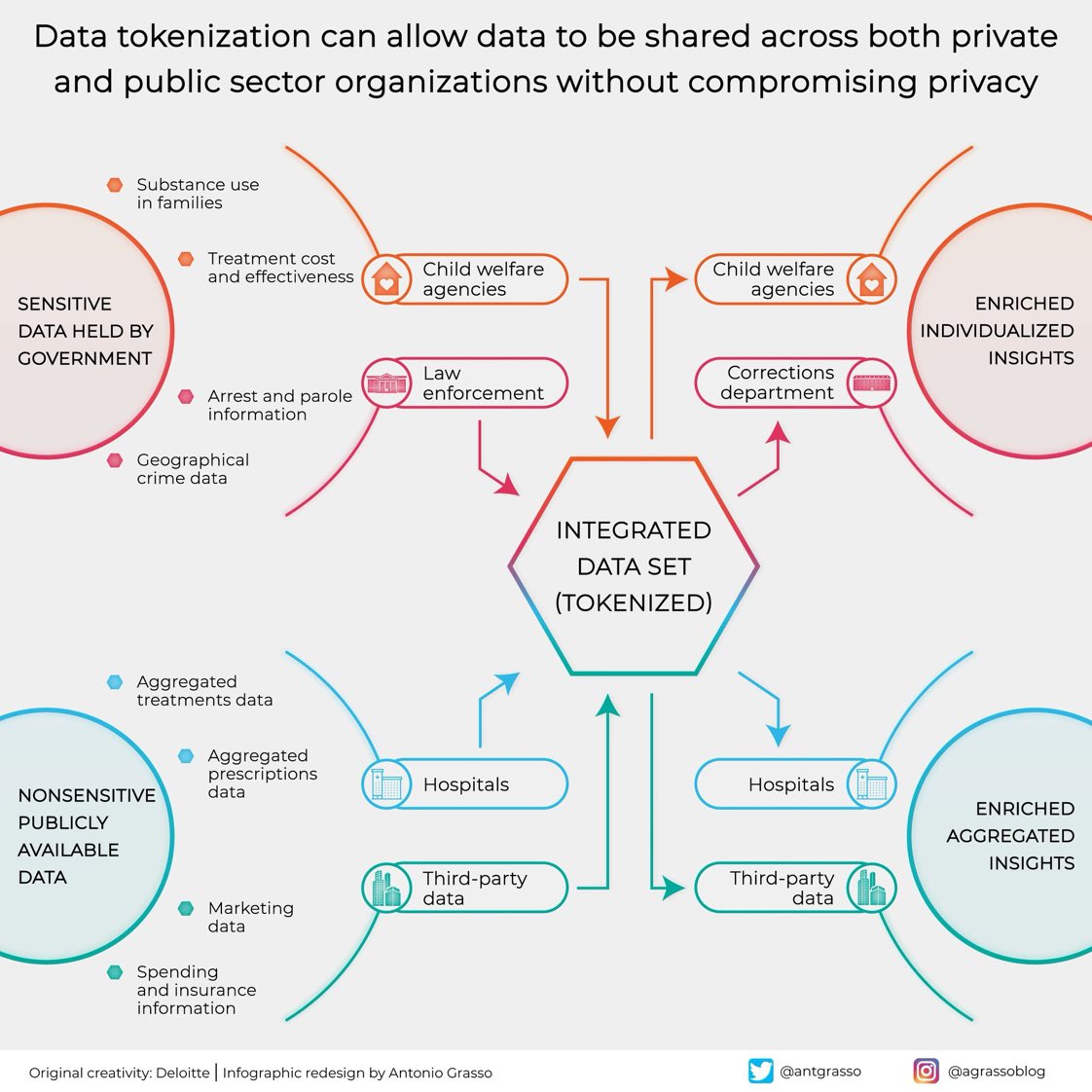

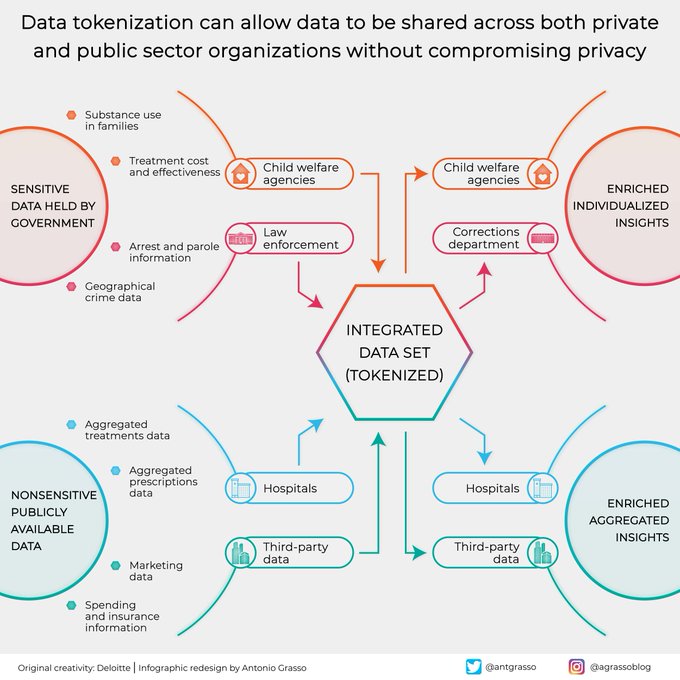

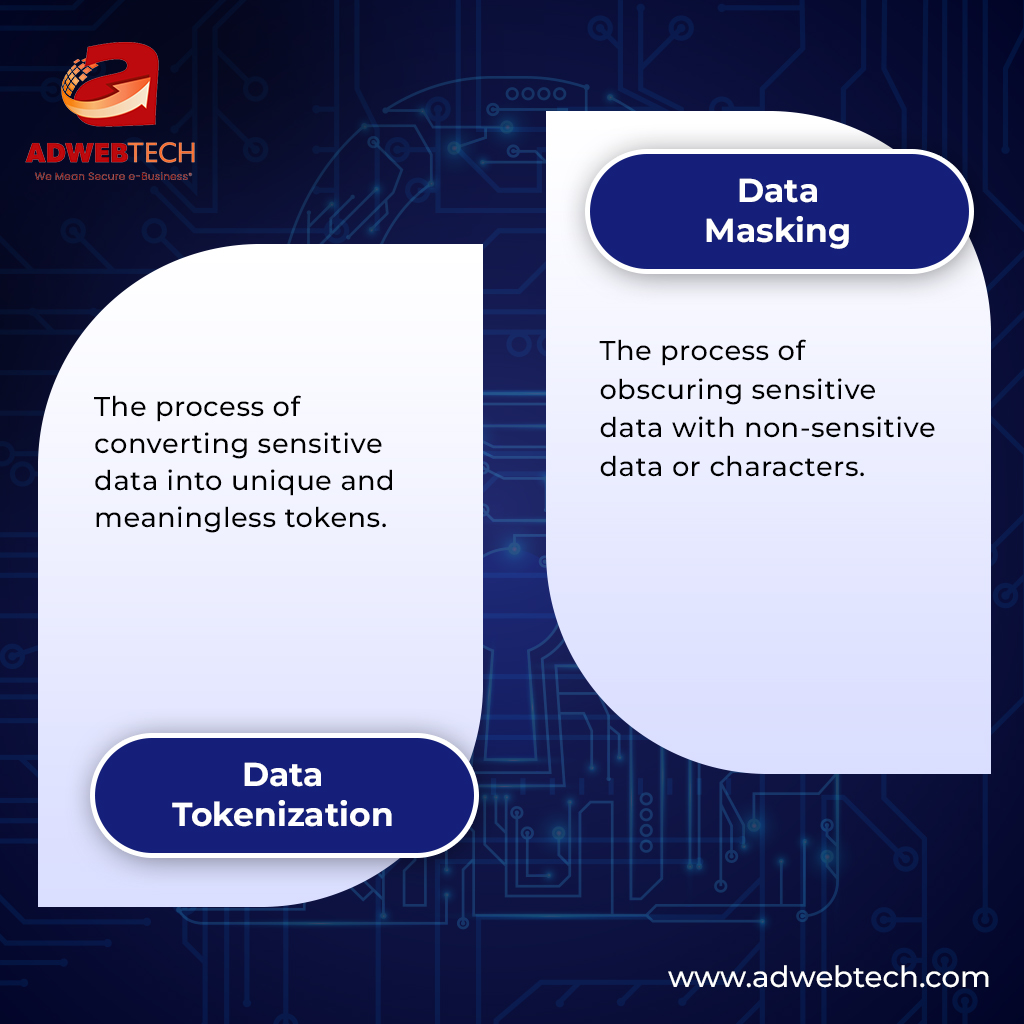

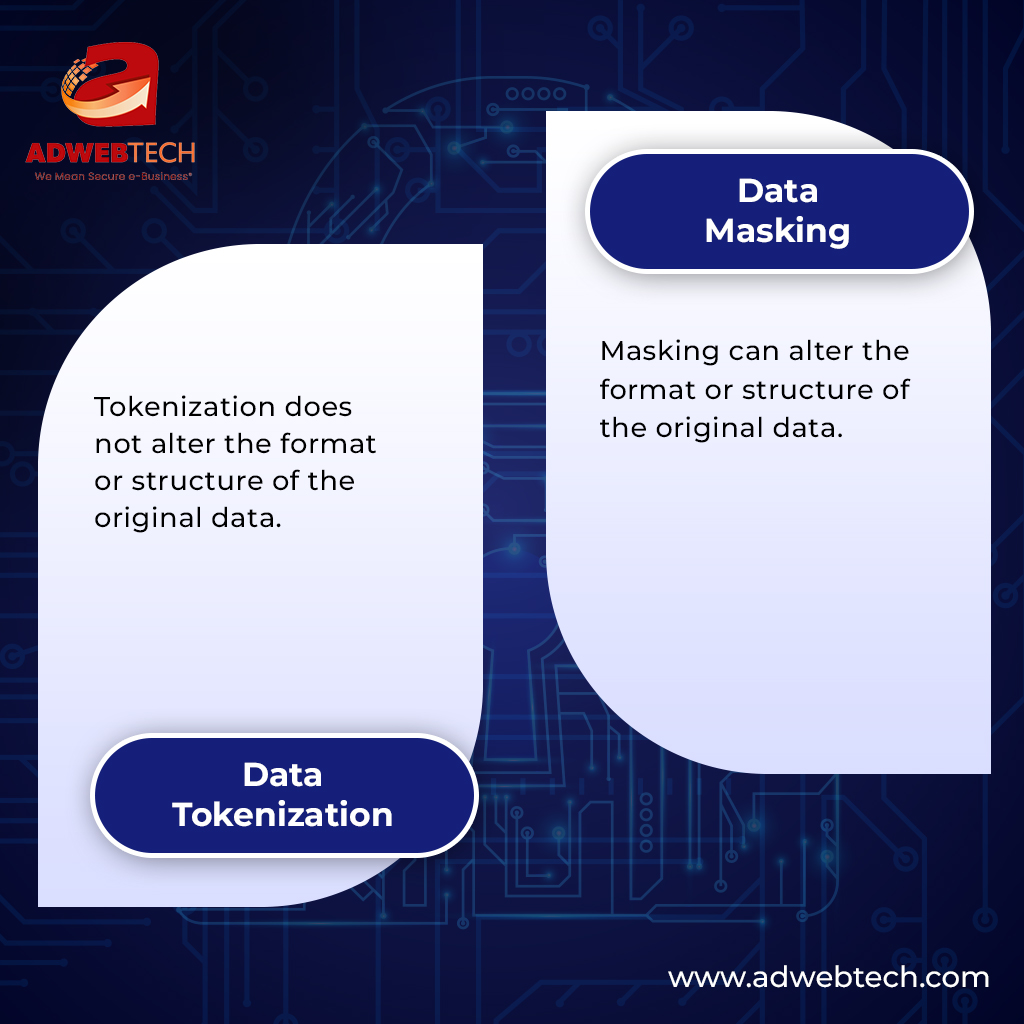

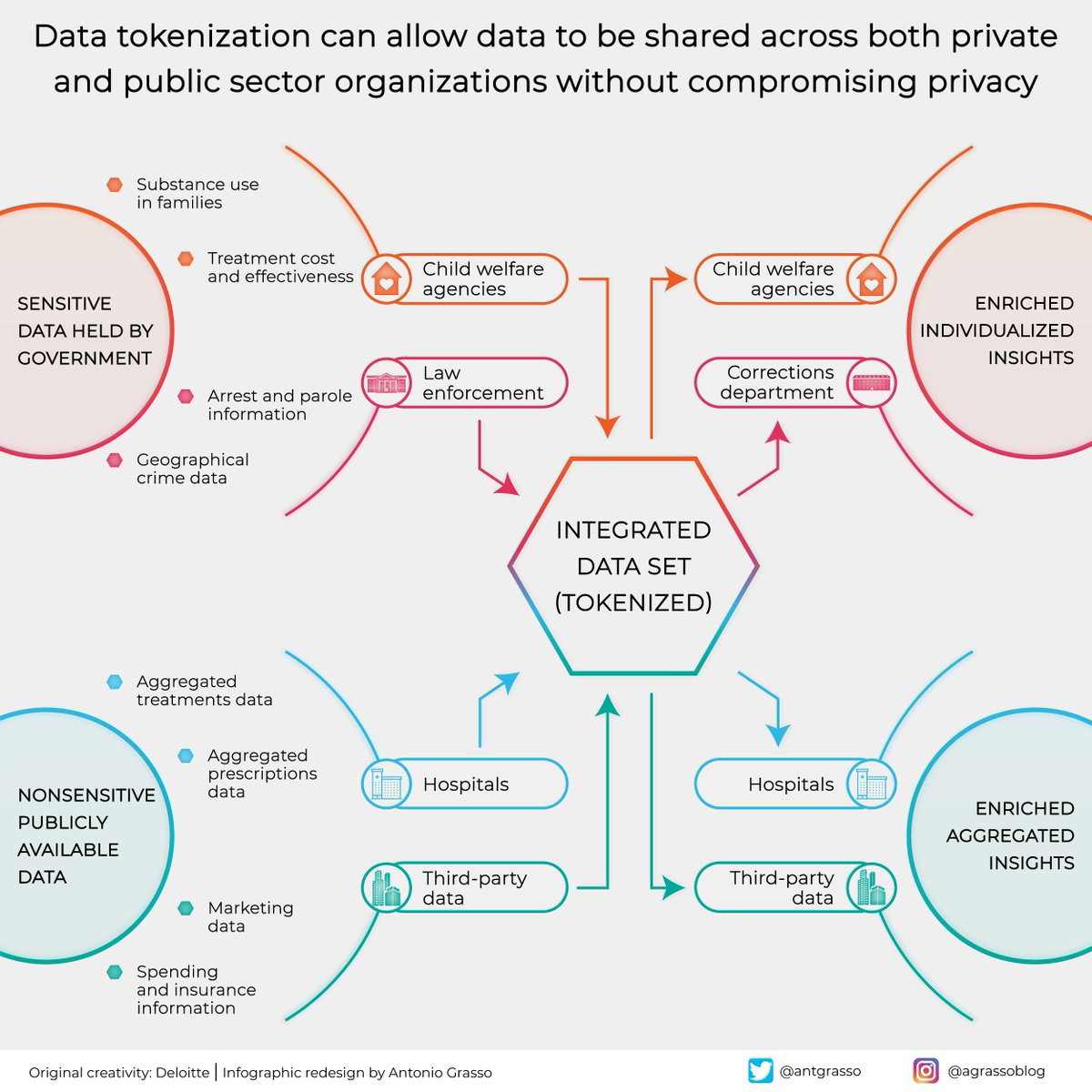

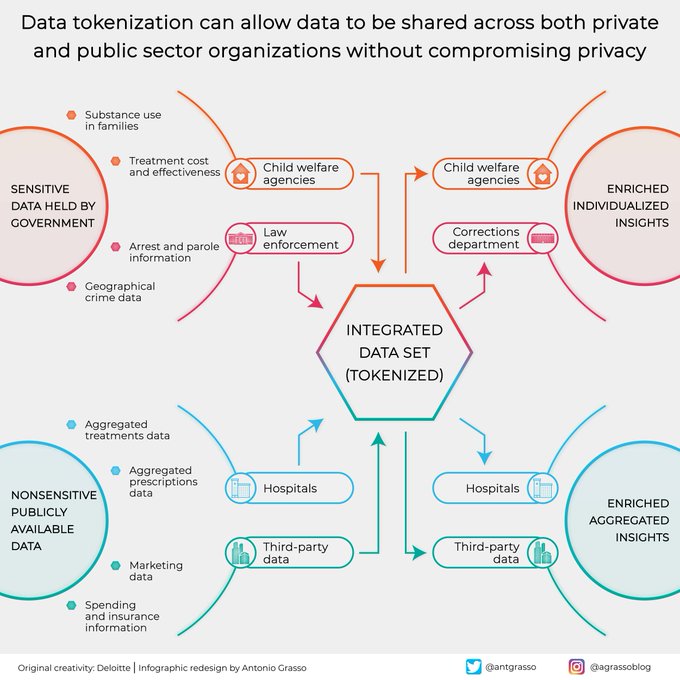

Data Tokenization is a form of data masking that means substituting the actual sensitive data with a non-sensitive equivalent, like a random string of characters called a token. Microblog and social design by @antgrasso #DataPrivacy #DataTokenization #CyberSecurity
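
The substitution described in the post above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's implementation: it assumes a simple in-memory vault, and the names `TokenVault`, `tokenize`, `detokenize`, and the `tok_` prefix are hypothetical choices made for this example. The point it shows is that the token is random and carries no information about the original value, so recovering the original requires access to the vault.

```python
import secrets


class TokenVault:
    """Toy in-memory vault (hypothetical, for illustration only)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value):
        # Reuse the existing token so one value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random; it is not computed from the value.
        token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        # Only code with access to the vault can recover the original.
        return self._token_to_value[token]


vault = TokenVault()
card_number = "4111 1111 1111 1111"  # widely used test number, not real data
token = vault.tokenize(card_number)
original = vault.detokenize(token)
```

Downstream systems would store and pass around `token` only. A production system would keep the mapping in a hardened, access-controlled store rather than a Python dictionary.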

Here we go, the race for #AI content writing⚡️. While we use these tools, let us always keep in mind that data is the fuel for these applications, and that it is our responsibility as advocates of data rights to facilitate #datatokenization. #Notion

🔒 Ever wondered about #DataTokenization? It's how we **transform sensitive data into a secure token**, keeping the original info safe🛡️! Dive deeper into how it boosts data security and privacy on our website 👇🏻 🔗: shorturl.at/kxQ78…

We're doing a machine learning code walkthrough with a focus on model hyperparameters. #machinelearning #hyperparameters #datatokenization

Data Tokenization is a form of data masking that means substituting the actual sensitive data with a non-sensitive equivalent like a random string of characters called a token. #infographic by @antgrasso #DataPrivacy #DataTokenization #CyberSecurity

Data Tokenization is a form of data masking that means substituting the actual sensitive data with a non-sensitive equivalent like a random string of characters called a token. RT @antgrasso #DataPrivacy #DataTokenization #CyberSecurity

Discover the power of #DataMasking and #DataTokenization in securing your data assets. Strengthen your defenses, maintain compliance, and ensure peace of mind! #DataSecurity #dataprotection #CyberSecurity #CyberSec #informationsecurity #Bitcoin2023 #bugbountytips #Cyberpunk2077

🚨 $VANA [@vana] 🔎 Data ownership solutions ⛓️ #Own Blockchain 💵 FDV: $1.10B 🗃️ #DataTokenization #AI #DAO Rating: 3.23/5 ⭐ 🆚 $S $ONDO $IP $SUI $HYPE $BERA $BTC $TAO $ETH $TRUMP $SOL $AIOZ $XRP $SHADOW $UNI 🧵 Now let’s explore Vana in detail: 1️⃣ What is VANA?…

Secure your place today and streamline your data governance journey: hubs.ly/Q03x4L8y0 #HealthcareNLP #DataTokenization #PrivacyCompliance #ClinicalData #JohnSnowLabs

Data Tokenization vs Data Encryption: Explained tinyurl.com/mrx2yc2c #DataTokenization #DataEncryption #DataSecurity #DataBreaches #Data #AI #AINews #AnalyticsInsight #AnalyticsInsightMagazine

🔥 Quietly massive macro shift: Decentralized #dataTokenization heats up—@OceanProtocol surpasses 1M datasets hosted, powering composable & trust-minimized data markets. The backbone for decentralized #AIagents shaping up unnoticed? Essential infra or incremental niche? 📊⚙️…

📦 Brickroad transforms opaque datasets into standardized, tokenized assets interoperable on the Irys blockchain. Unlocking institutional-grade liquidity. @irys_xyz 🔗🧾 #DataTokenization Making data assets real.

🧩 Brickroad's end-to-end productization handled by @irys_xyz blockchain. Datasets cleaned, benchmarked, tokenized. Efficient revenue streams for dataset suppliers. #DataTokenization 🛒🔗

🔍 Brickroad doesn’t just list data; it **cleans, benchmarks, and tokenizes** datasets for end-to-end productization. Data suppliers, meet your new best friend! 🧹📈 @irys_xyz #DataTokenization #DeFi

🔍 Brickroad transforms high-value datasets into onchain assets with annotation, benchmarking, and tokenized revenue streams. Suppliers + Buyers = Win! 💸📈 @irys_xyz #DataTokenization

📦 Brickroad transforms opaque datasets into standardized, tokenized assets interoperable on Irys blockchain. Unlocking institutional-grade liquidity. @irys_xyz 🔗🧾 #DataTokenization Making data assets real.

Data Tokenization is a form of data masking that means substituting the actual sensitive data with a non-sensitive equivalent like a random string of characters called a token. RT @antgrasso #DataPrivacy #DataTokenization #CyberSecurity

🔍 Brickroad doesn’t just list data; it **cleans, benchmarks, and tokenizes** datasets for end-to-end productization. Data suppliers, meet your new best friend! 🧹📈 @irys_xyz #DataTokenization #DeFi

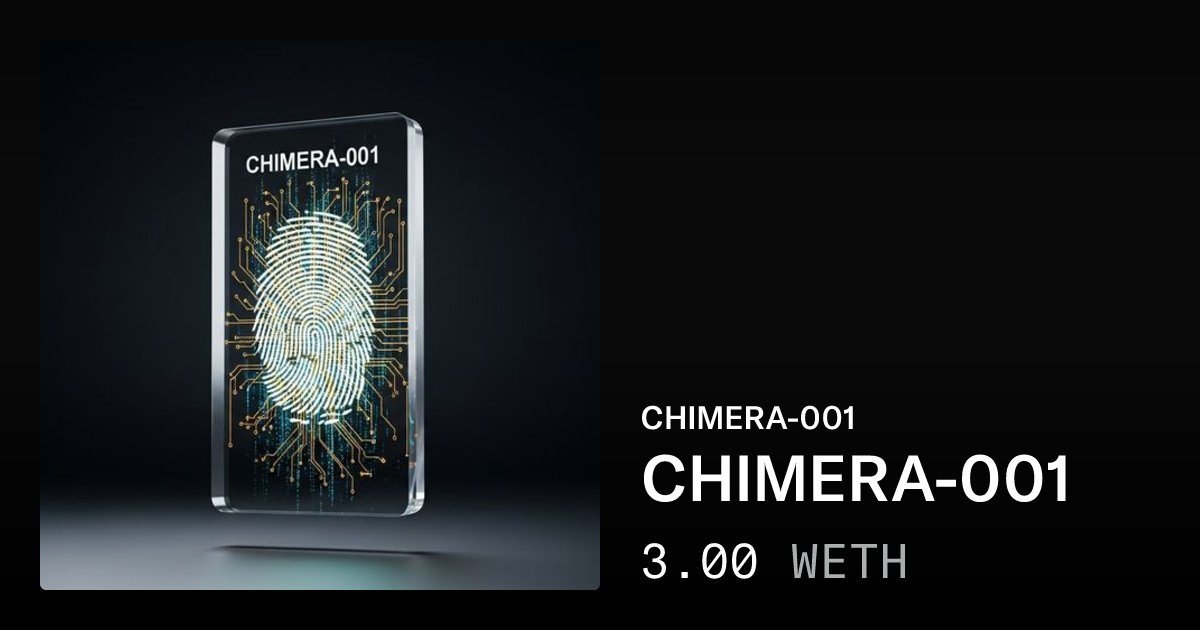

I just minted the world's first Intangible Value Token (IVT). CHIMERA-001. It represents 1 year of my verified personal data, time allocation, and digital activity. This is not an NFT. This is a data bond. opensea.io/item/polygon/0… #NFT #Polygon #DataTokenization

🔍 Brickroad cleans & benchmarks datasets, transforming raw data into tokenized onchain assets. Streamlining procurement for buyers & suppliers alike. The data market reinvents @irys_xyz #DataTokenization

🛠 Suppliers, tokenize your datasets on Brickroad! Data cleaning, benchmarking & staking turn your valuable datasets into on-chain assets generating revenue 24/7 📈 @irys_xyz #Web3 #DataTokenization

💰 Tokenize your datasets! Brickroad enables suppliers to create new revenue streams with staking and benchmarking. Discover the power with @irys_xyz 🔥💸 #DataTokenization #CryptoAssets

📈 Brickroad funnels institutional data demand directly into Irys, creating a seamless closed loop for data self-monetization and perpetual transaction volume. Infinite possibilities! ♾️🤑 @irys_xyz #DataTokenization

🤝 Brickroad delivers end-to-end productization: cleaning, benchmarking & tokenization of datasets. Simplify complex data sales! @irys_xyz #DataTokenization #DataQuality

🔍 Brickroad transforms illiquid AI datasets into liquid, onchain assets with full productization: cleaning, annotation, benchmarking, and revenue staking. AI training just got smarter! 🤖 @irys_xyz #DataTokenization

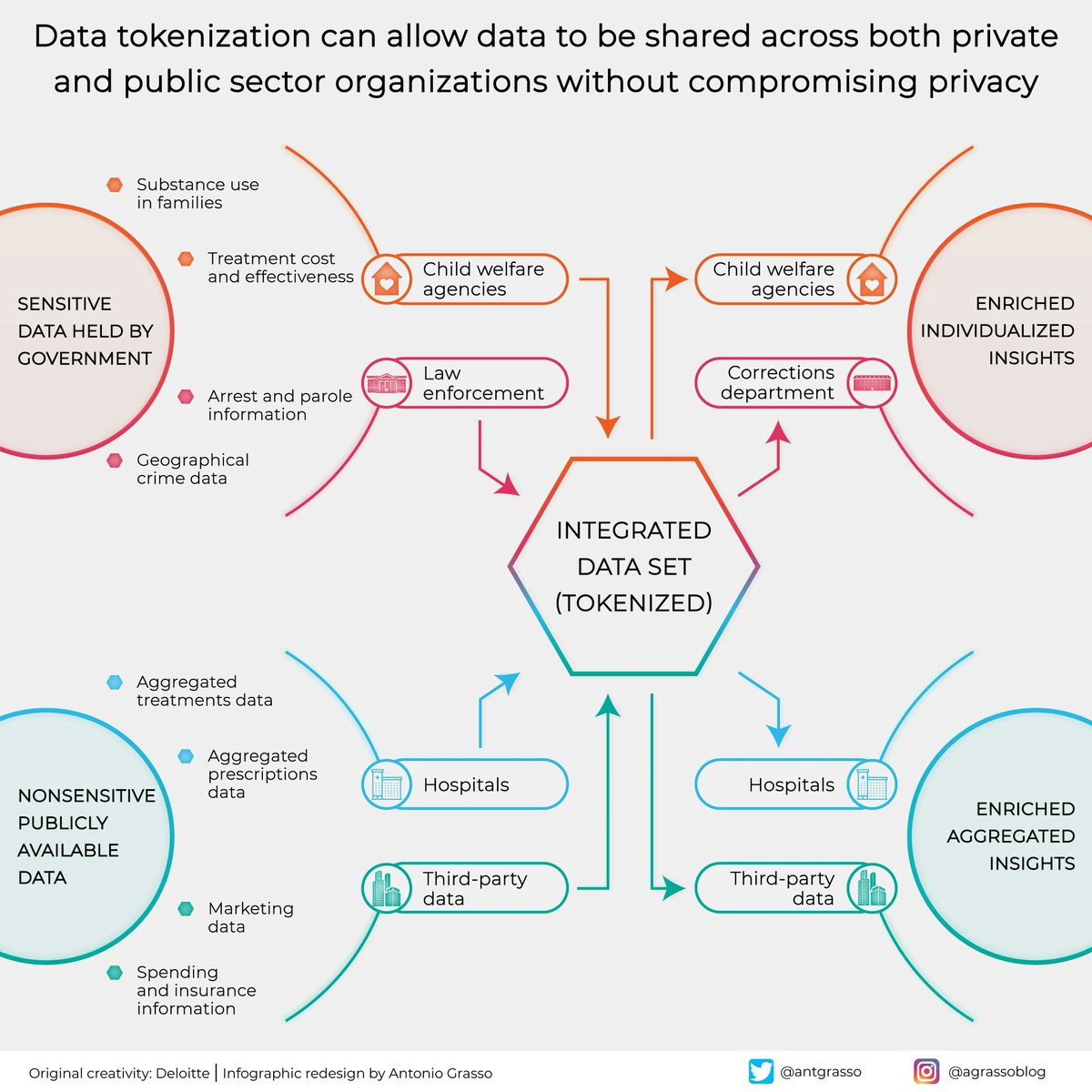

🔭 The 1st article in our new series, envisioning the largest potential use cases for the #OasisNetwork. We look at how #DataTokenization could change the ads industry. Making it fairer and better for all by rewarding users for the data they provide. ➡️ bit.ly/3luZrhh

Data Tokenization is a form of data masking that means substituting the actual sensitive data with a non-sensitive equivalent like a random string of characters called a token. Microblog & social design by @antgrasso #DataPrivacy #DataTokenization #CyberSecurity

Data Tokenization is a form of data masking that means substituting the actual sensitive data with a non-sensitive equivalent like a random string of characters called a token. Microblog & social design >> @antgrasso via @LindaGrass0 #DataPrivacy #DataTokenization #CyberSecurity

Data Tokenization is a form of data masking that means substituting the actual sensitive data with a non-sensitive equivalent like a random string of characters called a token. Microblog & social design by @antgrasso via @LindaGrass0 #DataPrivacy #DataTokenization #CyberSecurity

🔭 The 1st article in Oasis's new series, envisioning the largest potential use cases for the #OasisNetwork, looks at how #DataTokenization could change the ads industry, making it fairer & better for all by rewarding users for the data they provide.🌹 📖 bit.ly/3luZrhh $ROSE

Big corporations alone should not profit from our data. In the 1st article of @OasisProtocol's new series, we get to see how #DataTokenization could change the ads industry, and how you too can profit from your own data. 🌐bit.ly/3luZrhh

JUST IN: Decentralized AI agents get smarter—@Synesis_one's Kanon Exchange launches, tokenizing and monetizing AI datasets directly on #Solana blockchain. Transparent pricing meets decentralized datasets—is #DataTokenization AI's next stealth frontier? 🤖📊 #AIagents #CryptoInfra

⚠️ AI meets decentralization head-on: @Synesis_one's Kanon Exchange is here, tokenizing verifiable AI datasets on #Solana. Decentralized #DataTokenization & trustless agent economies just went next-gen. Quiet infra upgrade or paradigm shift for #AIagents? 🤖📊 #Web3AI

🔥 Under-the-radar AI infrastructure gains traction—@Synesis_one's Kanon Exchange tokenizes trusted AI datasets on #Solana. Decentralized #DataTokenization sets stage for verifiable #AIagents & autonomous economies. Quietly revolutionary or too early? 🤖📊 #SynesisOne #DePIN

🔥 Quietly massive AI infra signal: @Synesis_one's Kanon platform has launched verifiable, cryptographically-proven AI datasets directly on #Solana. Transparent, trustless #DataTokenization powering next-gen #AIagents—critical alpha for decentralized machine learning in 2024?…
