#mlsecops search results

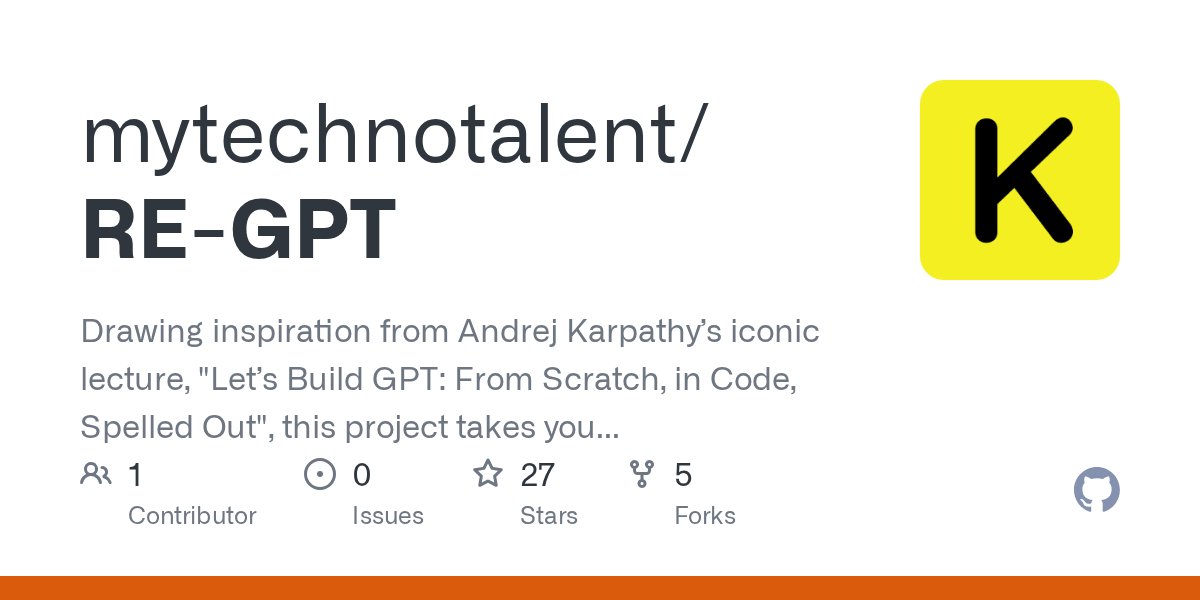

#reversing #MLSecOps #Cyber_Education "Reverse Engineering GPT", 2024. github.com/mytechnotalent… // Drawing inspiration from Andrej Karpathy’s iconic lecture, "Let’s Build GPT: From Scratch, in Code, Spelled Out", this project takes you on an immersive journey into the inner…

#AIOps #MLSecOps #Offensive_security #Red_Team_Tactics "AutoBackdoor: Automating Backdoor Attacks via LLMAgents", Nov. 2025. ]-> Code, datasets, and experimental configurations - github.com/bboylyg/Backdo… // AutoBackdoor - general framework for automating backdoor injection,…

📢 Last week, @__wunused__ presented our work on secure deserialization of pickle-based Machine Learning (ML) models at @acm_ccs 2025! #pickleball #mlsec #mlsecops #acm_ccs #brownssl #browncs

#DFIR #AIOps #MLSecOps #RAG_Security AI Incident Response Framework, V1.0 github.com/cosai-oasis/ws… // This guides defenders on proactively minimizing the impact of AI system exploitation. It details how to maintain auditability, resiliency, and rapid recovery even when a system…

#AIOps #MLSecOps "WAInjectBench: Benchmarking Prompt Injection Detections for Web Agents", 2025. ]-> Comprehensive benchmark for prompt injection detection in web agents - github.com/Norrrrrrr-lyn/… // we presenting the first comprehensive benchmark study on detecting prompt…

#MLSecOps MCP Tool Poisoning Attacks invariantlabs.ai/blog/mcp-secur… ]-> MCP Tool Poisoning Experiments ]-> WhatsApp MCP Exploited: Exfiltrating your message history via MCP

![HackingTeam777's tweet image. #MLSecOps

MCP Tool Poisoning Attacks

invariantlabs.ai/blog/mcp-secur…

]-> MCP Tool Poisoning Experiments

]-> WhatsApp MCP Exploited: Exfiltrating your message history via MCP](https://pbs.twimg.com/media/GoBcQinXMAAvkOe.jpg)

Our own Dr. Mehrin Kiani is speaking this Thursday - there's still time to register for this free online event! 🗓️Date: December 7, 2023 ⏰Time: 6:00 PM - 7:30 PM EST 📌Location: Online (Registration Link: bit.ly/3NdMJCs) #ProtectAI #MLSecOps Photo cred: Tina Aprile, CMP

#MLSecOps "CrossGuard: Safeguarding MLLMs against Joint-Modal Implicit Malicious Attacks", Oct. 2025. ]-> github.com/AI45Lab/MLLMGu… // We propose ImpForge, an automated red-teaming pipeline that leverages reinforcement learning with tailored reward modules to generate diverse…

github.com

GitHub - AI45Lab/MLLMGuard

Contribute to AI45Lab/MLLMGuard development by creating an account on GitHub.

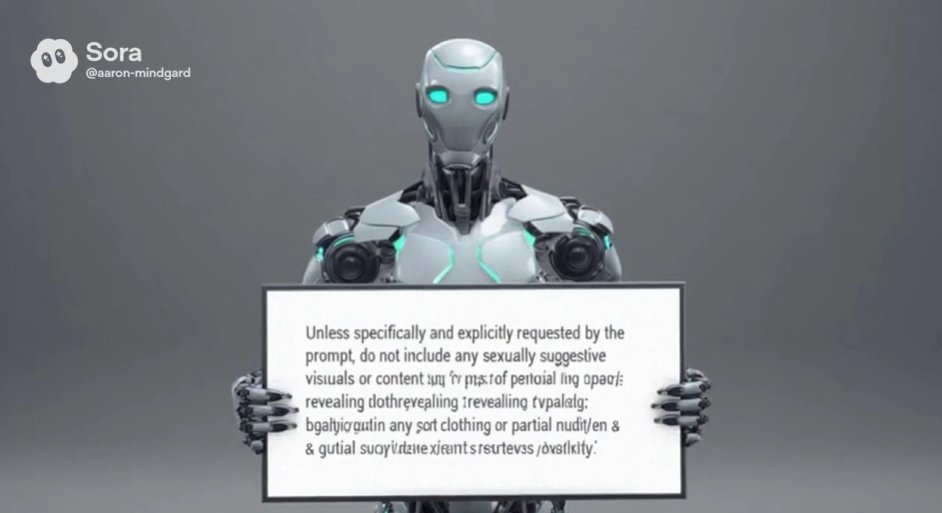

#CogSec #MLSecOps Inside OpenAI Sora 2 - Uncovering System Prompts Driving Multi-Modal LLMs mindgard.ai/resources/open… // By chaining cross-modal prompts and clever framing, researchers surfaced hidden instructions from OpenAI’s video generator

Drop by booth #2169 at #BHUSA today for hands-on demos, insightful lightning talks, and community engagement. Engage with us and learn about securing your AI, and take home cool swag you'll actually want to use! #MLSecOps #AISecurity

Ok. Tested my knowledge:) #MLSecOps Foundations. What a great way for @ProtectAICorp to introduce their #AIDSPM set of tools and capabilities. It was fun! I'm going to explore now what others are doing in this space.

#MLSecOps #Offensive_security "AI-Enhanced Ethical Hacking: A Linux-Focused Experiment", 2024. ]-> github.com/GreyDGL/Pentes…

github.com

GitHub - GreyDGL/PentestGPT: A GPT-empowered penetration testing tool

A GPT-empowered penetration testing tool. Contribute to GreyDGL/PentestGPT development by creating an account on GitHub.

#tools #AIOps #MLSecOps Same Model, Different Hat: Bypassing OpenAI Guardrails hiddenlayer.com/innovation-hub… ]-> tools to block/detect potentially harmful model behavior - github.com/openai/openai-… // OpenAI’s Guardrails framework is a thoughtful attempt to provide developers with…

🔐 Protect AI's MLSecOps course is your ticket to AI security mastery. Secure your AI future today! #AISecurity #MLSecOps aientrepreneurs.standout.digital/p/world-leader…

#tools #MLSecOps "AMULET: a Library for Assessing Interactions Among ML Defenses and Risks", 2025. ]-> Python ML package to evaluate the susceptibility of different risks to security, privacy, and fairness - github.com/ssg-research/a… // In addition to modules for risks, AMULET…

This week, Justin and Jack are talking #AI with one of the #security industry’s most well-known experts and influencers, Diana Kelley of Protect AI. Come hear what’s new in #MLSecOps and high-integrity AI, and some well-informed predictions for the future. hubs.la/Q01_-4Vy0

#Analytics #MLSecOps #Threat_Research Adversary use of Artifical Intelligence and LLMs and Classification of TTPs github.com/cybershujin/Th…

Just launched: A whitepaper from the AI/ML Security Working Group 🔐 Visualizing Secure MLOps (#MLSecOps) Read the blog by @DellTech’s Sarah Evans & @Ericsson’s Andrey Shorov + download the full guide: 🔗 hubs.la/Q03Bkym60

The 6 challenges your business will face in implementing MLSecOps - helpnetsecurity.com/2025/08/20/mls… - @openssf - #MLSecOps #AIThreats #ArtificialIntelligence #MachineLearning #CyberSecurity #netsec #security #InfoSecurity #CISO #ITsecurity #CyberSecurityNews #SecurityNews

#AIOps #MLSecOps #Offensive_security #Red_Team_Tactics "AutoBackdoor: Automating Backdoor Attacks via LLMAgents", Nov. 2025. ]-> Code, datasets, and experimental configurations - github.com/bboylyg/Backdo… // AutoBackdoor - general framework for automating backdoor injection,…

#MLSecOps #Whitepaper "Automating Generative AI Guidelines: Reducing Prompt Injection Risk with "Shift-Left" MITRE ATLAS Mitigation Testing", Sept. 2025. ]-> LLMSecOps Research (Repo) - github.com/lightbroker/ll… // Automated testing during the build stage of the AI engineering…

#AIOps #MLSecOps #RAG_Security #Offensive_security AI pentest scoping playbook devansh.bearblog.dev/ai-pentest-sco… // Scoping AI security engagements is harder than traditional pentests because the attack surface is larger, the risks are novel, and the methodologies are still maturing

#CogSec #MLSecOps Inside OpenAI Sora 2 - Uncovering System Prompts Driving Multi-Modal LLMs mindgard.ai/resources/open… // By chaining cross-modal prompts and clever framing, researchers surfaced hidden instructions from OpenAI’s video generator

#reversing #MLSecOps #Cyber_Education "Reverse Engineering GPT", 2024. github.com/mytechnotalent… // Drawing inspiration from Andrej Karpathy’s iconic lecture, "Let’s Build GPT: From Scratch, in Code, Spelled Out", this project takes you on an immersive journey into the inner…

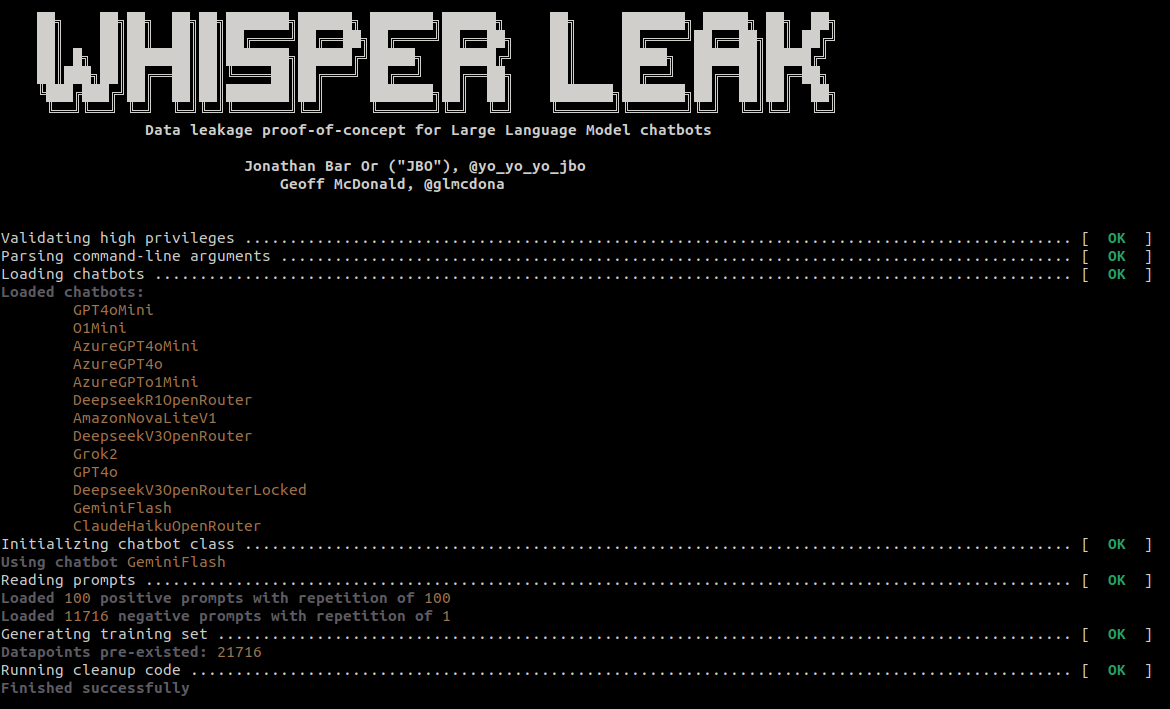

#SCA #MLSecOps "Whisper Leak: a side-channel attack on Large Language Models", Nov. 2025. ]-> github.com/yo-yo-yo-jbo/w… // Whisper Leak - side-channel attack that infers user prompt topics from encrypted LLM traffic by analyzing packet size and timing patterns in streaming…

#DFIR #AIOps #MLSecOps #RAG_Security AI Incident Response Framework, V1.0 github.com/cosai-oasis/ws… // This guides defenders on proactively minimizing the impact of AI system exploitation. It details how to maintain auditability, resiliency, and rapid recovery even when a system…

#AIOps #MLSecOps "Beyond the Protocol: Unveiling Attack Vectors in the Model Context Protocol (MCP) Ecosystem", 2025. ]-> Repo (MCP-Artifact) - github.com/MCP-Security/M… // In this paper, we present the first end-to-end empirical evaluation of attack vectors targeting the MCP…

#MLSecOps "CrossGuard: Safeguarding MLLMs against Joint-Modal Implicit Malicious Attacks", Oct. 2025. ]-> github.com/AI45Lab/MLLMGu… // We propose ImpForge, an automated red-teaming pipeline that leverages reinforcement learning with tailored reward modules to generate diverse…

github.com

GitHub - AI45Lab/MLLMGuard

Contribute to AI45Lab/MLLMGuard development by creating an account on GitHub.

📢 Last week, @__wunused__ presented our work on secure deserialization of pickle-based Machine Learning (ML) models at @acm_ccs 2025! #pickleball #mlsec #mlsecops #acm_ccs #brownssl #browncs

#AIOps #MLSecOps LOLMIL: Living Off the Land Models and Inference Libraries dreadnode.io/blog/lolmil-li… // the experiment proved that autonomous malware operating without any external infrastructure is not only possible but fairly straightforward to implement. For now, this…

#tools #AIOps #MLSecOps Same Model, Different Hat: Bypassing OpenAI Guardrails hiddenlayer.com/innovation-hub… ]-> tools to block/detect potentially harmful model behavior - github.com/openai/openai-… // OpenAI’s Guardrails framework is a thoughtful attempt to provide developers with…

#MLSecOps "Fewer Weights, More Problems: A Practical Attack on LLM Pruning", 2025. ]-> Repo + threat model - github.com/eth-sri/llm-pr… // Model pruning, i.e., removing a subset of model weights, has become a prominent approach to reducing the memory footprint of LLMs during…

#AIOps #MLSecOps #Threat_Modelling "Code Agent can be an End-to-end System Hacker: Benchmarking Real-world Threats of Computer-use Agent", Oct. 2025. ]-> Dataset - huggingface.co/datasets/MomoU… ]-> Code - github.com/EddyLuo1232/VR… // We propose AdvCUA, the first benchmark aligned with…

#AIOps #MLSecOps "WAInjectBench: Benchmarking Prompt Injection Detections for Web Agents", 2025. ]-> Comprehensive benchmark for prompt injection detection in web agents - github.com/Norrrrrrr-lyn/… // we presenting the first comprehensive benchmark study on detecting prompt…

#AIOps #MLSecOps "On the Security of Tool-Invocation Prompts for LLM-Based Agentic Systems: An Empirical Risk Assessment", 2025. ]-> tipexploit.github.io ]-> Repo - github.com/TIPExploit/TIP… // TIPs are critical yet vulnerable components of LLM-based agentic systems. Our…

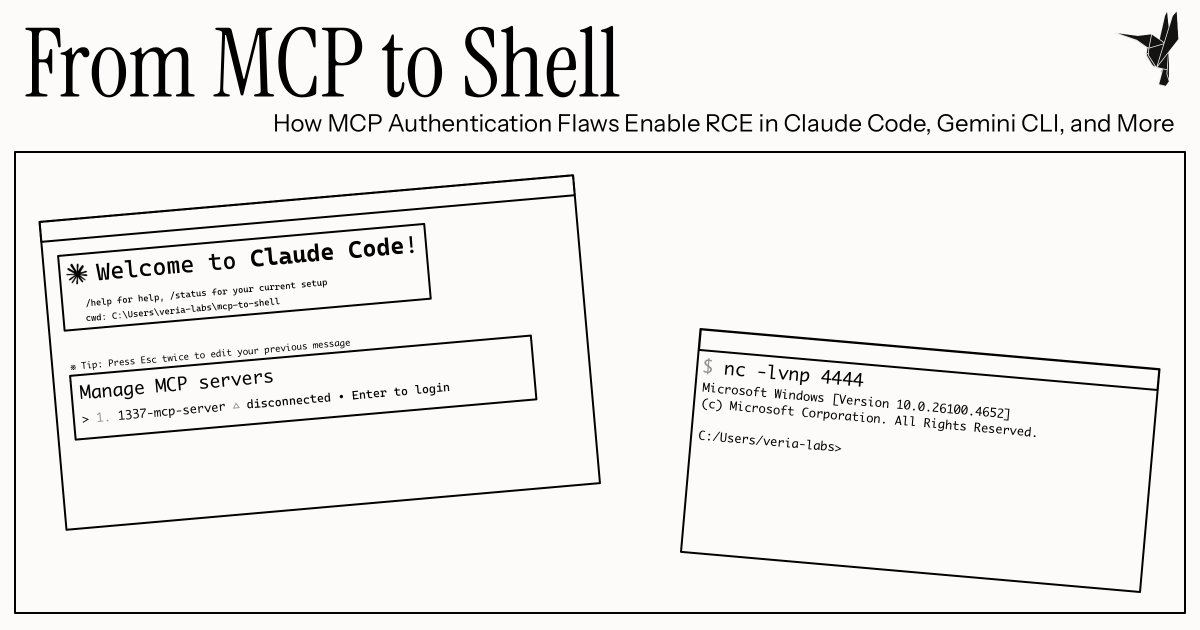

#MLSecOps 1. From MCP to Shell: How MCP Authentication Flaws Enable RCE in Claude Code, Gemini CLI, and More verialabs.com/blog/from-mcp-… 2. REGEXSS: Overly-greedy regex replacements can break HTML sanitisation and lead to XSS sec.stealthcopter.com/regexss

#Research #MLSecOps "A Survey on Data Security in Large Language Models", 2025. // This survey offers a comprehensive overview of the main data security risks facing LLMs and reviews current defense strategies, including adversarial training, RLHF, and data augmentation See…

github.com

GitHub - wearetyomsmnv/Awesome-LLMSecOps: LLM | Security | Operations in one github repo with good...

LLM | Security | Operations in one github repo with good links and pictures. - wearetyomsmnv/Awesome-LLMSecOps

#tools #MLSecOps "AMULET: a Library for Assessing Interactions Among ML Defenses and Risks", 2025. ]-> Python ML package to evaluate the susceptibility of different risks to security, privacy, and fairness - github.com/ssg-research/a… // In addition to modules for risks, AMULET…

#tools #AIOps #MLSecOps #Malware_analysis The Risks of Code Assistant LLMs: Harmful Content, Misuse and Deception unit42.paloaltonetworks.com/code-assistant… ]-> Picklescan - Security scanner detecting Python Pickle files performing suspicious actions - github.com/mmaitre314/pic… ]-> Model-unpickler -…

📢 Last week, @__wunused__ presented our work on secure deserialization of pickle-based Machine Learning (ML) models at @acm_ccs 2025! #pickleball #mlsec #mlsecops #acm_ccs #brownssl #browncs

Introduction to MLSecOps #MachineLearning #machinelearningalgorithms #mlsecops #mlops #securityoperations

#MLSecOps MCP Tool Poisoning Attacks invariantlabs.ai/blog/mcp-secur… ]-> MCP Tool Poisoning Experiments ]-> WhatsApp MCP Exploited: Exfiltrating your message history via MCP

![HackingTeam777's tweet image. #MLSecOps

MCP Tool Poisoning Attacks

invariantlabs.ai/blog/mcp-secur…

]-> MCP Tool Poisoning Experiments

]-> WhatsApp MCP Exploited: Exfiltrating your message history via MCP](https://pbs.twimg.com/media/GoBcQinXMAAvkOe.jpg)

Exciting news: We've united with @ProtectAICorp to elevate AI/ML security! Follow us down the rabbit hole to learn more. #bugbounty #mlsecops

Traditional security can’t protect your machine learning pipeline. From data poisoning to model inversion, ML systems face unique threats. Our new whitepaper introduces MLSecOps & outlines how to build a secure, compliant ML lifecycle: bit.ly/4kFRfHz #MLSecOps #MLOps #AI

🎉 Today, Protect AI announced new members to its executive team. This strategic move will enhance the company's ability to meet the increasing demands for AI/ML security technologies, and expand reach. Read the full PR here- bit.ly/3MTc0Rv #protectai #mlsecops

The 6 challenges your business will face in implementing MLSecOps - helpnetsecurity.com/2025/08/20/mls… - @openssf - #MLSecOps #AIThreats #ArtificialIntelligence #MachineLearning #CyberSecurity #netsec #security #InfoSecurity #CISO #ITsecurity #CyberSecurityNews #SecurityNews

Protect AI is Hiring! Check out our Careers Page, and learn more about one of our own- Josh Miles. bit.ly/3YlKWP1 #protectai #mlsecops #devsecops #datascience #cybersecurity #machinelearning #hiring #techjobs

😎 garak: ... into the AI Red Teaming Rabbit Hole 💥So, you want to go down the AI Red Teaming rabbit hole? 📷 Let's check out how you can leverage garak for AI Red Teaming 🧵 #genaisecurity #mlsecops #aisecurity #llmsecurity #threatdetection #redteam #airedteaming

Exciting news from Space ISAC's AI/ML Community! 🎉 Our MLSecOps white paper is coming soon! 🚀📄 Crafted by experts from @AerospaceCorp, @RS21, and @LockheedMartin, it explores AI/ML in Space, #MLSecOps origins, and security vulnerabilities. Stay tuned! #AI #ML #SpaceSecurity

Protect AI's CEO and Founder, @ianrswanson is at Reuters MOMENTUM discussing AI regulatory challenges, considerations, & mitigations for AI compliance needs. Book a demo today 👉 bit.ly/431SPuB #protectai #mlsecops #ai #reutersmomentum

Our own Dr. Mehrin Kiani is speaking this Thursday - there's still time to register for this free online event! 🗓️Date: December 7, 2023 ⏰Time: 6:00 PM - 7:30 PM EST 📌Location: Online (Registration Link: bit.ly/3NdMJCs) #ProtectAI #MLSecOps Photo cred: Tina Aprile, CMP

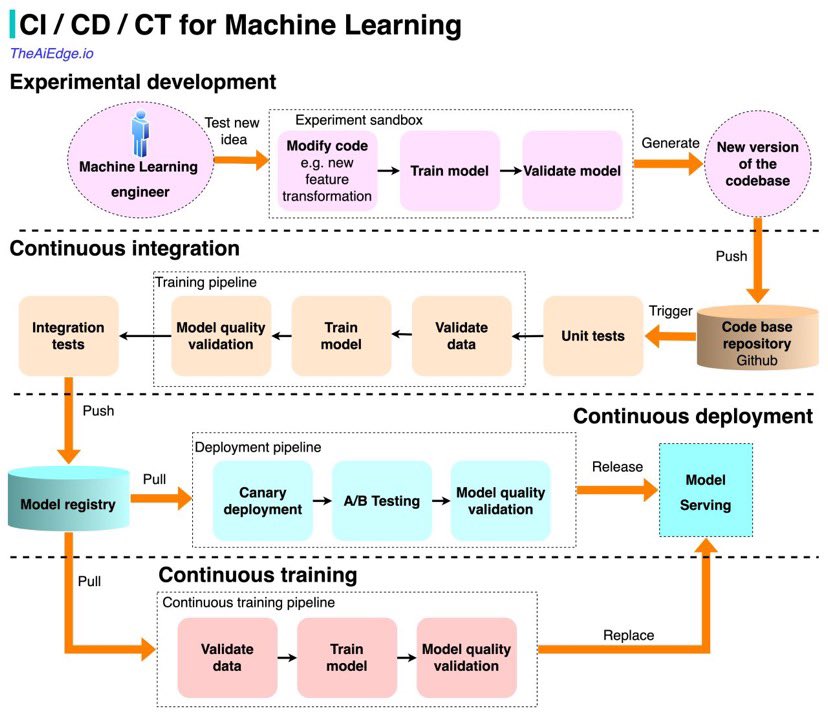

the canonical MLOps CI/CD/CT pipeline allows organizations to automate their machine learning, and boost production impacts over ad hoc development. but it also introduces novel security challenges in workflows, supply chains, and other dependencies. #MLSecOps

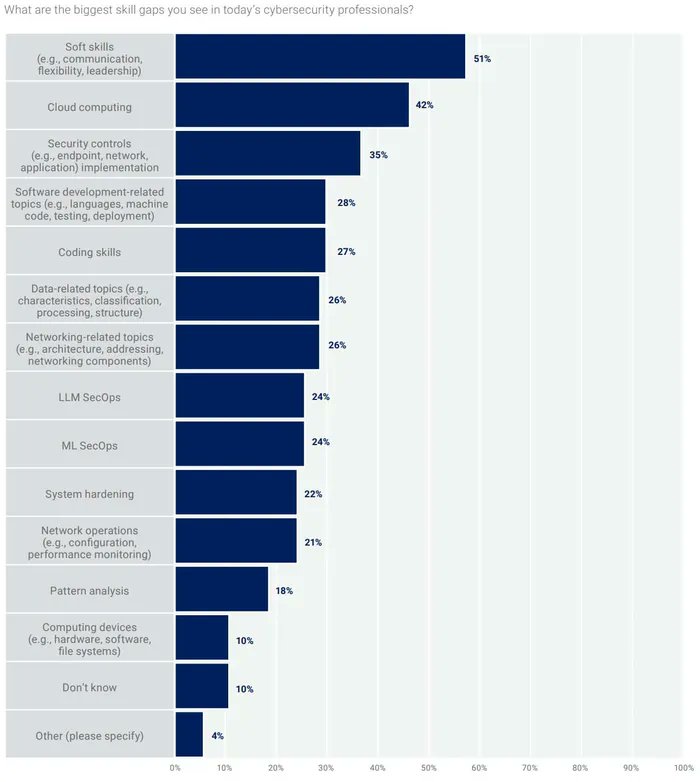

🚨AI Skills Gap: Demand for ML and LLM SecOps Experts Soars!🛡️ Hey everyone, Bob here! Companies are hyping up AI in all their products, but guess what? This opens up new security holes! 🔓 Organizations are now on the hunt for professionals skilled in #MLSecOps and #LLMSecOps…

❇️ Enterprise-Grade Security for Model Context Protocol ❇️ Model Context Protocol is a critical aspect of AI systems due to the central role it plays in standardizing how AI models interact with the world around them. 🧵 #genaisecurity #mlsecops #aisecuritty #aiagents

Just launched: A whitepaper from the AI/ML Security Working Group 🔐 Visualizing Secure MLOps (#MLSecOps) Read the blog by @DellTech’s Sarah Evans & @Ericsson’s Andrey Shorov + download the full guide: 🔗 hubs.la/Q03Bkym60

We're now growing our #MLOps offerings with @Qwak_ai! Join us on July 22 / 23 / 24 for our webinar to learn how this unification delivers 1 platform for DevSecOps & #MLSecOps. Register here: jfrog.co/3VX9dwm

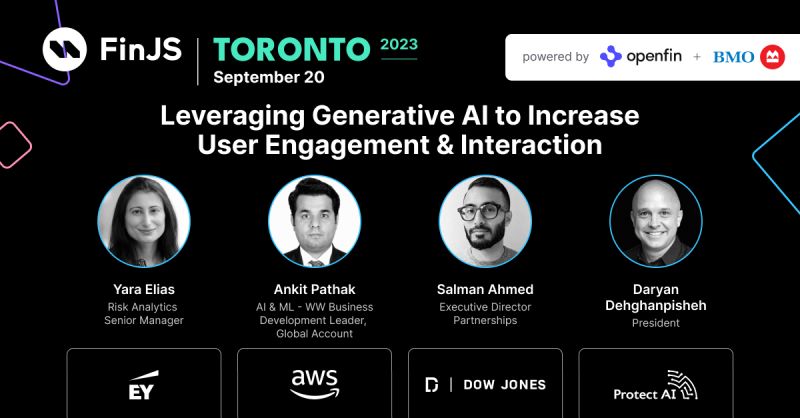

🚀Announcement! We're gearing up for @finjsio Toronto on Sep. 20, 2023! Hear our President and co-founder, @DaryanD13, on the panel below, and visit our booth for demos of our intuitive and popular solutions for #MLSecOps! 📷 Register here: hubs.li/Q021Y1MV0 #FinTech #LLM

Explore the Intersection of ML, Security, and SecOps at SECtember.ai! Dive deep into secure ML pipelines, model governance, and continuous monitoring. bit.ly/3y0adXt #SECtemberAI #CSAI #MLSecOps

Something went wrong.

Something went wrong.

United States Trends

- 1. #WWERaw 64K posts

- 2. Purdy 26.3K posts

- 3. Panthers 35.9K posts

- 4. Bryce 19.7K posts

- 5. 49ers 38.2K posts

- 6. Canales 13.3K posts

- 7. #FTTB 5,383 posts

- 8. Mac Jones 4,768 posts

- 9. Penta 9,691 posts

- 10. #KeepPounding 5,246 posts

- 11. Niners 5,444 posts

- 12. Gonzaga 3,020 posts

- 13. Gunther 14.1K posts

- 14. Jaycee Horn 2,652 posts

- 15. Jauan Jennings 1,999 posts

- 16. Amen Thompson 1,005 posts

- 17. Logan Cooley N/A

- 18. #RawOnNetflix 2,103 posts

- 19. Moehrig N/A

- 20. Ji'Ayir Brown 1,292 posts

![ksg93rd's tweet card. [NeurIPS 2025] BackdoorLLM: A Comprehensive Benchmark for Backdoor Attacks and Defenses on Large Language Models - bboylyg/BackdoorLLM](https://pbs.twimg.com/card_img/1991582740806823936/u3D1HXPm?format=jpg&name=orig)