Joydeep Biswas

@Joydeepb_robots

Associate Professor, Computer Science, UT Austin. Visiting Professor, Nvidia. Robot doctor / CS Professor / he/him. Also @[email protected]

Wrapping up a wonderful visit to @texas_robotics and @UTCompSci to give a FAI+Texas Robotics seminar. robotics.utexas.edu/events Thanks Roberto Martin-Martin, @PeterStone_TX , @Joydeepb_robots, @AgriRobot !

How can robots follow complex instructions in dynamic environments? 🤖 Meet ComposableNav — a diffusion-based planner that enables robots to generate novel navigation behaviors that satisfy diverse instruction specifications on the fly — no retraining needed. 📄 Just accepted…

Of course a simulator is useful when finetuning an LLM to generate robot programs. However, how can we synthesize simulation environments *on the fly* for *novel and arbitrary tasks*? Robo-Instruct shows how! Research led by @ZichaoHu99 in my lab - and accepted at COLM 2025

🚀 Generating synthetic training data for code LLMs in robotics? 🤖 Frustrated with too many programs that look correct but are actually invalid? Introducing Robo-Instruct, a simulator-augmented framework that: ✅ Verifies programs against robot API constraints ✅ Aligns…

📢 Call for AAAI-26 Doctoral Consortium Submissions (Deadline: September 30 AoE) We invite doctoral students in the AI community to apply to the Doctoral Consortium at AAAI-26, held on January 20-21 2026 in Singapore!

Need 3D structure and motion from arbitrary in-the-wild videos, and VSLAM/COLMAP letting you down (moving objects, imprecise calibration, degenerate motion)? Try ViPE! Led by @huangjh_hjh, and with a fantastic team from Nvidia.

[1/N] 🎥 We've made available a powerful spatial AI tool named ViPE: Video Pose Engine, to recover camera motion, intrinsics, and dense metric depth from casual videos! Running at 3–5 FPS, ViPE handles cinematic shots, dashcams, and even 360° panoramas. 🔗 research.nvidia.com/labs/toronto-a…

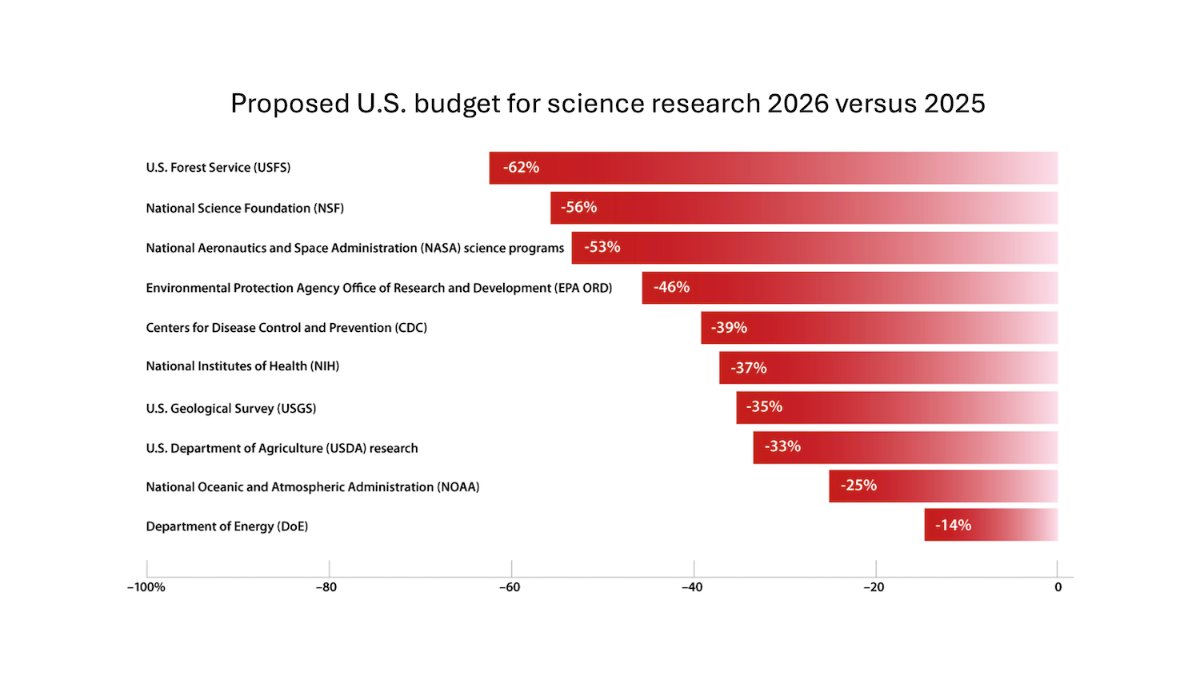

I am alarmed by the proposed cuts to U.S. funding for basic research, and the impact this would have for U.S. competitiveness in AI and other areas. Funding research that is openly shared benefits the whole world, but the nation it benefits most is the one where the research is…

🗺️ Scalable mapless navigation demands open-world generalization. Meet CREStE: our SOTA navigation model that nails path planning in novel scenes with just 3 hours of data, navigating 2 km with just 1 human intervention. Project Page 🌐: amrl.cs.utexas.edu/creste A thread 🧵

How can robots learn household tasks from videos using just an iPhone, no robot hardware? Introducing SPOT, an object-centric framework that learns from minimal human demos, capturing the task-related constraints. (1/n)

🤔 NVIDIA's ReMEmbR project integrates large language and vision-language models with retrieval-augmented generation (RAG) to enable robots to reason and act autonomously over extended periods. By building and querying a long-horizon memory using vision transformers and vector…

Do you need a dense map to localize your robot over long-term deployments? We don’t think so! Want to know how? If you're at #IROS2024, come check out my presentation on "ObVi-SLAM: Long-Term Object-Visual SLAM" at 10:15 (October 17) in Room 1.

Comet C/2023 A3, captured from East Austin, over downtown Austin.

This project started with us annoyed at papers evaluating CoT "reasoning" with only GSM8k & MATH. We didn't expect to find such strong evidence that these are the only type of problem where CoT helps! Credit to @juand_r_nlp & @kmahowald for driving the rigorous meta-analysis!

To CoT or not to CoT?🤔 300+ experiments with 14 LLMs & systematic meta-analysis of 100+ recent papers 🤯Direct answering is as good as CoT except for math and symbolic reasoning 🤯You don’t need CoT for 95% of MMLU! CoT mainly helps LLMs track and execute symbolic computation

Thrilled to share one of our projects at #NVIDIA this Summer - enabling long-horizon open-world perception and recall for mobile robots! Fantastic work by @_abraranwar over his internship, jointly with Yan Chang, John Welsh, and @SohaPouya nvidia-ai-iot.github.io/remembr/

How can #robots remember? 🤖 💭 For robots to understand and respond to questions that require complex multi-step reasoning in scenarios over long periods of time, we built ReMEmbR, a retrieval-augmented memory for embodied robots. 👀 Technical deep dive from #NVIDIAResearch…

I am thrilled to announce that UT CODa has been accepted at #IeeeTro24! Thank you to all of my collaborators at @Joydeepb_robots @amrl_ut who helped make this work possible. We are also excited to share the release of CODa version 2. More on CODa v2 in this🧵

Last semester, a new 1-credit-hour course we co-designed, “Essentials of AI for Life and Society,” made its debut, and now the lecture recordings are available online. Check them out here: bit.ly/4coXM6Q @UTCompSci, @PeterStone_TX, @Joydeepb_robots #TXCS

@NSF Funded Expedition Project Uses AI to Rethink Computer Operating Systems. Led by @adityaakella , co-PIs are @Joydeepb_robots, @swarat , Shuchi Chawla, @IsilDillig , @daehyeok_kim, Chris Rossbach, @AlexGDimakis and Sanjay Shakkottai. 👏👏👏 cns.utexas.edu/news/announcem…
