Kaj Bostrom

@alephic2

NLP geek with a PhD from @utcompsci, now @ AWS. I like generative modeling but not in an evil way I promise. Also at http://bsky.app/profile/bostromk.net He/him

You might like

For inputs involving many steps, the operands for each step remain important until an identical depth. This indicates that the model is *not* breaking down the computation, solving subproblems, and composing their results together. 2/6

Definitely updated my mental model of CoT based on these results - give it a read, the paper delivers right off the bat and then keeps following up with more!

To CoT or not to CoT?🤔 300+ experiments with 14 LLMs & systematic meta-analysis of 100+ recent papers 🤯Direct answering is as good as CoT except for math and symbolic reasoning 🤯You don’t need CoT for 95% of MMLU! CoT mainly helps LLMs track and execute symbolic computation

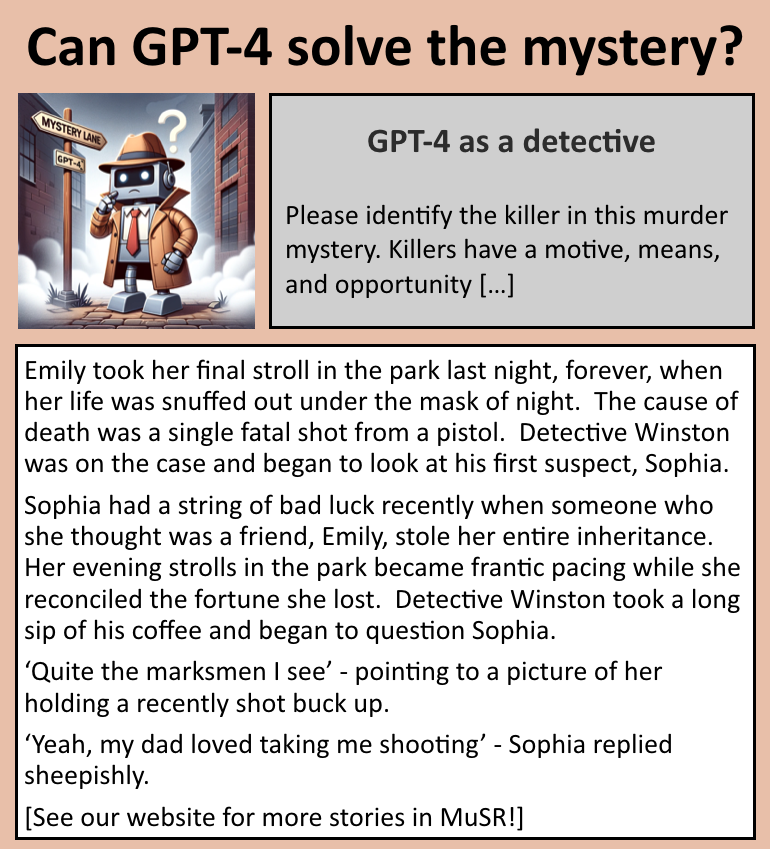

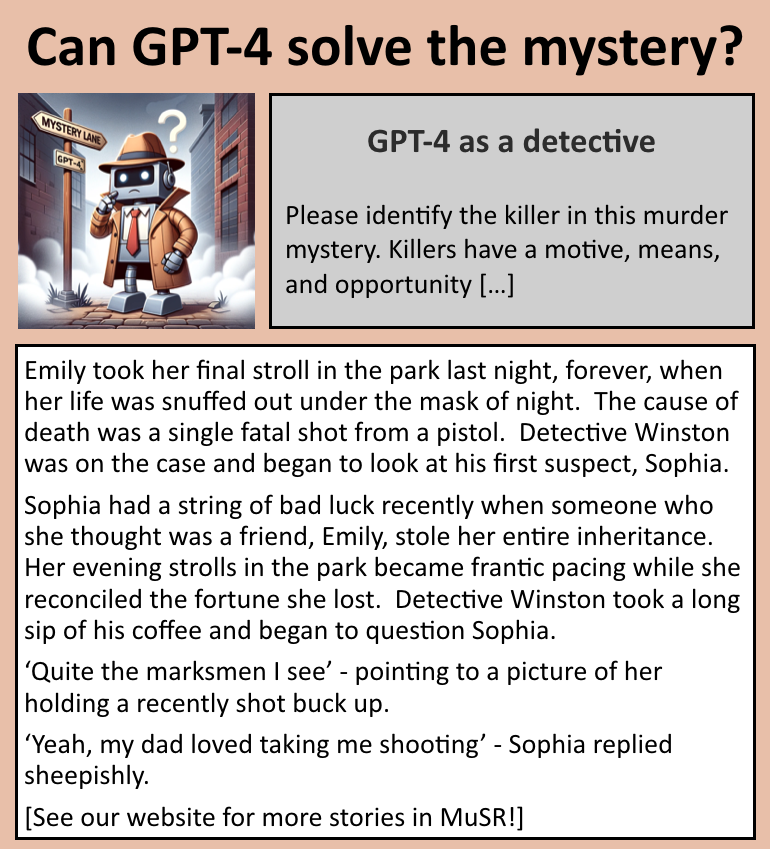

🍓 still has a way to go for solving murder mysteries. We ran o1 on our dataset MuSR (ICLR ’24). It doesn’t beat Claude-3.5 Sonnet with CoT. MuSR requires a lot of commonsense reasoning and less math/logic (where 🍓 shines) MuSR is still a challenge! More to come soon 😎

Super excited to bring ChatGPT Murder Mysteries to #ICLR2024 from our dataset MuSR as a spotlight presentation! A big shout-out goes to my coauthors, @xiye_nlp @alephic2 @swarat and @gregd_nlp See you all there 😀

GPT-4 can write murder mysteries that it can’t solve. 🕵️ We use GPT-4 to build a dataset, MuSR, to test the limits of LLMs’ textual reasoning abilities (commonsense, ToM, & more) 📃 arxiv.org/abs/2310.16049 🌐 zayne-sprague.github.io/MuSR/ w/ @xiye_nlp @alephic2 @swarat @gregd_nlp

After extensive training with various music generation neural networks and dedicating countless hours to prompting them, it's become even more evident to me that relying solely on text prompts as interface for music creation significantly limits the creative process.

Reducing all interfaces to text prompts is a failure of imagination and inhumane.

GPT-4 can write murder mysteries that it can’t solve. 🕵️ We use GPT-4 to build a dataset, MuSR, to test the limits of LLMs’ textual reasoning abilities (commonsense, ToM, & more) 📃 arxiv.org/abs/2310.16049 🌐 zayne-sprague.github.io/MuSR/ w/ @xiye_nlp @alephic2 @swarat @gregd_nlp

While demand for generative model training soars 📈, I think a new field is coalescing that’s focused on trying to make sense of generative models _once they’re already trained_: characterizing their behaviors, differences, and underlying mechanisms…so we wrote a paper about it!

LLMs are used for reasoning tasks in NL but lack explicit planning abilities. In arxiv.org/abs/2307.02472, we see if vector spaces can enable planning by choosing statements to combine to reach a conclusion. Joint w/ @alephic2 @swarat & @gregd_nlp NLRSE workshop at #ACL2023NLP

📣Call for papers! The Natural Language Reasoning and Structured Explanations Workshop will be the first of its kind at ACL 2023, and the deadline for paper submissions is April 24. Learn more and submit here: nl-reasoning-workshop.github.io

nl-reasoning-workshop.github.io

Natural Language Reasoning and Structured Explanations Workshop

Natural Language Reasoning and Structured Explanations Workshop ---

Three years in the making - our big review/position piece on the capabilities of large language models (LLMs) from the cognitive science perspective. Thread below! 1/ arxiv.org/abs/2301.06627

United States Trends

- 1. #CARTMANCOIN 1,838 posts

- 2. yeonjun 245K posts

- 3. Broncos 67.3K posts

- 4. Raiders 66.9K posts

- 5. Bo Nix 18.5K posts

- 6. Geno 19K posts

- 7. daniela 52.1K posts

- 8. Sean Payton 4,851 posts

- 9. #criticalrolespoilers 5,157 posts

- 10. #iQIYIiJOYTH2026xENGLOT 463K posts

- 11. Kehlani 10.7K posts

- 12. #NOLABELS_PART01 108K posts

- 13. #Pluribus 2,978 posts

- 14. Kenny Pickett 1,520 posts

- 15. Danny Brown 3,190 posts

- 16. Chip Kelly 2,008 posts

- 17. Tammy Faye 1,476 posts

- 18. Vince Gilligan 2,712 posts

- 19. Jalen Green 7,908 posts

- 20. Bradley Beal 3,697 posts

You might like

-

Tanya Goyal

Tanya Goyal

@tanyaagoyal -

Sachin Gururangan

Sachin Gururangan

@ssgrn -

Alexis Ross

Alexis Ross

@alexisjross -

Jessy Li

Jessy Li

@jessyjli -

Niranjan

Niranjan

@b_niranjan -

Yanai Elazar

Yanai Elazar

@yanaiela -

Ana Marasović

Ana Marasović

@anmarasovic -

Tuhin Chakrabarty

Tuhin Chakrabarty

@TuhinChakr -

Greg Durrett

Greg Durrett

@gregd_nlp -

Xi Ye

Xi Ye

@xiye_nlp -

Valentina Pyatkin

Valentina Pyatkin

@valentina__py -

Jonathan Berant

Jonathan Berant

@JonathanBerant -

Mengzhou Xia

Mengzhou Xia

@xiamengzhou -

Prasann Singhal

Prasann Singhal

@prasann_singhal -

Shuyan Zhou

Shuyan Zhou

@syz0x1

Something went wrong.

Something went wrong.