A new handheld interface from MIT gives anyone the ability to train a robot for tasks in fields like manufacturing. The versatile tool can teach a robot new skills using one of three approaches: natural teaching, kinesthetic training, & teleoperation: bit.ly/4nTAw6F

Yale Philosophy offers a course on “Formal Philosophical Methods” — a broad introduction to probability, logic, formal semantics, etc. Instructor Calum McNamara has now made all materials for the course (78 pages) freely available static1.squarespace.com/static/6255ffe…

Wow! The core finding in the much-maligned Apple paper from @ParshinShojaee et al – that reasoning models generalize poorly in the face of complexity – has been conceptually replicated three times in three weeks. C. Opus sure didn’t see that coming. And a lot of people owe Ms.…

The programs we look at are quite simple and all represent novel combinations of familiar operations. We also find lower performance for more complex programs, especially for the compositions. Also, I have a sense that LLMs can handle OOD problems easier when represented in code

Ok I’ve read it now and as I expected the complaints about it are ill founded. It seems fine. I think it convincingly shows a lot of what I’ve been saying about these thinking models.

I still haven’t read that Apple paper but I see a lot of people complaining about it. To me, the complaints I’ve seen seem ill founded given what I understand about it, but obviously it’s hard for me to judge without having read the paper. What are the most reasonable complaints?

Friends, need your help. @antarikshB, a senior from IIT B has launched an incredible project of organizing all Sanskrit literature in one place, in a user-friendly manner. The service is free, not-for-profit, created purely out of passion. Media coverage will go a long way in…

I like how they use the infinite rotation to make planning easier. Taking advantage of how your humanoid doesn't need to be human

How can robots understand spatiotemporal language in novel environments without retraining? 🗣️🤖 In our #IROS2024 paper, we present a modular system that uses LLMs and a VLM to ground spatiotemporal navigation commands in unseen environments described by multimodal semantic maps

Recent results like Apple’s show that LLMs (even o1) flub on reasoning with simple changes to problems that shouldn’t matter. A consensus is building that it shows they are “just pattern matching.” But that metaphor is misleading: good reasoning itself can also be framed as “just…

Evaluation in robot learning papers, or, please stop using only success rate: a paper and a 🧵 arxiv.org/abs/2409.09491

My (pure) speculation about what OpenAI o1 might be doing [Caveat: I don't know anything more about the internal workings of o1 than the handful of lines about what they are actually doing in that blog post--and on the face of it, it is not more informative than "It uses Python…

@jasonxyliu will present their @IJCAIconf survey paper on robotic language grounding. Please check out his talk (8/8 11:30) if you are at #IJCAI2024. In collab w/ @VanyaCohen, Raymond Mooney from @UTAustin, @StefanieTellex from @BrownCSDept, @drdavidjwatkins from The AI Institute

How do robots understand natural language? #IJCAI2024 survey paper on robotic language grounding. We situated papers into a spectrum w/ two poles, grounding language to symbols and high-dimensional embeddings. We discussed tradeoffs, open problems & exciting future directions!

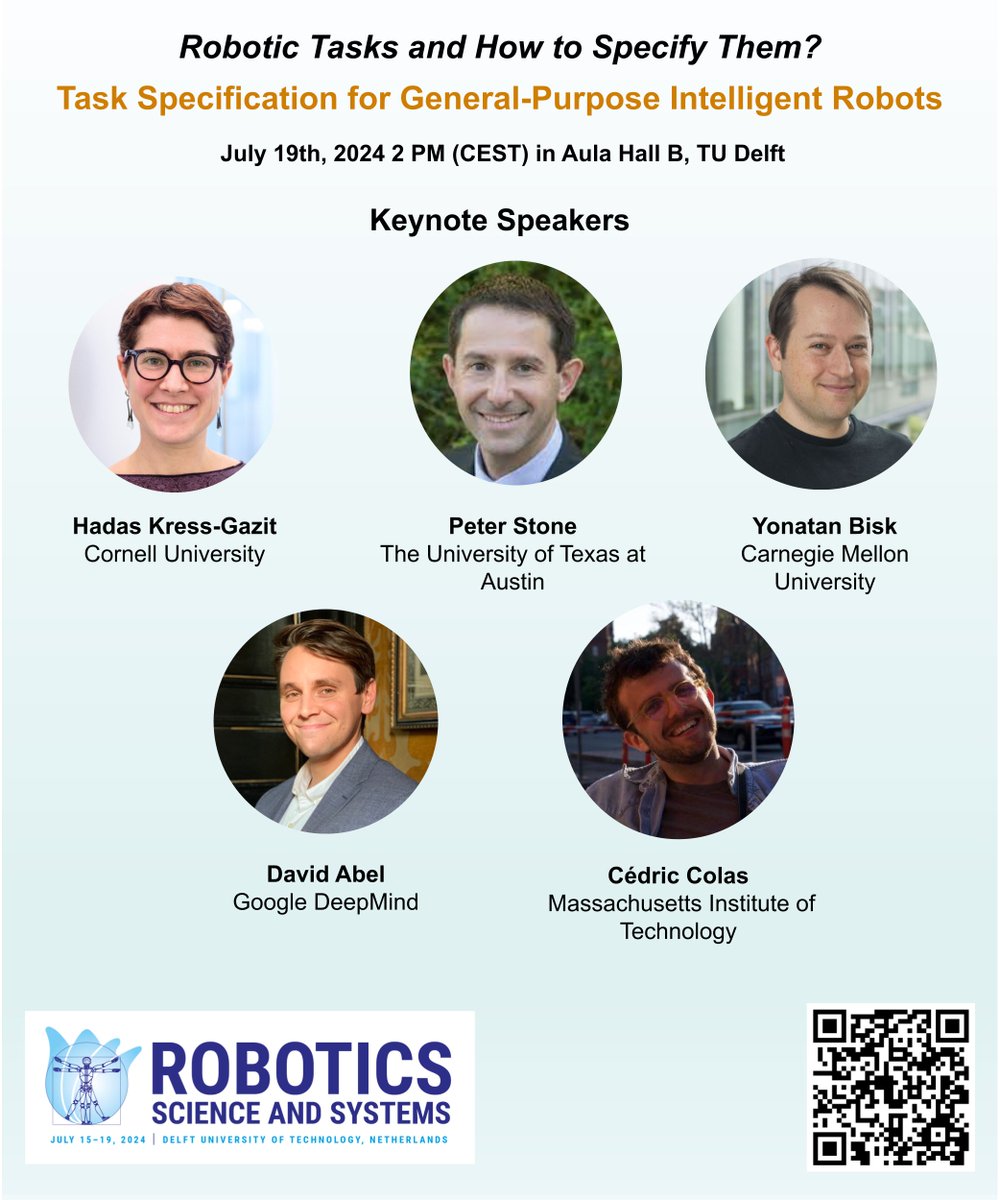

We will hear from an amazing lineup of speakers at our #RSS2024 workshop on robotic task specification tomorrow at 2 PM (CEST) in Aula Hall B: @HadasKressGazit, @PeterStone_TX, @ybisk, @dabelcs, @cedcolas. More details at: sites.google.com/view/rss-tasks…

RL in POMDPs is hard because you need memory. Remembering *everything* is expensive, and RNNs can only get you so far applied naively. New paper: 🎉 we introduce a theory-backed loss function that greatly improves RNN performance! 🧵 1/n

Ever wonder if LLMs use tools🛠️ the way we ask them? We explore LLMs using classical planners: are they writing *correct* PDDL (planning) problems? Say hi👋 to Planetarium🪐, a benchmark of 132k natural language & PDDL problems. 📜 Preprint: arxiv.org/abs/2407.03321 🧵1/n

Enforcing safety constraints with an LLM-modulo planner. Presented by @ZiyiYang96 at #ICRA2024

I'm at #ICRA2024 and will be presenting my paper titled "Plug in the Safety Chip: Enforcing Constraints for LLM-driven Robot Agents" (yzylmc.github.io/safety-chip/). Excited++ 🦾🤖

Solving new tasks zero shot using prior experience in a related task! Find @jasonxyliu at #ICRA2024

How can robots reuse learned policies to solve novel tasks without retraining? In our #ICRA2024 paper, we leverage the compositionality of task specification to transfer skills learned from a set of training tasks to solve novel tasks zero-shot

Back on April 1st I posted my three laws of robotics. Here are my three laws of AI. 1. When an AI system performs a task, human observers immediately estimate its general competence in areas that seem related. Usually that estimate is wildly overinflated. 2. Most successful AI…
