Marc-André Moreau

@awakecoding

Remote desktop protocol expert, OSS contributor and Microsoft MVP. I love designing products with Rust, C# and PowerShell. Proud to be CTO at Devolutions. 🇨🇦


Run Mistral Large 3 on Ollama's cloud: ollama run mistral-large-3:675b-cloud

The Copilot CLI is now available via winget 🪟

What's the simplest zero-config alternative to LocalDB for launching an ASP.NET Kestrel application in WSL from Visual Studio 2026? LocalDB works well on the Windows host, but it's not supported in the Linux guest. I wonder whether Visual Studio can launch containers easily.

I just realized many Claude Code users internally had no idea they could simply resume past chat sessions. They either started from scratch or generated markdown files to "save state". This is something that's automatic and easily discoverable in GitHub Copilot in VSCode.

Advent of Claude Day 4: Session Management. Accidentally closed your terminal? Laptop died? No problem.
claude --continue → picks up your last conversation instantly
claude --resume → shows a picker to choose any past session
Context preserved. Momentum restored.

Why is it that every time I dare to try the Copilot button in Outlook, I *instantly* hit a limitation that makes it useless? Apparently searching in custom folders is not supported, so it can't find the emails I want; they're simply invisible to Copilot.

Is there a way in Outlook mobile to *select* emails from search results? I've got lots of emails matching very specific patterns that I'd like to delete, but apparently all I can do is open each one individually.

Markdown Monster 4.0 is out. Many new features & improvements:
* Integrated LLM Chat interface
* .NET 10 Runtime
* Improved ARM64 support
* Many Mermaid graph improvements
* Support for Font ligatures
* Many small UI improvements
Check it out: markdownmonster.west-wind.com #markdown

I've been using Ollama cloud for a month, and I prefer it for trying some of the latest open-source models without using my local hardware resources. I can also connect it to GitHub Copilot in VSCode through custom models.

Ministral 3 is now also available on Ollama's cloud:
14B: ollama run ministral-3:14b-cloud
8B: ollama run ministral-3:8b-cloud
3B: ollama run ministral-3:3b-cloud
ollama.com/library/minist…

Mistral Large 3 is now available in Microsoft Foundry, delivering frontier-level instruction reliability, long-context comprehension, and multimodal reasoning with full Apache 2.0 openness. 🏢 Built for real enterprise workloads 🤖 Optimized for production assistants, RAG…

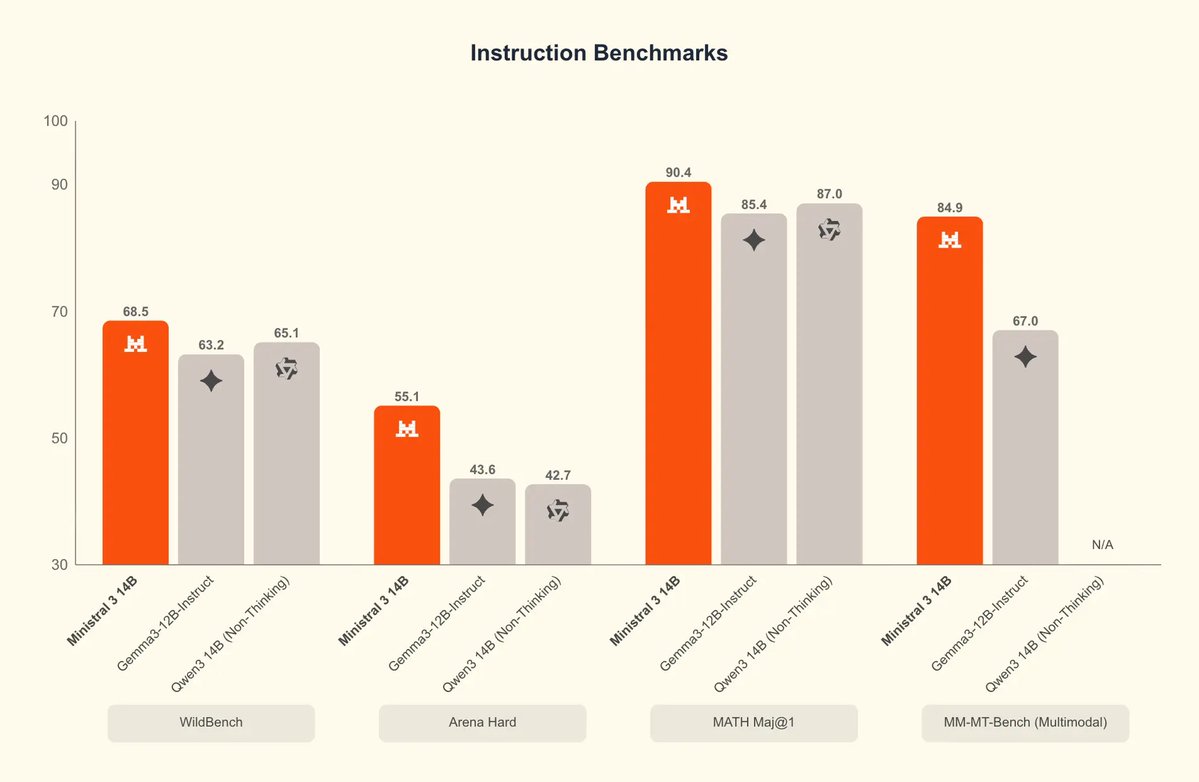

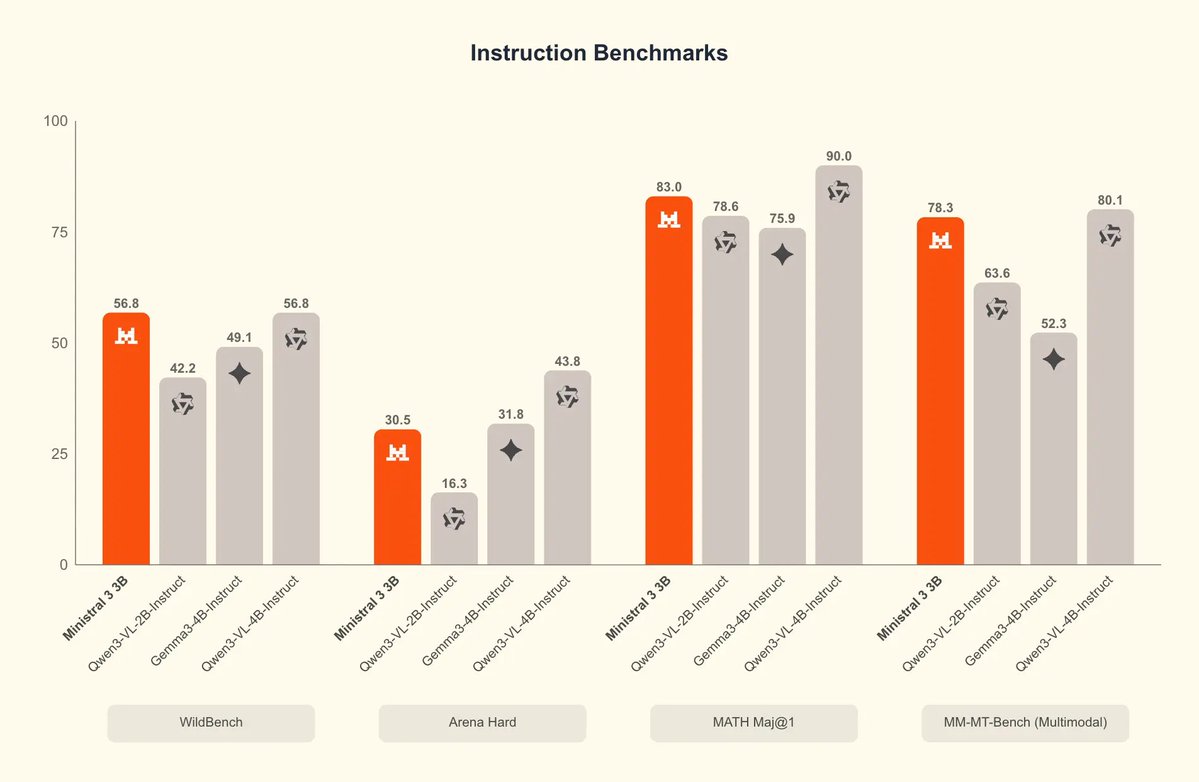

Introducing the Mistral 3 family of models: Frontier intelligence at all sizes. Apache 2.0. Details in 🧵

Mistral 3 is now available on Ollama v0.13.1 (currently in pre-release on GitHub).
14B: ollama run ministral-3:14b
8B: ollama run ministral-3:8b
3B: ollama run ministral-3:3b
Please update to the latest Ollama.

NEW: @MistralAI releases Mistral 3, a family of multimodal models, including three state-of-the-art dense models (3B, 8B, and 14B) and Mistral Large 3 (675B, 41B active). All Apache 2.0! 🤗 Surprisingly, the 3B is small enough to run 100% locally in your browser on WebGPU! 🤯

Ok this is really awesome - Ministral 3B WebGPU with live video inferencing. The demo page literally downloads the model in the browser and it just... works. Try it out! huggingface.co/spaces/mistral…

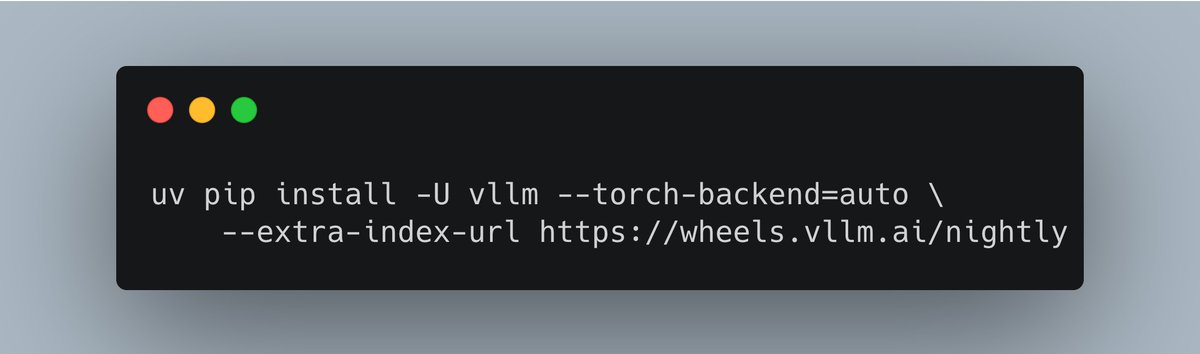

🎉 Congratulations to the Mistral team on launching the Mistral 3 family! We’re proud to share that @MistralAI, @NVIDIAAIDev, @RedHat_AI, and vLLM worked closely together to deliver full Day-0 support for the entire Mistral 3 lineup. This collaboration enabled: • NVFP4…
