
does anyone actually use LinkedIn, or do we all just log in once a month to accept random connection requests from strangers?

open source gives ideas. closed source takes them, scales them, hides them. fair game or just pure cheating?

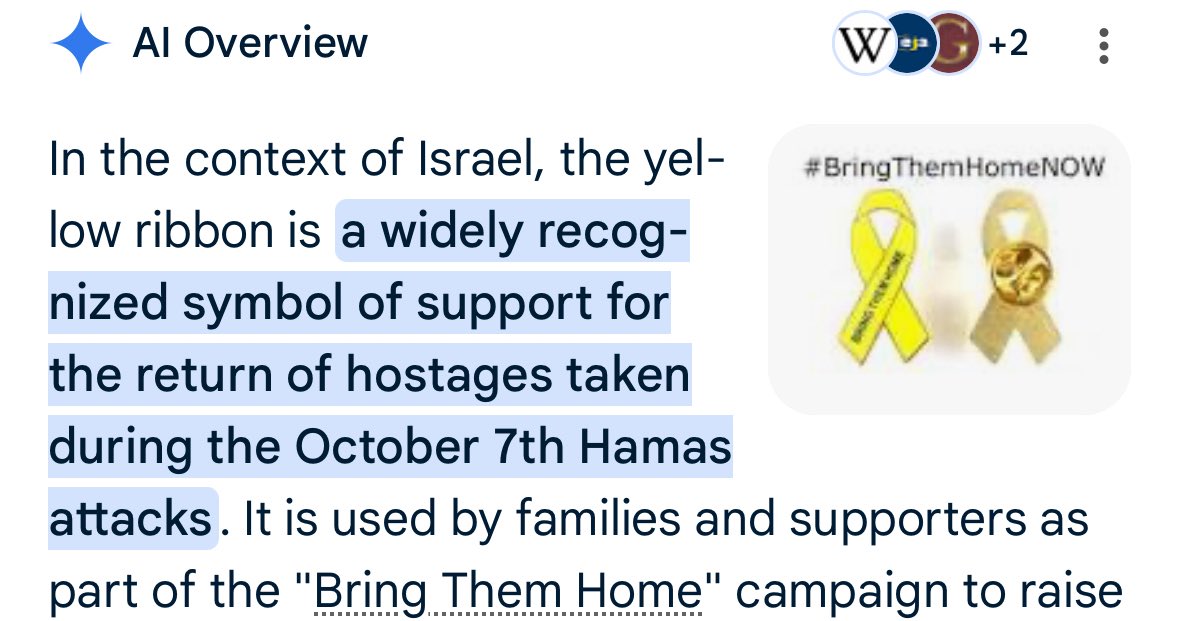

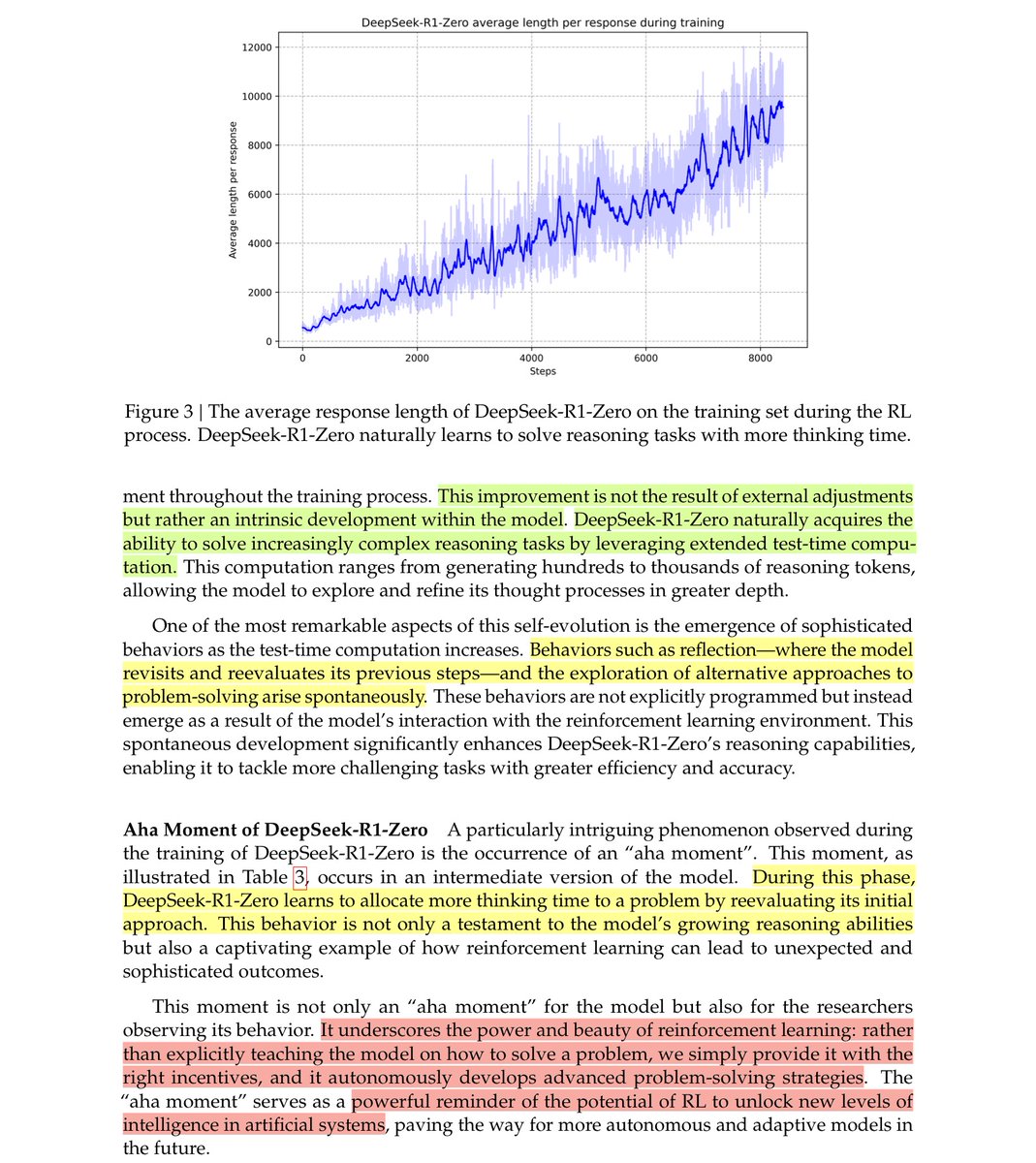

highlight your notes in a quick and easy way (credit: @adivekar_):
-> green: quick read
-> yellow: read slowly, important
-> red: read, think and understand

yeah, now this makes sense.

winter arc #2 (9hrs):
-> read the deepseek-math paper and grpo
-> finished the first 8 chapters of the rlhfbook by @natolambert
-> read @kipperrii's transformer inference arithmetic
-> watched @elliotarledge's vid on cublas and cublasLt
-> wrote sgemm & hgemm with cublas
-> @karpathy's nanochat

do the kl penalty and grad norm ultimately have the same effect on the grpo loss? if so, why can't we just add grad norm instead of the kl penalty term?
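a toy way to poke at this question, as a stdlib-only python sketch. assumptions, all for illustration: a 3-action softmax policy stands in for one token of an llm, the exact categorical kl stands in for the per-token kl estimator the grpo loss uses, "grad norm" is read as global grad-norm clipping, and the logits, the uniform reference policy and beta = 0.5 are made-up values.

```python
import math

# toy setup (hypothetical values): one "token" with 3 possible actions,
# exact KL instead of the per-token estimator used in the grpo loss.

def softmax(z):
    m = max(z)
    e = [math.exp(x - m) for x in z]
    s = sum(e)
    return [x / s for x in e]

def kl(p, q):
    # exact KL(p || q) for two categorical distributions
    return sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q))

def kl_grad_logits(z, q):
    # d KL(softmax(z) || q) / d z_j = p_j * (log p_j - log q_j - KL)
    p = softmax(z)
    d = kl(p, q)
    return [pj * (math.log(pj) - math.log(qj) - d) for pj, qj in zip(p, q)]

def pg_grad_logits(z, action, advantage):
    # gradient of the loss -A * log p(action) w.r.t. the logits: -A * (onehot - p)
    p = softmax(z)
    return [-advantage * ((1.0 if j == action else 0.0) - pj) for j, pj in enumerate(p)]

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def clip_by_norm(g, max_norm):
    # grad-norm clipping: rescale g so that ||g|| <= max_norm, direction unchanged
    n = norm(g)
    scale = min(1.0, max_norm / n) if n > 0 else 1.0
    return [x * scale for x in g]

def cosine(a, b):
    return sum(x * y for x, y in zip(a, b)) / (norm(a) * norm(b))

z = [2.0, 0.5, -1.0]            # current policy logits (hypothetical)
ref = softmax([0.0, 0.0, 0.0])  # reference policy: uniform (hypothetical)
beta = 0.5                      # kl coefficient (hypothetical)

g_pg = pg_grad_logits(z, action=0, advantage=1.0)
g_clipped = clip_by_norm(g_pg, max_norm=0.1)
g_with_kl = [a + beta * b for a, b in zip(g_pg, kl_grad_logits(z, ref))]

print(cosine(g_pg, g_clipped))  # ~1.0: clipping keeps the direction, only shrinks the step
print(cosine(g_pg, g_with_kl))  # below 1.0: the kl term bends the step toward the reference
print(kl_grad_logits([0.0, 0.0, 0.0], ref))  # all zeros: no pull once the policy matches the reference
```

in this toy, clipping only rescales the step and never looks at the reference policy, while the kl term changes the step's direction and vanishes once the policy matches the reference. it doesn't settle the question for real grpo training, but it suggests the two aren't interchangeable by construction.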

notes on the deepseek-math paper:
- deepseek-math-base -> model pretrained on code and math data
- deepseekmath-instruct 7B -> sft using CoT, PoT and tool-integrated reasoning
- deepseekmath-rl -> grpo on gsm8k and math questions
- rl increases the probability of the correct response
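grpo drops the critic and scores each sampled answer against the other answers to the same question: with outcome rewards, each reward is normalized by the group's mean and std. a minimal python sketch, assuming a hypothetical binary reward (1 = correct, 0 = wrong), population std, and a small eps to avoid dividing by zero (implementations differ on the last two).

```python
import statistics

def group_relative_advantages(rewards, eps=1e-6):
    # advantage_i = (r_i - mean(r)) / (std(r) + eps), shared by every token of answer i
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std: an assumption
    return [(r - mean) / (std + eps) for r in rewards]

# one gsm8k-style question, a group of 4 sampled answers (hypothetical rewards)
rewards = [1.0, 0.0, 0.0, 1.0]
adv = group_relative_advantages(rewards)
print(adv)  # roughly [1, -1, -1, 1]: correct answers get pushed up, wrong ones down

# if every answer in the group is right (or every one is wrong),
# all advantages are 0 and the group gives no policy-gradient signal
print(group_relative_advantages([1.0, 1.0, 1.0, 1.0]))
```

this is one way to see the "rl increases the probability of the correct response" note: only answers that beat their group average get positive advantage, so their log-probability is what the update raises.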

winter arc #1 (9.5hrs):
-> read about context & sequence parallelism
-> failed to impl ring attn
-> binge-watched @willccbb vids on yt
-> went deep into deepseek-r1 and watched some vids
-> posted a tweet on a gpt2 impl in triton and got a like from karpathy
-> overall not a bad day!!

i love how @dwarkesh_sp is trying to convince richard sutton that next token prediction is kinda like rl

just saw @elliotarledge's latest yt vid. man, the way you spoke about your highs and lows was so deep and thoughtful. just wanted to say you're an absolute inspiration. good things will definitely happen soon brother!! keep inspiring us with your work and time lapses!!

why chatgpt is better than google:
-> compression: quick answers + it stores a lot of information. the compression ratio is very good.
-> context: it can identify your problems/questions even when they aren't on the internet and answer them specifically.

man, these llms are so good without any context. i wonder what happens if we give them the right context.

this is not what i expected humans vs robots to look like

sam altman has a way of answering a question on a podcast without actually answering it


