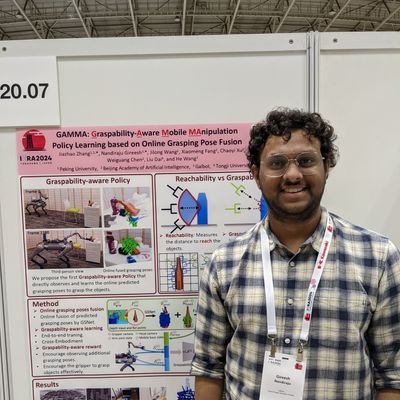

Jishnu Jaykumar Padalunkal

@jishnu_jaykumar

Research Intern @rai_inst | PhD Candidate | Research focus on improving #RobotPerception, #RobotLearning | Previously @iitkgp @NVIDIA @IISc @iiitvadodarasm


Excited to begin my #Fall25 #ResearchInternship at @rai_inst 🍁. I’ll be working on #grasping and #manipulation #policylearning using #tactilesensing — combining #robotics, #perception, and #control to bring touch into robotic #decisionmaking 🤖🖐️

This drone becomes a flying manipulator! 🥏 Researchers at The University of Tokyo developed this aerial robot. Built with four pairs of ducted fans linked by actuated joints, Dragon can reshape itself mid-flight. This allows it to grasp objects and perform tasks…

Current robotic systems face constraints in loco-manipulation due to task-specific designs and fixed joint configurations, limiting adaptability in diverse environments. The ReLIC (Reinforcement Learning for Interlimb Coordination) framework being presented at #CoRL2025…

Getting robots to move swiftly and effortlessly through the unstructured world is more challenging than it seems. The RAI Institute is building robots that think, plan, and move like athletes: robots with the mobility and intelligence of a professional bike trial rider that can…

We wrapped up the spring semester with a group dinner. Best wishes to the graduates from @IRVLUTD !!

Slow start, but the pace is heating up 🔥🤖. Grateful to hit 50 citations on #GoogleScholar — just the beginning of an exciting #robotics journey! 🚀📚 #LateBloomer utd.link/jpgs @IRVLUTD @UT_Dallas

Unitree B2-W Talent Awakening! 🥳 One year after mass production kicked off, Unitree’s B2-W Industrial Wheel has been upgraded with more exciting capabilities. Please always use robots safely and responsibly. #Unitree #Quadruped #Robotdog #Parkour #EmbodiedAI #IndustrialRobot…

Wrapped up my 2nd season as a TA for Dr. @YuXiang_IRVL's robotics course. From being a student in the first cohort to TA'ing for the 3rd cohort, it’s been an amazing journey of growth and learning! Check out the course at labs.utdallas.edu/irvl/courses/f… #Robotics #utdallas @IRVLUTD

Finished my robotics course this semester. If you want to learn some basics in robotics (configuration space, rigid-body motion, kinematics, dynamics, motion planning and control), feel free to check it out: labs.utdallas.edu/irvl/courses/f… The homework is designed to teach ROS programming.

Over the past 3 years, I had the chance to present some exciting work from the literature at @UT_Dallas and @IRVLUTD, ranging from the classic use of superpixels in computational geometry to the latest in action pretraining for robotics. Check out utd.link/jpad-ppt #Robotics #AI #Research

🤖 What if you could train robots with just 3 human demonstrations instead of hundreds? 👀SkillGen from #NVIDIAResearch makes it possible. This breakthrough in robot learning generates high-quality training data from minimal human input. ✅ Generates 100+ demos from only 3…

Another fascinating area of work: teaching skills from humans to robots. Stay tuned—something along similar lines is in the works on our end! #robotics

I don’t know if we live in a Matrix, but I know for sure that robots will spend most of their lives in simulation. Let machines train machines. I’m excited to introduce DexMimicGen, a massive-scale synthetic data generator that enables a humanoid robot to learn complex skills…

Taking a break from research and celebrating the festivities is a must! 🎉 Loved building this demo and watching people interact with our robot, powered by our research tools. 🤖 Happy #Halloween @IRVLUTD @UT_Dallas @UTDallasNews Stay tuned for more fun stuff.

Paving the way, @AIatMeta is always a step ahead.

Today at Meta FAIR we’re announcing three new cutting-edge developments in robotics and touch perception — and releasing a collection of artifacts to empower the community to build on this work. Details on all of this new work ➡️ go.fb.me/mmmu9d 1️⃣ Meta Sparsh is the…

MBZUAI has released Nanda, the world’s most advanced open source Hindi large language model (LLM), developed by the University’s Institute of Foundation Models (IFM) in partnership with Inception (a @G42ai company) and @CerebrasSystems. The release marks a significant milestone…

Not every foundation model needs to be gigantic. We trained a 1.5M-parameter neural network to control the body of a humanoid robot. It takes a lot of subconscious processing for us humans to walk, maintain balance, and maneuver our arms and legs into desired positions. We…

I really like the design of Fetch for research. It's a pity that it is no longer manufactured.
- It supports navigation and manipulation.
- It has a built-in camera (no need to calibrate).
- We can also use a PS4 controller for teleoperation.

(4X video by @saihaneesh_allu @jis_padalunkal)

🌟Curious how robots can explore the unknown and understand objects around them? Meet 'AutoX-SemMap'—a real-time system for autonomous semantic mapping! @IRVLUTD @YuXiang_IRVL. Learn more: utd.link/AutoX-SemMap. Thanks to @jis_padalunkal for his support. #Robotics (1/n)

