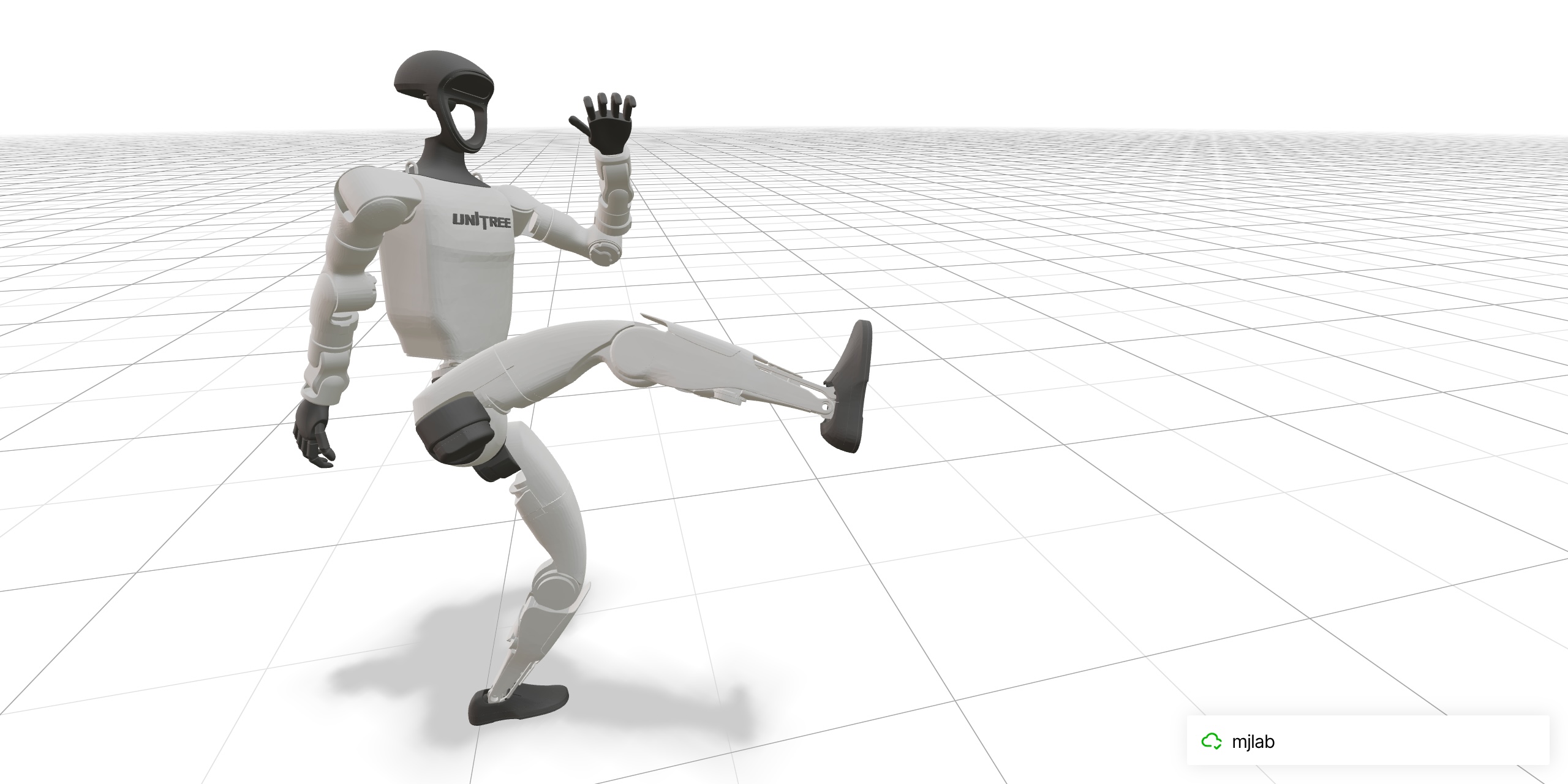

It was a joy bringing Jason’s signature spin-kick to life on the @UnitreeRobotics G1. We trained it in mjlab with the BeyondMimic recipe but had issues on hardware last night (the IMU gyro was saturating). One more sim-tuning pass and we nailed it today. With @qiayuanliao and…

Implementing motion imitation methods involves lots of nuisances. Not many codebases get all the details right. So, we're excited to release MimicKit! github.com/xbpeng/MimicKit: a framework with high-quality implementations of our methods: DeepMimic, AMP, ASE, ADD, and more to come!

Trained my first dancing policy and deployed it with mjlab this week! Thank you for the incredible work!

We open-sourced the full pipeline! Data conversion from MimicKit, training recipe, pretrained checkpoint, and deployment instructions. Train your own spin kick with mjlab: github.com/mujocolab/g1_s…

We've also upgraded the viser viewer in mjlab. You can now track your robot, visualize contact points and forces, and change the FOV. This wouldn't have been possible without @brenthyi!

Underrated perk of strict typing: agents get instant feedback from the type checker, so they self-correct and give me correct, runnable code way more often :)

I forgot to mention: mjlab is fully typed and passes type checking with pyright. Been super helpful for catching bugs before running any code.

Had a great time playing with and integrating mjlab into HDMI (github.com/LeCAR-Lab/HDMI…). Learned a lot of fancy MuJoCo tricks from @kevin_zakka's super clean code. Love seeing parallel envs in MuJoCo! Also feel free to play with our sim2real code (github.com/EGalahad/sim2r…)!

Our experience using mjlab has been quite similar: ~2x speedup over Isaac Lab when using objects 🙂

Performance-wise, mjlab is about 2x faster than Isaac Lab (the green and purple lines are mjlab). Without objects, Isaac Lab and mjlab perform about the same in my case, but Isaac Lab slows down by 2x when objects are included.

We're growing the uv team! uv is one of the fastest-growing developer tools ever, built and maintained by a small, talented team :) Write Rust, for Python. Full-time, open source, remote. We're very well-funded and in it for the long haul. Link in next tweet 👇 or DM me here.

Compared to the JAX backend, it's much faster. There are things you can't even compile with MJX, and they're no problem for mjwarp. For example, mjlab supports rough terrains (not possible in MJX):

Of course mjlab supports the native MuJoCo viewer. Makes it a breeze to pause, slow down, inspect contacts, perturb the robot, etc. There's also a brand new pane for reward visualization :)

BTW, the robot falls over after the cartwheel because the reference motion ends. Also all these motion imitation policies were trained with 0 domain randomization :)

I'm super excited to announce mjlab today! mjlab = Isaac Lab's APIs + best-in-class MuJoCo physics + massively parallel GPU acceleration. Built directly on MuJoCo Warp with the abstractions you love.


