Bala

@nbk_code

ML Scientist

💥 Today we say “hello world” from OpenAI for Science. We’re releasing a paper showing 13 examples of GPT-5 accelerating scientific research across math, physics, biology, and materials science. In 4 of these examples, GPT-5 helped find proofs of previously unsolved problems.

3 years ago we could showcase AI's frontier w. a unicorn drawing. Today we do so w. AI outputs touching the scientific frontier: cdn.openai.com/pdf/4a25f921-e… Use the doc to judge for yourself the status of AI-aided science acceleration, and hopefully be inspired by a couple examples!

MMaDA-Parallel: Multimodal Large Diffusion Language Models for Thinking-Aware Editing and Generation

Tired of going back to the original papers again and again? Our monograph: a systematic and fundamental recipe you can rely on! 📘 We’re excited to release 《The Principles of Diffusion Models》, with @DrYangSong, @gimdong58085414, @mittu1204, and @StefanoErmon. It traces the core…

Diffusion LLMs explained by the man himself - @StefanoErmon youtube.com/watch?v=BaZT4a…


Diffusion LLMs - The Fastest LLMs Ever Built | Stefano Ermon,...

Diffusion LMs are more data-efficient than autoregressive (AR) LMs, and the difference is insane. Due to their training objective, DLMs basically have built-in Monte Carlo augmentation. So when unique data is limited, DLMs would always surpass AR models and are harder to overfit!
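
A toy sketch of what "built-in Monte Carlo augmentation" refers to, assuming a masked-diffusion objective of the LLaDA/MDLM kind: each time a sequence is seen, a fresh masking level t ~ U(0, 1) is drawn and each token is masked with probability t, so repeated epochs over the same text yield different training views. A next-token (AR) objective sees identical inputs and targets every time. The `MASK` string and function names are hypothetical, and the 1/t loss weighting such objectives use is omitted.

```python
import random
from typing import List, Sequence, Tuple

MASK = "[MASK]"  # hypothetical mask-token string for this toy example


def masked_diffusion_view(
    tokens: Sequence[str], rng: random.Random
) -> Tuple[float, List[str], List[int]]:
    """Draw one noisy training view of `tokens`, masked-diffusion style.

    A masking level t ~ U(0, 1) is sampled, then each token is replaced by
    MASK independently with probability t. The model would be trained to
    recover the original tokens at the masked positions (`targets`).
    """
    t = rng.random()
    noisy = [MASK if rng.random() < t else tok for tok in tokens]
    targets = [i for i, tok in enumerate(noisy) if tok == MASK]
    return t, noisy, targets


def ar_view(tokens: Sequence[str]) -> Tuple[List[str], List[str]]:
    """Next-token prediction: inputs and targets are fixed for a given sequence."""
    return list(tokens[:-1]), list(tokens[1:])


if __name__ == "__main__":
    sentence = "the cat sat on the mat".split()
    rng = random.Random(0)
    # Ten passes over one sentence: the diffusion views almost surely differ...
    diffusion_views = {tuple(masked_diffusion_view(sentence, rng)[1]) for _ in range(10)}
    # ...while the autoregressive view never changes.
    ar_views = {tuple(map(tuple, ar_view(sentence))) for _ in range(10)}
    print(len(diffusion_views), "distinct diffusion views vs", len(ar_views), "AR view")
```

The point of the sketch is only the sampling structure; whether this translates into better data efficiency is the tweet's empirical claim, not something the code demonstrates.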

Massive update for AI Engineers! Training diffusion models just got a lot easier. dLLM is an open-source library that does for diffusion models what Hugging Face did for transformers. Here's why this matters: Traditional autoregressive models generate text left-to-right, one…

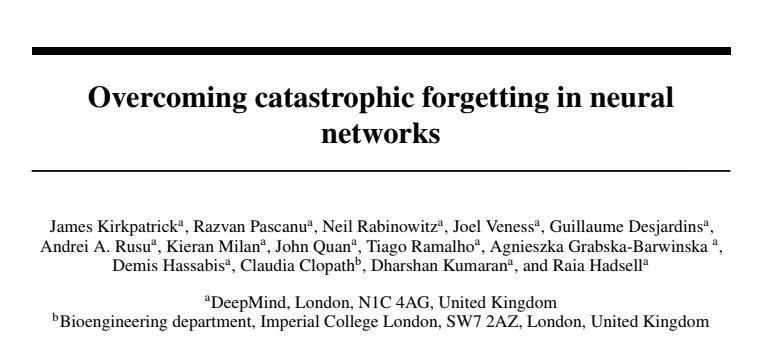

People working on continual learning should take notice of this fairly old paper: Elastic Weight Consolidation. The principle is simple: 1. Take a calibration dataset. 2. Do a forward and a backward pass to get gradients. 3. Compute Fisher information. 4. During real…
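
A minimal sketch of the EWC steps listed above, assuming a tiny logistic-regression model so gradients can be written by hand. It uses the empirical diagonal Fisher (mean of squared per-example gradients of the log-likelihood at the observed labels), which is one common approximation and an assumption here. Step 4, cut off in the post, is filled in with the standard EWC quadratic penalty (λ/2) Σ F_i (θ_i − θ*_i)², added to the new task's loss. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]  # (features, label in {0, 1})


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def log_lik_grad(w: Sequence[float], x: Sequence[float], y: int) -> List[float]:
    """Gradient of log p(y | x, w) for logistic regression: (y - p) * x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    return [(y - p) * xi for xi in x]


def diagonal_fisher(w: Sequence[float], calib: Sequence[Example]) -> List[float]:
    """Empirical diagonal Fisher on a calibration set: mean squared gradient per weight."""
    fisher = [0.0] * len(w)
    for x, y in calib:
        for i, g in enumerate(log_lik_grad(w, x, y)):
            fisher[i] += g * g
    return [f / len(calib) for f in fisher]


def ewc_penalty(
    w: Sequence[float], w_star: Sequence[float], fisher: Sequence[float], lam: float
) -> float:
    """(lam / 2) * sum_i F_i * (w_i - w*_i)^2, where w_star are the old-task weights."""
    return 0.5 * lam * sum(f * (a - b) ** 2 for f, a, b in zip(fisher, w, w_star))


if __name__ == "__main__":
    w_old = [0.0, 0.0]
    F = diagonal_fisher(w_old, [([1.0, 2.0], 1)])
    print(F)  # [0.25, 1.0]
    # Moving the weight with the larger Fisher value costs more:
    print(ewc_penalty([1.0, 0.0], w_old, F, lam=2.0))  # 0.25
    print(ewc_penalty([0.0, 1.0], w_old, F, lam=2.0))  # 1.0
```

Weights the old task is sensitive to (large F_i) are anchored strongly, while the rest stay free to adapt to the new task.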

🚀 Hello, Kimi K2 Thinking! The Open-Source Thinking Agent Model is here. 🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%) 🔹 Executes up to 200 – 300 sequential tool calls without human interference 🔹 Excels in reasoning, agentic search, and coding 🔹 256K context window Built…

We are excited to introduce Mercury, the first commercial-grade diffusion large language model (dLLM)! dLLMs push the frontier of intelligence and speed with parallel, coarse-to-fine text generation.
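
The post does not describe Mercury's sampler, so the following is only a generic sketch of parallel decoding for masked diffusion LMs, assuming a confidence-based unmasking rule (similar in spirit to schemes used by open masked-diffusion models). The sequence starts fully masked; at each step the denoiser predicts every position at once, and the most confident guesses are committed. `predict` is a hypothetical stand-in for the model.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical denoiser: one (token, confidence) guess per position, all in one call.
Predictor = Callable[[Sequence[Optional[str]]], List[Tuple[str, float]]]


def parallel_unmask(length: int, predict: Predictor, steps: int) -> List[List[Optional[str]]]:
    """Start fully masked; each step commits the most confident guesses in parallel.

    Returns the sequence after every step so the gradual fill-in is visible.
    """
    seq: List[Optional[str]] = [None] * length  # None marks a masked position
    history: List[List[Optional[str]]] = []
    for step in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok is None]
        if not masked:
            break
        guesses = predict(seq)  # every position predicted at once
        # Commit enough positions per step that everything is filled by the last step.
        k = math.ceil(len(masked) / (steps - step))
        for i in sorted(masked, key=lambda j: guesses[j][1], reverse=True)[:k]:
            seq[i] = guesses[i][0]
        history.append(list(seq))
    return history


# Toy predictor that ignores its input; a real denoiser would condition on committed tokens.
target = ["diffusion", "models", "write", "text", "in", "parallel"]
conf = [0.9, 0.5, 0.4, 0.8, 0.3, 0.7]


def toy_predict(seq: Sequence[Optional[str]]) -> List[Tuple[str, float]]:
    return list(zip(target, conf))


history = parallel_unmask(len(target), toy_predict, steps=3)
for state in history:
    print(state)
```

Six tokens are produced in three model calls instead of six; an autoregressive decoder would need one call per token.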

Training LLMs end to end is hard. Very excited to share our new blog (book?) that covers the full pipeline: pre-training, post-training and infra. 200+ pages of what worked, what didn’t, and how to make it run reliably huggingface.co/spaces/Hugging…

wrote a blog on KV caching. link in comments.

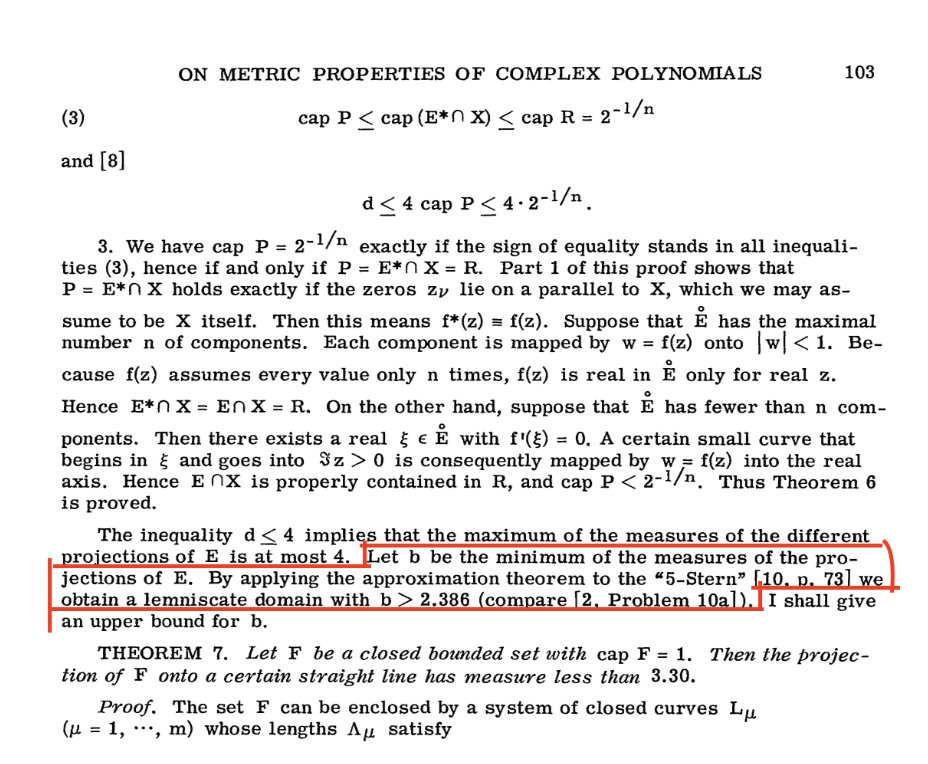

Sebastien Bubeck just cleared the air and honestly, it makes GPT-5’s achievement even more astonishing. No, GPT-5 didn’t magically solve a new Erdős problem. What it did was arguably harder to appreciate: it rediscovered a long-forgotten mathematical link, buried in obscure…

My posts last week created a lot of unnecessary confusion*, so today I would like to do a deep dive on one example to explain why I was so excited. In short, it’s not about AIs discovering new results on their own, but rather how tools like GPT-5 can help researchers navigate,…

I used ChatGPT to solve an open problem in convex optimization. *Part I* (1/N)

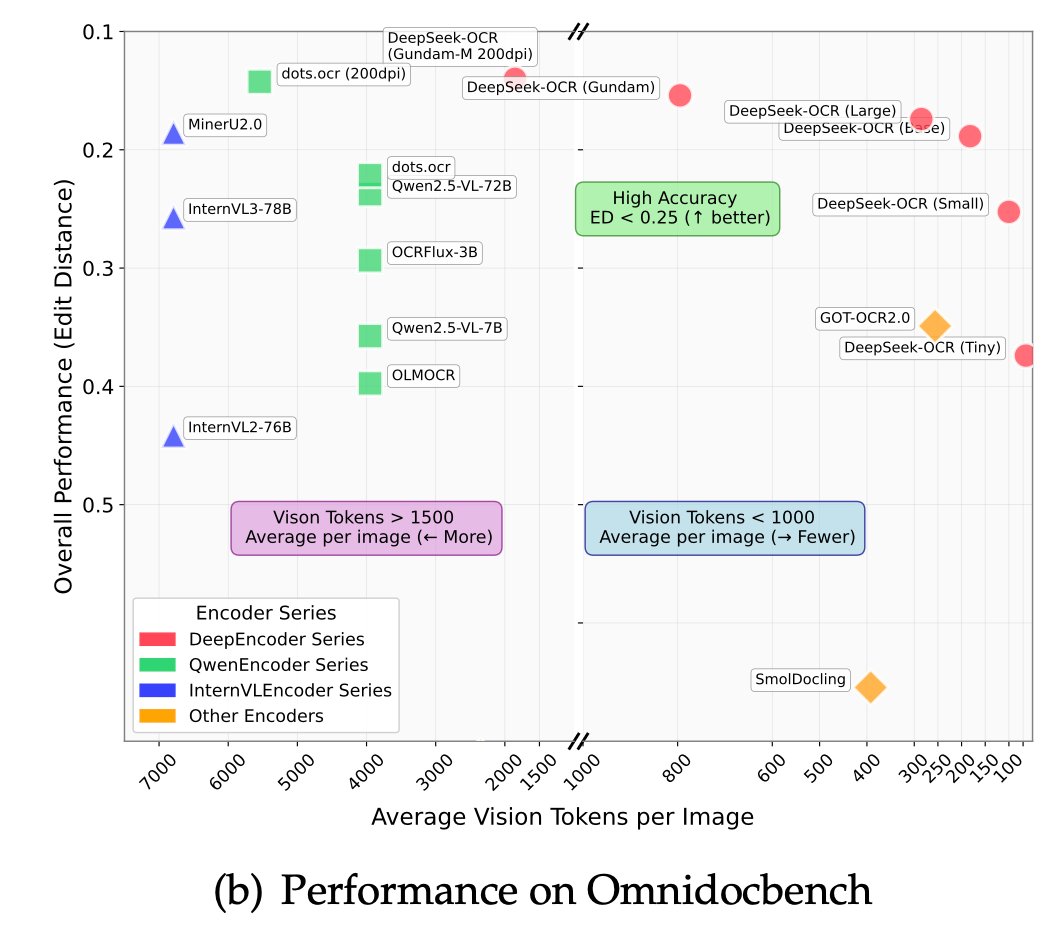

I quite like the new DeepSeek-OCR paper. It's a good OCR model (maybe a bit worse than dots), and yes data collection etc., but anyway it doesn't matter. The more interesting part for me (esp as a computer vision at heart who is temporarily masquerading as a natural language…

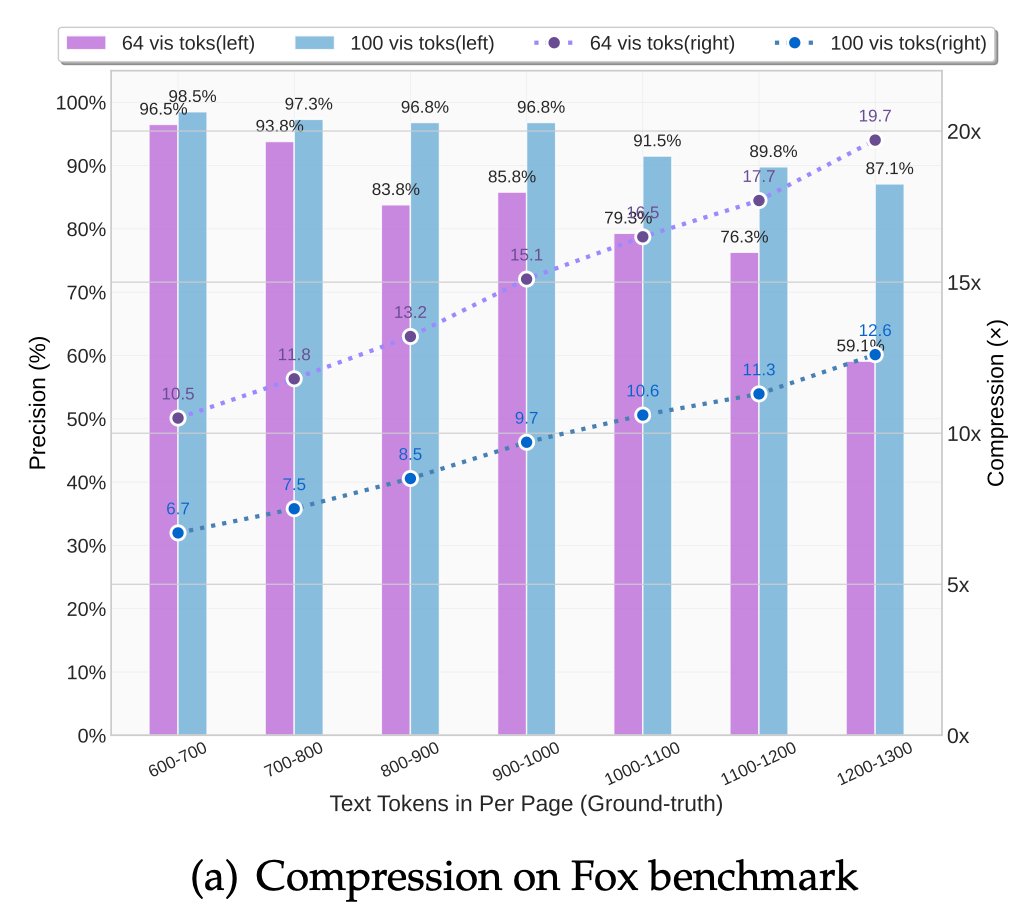

🚀 DeepSeek-OCR — the new frontier of OCR from @deepseek_ai , exploring optical context compression for LLMs, is running blazingly fast on vLLM ⚡ (~2500 tokens/s on A100-40G) — powered by vllm==0.8.5 for day-0 model support. 🧠 Compresses visual contexts up to 20× while keeping…

Replicate IMO-Gold in less than 500 lines: gist.github.com/faabian/39d057… The prover-verifier workflow from Huang & Yang: Winning Gold at IMO 2025 with a Model-Agnostic Verification-and-Refinement Pipeline (arxiv.org/abs/2507.15855), original code at github.com/lyang36/IMO25/
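
A rough sketch of the shape of a prover-verifier loop like the one referenced above: generate a candidate proof, verify it repeatedly, and refine it using the verifier's bug report. This is not the gist's or the paper's code. `generate`, `verify`, and `refine` are hypothetical callables (in practice each would wrap an LLM call with its own prompt), and the acceptance thresholds `required_passes` and `max_failures` are illustrative assumptions; check the paper and the linked repository for the values actually used.

```python
from typing import Callable, Optional, Tuple

# Hypothetical callables; each would wrap a model call in practice.
Generate = Callable[[str], str]                   # problem -> candidate proof
Verify = Callable[[str, str], Tuple[bool, str]]   # (problem, proof) -> (passed, bug report)
Refine = Callable[[str, str, str], str]           # (problem, proof, report) -> revised proof


def prove(problem: str, generate: Generate, verify: Verify, refine: Refine,
          required_passes: int = 5, max_failures: int = 10) -> Optional[str]:
    """Generate a proof, then alternate verification and refinement.

    Accept after `required_passes` consecutive clean verifications; give up
    (return None) after `max_failures` failed verifications.
    """
    proof = generate(problem)
    passes, failures = 0, 0
    while failures < max_failures:
        ok, report = verify(problem, proof)
        if ok:
            passes += 1
            if passes >= required_passes:
                return proof
        else:
            failures += 1
            passes = 0  # any failure resets the streak
            proof = refine(problem, proof, report)
    return None


if __name__ == "__main__":
    def toy_verify(problem: str, proof: str) -> Tuple[bool, str]:
        return proof == "fixed", "" if proof == "fixed" else "step 2 is unjustified"

    print(prove("toy problem", lambda p: "draft", toy_verify, lambda p, s, r: "fixed"))  # fixed
```

Requiring several consecutive passes guards against a single lenient verification accepting a flawed proof.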

Excited to release new repo: nanochat! (it's among the most unhinged I've written). Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,…

