Wenting Zhao

@wzhao_nlp

reasoning & llms @Alibaba_Qwen Opinions are my own

Kimi AMA on K2 Thinking: 1. $4.6M training cost is not an official number 2. Trained on H800s (nerfed H100s) 3. KDA (Kimi Delta Attention) hybrids with NoPE MLA perform better than full MLA with RoPE 4. Muon scales well to 1T parameters. “there are tens of optimizers and…

This is such a cool talk. The more I work on LMs, the more I feel the recipe really is just as simple as 10% high-school ML work + 90% infra work to scale it up, which I find frustrating and fascinating at the same time 🥺🤩

Talk at Ray Summit on "Building Cursor Composer." Overview of the work from our research team. youtube.com/watch?v=md8D8e…

Ray Summit 2025 Keynote: Building Cursor Composer with Sasha Rush

Team Eric 🫡

.@ericzelikman & 7th Googler @gharik are raising $1b for an AI lab called Humans&. I'm told Eric's paper STaR was an inspiration for OpenAI's reasoning models, and that he was also one of the star AI researchers labs fought over. forbes.com/sites/annatong…

Many people are confused by MiniMax’s recent return to full attention, especially since it was the first large-scale pivot toward hybrid linear attention, and by Kimi’s later adoption of hybrid linear variants (as well as earlier attempts by Qwen3-Next, or Qwen3.5). I actually…

MiniMax M2 Tech Blogs on Huggingface: 1. huggingface.co/blog/MiniMax-A… 2. huggingface.co/blog/MiniMax-A… 3. huggingface.co/blog/MiniMax-A…

MiniMax M2 Tech Blog 3: Why Did M2 End Up as a Full Attention Model? On behalf of pre-training lead Haohai Sun. (zhihu.com/question/19653…) I. Introduction As the lead of MiniMax-M2 pretrain, I've been getting many queries from the community on "Why did you turn back the clock…

If you happen to be in Shanghai next Monday, come hang out with us 🤩

We will have a pre-EMNLP workshop about LLMs next Monday at the @nyushanghai campus! Speakers are working on diverse and fantastic problems; really looking forward to it! We also provide a Zoom link for those who cannot join in person :) (see poster)

One personal reflection is how interesting a challenge RL is. Unlike other ML systems, you can't abstract much from the full-scale system. Roughly, we co-designed this project and Cursor together in order to allow running the agent at the necessary scale.

Tired of going back to the original papers again and again? Our monograph: a systematic and fundamental recipe you can rely on! 📘 We’re excited to release 《The Principles of Diffusion Models》— with @DrYangSong, @gimdong58085414, @mittu1204, and @StefanoErmon. It traces the core…

The question I got asked most frequently during COLM this year was what research questions can be studied in academia that will also be relevant to frontier labs. So I’m making a talk for this. What topics or areas should I cover? RL, eval, pretraining?

Our latest post explores on-policy distillation, a training approach that unites the error-correcting relevance of RL with the reward density of SFT. When training it for math reasoning and as an internal chat assistant, we find that on-policy distillation can outperform other…
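To make "reward density" concrete: one common way to realize on-policy distillation, assumed here rather than quoted from the truncated post, is to let the student sample a sequence and then score every sampled token with the teacher, using the per-token log-probability gap (a single-sample estimate of negative reverse KL) as that token's reward. The sketch below contrasts this with a sparse RL reward that only arrives at the last token. The probabilities and the function names `dense_distillation_rewards` and `sparse_rl_rewards` are hypothetical illustrations.

```python
import math

def dense_distillation_rewards(student_logprobs, teacher_logprobs):
    """Per-token reward = log p_teacher(x_t) - log p_student(x_t).

    Assumption: this is the negative of a one-sample reverse-KL estimate,
    so every token the student generated gets its own learning signal.
    """
    return [t - s for s, t in zip(student_logprobs, teacher_logprobs)]

def sparse_rl_rewards(num_tokens, final_reward):
    """Outcome-style RL: a single scalar reward only at the final token."""
    return [0.0] * (num_tokens - 1) + [final_reward]

# Hypothetical probabilities that student and teacher assign to the
# three tokens the *student* sampled (on-policy: the student's own rollout).
student_probs = [0.9, 0.5, 0.2]
teacher_probs = [0.9, 0.8, 0.05]

dense = dense_distillation_rewards(
    [math.log(p) for p in student_probs],
    [math.log(p) for p in teacher_probs],
)
sparse = sparse_rl_rewards(len(student_probs), final_reward=1.0)
print("dense :", [round(r, 3) for r in dense])
print("sparse:", sparse)
```

The dense signal pinpoints which token went wrong (the third, which the teacher considers unlikely), while the sparse reward spreads one number over the whole sequence.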

it’s tokenization again! 🤯 did you know tokenize(detokenize(token_ids)) ≠ token_ids? RL researchers from Agent Lightning coined the term Retokenization Drift — a subtle mismatch between what your model generated and what your trainer thinks it generated. why? because most…
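A minimal sketch of how tokenize(detokenize(token_ids)) can differ from token_ids, assuming a toy greedy longest-match tokenizer with a hypothetical four-entry vocabulary (not any real BPE). If the model happens to emit "a" and "b" as two separate tokens, the trainer re-encoding the decoded text sees the single merged token "ab" instead.

```python
# Hypothetical toy vocabulary; real tokenizers (BPE, SentencePiece) differ in detail
VOCAB = {"a": 0, "b": 1, "ab": 2, " ": 3}
ID_TO_TOKEN = {i: t for t, i in VOCAB.items()}

def detokenize(token_ids):
    """Concatenate token strings back into text."""
    return "".join(ID_TO_TOKEN[i] for i in token_ids)

def tokenize(text):
    """Greedy longest-match encoding: always take the longest vocab entry."""
    ids, i = [], 0
    while i < len(text):
        for length in range(len(text) - i, 0, -1):
            piece = text[i:i + length]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                i += length
                break
        else:
            raise ValueError(f"untokenizable character: {text[i]!r}")
    return ids

generated = [0, 1]               # the model sampled "a", then "b"
text = detokenize(generated)     # "ab"
retokenized = tokenize(text)     # re-encoded by the trainer
print(generated, repr(text), retokenized)
```

Both id sequences decode to the same string, but the trainer would compute log-probabilities for a token sequence the model never produced. One natural mitigation (an assumption here, not taken from the thread) is to pass the sampled token ids straight to the trainer instead of re-encoding text.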

Let's build the GPT Tokenizer

Below is a deep dive into why self play works for two-player zero-sum (2p0s) games like Go/Poker/Starcraft but is so much harder to use in "real world" domains. tl;dr: self play converges to minimax in 2p0s games, and minimax is really useful in those games. Every finite 2p0s…

Self play works so well in chess, go, and poker because those games are two-player zero-sum. That simplifies a lot of problems. The real world is messier, which is why we haven’t seen many successes from self play in LLMs yet. Btw @karpathy did great and I mostly agree with him!

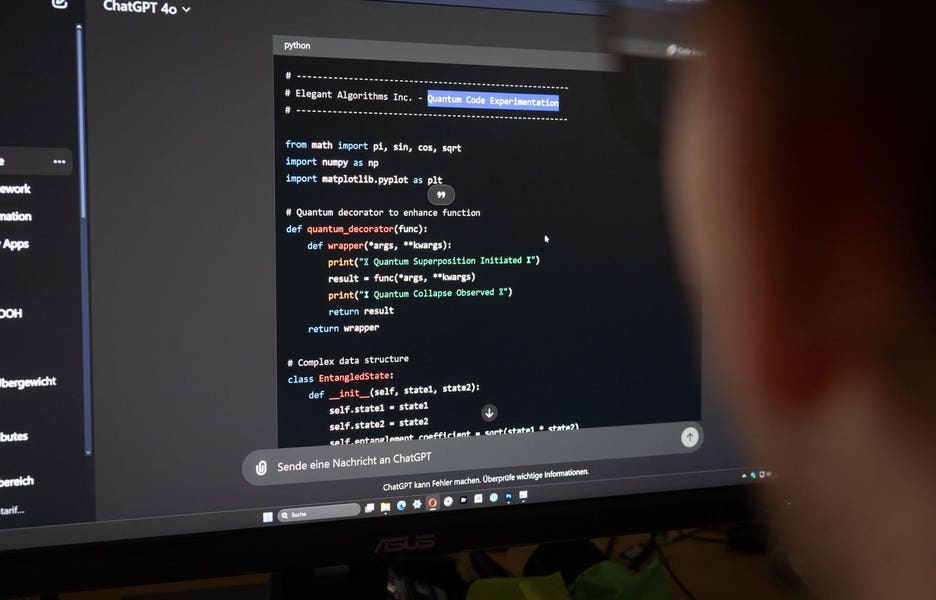
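To illustrate the claim that self-play converges to minimax in two-player zero-sum games, here is a small sketch on rock-paper-scissors. It uses regret matching, a swapped-in no-regret algorithm chosen for illustration (the thread does not say which algorithm it has in mind): both players update from the same rule, and their *average* strategies approach the minimax strategy (1/3, 1/3, 1/3). Exploitability, meaning how much a best response could gain against the averages, shrinks toward 0. The lopsided starting regrets are an arbitrary choice that moves the initial strategies away from equilibrium.

```python
# Row player's payoffs in rock-paper-scissors; the column player gets the negative.
PAYOFF = [
    [0, -1, 1],   # rock     vs rock, paper, scissors
    [1, 0, -1],   # paper
    [-1, 1, 0],   # scissors
]
N = 3

def strategy_from_regrets(regrets):
    """Regret matching: play actions in proportion to positive cumulative regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    return [p / total for p in positive] if total > 0 else [1.0 / N] * N

def row_action_values(col):
    """Expected payoff of each pure row action against the column mix."""
    return [sum(PAYOFF[i][j] * col[j] for j in range(N)) for i in range(N)]

def col_action_values(row):
    """Expected payoff of each pure column action (zero-sum: negated)."""
    return [-sum(PAYOFF[i][j] * row[i] for i in range(N)) for j in range(N)]

def self_play(iterations):
    row_regrets, col_regrets = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]
    row_sum, col_sum = [0.0] * N, [0.0] * N
    for _ in range(iterations):
        # Both players pick strategies simultaneously from current regrets.
        row = strategy_from_regrets(row_regrets)
        col = strategy_from_regrets(col_regrets)
        u_row, u_col = row_action_values(col), col_action_values(row)
        v_row = sum(p * u for p, u in zip(row, u_row))
        v_col = sum(p * u for p, u in zip(col, u_col))
        for a in range(N):
            row_regrets[a] += u_row[a] - v_row
            col_regrets[a] += u_col[a] - v_col
            row_sum[a] += row[a]
            col_sum[a] += col[a]
    return [x / iterations for x in row_sum], [x / iterations for x in col_sum]

def exploitability(row, col):
    """Total gain available to both players' best responses (game value is 0)."""
    return max(row_action_values(col)) + max(col_action_values(row))

avg_row, avg_col = self_play(20000)
print("average row strategy:", [round(p, 3) for p in avg_row])
print("exploitability:", round(exploitability(avg_row, avg_col), 4))
```

The guarantee here is specific to the zero-sum setting: low average regret for both players bounds the exploitability of the average strategies, which is exactly what fails to carry over to general-sum or many-player "real world" domains.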

People ask how to get hired by frontier labs? Understand and be able to produce every detail👇

Excited to release new repo: nanochat! (it's among the most unhinged I've written). Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,…

Talk from Wenting Zhao of Qwen on their plans during COLM. Seems like 1 word is the plan still: scaling training up! Let’s go.

I was really looking forward to being at #COLM2025 with Junyang, but the visa is taking forever 😞 Come ask me about Qwen: what it's like to work here, what features you’d like to see, what bugs you’d like us to fix, or anything else!

Sorry for missing COLM; my visa application failed. @wzhao_nlp will be there to represent Qwen, give a talk, and join the panel discussion on reasoning and agents!

Want to hear some hot takes about the future of language modeling, and share your takes too? Stop by the Visions of Language Modeling workshop at COLM on Friday, October 10 in room 519A! There will be over a dozen speakers working on all kinds of problems in modeling language and…

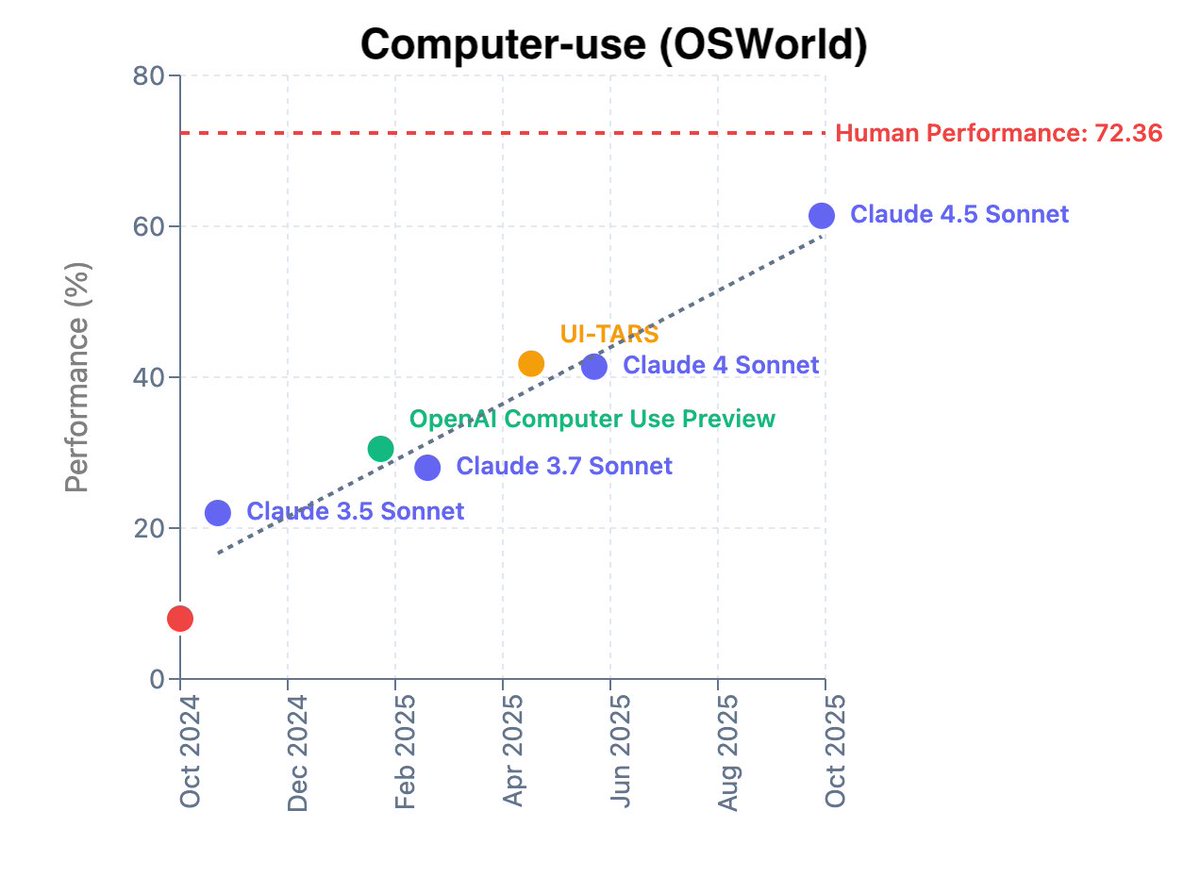

When @ethansdyer and I joined Anthropic last Dec and spearheaded the discovery team, we decided to focus on unlocking computer-use as a bottleneck for scientific discovery. It has been incredible to work on improving computer-use and witness the fast progress. In OSWorld for…

