#distributedtraining search results

Communication is often the bottleneck in distributed AI. Gensyn’s CheckFree offers a fault-tolerant pipeline method that yields up to 1.6× speedups with minimal convergence loss. @gensynai #AI #DistributedTraining

🚀 Introducing Asteron LM – a distributed training platform built for the ML community. PS: Website coming soooon !! #FutureOfAI #OpenAICommunity #DistributedTraining #Startup #buildinpublic #indiehackers #aiforall #DemocratizingAI

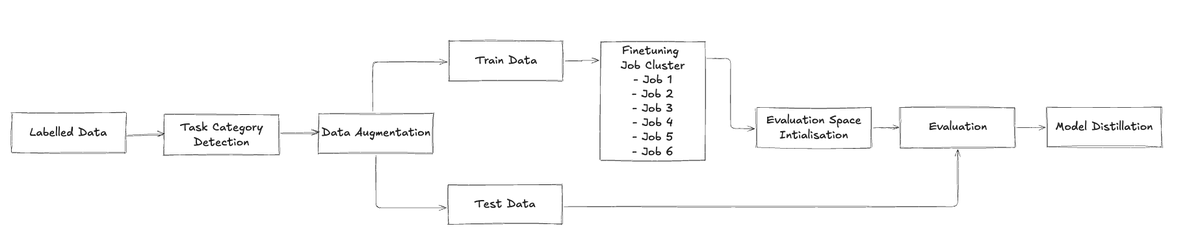

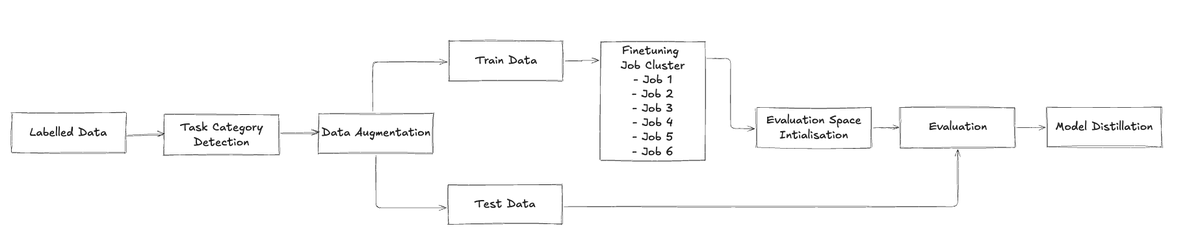

System Architecture Overview The system has two subsystems: • Data Processing – Manages data acquisition, enhancement, and quality checks. • #DistributedTraining – Oversees parallel fine-tuning, resource allocation, and evaluation. This division allows independent scaling,…

Data from block 3849132 (a second ago) --------------- 💎🚀 Subnet32 emission has changed from 2.587461% to 2.5954647% #bittensor #decentralizedAI #distributedtraining $tao #subnet @ai_detection

Elevate your #ML projects with our AI Studio’s Training Jobs—designed for seamless scalability and real-time monitoring. Support for popular frameworks like PyTorch, TensorFlow, and MPI ensures effortless #distributedtraining. Key features include: ✨ Distributed Training: Run…

RT Training BERT at a University dlvr.it/RnRh2T #distributedsystems #distributedtraining #opensource #deeplearning

Distributed Training in ICCLOUD's Layer 2 with Horovod + Mixed-Precision. Cuts training costs by 40%. Cost-effective training! #DistributedTraining #CostSaving

✅ Achievement Unlocked! Efficient #distributedtraining of #DeepSeek-R1:671B is realized on #openEuler 24.03! Built for the future of #AI, openEuler empowers #developers to push the boundaries of innovation. 🐋Full technical deep dive coming soon! @deepseek_ai #opensource #LLM

DeepSpeed makes distributed training feel like magic. What took 8 GPUs now runs on 2. Gradient accumulation and model sharding just work out of the box. #DeepSpeed #DistributedTraining #Python

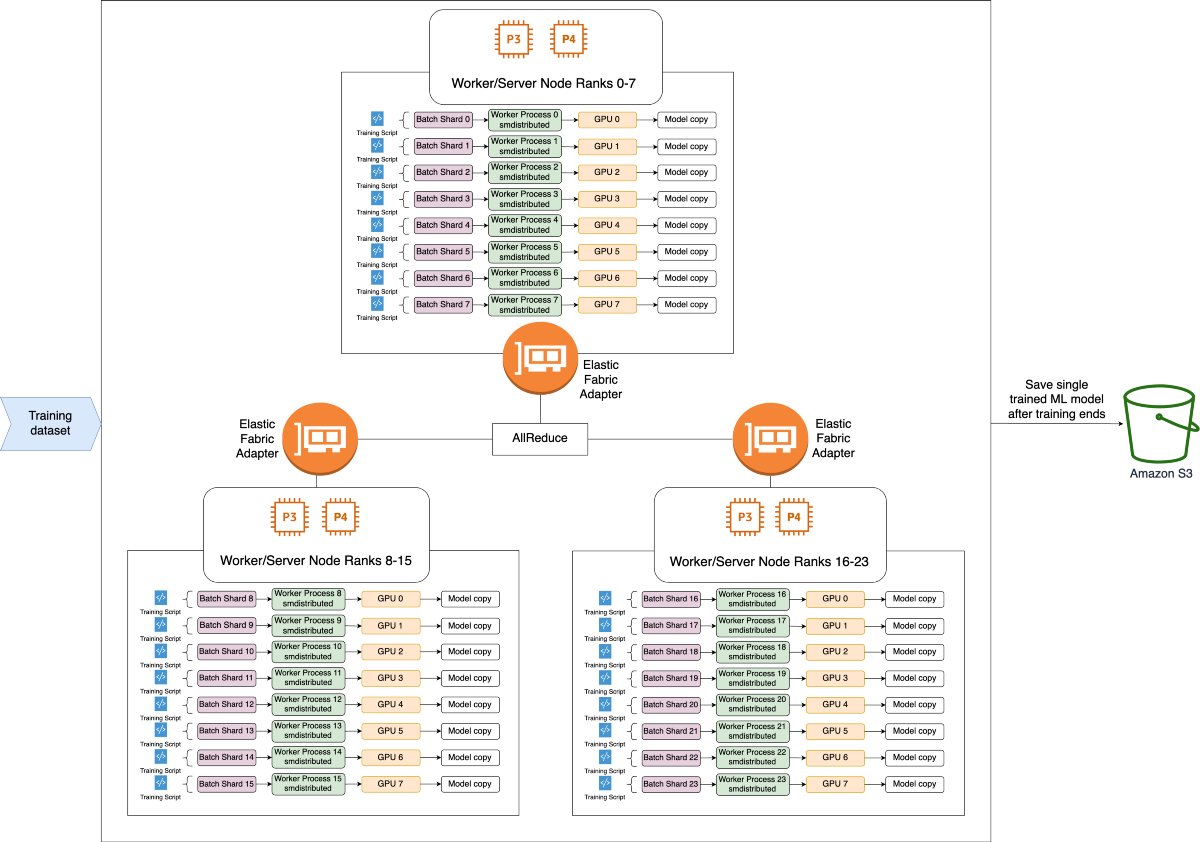

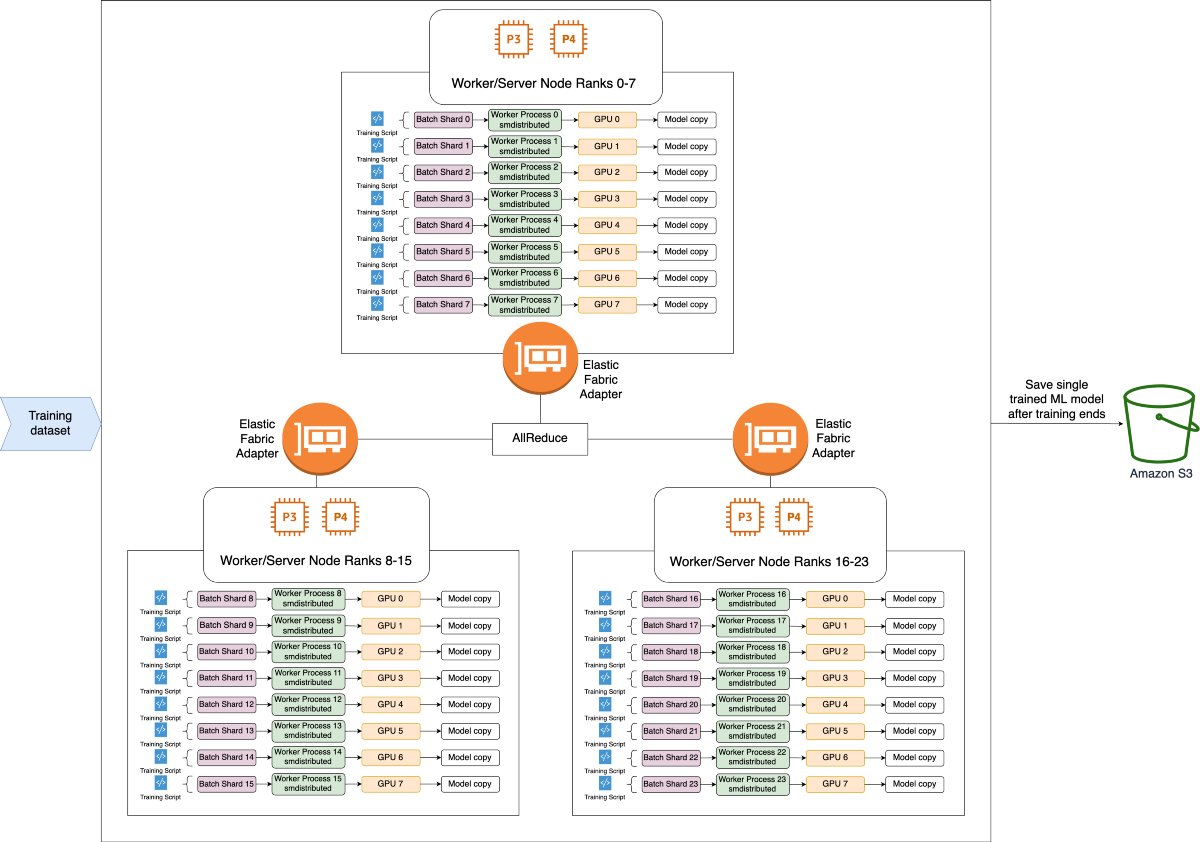

RT Single line distributed PyTorch training on AWS SageMaker dlvr.it/RjStv7 #distributedtraining #pytorch #sagemaker #datascience #deeplearning

Distributed training just got easier on Outerbounds! Now generally available, it supports multi-GPU setups with @torchrun and @metaflow_ray. Perfect for large data and models. Train efficiently, even at scale! 💡 #AI #DistributedTraining

![OuterboundsHQ's tweet image](https://pbs.twimg.com/media/GX2qQi9WcAALkzc.jpg)

RT Cost Efficient Distributed Training with Elastic Horovod and Amazon EC2 Spot Instances dlvr.it/RslZnC #elastic #amazonec2 #distributedtraining #horovod #deeplearning

RT Distributed Parallel Training — Model Parallel Training dlvr.it/SYG4xb #machinelearning #distributedtraining #largemodeltraining

Custom Slurm clusters deploy in 37 seconds. Orchestrate multi-node training jobs with H100s at $3.58/GPU/hr. Simplify distributed AI. get.runpod.io/oyksj6fqn1b4 #Slurm #DistributedTraining #HPC #AIatScale

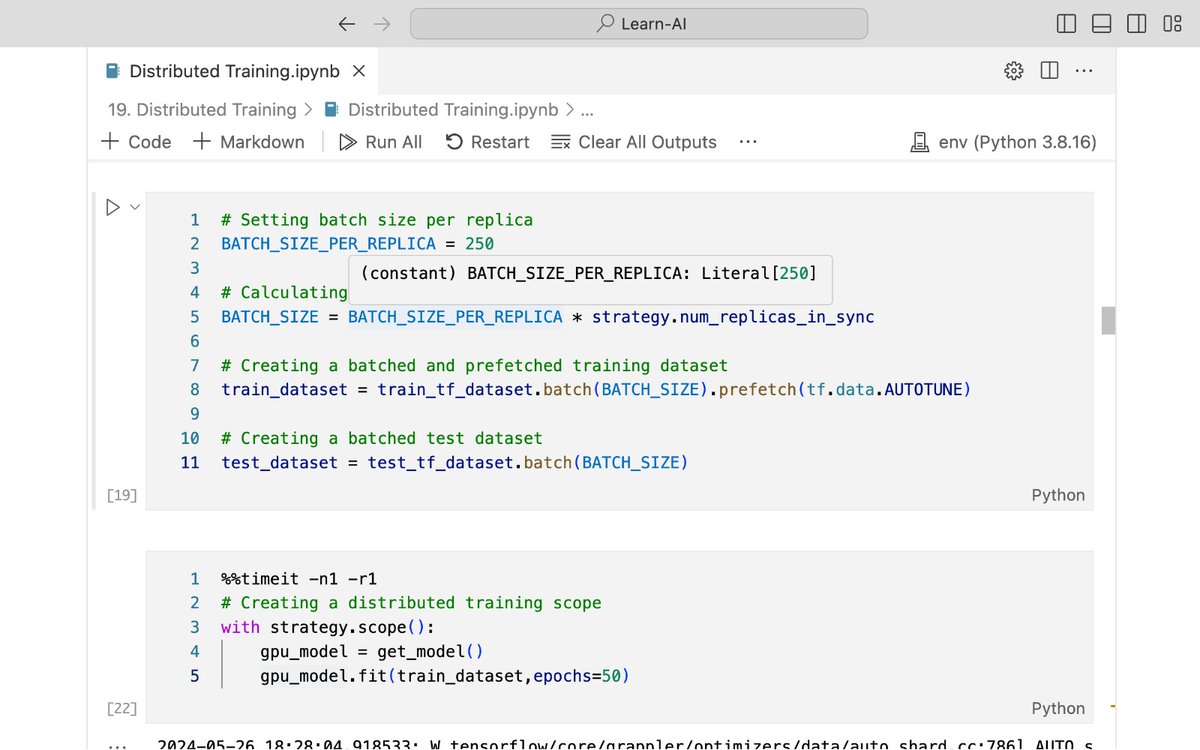

Day 27 of #100DaysOfCode: 🌟 Today, I coded for Distributed Training! 🖥️ 🔄 Even without multiple CPUs, I learned how to scale deep learning models across devices. Excited to apply this knowledge in future projects! #DistributedTraining #DeepLearning #AI

RT Smart Distributed Training on Amazon SageMaker with SMD: Part 2 dlvr.it/SYkhMQ #sagemaker #horovod #distributedtraining #machinelearning #tensorflow

RT Smart Distributed Training on Amazon SageMaker with SMD: Part 3 dlvr.it/SYktJ4 #distributedtraining #machinelearning #deeplearning #sagemaker

RT Speed up EfficientNet training on AWS by up to 30% with SageMaker Distributed Data Parallel Library dlvr.it/SH12hL #sagemaker #distributedtraining #deeplearning #computervision #aws

Here’s the post: 🔗 medium.com/@jenwei0312/th… If it stops one person from quitting distributed training — mission complete. ❤️🔥 #AIResearch #DistributedTraining #LLM #PyTorch #DeepLearning #MuonOptimizer #ResearchJourney #ZeRO #TensorParallel #FSDP #OpenSourceAI

The best platform for autoML #distributedtraining I've ever seen.

Remember that everyone outside of Bittensor and even those holding $TAO hoping for a lower entry on 56 alpha will shun these achievements. They are incentivized to. We know the truth; @gradients_ai is the best performing, fastest improving and lowest cost AutoML platform ever.

⚡️ As AI model parameters reach into the billions, Bittensor's infrastructure supports scalable, decentralized training—making artificial general intelligence more attainable through global, collaborative efforts rather than isolated labs. #AGI #DistributedTraining

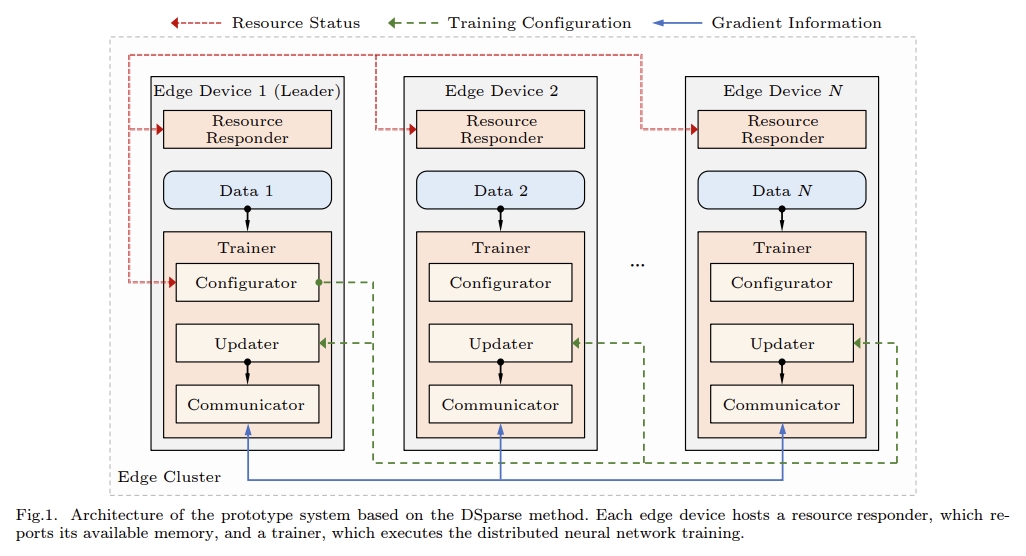

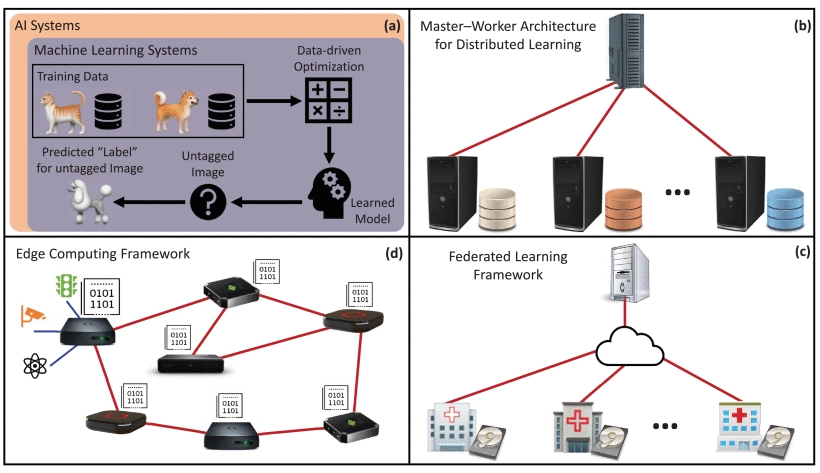

DSparse: A Distributed Training Method for Edge Clusters Based on Sparse Update jcst.ict.ac.cn/article/doi/10… #DistributedTraining #EdgeComputing #MachineLearning #SparseUpdate #EdgeCluster #Institute_of_Computing_Technology @CAS__Science @UCAS1978

Distributed Training: Train massive AI models without massive bills! Akash Network's decentralized GPU marketplace cuts costs by up to 10x vs traditional clouds. Freedom from vendor lock-in included 😉 #MachineLearning #DistributedTraining #CostSavings $AKT $SPICE

📚 Blog: pgupta.info/blog/2025/07/d… 💻 Code: github.com/pg2455/distrib… I wrote this to understand the nuts and bolts of LLM infra — If you're on the same path, this might help. #PyTorch #LLMEngineering #DistributedTraining #MLInfra

12/20 Learn distributed training frameworks: Horovod, PyTorch Distributed, TensorFlow MultiWorkerMirroredStrategy. Single-GPU training won't cut it for enterprise models. Model parallelism + data parallelism knowledge is essential. #DistributedTraining #PyTorch #TensorFlow

7/20 Learn distributed training early. Even "small" LLMs need multiple GPUs. Master PyTorch DDP, gradient accumulation, and mixed precision training. These skills separate hobbyists from professionals. #DistributedTraining #Scaling #GPU

. @soon_svm #SOONISTHEREDPILL Soon_svm's distributed training enables handling of extremely large datasets. #DistributedTraining

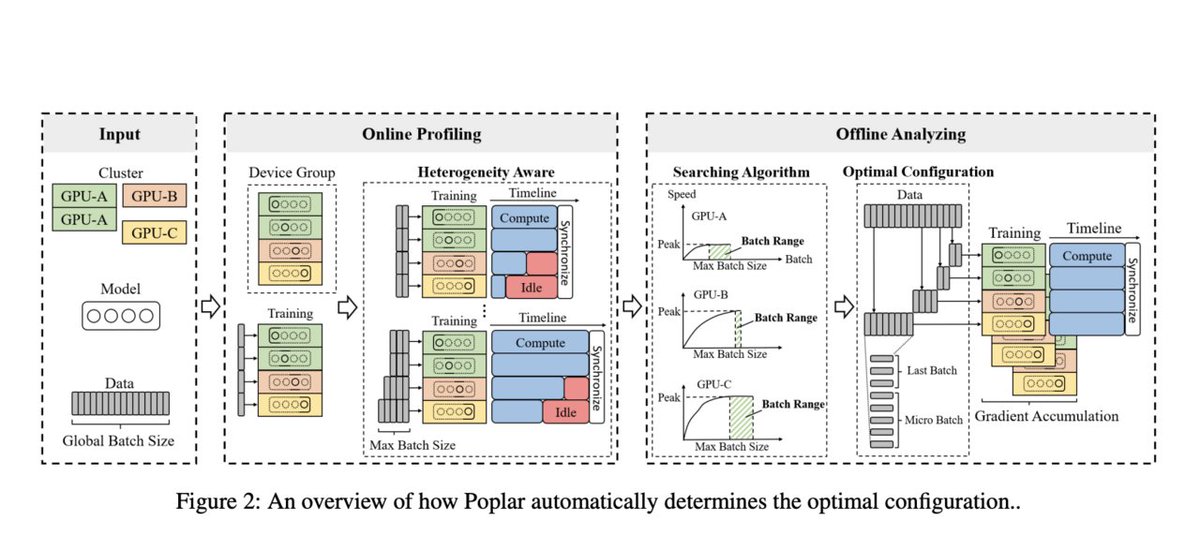

Poplar: A Distributed Training System that Extends Zero Redundancy Optimizer (ZeRO) with Heterogeneous-Aware Capabilities itinai.com/poplar-a-distr… #AI #DistributedTraining #HeterogeneousGPUs #ArtificialIntelligence #Poplar #ai #news #llm #ml #research #ainews #innovation #arti…

This article reviews recently developed methods that focus on #distributedtraining of large-scale #machinelearning models from streaming data in the compute-limited and bandwidth-limited regimes. bit.ly/37i4QBo

RT Distributed Parallel Training: Data Parallelism and Model Parallelism dlvr.it/SYbnML #modelparallelism #distributedtraining #pytorch #dataparallelism

RT Effortless distributed training for PyTorch models with Azure Machine Learning and… dlvr.it/SHTbMx #azuremachinelearning #distributedtraining #pytorch #mlsogood
