#pytorch search results

With its v5 release, Transformers is going all in on #PyTorch. Transformers acts as a source of truth and foundation for modeling across the field; we've been working with the team to ensure good performance across the stack. We're excited to continue pushing for this in the…

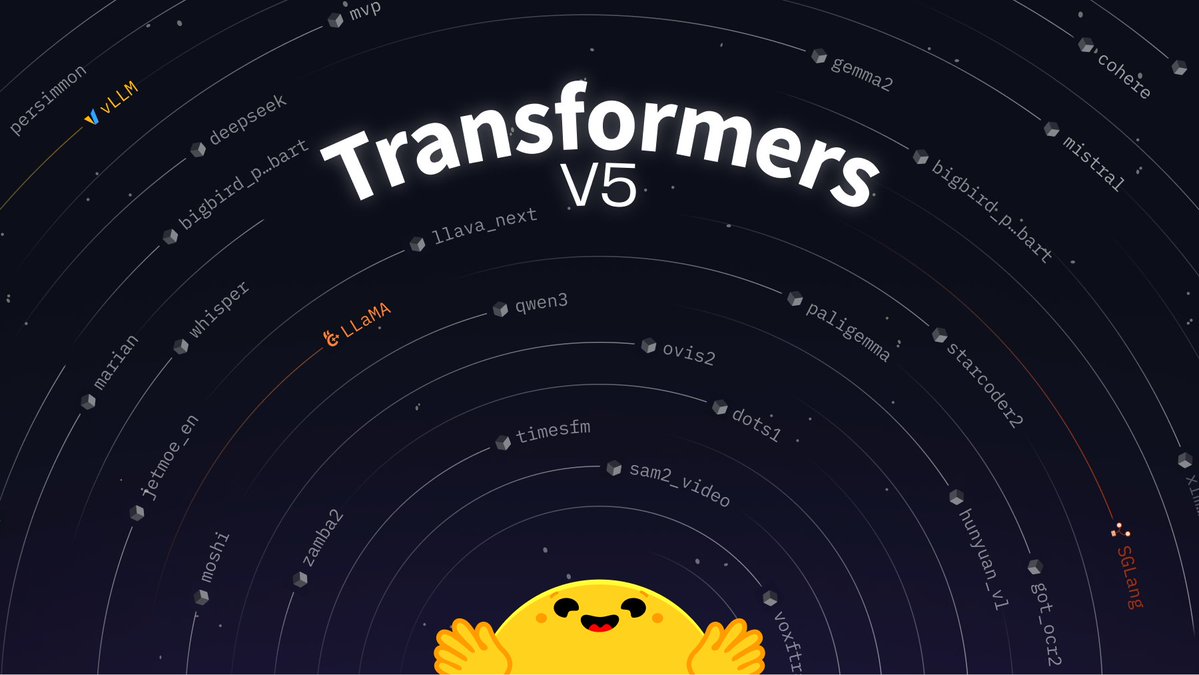

Transformers v5's first release candidate is out 🔥 The biggest release of my life. It's been five years since the last major (v4). From 20 architectures to 400, 20k daily downloads to 3 million. The release is huge, w/ tokenization (no slow tokenizers!), modeling & processing.

🚀 PyLO v0.2.0 is out! (Dec 2025) Introducing VeLO_CUDA 🔥 - a CUDA-accelerated implementation of the VeLO learned optimizer in PyLO. Check out the fastest available version of this SOTA learned optimizer, now in PyTorch. github.com/belilovsky-lab… #PyTorch #DeepLearning #huggingface

🔒💥 Critical security flaws in Picklescan allow execution of arbitrary code by loading untrusted PyTorch models! #CyberSecurity #PyTorch #Picklescan Source: thehackernews.com/2025/12/pickle…

🚨 Critical bugs in Picklescan allow malicious PyTorch models to bypass security scans & execute arbitrary code. High-risk alert! thehackernews.com/2025/12/pickle… #Picklescan #PyTorch #Vulnerability #CyberSecurity #MaliciousCode
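Why can loading a model file execute code at all? PyTorch checkpoints written with `torch.save` are pickle-based, and unpickling can import and call arbitrary globals. Scanners like Picklescan look for dangerous imports inside the pickle, and the reported bugs let payloads slip past that scan. The sketch below is not Picklescan's method. It is a minimal illustration of the allowlist alternative described in Python's `pickle` documentation ("Restricting Globals"): refuse every global that is not explicitly permitted. `SAFE_GLOBALS`, `RestrictedUnpickler`, `restricted_loads` and `Malicious` are hypothetical names for illustration, and a real PyTorch checkpoint would need a larger allowlist. In practice, `torch.load(path, weights_only=True)` applies a similar restricted-unpickler idea.

```python
import io
import pickle
from collections import OrderedDict

# Hypothetical allowlist of (module, name) pairs a file may reference.
# Illustrative only: real PyTorch checkpoints reference more globals.
SAFE_GLOBALS = {("collections", "OrderedDict")}


class RestrictedUnpickler(pickle.Unpickler):
    """Unpickler that refuses to resolve any non-allowlisted global."""

    def find_class(self, module, name):
        if (module, name) in SAFE_GLOBALS:
            return super().find_class(module, name)
        # Raising here means the global is never imported or called.
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data: bytes):
    """Load pickle bytes while allowing only SAFE_GLOBALS."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Malicious:
    """Attacker-side payload: unpickling it with plain pickle.loads
    would call os.system. Never load this with pickle.loads."""

    def __reduce__(self):
        import os
        return (os.system, ("echo pwned",))


# Usage: a benign state-dict-like object loads; the payload is refused.
state = restricted_loads(pickle.dumps(OrderedDict(weight=[0.1, 0.2])))
try:
    restricted_loads(pickle.dumps(Malicious()))
    refused = False
except pickle.UnpicklingError:
    refused = True
print(state, "payload refused:", refused)
```

An allowlist fails closed: an unexpected import is rejected by default. A denylist scan fails open whenever an attacker finds an import or encoding the scanner does not recognize.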

Learn to create a BERT model from scratch using PyTorch! 🤖🔥 #BERT #MachineLearning #PyTorch #DataScience #NLP #DeepLearning #ModelTraining #AI machinelearningmastery.com/pretrain-a-ber…

👩‍💻 Ready to build real‑world AI models? Learn PyTorch in Python with this complete tutorial series. Watch here: youtube.com/playlist?list=… #Python #PyTorch #AI #DeepLearning #MachineLearning
