Zaid Khan

@codezakh

NDSEG Fellow / PhD @uncnlp with @mohitban47 working on automating env/data generation + program synthesis. Formerly @allenai, @neclabsamerica

How can an agent reverse engineer the underlying laws of an unknown, hostile & stochastic environment in “one life”, without millions of steps + human-provided goals / rewards? In our work, we: 1️⃣ infer an executable symbolic world model (a probabilistic program capturing…

#NeurIPS2025 is live! I'll be in San Diego through Saturday (Dec 06) and would love to meet prospective graduate students interested in joining my lab at JHU. If you're excited about multimodal AI, robotics, unified models, learning action/motion from video, etc. let’s chat!…

Sharing some personal updates 🥳: - I've completed my PhD at @unccs! 🎓 - Starting Fall 2026, I'll be joining the Computer Science dept. at Johns Hopkins University (@JHUCompSci) as an Assistant Professor 💙 - Currently exploring options + finalizing the plan for my gap year (Aug…

🤔 We rely on gaze to guide our actions, but can current MLLMs truly understand it and infer our intentions? Introducing StreamGaze 👀, the first benchmark that evaluates gaze-guided temporal reasoning (past, present, and future) and proactive understanding in streaming video…

🚨 Excited to be (remotely) giving a talk tomorrow 12/2 at the "Exploring Trust and Reliability in LLM Evaluation" #NeurIPS expo workshop! I’ll be presenting our work on pragmatic training to improve calibration and persuasion, and skill-based granular evaluation for data…

🏖️ Heading to San Diego for #NeurIPS (Dec 2-7th)! I will be presenting: Bifrost-1: Bridging Multimodal LLMs and Diffusion Models with Patch-level CLIP Latents 🗓️ Thu 4 Dec 4:30 p.m. PT — 7:30 p.m. PT | Exhibit Hall C,D,E #4412 Excited to chat about our follow-up work on…

🎉 Excited to share that Bifrost-1 has been accepted to #NeurIPS2025! ☀️ Bridging MLLMs and diffusion into a unified multimodal understanding and generation model can be very costly to train. ✨ Bifrost-1 addresses this by leveraging patch-level CLIP latents that are natively…

I will be at #NeurIPS2025 to present our work: "LASeR: Learning to Adaptively Select Reward Models with Multi-Armed Bandits". Come visit our poster: 🗓️ Thu 4 Dec, 4:30 p.m. – 7:30 p.m. PST | Exhibit Hall C,D,E #4108 Let's connect and chat about LLM post-training, inference-time…

Reward Models (RMs) are crucial for RLHF training, but: Using a single RM: 1⃣ poor generalization, 2⃣ ambiguous judgements & 3⃣ over-optimization Using multiple RMs simultaneously: 1⃣ resource-intensive & 2⃣ susceptible to noisy/conflicting rewards 🚨We introduce ✨LASeR✨,…

⛱️ Heading to San Diego for #NeurIPS (Dec 2-7th)! I am on the industry job market & will be presenting: LASeR: Learning to Adaptively Select Reward Models with Multi-Armed Bandits (🗓️Dec 4, 4:30PM) Excited to chat about research (reasoning, LLM agents, post-training) & job…

🎉Excited to share that LASeR has been accepted to #NeurIPS2025!☀️ RLHF with a single reward model can be prone to reward-hacking while ensembling multiple RMs is costly and prone to conflicting rewards. ✨LASeR addresses this by using multi-armed bandits to select the most…

🚨 Thrilled to share Prune-Then-Plan! - VLM-based EQA agents often move back-and-forth due to miscalibration. - Our Prune-Then-Plan method filters noisy frontier choices and delegates planning to coverage-based search. - This yields stable, calibrated exploration and…

🚨Introducing our new work, Prune-Then-Plan — a method that enables AI agents to better explore 3D scenes for embodied question answering (EQA). 🧵 1/2 🟥 Existing EQA systems leverage VLMs to drive exploration choice at each step by selecting the ‘best’ next frontier, but…

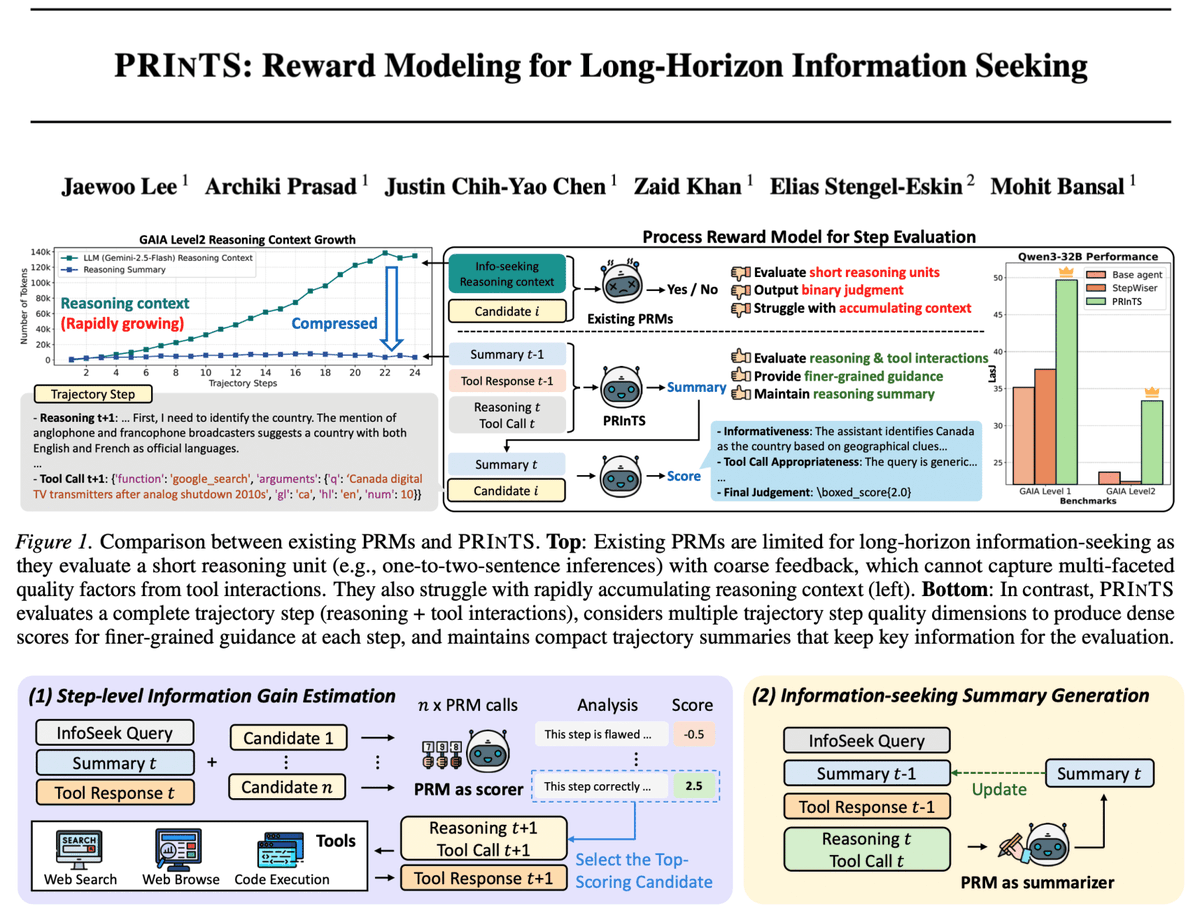

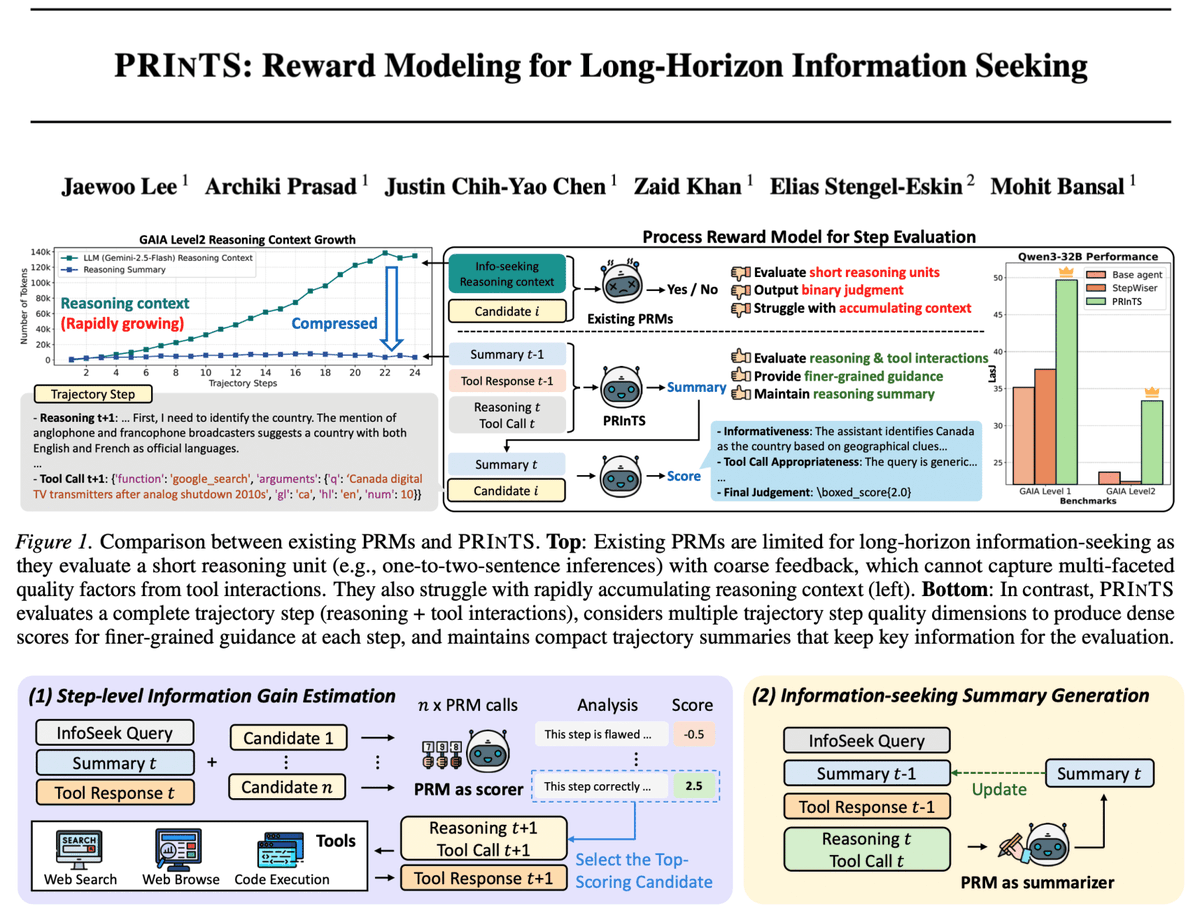

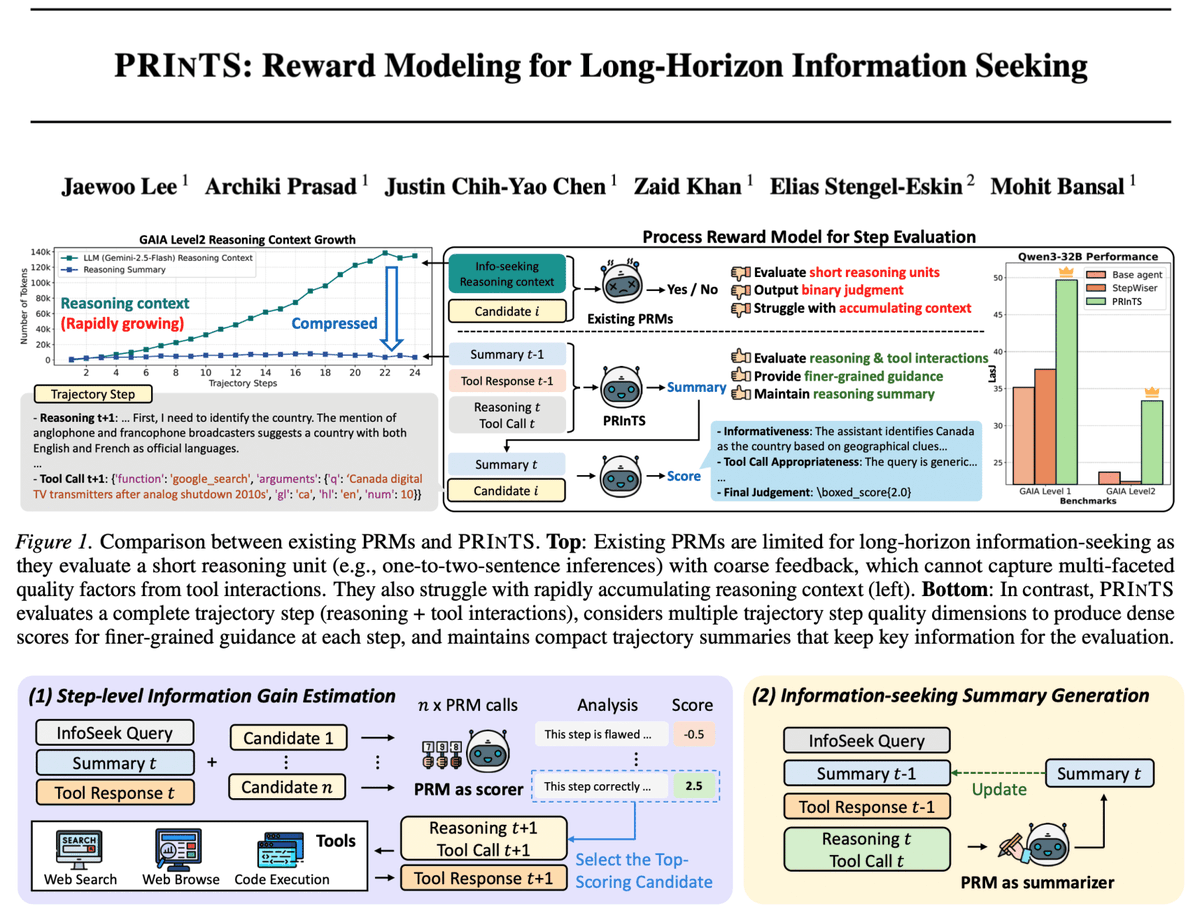

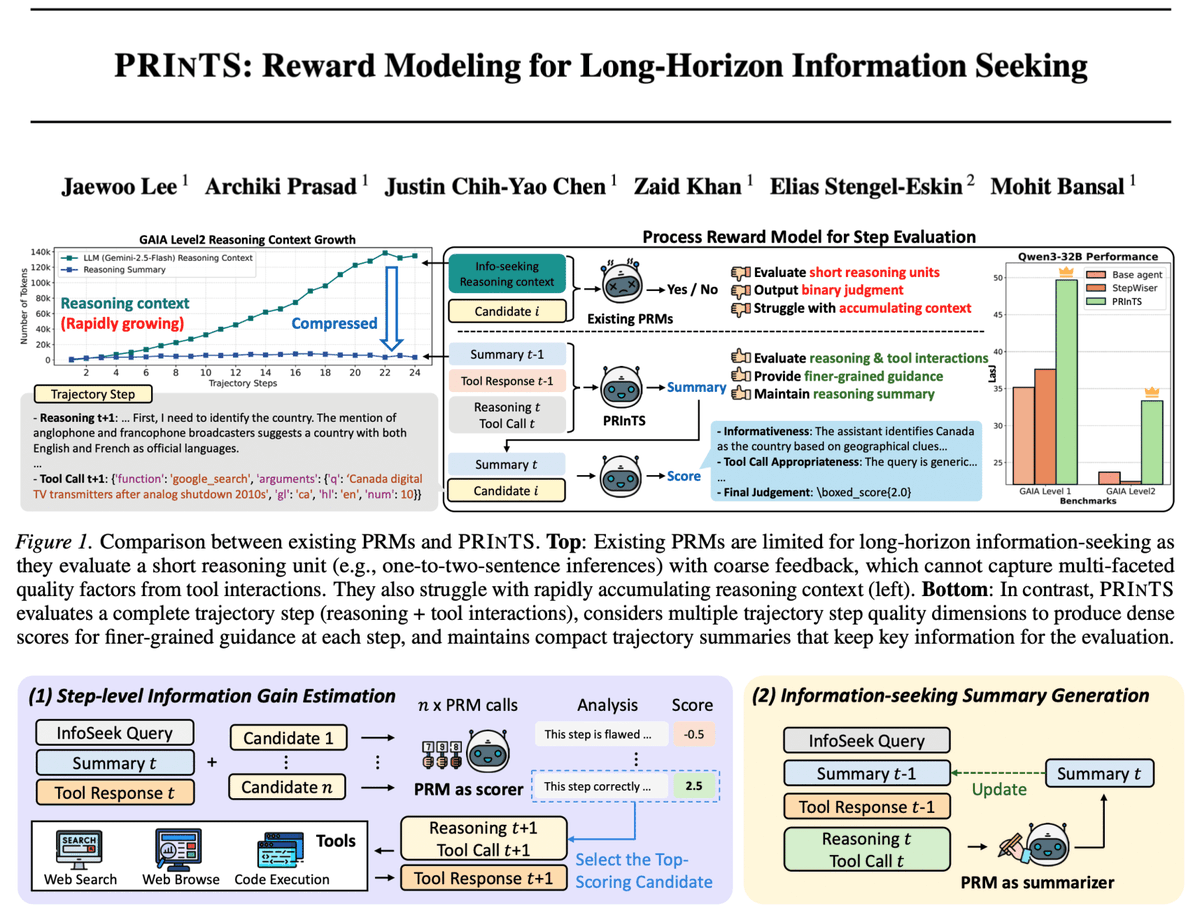

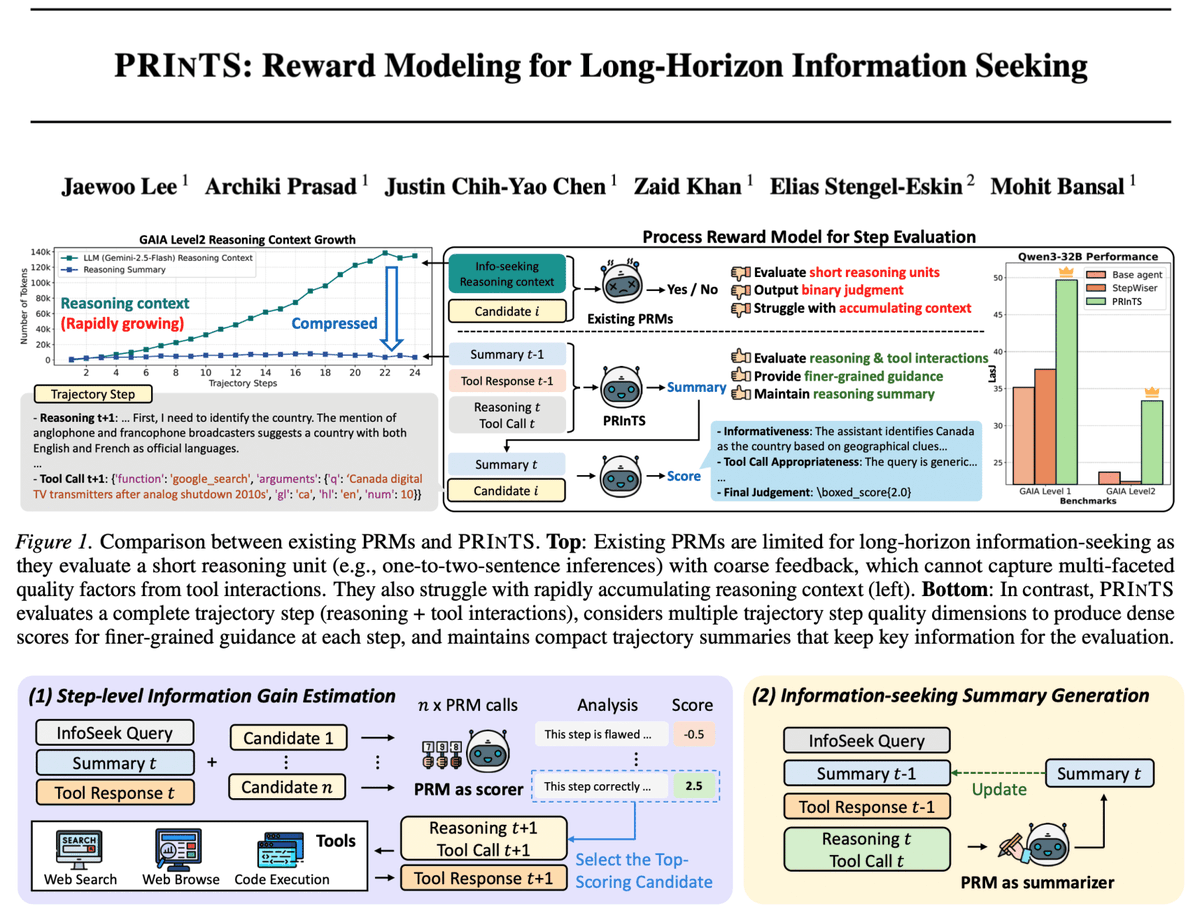

🚨 Check out our generative process reward model, PRInTS, that improves agents' complex, long-horizon information-seeking capabilities via: 1⃣ novel MCTS-based fine-grained information-gain scoring across multiple dimensions. 2⃣ accurate step-level guidance based on compression…

🚨 Excited to announce ✨PRInTS✨, a generative Process Reward Model (PRM) that improves agents’ long-horizon info-seeking via info-gain scoring + summarization. PRInTS guides open + specialized agents with major boosts 👉+9.3% avg. w/ Qwen3-32B across GAIA, FRAMES &…

Thanks @_akhaliq for posting about our work on guiding agents for long-horizon information-seeking tasks using a generative process reward model! For more details, see the original thread: x.com/ArchikiPrasad/…

PRInTS: Reward Modeling for Long-Horizon Information Seeking

We want agents to solve problems that require searching and exploring multiple paths over long horizons, such as complex information seeking tasks which require the agent to answer questions by exploring the internet. Process Reward Models (PRMs) are a promising approach which…

Long-horizon information-seeking tasks remain challenging for LLM agents, and existing PRMs (step-wise process reward models) fall short because: 1⃣ the reasoning process involves interleaved tool calls and responses 2⃣ the context grows rapidly due to the extended task horizon…

🚨 PRInTS addresses key challenges for info-seeking agents + is compatible with trained/generalist LLMs (both open- + closed-source) by guiding agents towards better queries/actions in long-horizon tasks (GAIA, FRAMES, WebWalkerQA), w/ strong gains (e.g. +9.3% for Qwen 32B, +~4%…

🚨 Excited to share SketchVerify — a framework that scales trajectory planning for video generation. ➡️ Sketch-level motion previews let us search dozens of trajectory candidates instantly — without paying the cost of the time-consuming diffusion process. ➡️ A multimodal…

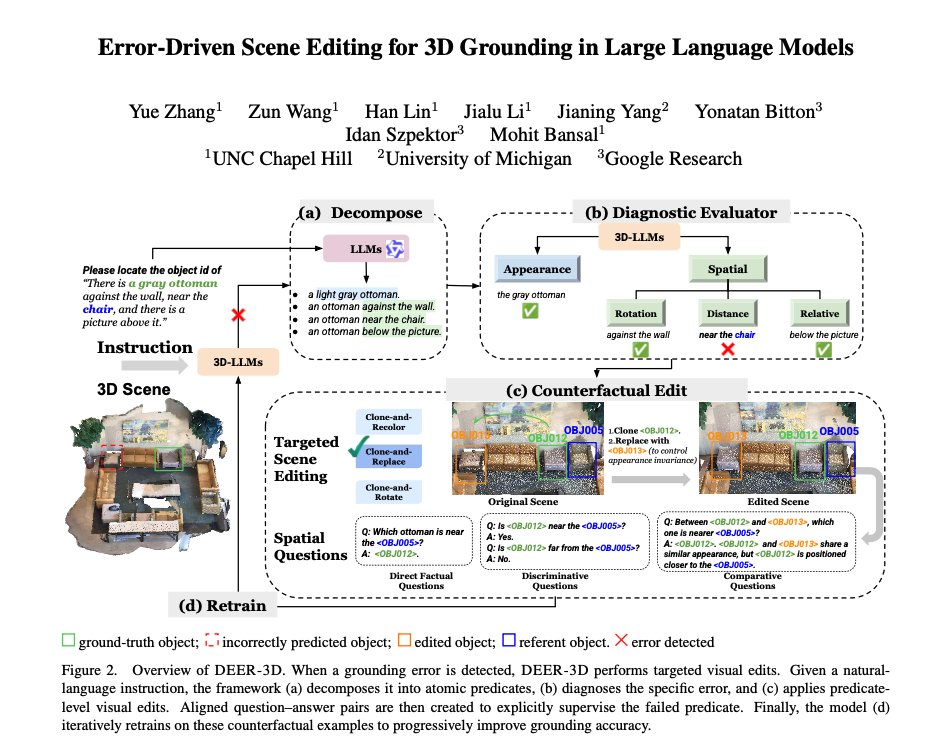

🚨 Thrilled to introduce DEER-3D: Error-Driven Scene Editing for 3D Grounding in Large Language Models - Introduces an error-driven scene editing framework to improve 3D visual grounding in 3D-LLMs. - Generates targeted 3D counterfactual edits that directly challenge the…

Today, we’re announcing the next chapter of Terminal-Bench with two releases: 1. Harbor, a new package for running sandboxed agent rollouts at scale 2. Terminal-Bench 2.0, a harder version of Terminal-Bench with increased verification

We live, feel, and create by perceiving the world as visual spaces unfolding through time — videos. Our memories and even our language are spatial: mind-palaces, mind-maps, "taking steps in the right direction..." Super excited to see Cambrian-S pushing this frontier! And,…

Introducing Cambrian-S: it’s a position, a dataset, a benchmark, and a model, but above all, it represents our first steps toward exploring spatial supersensing in video. 🧶

MLLMs are great at understanding videos, but struggle with spatial reasoning—like estimating distances or tracking objects across time. the bottleneck? getting precise 3D spatial annotations on real videos is expensive and error-prone. introducing SIMS-V 🤖 [1/n]

I'll be presenting ✨MAgICoRe✨ virtually tonight at 7 PM ET / 8 AM CST (Gather Session 3)! I'll discuss 3 key challenges in LLM refinement for reasoning, and how MAgICoRe tackles them jointly: 1⃣ Over-correction on easy problems 2⃣ Failure to localize & fix its own errors 3⃣…

🚨 Check out our awesome students/postdocs' papers at #EMNLP2025 and say hi to them 👋! Also, I will give a keynote (virtually) on "Attributable, Conflict-Robust, and Multimodal Summarization with Multi-Source Retrieval" at the NewSumm workshop. -- Jaehong (in-person) finished…
