Content you might like

50 LLM Projects with Source Code to Become a Pro 1. Beginner-Level LLM Projects → Text Summarizer using OpenAI API → Chatbot for Customer Support → Sentiment Analysis with GPT Models → Resume Optimizer using LLMs → Product Description Generator → AI-Powered Grammar…

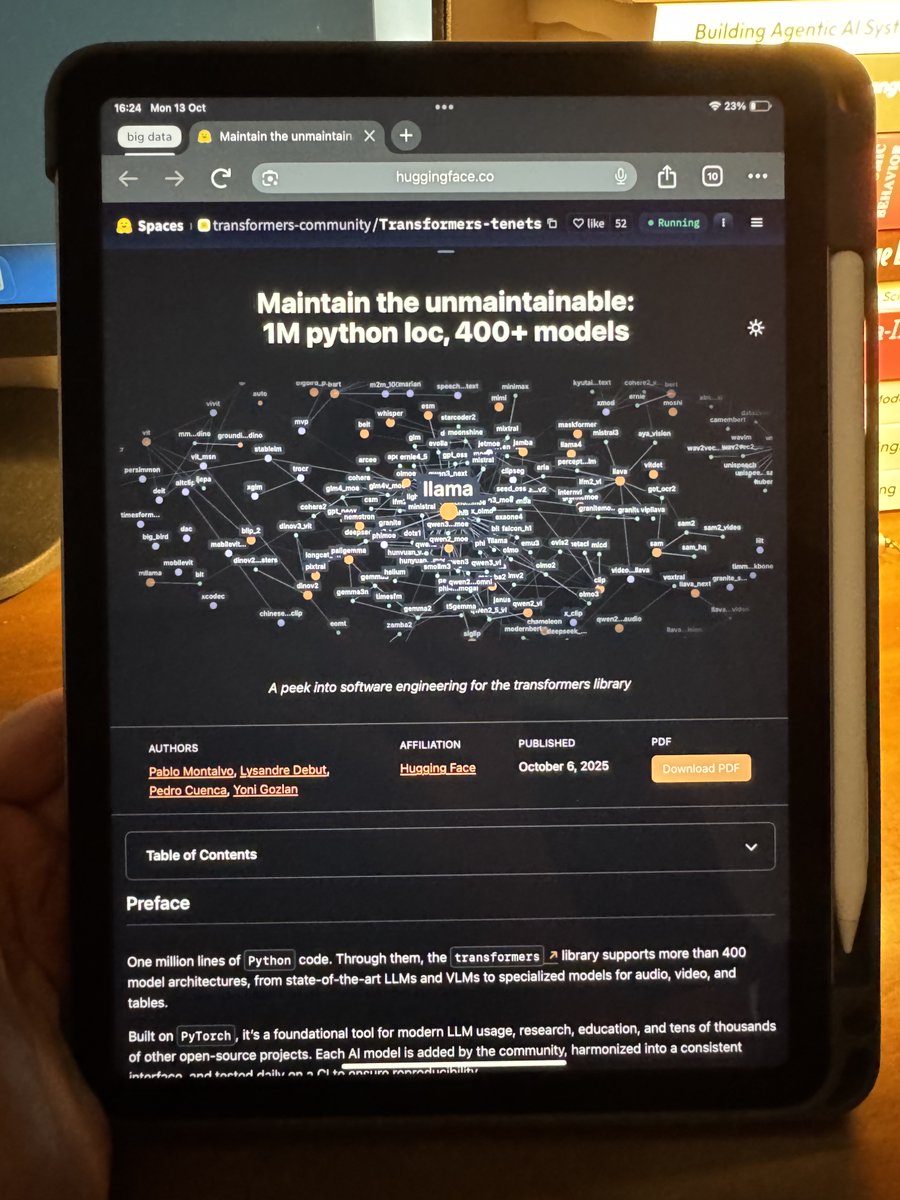

I left my weekend plans to read this recent blog from HuggingFace 🤗 on how they maintain the most critical AI library: transformers. → 1M lines of Python, → 1.3M installations, → thousands of contributors, → a true engineering masterpiece. Here's what I learned:…

Excited to release new repo: nanochat! (it's among the most unhinged I've written). Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,…

You're not depressed, you just lost your quest.

🔥 Free Google Colab notebooks to implement every Machine Learning algorithm from scratch. Link in comments.

how i got here: > i used to be and still tend towards having an obsessive/addictive personality > put many years of my life into video games > it was only 2 years ago i started to turn that around because i got other interests and started really looking forward to the future >…

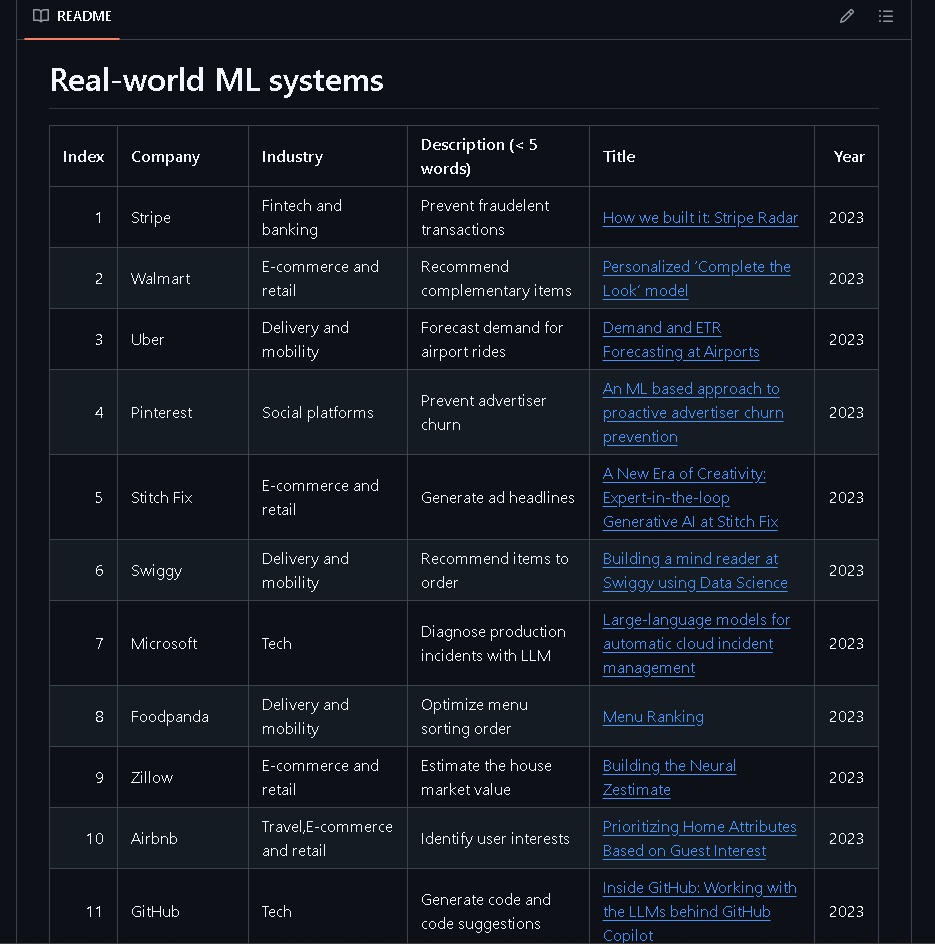

found a repo that has a massive collection of Machine Learning system design case studies used in the real world, from Stripe, Spotify, Netflix, Meta, GitHub, Twitter/X, and much more link in replies

Copy-pasting PyTorch code is fast — using an AI coding model is even faster — but both skip the learning. That's why I asked my students to write by hand ✍️. 🔽 Download: byhand.ai/pytorch After the exercise, my students can understand what every line really does and…

70 Python Projects with Source code for Developers Step 1: Beginner Foundations → Hello World Web App → Calculator (CLI) → To-Do List CLI → Number Guessing Game → Countdown Timer → Dice Roll Simulator → Coin Flip Simulator → Password Generator → Palindrome Checker →…

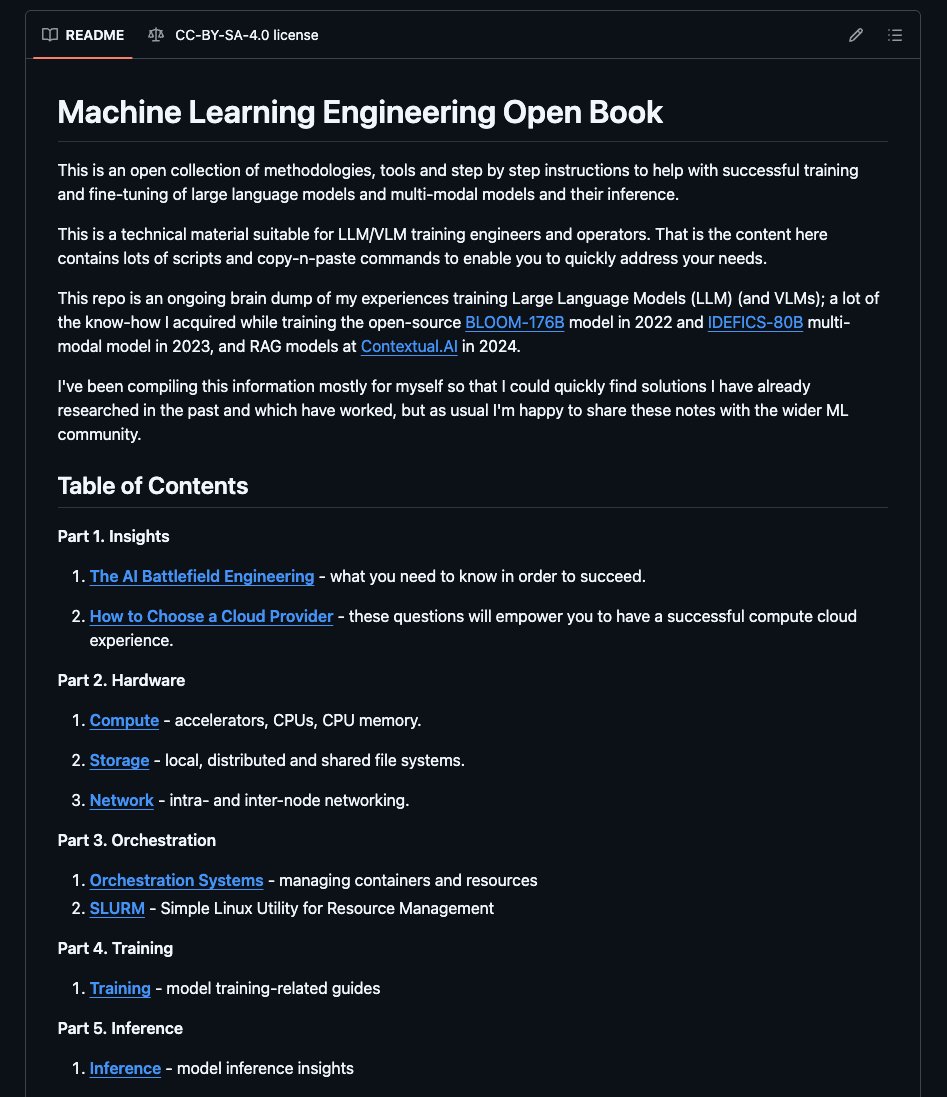

everything you need to get started in one repo

System prompts are getting outdated! Here's a counterintuitive lesson from building real-world Agents: Writing giant system prompts doesn't improve an Agent's performance; it often makes it worse. For example, you add a rule about refund policies. Then one about tone. Then…

NetHack is the best benchmark you've never heard of. I'd say that it makes ALE look like a toy, but well... it is.

Introducing Scalable Option Learning (SOL☀️), a blazingly fast hierarchical RL algorithm that makes progress on long-horizon tasks and demonstrates positive scaling trends on the largely unsolved NetHack benchmark, when trained for 30 billion samples. Details, paper and code in >

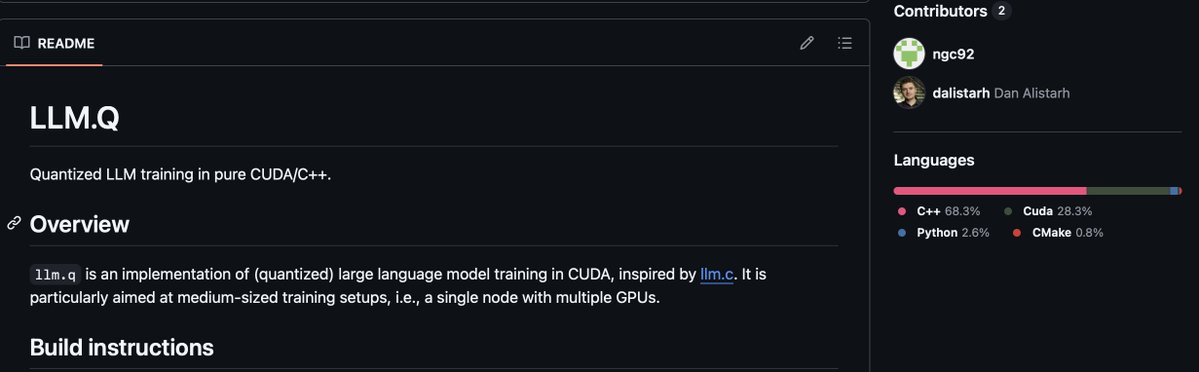

it's insane to me how little attention the llm.q repo has. it's a fully C/C++/CUDA implementation of multi-GPU (ZeRO + FSDP), quantized LLM training with support for selective AC. it's genuinely the coolest OSS thing I've seen this year (what's crazier is 1 person wrote it!)

Finally had a chance to listen through this pod with Sutton, which was interesting and amusing. As background, Sutton's "The Bitter Lesson" has become a bit of biblical text in frontier LLM circles. Researchers routinely talk about and ask whether this or that approach or idea…

.@RichardSSutton, father of reinforcement learning, doesn’t think LLMs are bitter-lesson-pilled. My steel man of Richard’s position: we need some new architecture to enable continual (on-the-job) learning. And if we have continual learning, we don't need a special training…

As the divide between the super-rich and the rest widens, this strategy becomes increasingly relevant.

Great point that is missed by a lot of LLM “sellers”

I expect AI to produce a lot of value while trying to make a coherent body of knowledge out of its training data, but on its own, AI is limited to a sort of ancient Greek philosophical process, where the method is rhetoric, rather than the experimental process of science. This…

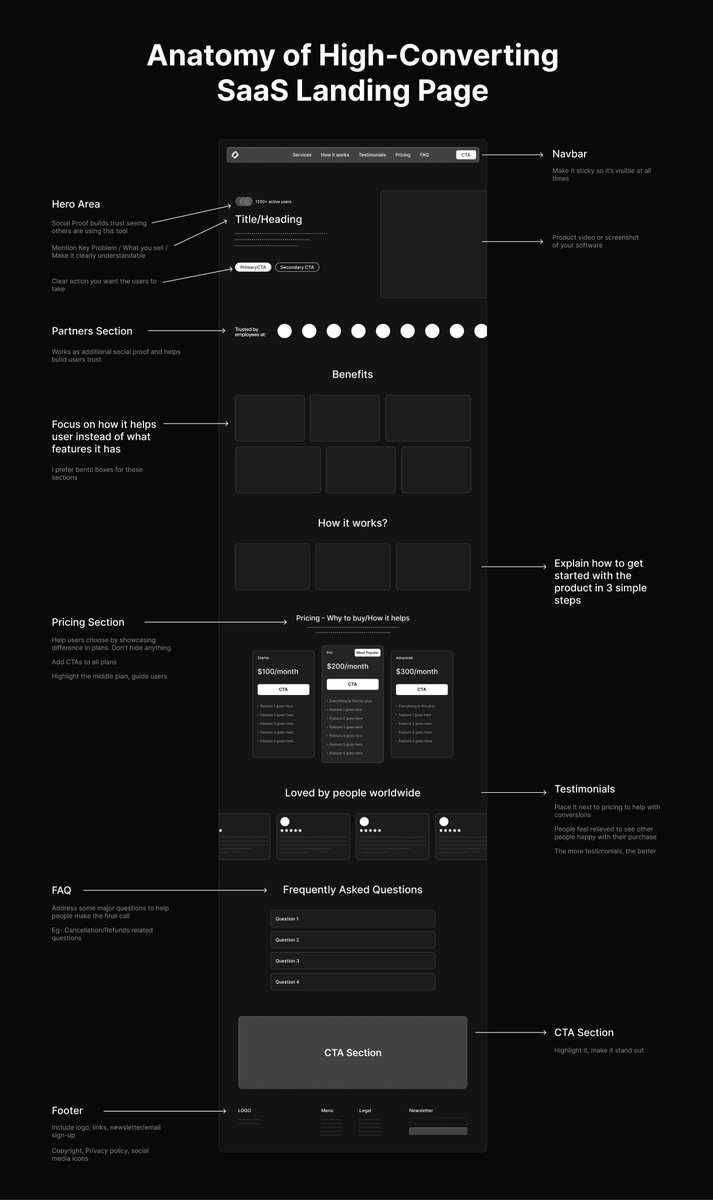

Use this structure for your SaaS landing page. Thank me later.

real-time multi-person pose detection for body, face, hands, and feet

⏱️ From 10 hours to under 1 minute. @ParaboleAI achieved a 1,000x speedup in industrial optimization by running causal AI on NVIDIA GH200 Grace Hopper + Gurobi. This leap is enabling real-time, explainable decisions at massive scale. 🔗 nvda.ws/46nCtBH

Trending in United States

1. D’Angelo: 341K posts
2. Charlie: 647K posts
3. Erika Kirk: 70.1K posts
4. Young Republicans: 25K posts
5. Politico: 204K posts
6. #AriZZona: N/A
7. #PortfolioDay: 21.9K posts
8. Jason Kelce: 5,212 posts
9. Pentagon: 109K posts
10. Presidential Medal of Freedom: 89.5K posts
11. George Strait: 5,021 posts
12. #LightningStrikes: N/A
13. Big 12: 14.2K posts
14. Kai Correa: N/A
15. Burl Ives: N/A
16. Milei: 317K posts
17. NHRA: N/A
18. Drew Struzan: 36.2K posts
19. Scream 3: 1,051 posts
20. Brown Sugar: 24.7K posts
