Mike Carroll

@mikecarroll_eng

Engineer. Previously @Facebook


🤣

new sitcom idea: 18 yo chinese ccp spy joining an SF defense startup and realizing no one has actually done any work because they are too busy going to parties and chasing social clout, so she has to create all of the tech herself before she can send it back home

> Augmented reality + generative AGI means people can suddenly do expert-level work they never trained for

it's already here

Two underdiscussed possibilities: Augmented reality + generative AGI means people can suddenly do expert-level work they never trained for. Real-time overlays guide you through any task - repairs, construction, technical assembly, complex procedures. Visual guides showing…

Starship vs A380 & 737-8: When stacked with the Super Heavy booster, Starship is taller than an A380 and a Max-8 on top of each other (123m vs 73m + 40m).

Starship vs 737-8: Easy to forget how massive Starship is, 52m long vs 40m for a Max-8.

18 months ago, @karpathy set a challenge: "Can you take my 2h13m tokenizer video and translate [into] a book chapter". We've done it! It includes prose, code & key images. It's a great way to learn this key piece of how LLMs work. fast.ai/posts/2025-10-…
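The tokenizer built in that video is a byte-level byte-pair encoding (BPE) tokenizer. As a rough illustration of the core loop (not the code from the video or the book chapter), here is a minimal Python sketch: start from raw UTF-8 bytes, repeatedly merge the most frequent adjacent pair into a new token id, then replay the learned merges to encode text. The function names are hypothetical, and the sketch skips the regex pre-splitting and special tokens that GPT-style tokenizers add on top.

```python
from collections import Counter


def get_pair_counts(ids):
    """Count how often each adjacent pair of token ids occurs."""
    return Counter(zip(ids, ids[1:]))


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` (left to right) with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i < len(ids) - 1 and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merges on top of the 256 raw byte values."""
    ids = list(text.encode("utf-8"))
    merges = {}  # (id, id) -> new id, in the order the merges were learned
    for step in range(num_merges):
        counts = get_pair_counts(ids)
        if not counts:
            break
        pair = max(counts, key=counts.get)  # most frequent pair (first one wins ties)
        new_id = 256 + step
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges


def encode(text, merges):
    """Replay the learned merges, oldest first (dicts keep insertion order)."""
    ids = list(text.encode("utf-8"))
    for pair, new_id in merges.items():
        ids = merge(ids, pair, new_id)
    return ids


def decode(ids, merges):
    """Map token ids back to bytes, then to text."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (a, b), new_id in merges.items():
        vocab[new_id] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")


ids, merges = train_bpe("aaabdaaabac", num_merges=3)
print(ids)  # [258, 100, 258, 97, 99]
```

Three merges shrink the 11 input bytes to 5 tokens, and `decode` recovers the original string because each new id is defined as the concatenation of the two ids it replaced.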

Video lectures for MIT 6.824 Distributed Systems (Spring 2020) by Robert Morris: nil.csail.mit.edu/6.824/2020/gen… youtube.com/playlist?list=…

ML concepts every data scientist should know for interviews: Bookmark this. 1. Bias-Variance Tradeoff 2. Cross-Validation Strategies 3. Regularization (L1, L2, Elastic Net) 4. Class Imbalance & Sampling Techniques 5. Feature Engineering & Selection 6. Overfitting vs…
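Of the items in that list, cross-validation is the easiest to show concretely. A minimal sketch of k-fold cross-validation in plain Python, assuming a regression target and a deliberately trivial baseline model (predict the training-fold mean); the function names are hypothetical, and in practice a library splitter such as scikit-learn's `KFold` does the same job.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation.

    Indices are shuffled once, then split into k nearly equal folds;
    each fold serves as the validation set exactly once.
    """
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    # The first n % k folds get one extra element.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size


def cross_val_mse(y, k=5, seed=0):
    """Average validation MSE of a baseline that predicts the training mean."""
    scores = []
    for train, val in k_fold_indices(len(y), k, seed):
        mean = sum(y[i] for i in train) / len(train)
        scores.append(sum((y[i] - mean) ** 2 for i in val) / len(val))
    return sum(scores) / len(scores)


print(cross_val_mse([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], k=3))
```

Every sample is scored exactly once by a model that never saw it during fitting, which is what makes the averaged score a fairer estimate of generalization than a single train/test split.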

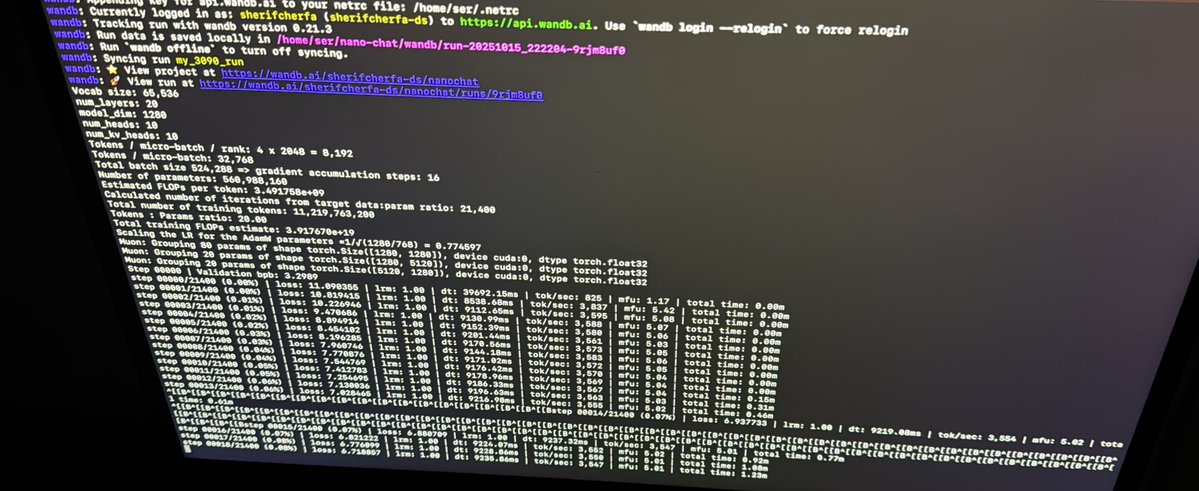

Training Andrej Karpathy’s Nanochat on 4x RTX 3090s at 225W each:

- Step: 2,694/21,400 (12.59% done)
- Loss: 3.14
- Runtime: 6.78 hours
- Throughput: 3,600 tok/sec
- Temps: 52-57°C
- VRAM: 19GB/24GB per card
- Total cost: $15 at 55h

Zero errors, perfectly stable.
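The numbers in that post can be cross-checked with some back-of-the-envelope arithmetic. A minimal Python sketch, assuming a constant step rate and counting only the GPUs' 225W power cap (CPU, fans and PSU losses ignored); the implied electricity price is an inference from the $15 figure, not something the post states, and the function name is hypothetical.

```python
def training_projection(step, total_steps, hours_elapsed, num_gpus, watts_per_gpu,
                        total_hours, total_cost_usd, tokens_per_sec):
    """Back-of-the-envelope checks on a training run's reported stats."""
    frac_done = step / total_steps
    # Assumes steps keep ticking at the same rate for the rest of the run.
    projected_hours = hours_elapsed / frac_done
    # GPU power caps only (here 4 x 225 W = 900 W); the rest of the machine is ignored.
    energy_kwh = num_gpus * watts_per_gpu * total_hours / 1000
    # Hypothetical: treats the whole quoted cost as GPU electricity.
    implied_usd_per_kwh = total_cost_usd / energy_kwh
    tokens_so_far = tokens_per_sec * hours_elapsed * 3600
    return {
        "percent_done": 100 * frac_done,
        "projected_hours": projected_hours,
        "energy_kwh": energy_kwh,
        "implied_usd_per_kwh": implied_usd_per_kwh,
        "tokens_so_far": tokens_so_far,
    }


stats = training_projection(step=2694, total_steps=21400, hours_elapsed=6.78,
                            num_gpus=4, watts_per_gpu=225, total_hours=55,
                            total_cost_usd=15, tokens_per_sec=3600)
for name, value in stats.items():
    print(f"{name}: {value:,.2f}")
```

Under those assumptions the run is 12.59% done with a projected total of about 54 hours (close to the 55h quoted), draws about 49.5 kWh at the cards, and the $15 figure corresponds to roughly $0.30/kWh.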

I don't like courses. Most were a waste of time. Yes, even at Stanford. If you're new to ML, take CS231N.

your honor i object, i don't know about harvard but stanford literally releases SOTA courses

My meeting budget:

- 5 min: meet someone new
- 10 min: solve a problem
- 15 min: identify + solve a problem

Parkinson’s law: work expands so as to fill the time available for its completion.

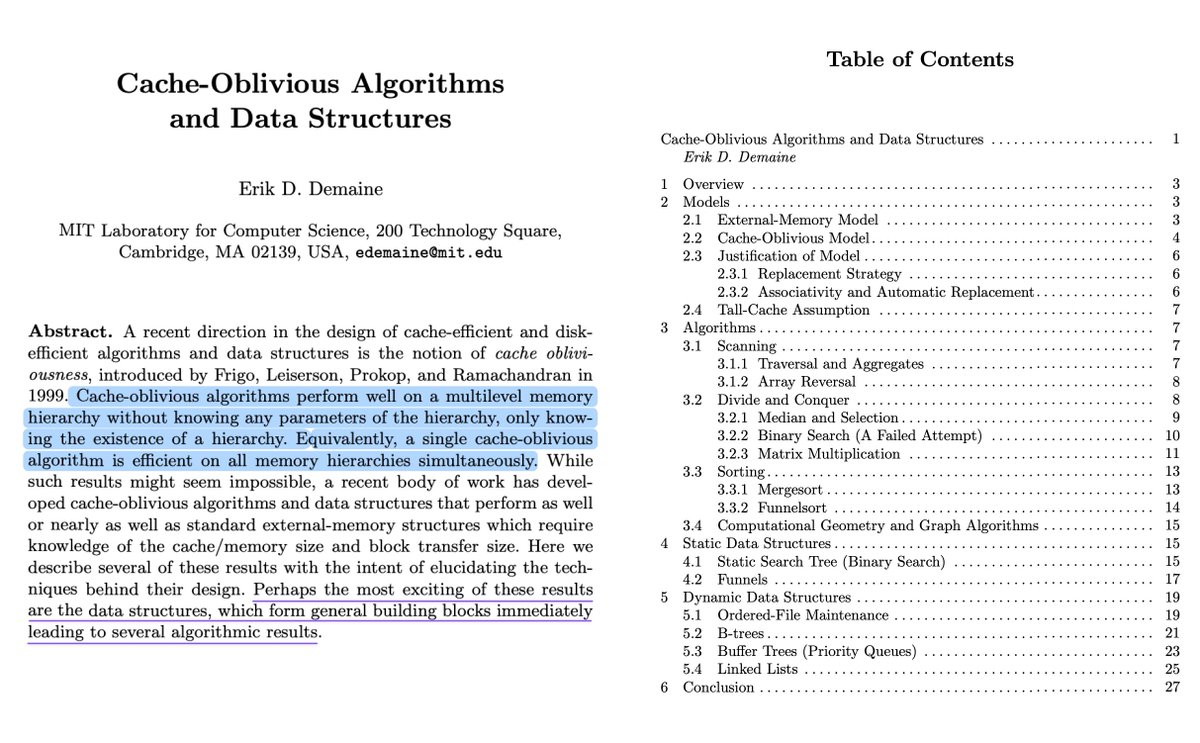

MIT's 6.851: Advanced Data Structures (Spring'21) courses.csail.mit.edu/6.851/spring21/ This has been on my recommendation list for a while, and the memory hierarchy discussions are great in the context of cache-oblivious algorithms.

"Cache‑Oblivious Algorithms and Data Structures" by Erik D. Demaine erikdemaine.org/papers/BRICS20… This is a foundational survey on designing cache‑oblivious algorithms and data structures that perform as well as cache‑aware approaches that require hardcoding cache size (M) and block…

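A classic small example of the cache-oblivious idea is matrix transposition: recursively halve the larger dimension until the block is tiny, so that at some recursion depth every sub-problem fits in cache without the code ever knowing the cache size M or block size B. A minimal Python sketch of that recursion, with hypothetical function names; Python itself will not show the cache benefit, and the `base` cutoff of 8 is an arbitrary constant to limit recursion overhead, not a tuned cache parameter.

```python
def co_transpose(a, b, r0, r1, c0, c1, base=8):
    """Write the transpose of the block a[r0:r1][c0:c1] into b (b[j][i] = a[i][j]).

    Recursively halves the longer dimension. Neither M nor B appears
    anywhere, which is what makes the scheme cache-oblivious.
    """
    rows, cols = r1 - r0, c1 - c0
    if rows <= base and cols <= base:
        # Small block: copy directly.
        for i in range(r0, r1):
            for j in range(c0, c1):
                b[j][i] = a[i][j]
    elif rows >= cols:
        mid = r0 + rows // 2  # split rows
        co_transpose(a, b, r0, mid, c0, c1, base)
        co_transpose(a, b, mid, r1, c0, c1, base)
    else:
        mid = c0 + cols // 2  # split columns
        co_transpose(a, b, r0, r1, c0, mid, base)
        co_transpose(a, b, r0, r1, mid, c1, base)


def transpose(a):
    """Return the transpose of a rectangular list-of-lists matrix."""
    n = len(a)
    m = len(a[0]) if n else 0
    b = [[None] * n for _ in range(m)]
    co_transpose(a, b, 0, n, 0, m)
    return b


print(transpose([[1, 2, 3], [4, 5, 6]]))  # [[1, 4], [2, 5], [3, 6]]
```

In a compiled language the same recursion incurs O(1 + nm/B) cache misses for an n×m matrix under the tall-cache assumption, matching a cache-aware blocked transpose without hardcoding any cache parameters.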
50 LLM Projects with Source Code to Become a Pro 1. Beginner-Level LLM Projects → Text Summarizer using OpenAI API → Chatbot for Customer Support → Sentiment Analysis with GPT Models → Resume Optimizer using LLMs → Product Description Generator → AI-Powered Grammar…

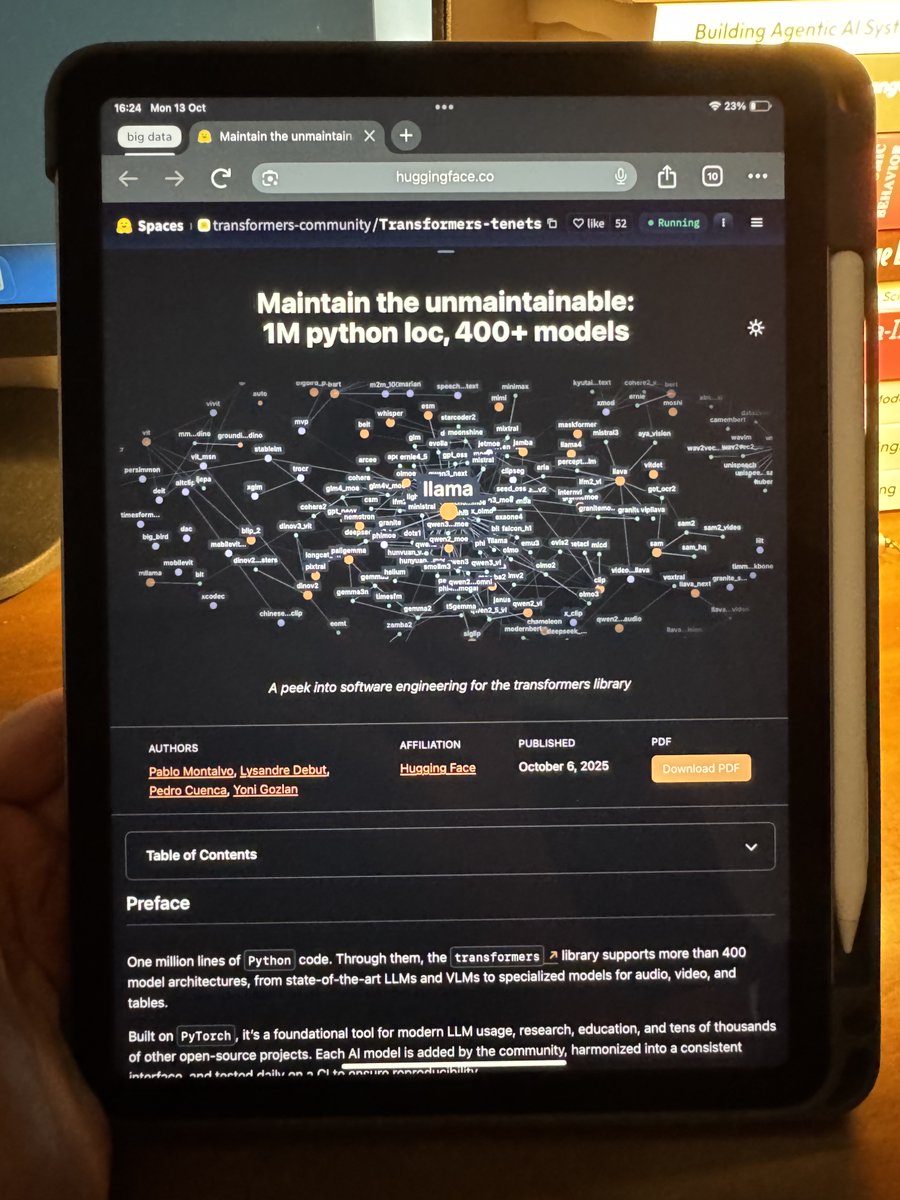

I set aside my weekend plans to read this recent blog post from Hugging Face 🤗 on how they maintain the most critical AI library, transformers: → 1M lines of Python → 1.3M installations → thousands of contributors → a true engineering masterpiece. Here's what I learned:…

Excited to release new repo: nanochat! (it's among the most unhinged I've written). Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,…

You're not depressed, you just lost your quest.

🔥 Free Google Colab notebooks to implement every Machine Learning algorithm from scratch. Link in comment

how i got here: > i used to be and still tend towards having an obsessive/addictive personality > put many years of my life into video games > it was only 2 years ago i started to turn that around because i got other interests and started really looking forward to the future >…

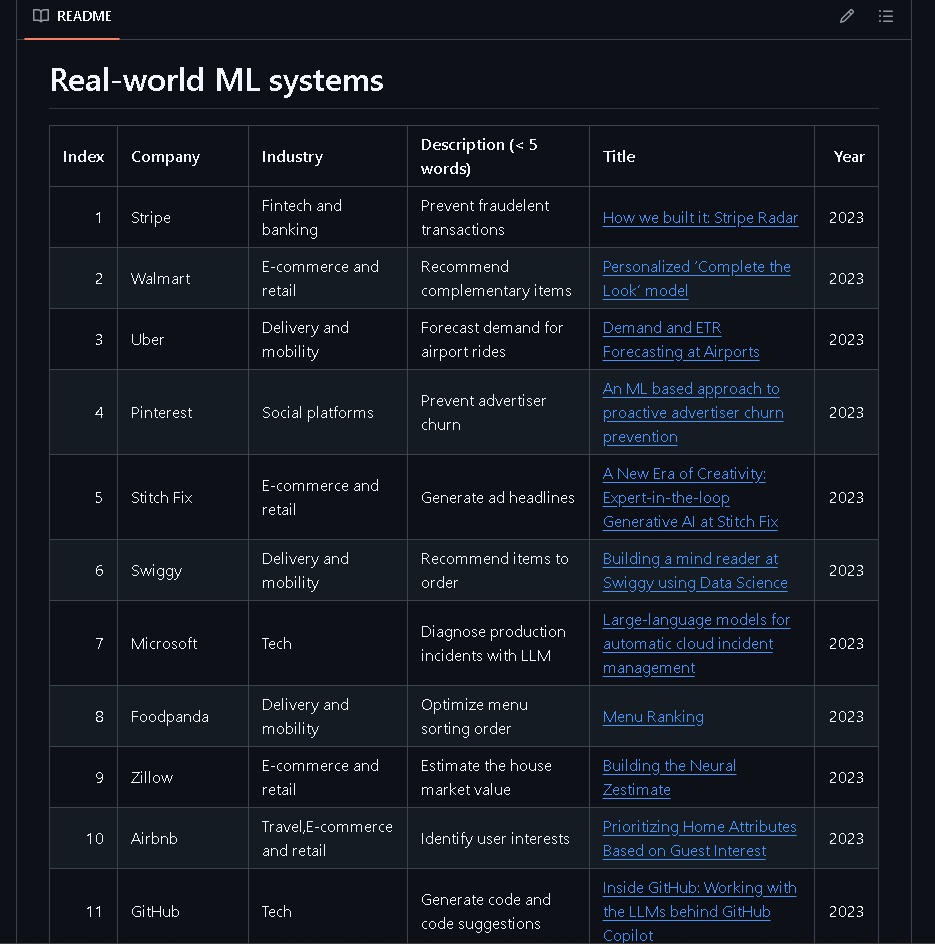

found a repo that has a massive collection of Machine Learning system design case studies used in the real world, from Stripe, Spotify, Netflix, Meta, GitHub, Twitter/X, and much more link in replies

Copy-pasting PyTorch code is fast — using an AI coding model is even faster — but both skip the learning. That's why I asked my students to write by hand ✍️. 🔽 Download: byhand.ai/pytorch After the exercise, my students can understand what every line really does and…
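In that spirit, here is what a single `torch.nn.Linear` layer followed by a ReLU computes, written out in plain Python with no tensors. `nn.Linear` stores its weight with shape (out_features, in_features) and computes `y = x @ W.T + b`; the function names and numbers below are hypothetical, and this is not the byhand.ai exercise itself.

```python
def linear(x, weight, bias):
    """Same math as torch.nn.Linear: y = x @ weight.T + bias.

    x:      batch of input rows, each of length in_features
    weight: out_features rows, each of length in_features
    bias:   length out_features
    """
    return [
        # One output per neuron: dot product of the input row with that neuron's weights, plus its bias.
        [sum(xi * wi for xi, wi in zip(row, w)) + b for w, b in zip(weight, bias)]
        for row in x
    ]


def relu(x):
    """Elementwise max(0, v)."""
    return [[max(0.0, v) for v in row] for row in x]


x = [[1.0, 2.0]]                                  # batch of 1, in_features = 2
weight = [[1.0, -1.0], [0.5, 0.5], [2.0, 0.0]]    # out_features = 3
bias = [0.0, 1.0, -3.0]

h = linear(x, weight, bias)
print(h)        # [[-1.0, 2.5, -1.0]]
print(relu(h))  # [[0.0, 2.5, 0.0]]
```

Working one row through by hand (1·1 + 2·(-1) + 0 = -1 for the first neuron) is exactly the kind of line-by-line understanding the exercise is after.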

70 Python Projects with Source code for Developers Step 1: Beginner Foundations → Hello World Web App → Calculator (CLI) → To-Do List CLI → Number Guessing Game → Countdown Timer → Dice Roll Simulator → Coin Flip Simulator → Password Generator → Palindrome Checker →…

